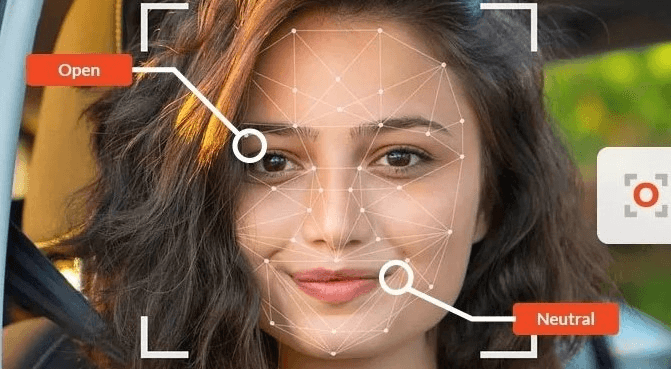

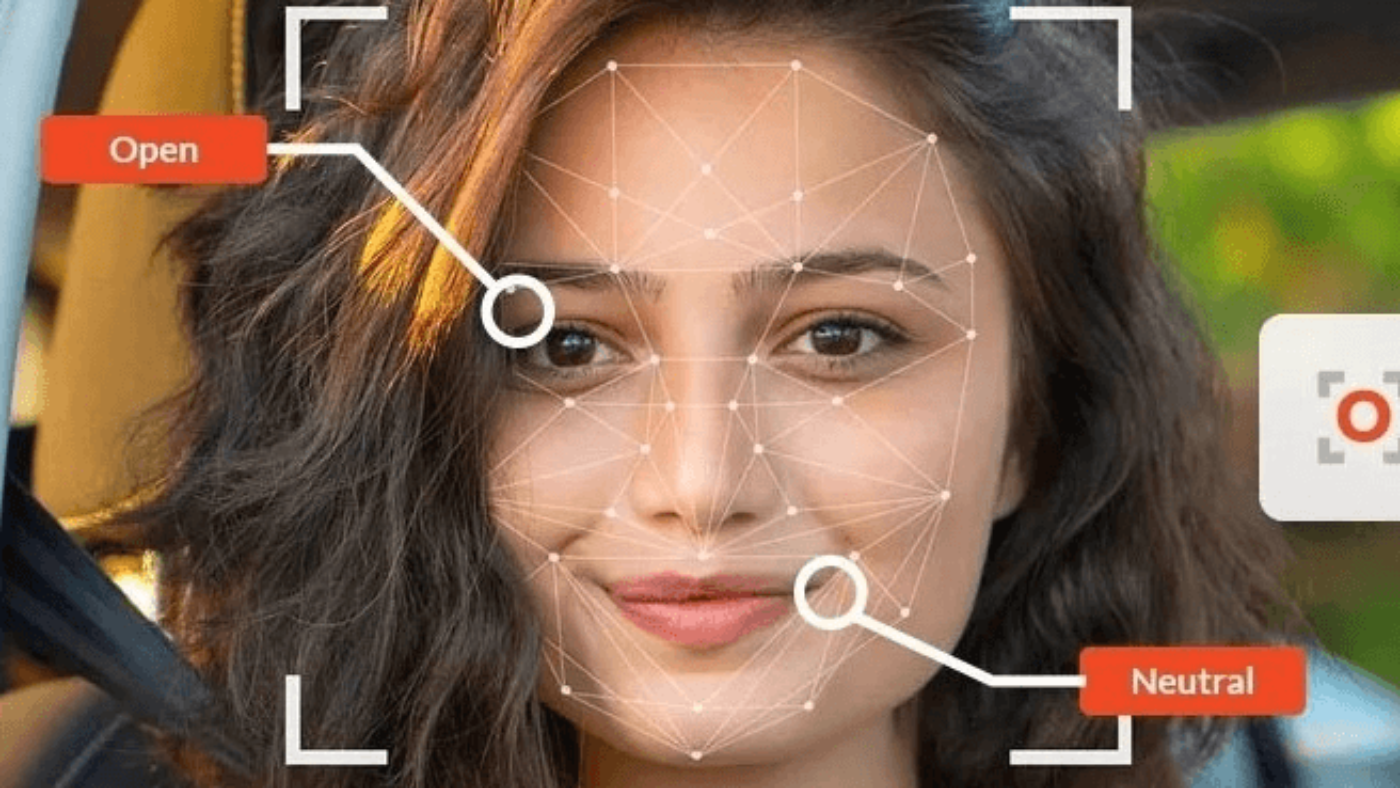

Facial expression analysis decodes emotions from facial cues. It typically starts with face detection, then uses a face model to identify expressions, including subtle ones. This article gives a brief overview of the theory, methods, characteristics, and challenges of the field. Understanding facial expressions offers valuable insight into human behavior and communication, which makes the area of keen interest to researchers worldwide; related work such as speech emotion recognition pursues the same goal through another modality. From the detection, extraction, and representation of facial features to appearance-based methods, basic emotions, subtle expressions, and deep learning, each section covers one part of emotion recognition. The article is meant as a starting point for readers who want to follow the topic at international conferences or pursue their own studies, for example by searching the literature on Google Scholar.

Understanding Facial Expression Analysis

Emotion and Expression

Emotions are complex psychological states that people often express through the face. Basic emotions such as happiness, sadness, surprise, anger, fear, and disgust are conveyed through facial actions: the way we smile, frown, or raise our eyebrows. These expressions play a crucial role in communicating feelings and social signals to others. Automated analysis starts with face detection and then examines these actions; multimodal emotion recognition systems combine the result with other signals, such as physiological arousal or EEG measurements. Cultures may also interpret the same expression differently. A smile, for instance, is widely read as happiness, but in some cultures it can also signal nervousness or embarrassment. This is why cross-cultural studies matter for understanding how participants perceive and interpret facial expressions.

Researchers measure expressions with action units (AUs) defined by the Facial Action Coding System (FACS). Action units break a facial expression into smaller components for analysis. For example, when someone is happy, their cheeks rise (action unit 6) while the corners of their lips pull up (action unit 12). Analyzing action units with computer vision techniques, neural networks, and other machine learning algorithms helps identify subtle changes in expression that reveal underlying emotions. In multimodal studies, similar methods are applied to other kinds of data, such as EEG signals.
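
As a rough illustration of how action units can be mapped to emotions, the sketch below scores a set of detected action units against a small prototype table. Only the AU6 + AU12 pairing for happiness comes from the text above; the other table entries, the `EMOTION_PROTOTYPES` name, and the overlap score are assumptions made for this example, not a validated coding scheme.

```python
# Hypothetical prototype table: each emotion maps to a set of FACS action units.
# Only happiness (AU6 + AU12) is taken from the article; the rest is illustrative.
EMOTION_PROTOTYPES = {
    "happiness": {6, 12},
    "sadness": {1, 4, 15},
    "surprise": {1, 2, 5, 26},
}


def match_emotions(active_aus):
    """Return (emotion, overlap score) pairs, best match first."""
    active = set(active_aus)
    scores = []
    for emotion, prototype in EMOTION_PROTOTYPES.items():
        # Jaccard overlap between the detected AUs and the prototype set.
        overlap = len(active & prototype) / len(active | prototype)
        scores.append((emotion, overlap))
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


best_emotion, best_score = match_emotions([6, 12])[0]
print(best_emotion, best_score)
```

A real system would work with AU intensities estimated from video rather than a fixed on/off set, but the lookup idea is the same.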

Techniques and Measures

Deep learning and computer vision algorithms have significantly improved the accuracy of facial expression recognition systems and enabled real-time detection and analysis, for example in software tools such as FaceReader. These techniques analyze images of subjects to detect and interpret their facial expressions. The choice of technique depends on the goal of the analysis: identifying basic emotions like happiness or sadness, or probing more nuanced emotional states. In either case, facial expression databases and reliable detection methods are crucial.

For instance:

Computer vision uses cameras to capture images or video of faces and applies algorithms designed for facial expression detection and recognition. These algorithms analyze the captured data to identify patterns related to various emotions, often by detecting specific facial actions and comparing them against facial expression databases.

Machine learning trains models on large datasets of labeled facial expressions so that they can recognize similar patterns in new images. This computer-based process underlies most detection and recognition tasks.
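
To make the train-then-predict idea concrete, here is a minimal sketch using a nearest-centroid classifier, chosen only because it fits in a few lines; the article does not prescribe a particular model. The two features (mouth-corner lift and brow lowering) and the toy values are made up for illustration.

```python
import math


def train_centroids(samples):
    """samples: list of (feature_vector, label). Returns label -> mean vector."""
    sums, counts = {}, {}
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Toy, made-up features: (mouth-corner lift, brow lowering), both in [0, 1].
training = [
    ([0.9, 0.1], "happy"), ([0.8, 0.2], "happy"),
    ([0.1, 0.8], "angry"), ([0.2, 0.9], "angry"),
]
model = train_centroids(training)
print(predict(model, [0.85, 0.15]))
```

Production systems replace the hand-picked features with learned ones and use far larger labeled datasets, but the split into a training step and a prediction step stays the same.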

These technologies have advanced not only research but also applications, such as emotion-aware human-computer interfaces for personalized learning experiences and mental health monitoring tools that look for signs of depression in changes of facial expression over time. Both are active topics in the computer vision literature.

Technology in Analysis

Deep learning algorithms have transformed facial expression analysis. They can now detect even subtle micro-expressions, brief involuntary flashes that reveal concealed emotions. This is particularly valuable in high-stakes situations such as security screenings or law enforcement interviews, where automated analysis of images can provide additional insight. Deep learning improves recognition accuracy because the system discovers intricate features in the raw data of a dataset automatically, without explicitly programmed rules.

The Science of Facial Expressions

Facial Nerve Function

The facial nerve controls the facial muscles and so makes the full range of facial expressions possible. Damage to the facial nerve can make it difficult to produce facial actions and express emotions. When someone smiles spontaneously and genuinely, for instance, the brain sends signals through the facial nerve to activate specific muscle groups, creating an authentic expression. Understanding these neural mechanisms helps in analyzing expressions effectively, which is why they matter to computer vision and image processing applications that detect and analyze faces.

This understanding also supports technologies that accurately detect and interpret micro-expressions. Such technologies are particularly useful in fields like law enforcement and psychology, where subtle changes in facial expression play a significant role.

Emotion Classification

Emotion classification assigns facial expressions to emotional states such as happiness, sadness, anger, surprise, fear, and disgust. Face detection is the essential first step. Machine learning algorithms, including deep networks, are then trained on large amounts of data from diverse individuals to classify emotions from visual features extracted from the face. This process is known as facial expression detection or expression recognition. Accurate results depend on access to comprehensive databases of labeled expressions.

Accurate emotion classification is essential for applications such as human-computer interaction systems that adapt their responses to user emotions detected through webcams or other sensors, allowing more personalized and responsive interactions. In healthcare, it supports better assessment of patients’ well-being by analyzing their facial expressions during telehealth appointments.

Affect Program Theory

Affect program theory holds that certain facial expressions are hardwired in humans and triggered automatically by specific emotions. For example, people experiencing fear tend to widen their eyes and raise their eyebrows, a facial action linked with fear across cultures. Because the response is automatic, it can be picked up by facial expression detection, including deep learning techniques. The theory provides a framework for understanding the link between emotions and facial expressions across cultures, despite differences in individual experience or socialization. It is useful to researchers in fields such as psychology, sociology, and anthropology.

Analyzing Emotions Through Expressions

Behavioral Metrics

Behavioral metrics quantify the specific facial movements associated with different emotions. Action units, defined by FACS coding, provide a standardized way of measuring these movements once a face has been detected. For example, a smile is linked to contraction of the zygomatic major muscle. Such metrics make it possible to measure and compare facial expressions objectively across individuals and cultures.

With action units, researchers can precisely measure subtle expressions such as micro-smiles or slight frowns that casual observation might miss. This allows a more detailed understanding of emotional responses in different situations, typically by analyzing facial actions recorded in a face database. Behavioral metrics also play a crucial role in psychology, market research, and human-computer interaction, where they provide insight into human emotional states and reactions.

Physiological responses such as changes in heart rate or skin conductance can complement facial expression analysis. Combined with face detection and expression analysis, they offer a more comprehensive picture of emotional states.

For instance, someone may try to conceal their emotions by controlling their facial expressions, yet physiological measures can still reveal the underlying emotional arousal and its intensity. By combining both kinds of data, researchers gain deeper insight into an individual’s emotional experiences and reactions.

Combining behavioral metrics with physiological responses improves the accuracy and reliability of emotion recognition systems in domains such as healthcare (e.g., pain assessment), education (e.g., student engagement), and marketing (e.g., consumer sentiment analysis).

Automatic Facial Coding Technology

Face Recognition Models

Face detection and recognition models are the foundation of many facial expression analysis systems. They detect and track individual faces in images or video so that each face can be analyzed for expressions. Imagine a program that locates every face in a live video feed or still image, then compares each detected face against a database of known faces to identify the person. Accuracy at this stage is vital, because reliable recognition of facial actions, and therefore reliable emotion detection, depends on working from the right face region.

These models matter both for recognizing individuals and for understanding their emotions. When someone smiles, for instance, the system must first locate and identify the person’s face accurately before it can analyze the smile as an expression of happiness. Classical classifiers such as support vector machines (SVMs) are one option for the detection and analysis steps. Accurate face recognition ensures that the subsequent emotion analysis is attributed to the correct person.

The Facial Action Coding System (FACS) is then applied to the detected faces to identify and analyze the specific muscle movements linked to emotional expressions. The published literature on FACS gives researchers and developers a deeper understanding of the facial muscles involved in expressions such as joy, surprise, and anger, which they can use to improve their algorithms for automatic expression detection.

Deep Learning Techniques

Deep learning techniques, especially convolutional neural networks (CNNs), have significantly enhanced facial expression analysis. Applied to faces located by a face detector, CNNs automatically learn complex features from facial images, which improves the accuracy of emotion recognition.
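
The core operation a CNN layer applies can be sketched in plain Python. The example below slides a small kernel over a tiny grayscale "image" and produces a feature map. The kernel here is written by hand to respond to vertical edges; in a trained CNN such weights are learned from data. The image values and the `conv2d_valid` name are assumptions for illustration.

```python
def conv2d_valid(image, kernel):
    """Slide kernel over image (no padding, stride 1) and return the feature map.

    As in most deep learning libraries, this is cross-correlation:
    the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# A made-up 4x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-written vertical-edge kernel; a CNN would learn weights like these.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d_valid(image, kernel)
print(feature_map)
```

The feature map is large only where the kernel straddles the edge, which is the sense in which a convolutional layer "detects" a local pattern.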

Think of a CNN as a virtual artist who studies thousands of pictures of smiling people and learns what distinguishes one kind of smile from another, or from a neutral expression. This ability lets deep learning-based systems recognize emotions such as happiness or sadness as well as subtle variations within each emotion category.

Deep learning has achieved state-of-the-art results in facial expression analysis. It has enabled automatic detection systems in applications ranging from security surveillance cameras that flag suspicious behavior based on people’s expressions to assistive technologies that help individuals with autism spectrum disorder understand social cues through automated emotion recognition.

Challenges in Expression Analysis

Real-Time Recognition Issues

Real-time facial expression analysis, including face detection, is difficult because it demands rapid processing and low latency. Efficient algorithms and hardware acceleration are essential for real-time performance. Emotion-aware systems and interactive technologies, for instance, must overcome these issues to provide seamless user experiences.

Efficient algorithms are crucial for real-time face detection and expression analysis. They must process facial data quickly enough for the system to recognize and respond to expressions without noticeable delay. Hardware acceleration, for example on GPUs, further increases processing speed and helps reach real-time performance.
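
One common way to keep latency bounded when analysis is slower than the camera, which the article does not describe explicitly, is to skip stale frames and always analyze the newest one. The simulation below is a sketch of that policy under assumed timings; `simulate_frame_dropping` and its parameters are hypothetical names for this example.

```python
def simulate_frame_dropping(num_frames, frame_interval_ms, processing_ms):
    """Return indices of frames that get analyzed when stale frames are skipped.

    Frame i arrives at time i * frame_interval_ms. After finishing a frame,
    the analyzer jumps to the newest frame that has already arrived.
    """
    processed = []
    clock_ms = 0.0
    index = 0
    while index < num_frames:
        # Wait for the frame if the analyzer is ahead of the camera.
        clock_ms = max(clock_ms, index * frame_interval_ms)
        processed.append(index)
        clock_ms += processing_ms
        # Newest frame available now; always advance by at least one frame.
        newest = int(clock_ms // frame_interval_ms)
        index = max(index + 1, newest)
    return processed


# Analysis faster than the camera: every frame is processed.
print(simulate_frame_dropping(10, 33, 20))
# Analysis slower than the camera: intermediate frames are dropped.
print(simulate_frame_dropping(10, 10, 25))
```

Dropping frames trades temporal resolution for responsiveness, which may be a poor fit for micro-expression analysis, where very brief events matter.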

Robust solutions to real-time recognition and detection issues are paramount for applications such as interactive technologies and emotion-aware systems. By focusing on efficient algorithm design and hardware acceleration, developers can overcome these challenges in facial expression analysis.

Data Availability Constraints

The limited availability of labeled datasets is a significant hurdle when training accurate facial expression analysis models. To make models reliable and generalizable, large-scale datasets covering diverse populations are needed. Overcoming data availability constraints is fundamental to building robust and unbiased systems.

The scarcity of labeled data impedes the development of accurate models. Comprehensive datasets let researchers train more reliable models that detect a wide range of expressions accurately.

Addressing these constraints requires concerted efforts to gather labeled datasets from demographic groups worldwide. The research literature, searchable through tools such as Google Scholar, can help researchers locate existing datasets. An inclusive approach ensures that facial expression analysis models are not biased towards specific populations and remain applicable across different groups.
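
A simple first check for dataset bias is to count how many samples fall into each combination of demographic group and emotion label. The sketch below flags combinations that fall under a chosen share of the dataset. The group names, the threshold, and the `underrepresented_cells` helper are assumptions for illustration.

```python
from collections import Counter


def underrepresented_cells(records, min_share=0.1):
    """records: list of (demographic_group, emotion_label) tuples.

    Returns (group, label, share) for every combination whose share of the
    dataset is below min_share, including combinations that never occur.
    """
    counts = Counter(records)
    groups = sorted({group for group, _ in records})
    labels = sorted({label for _, label in records})
    total = len(records)
    flagged = []
    for group in groups:
        for label in labels:
            share = counts[(group, label)] / total
            if share < min_share:
                flagged.append((group, label, share))
    return flagged


# Made-up dataset: group B is barely represented and has no "sad" samples.
records = [("A", "happy")] * 6 + [("A", "sad")] * 3 + [("B", "happy")] * 1
for group, label, share in underrepresented_cells(records, min_share=0.2):
    print(group, label, share)
```

Balanced counts do not guarantee an unbiased model, but gaps found this way show where additional data collection is most needed.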

Application Fields and Opportunities

Healthcare Emotional States

Facial expression analysis has applications in healthcare, especially in evaluating patients’ emotional states. By analyzing facial expressions, healthcare professionals can support the diagnosis and monitoring of mental health conditions. A patient’s smile or frown, for instance, can indicate their emotional well-being and give practitioners valuable information. Objective tools for understanding patients’ emotional states can significantly benefit healthcare professionals.

Moreover, handling varying lighting conditions is an opportunity to develop robust systems that assess emotional states accurately. This matters because lighting differs widely across healthcare settings such as hospitals and clinics.

Multimodal Recognition

Another promising area is multimodal recognition. It combines facial expressions with other modalities, such as voice or body language, to improve the accuracy and robustness of emotion recognition systems. For example, when a person speaks softly while showing a sad facial expression and a closed body posture, it is highly likely that they are upset or unhappy.

Integrating multiple modalities enables a more comprehensive analysis of human emotions by capturing subtle cues from different sources simultaneously. This approach provides a deeper understanding of individuals’ emotions and improves the overall effectiveness of emotion recognition systems.
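
One straightforward way to combine modalities is late fusion: each modality produces its own emotion probabilities, and the system takes a weighted average. The sketch below applies this to the soft voice, sad face, and closed posture example. The probabilities and weights are made up, and late fusion is only one of several possible fusion strategies.

```python
def late_fusion(modality_probs, weights):
    """Weighted average of per-modality emotion probabilities.

    modality_probs: modality name -> {emotion: probability}
    weights: modality name -> non-negative weight (need not sum to 1)
    """
    total_weight = sum(weights[name] for name in modality_probs)
    fused = {}
    for name, probs in modality_probs.items():
        for emotion, p in probs.items():
            fused[emotion] = fused.get(emotion, 0.0) + weights[name] * p / total_weight
    return fused


# Made-up per-modality scores for the example in the text.
scores = {
    "face": {"sad": 0.7, "neutral": 0.3},
    "voice": {"sad": 0.6, "neutral": 0.4},
    "posture": {"sad": 0.5, "neutral": 0.5},
}
weights = {"face": 0.5, "voice": 0.3, "posture": 0.2}
fused = late_fusion(scores, weights)
top_emotion = max(fused, key=fused.get)
print(top_emotion, round(fused[top_emotion], 2))
```

The weights express how much each modality is trusted; in practice they would be tuned on validation data rather than set by hand.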

Spontaneous vs Posed Expressions

Genuine vs Posed Databases

Differentiating between genuine and posed facial expressions is crucial for accurate facial expression analysis. Databases that contain both genuine and posed expressions are essential for training and evaluating emotion recognition models. For instance, a database of authentic smiles alongside forced or fake ones helps in developing algorithms that can tell the two apart. This differentiation improves the precision of systems designed to interpret human emotions.

Recognizing whether an expression is authentic improves the reliability of emotion analysis systems. With databases that cover a wide range of spontaneous emotions, researchers can build more robust models that pick up subtle nuances in human expression. For example, by analyzing databases that contain genuine and feigned surprise, developers can refine their algorithms to detect minute differences in eye movement or micro-expressions associated with authentic emotional responses.

Understanding the differences between spontaneous and posed expressions yields more comprehensive training data for facial expression analysis models. This improves accuracy when the models are deployed in applications such as mental health assessment tools, customer sentiment analysis in business settings, or security measures such as identifying suspicious behavior in surveillance footage.

Advanced Recognition Systems

Muscle Movement Analysis

Muscle movement analysis is central to understanding which emotions a face expresses. By identifying and quantifying specific muscle movements, known as action units, we gain insight into the underlying emotions. A smile, for example, can be analyzed by detecting the activation of particular facial muscles. This detailed analysis contributes to a fuller understanding of both spontaneous and posed expressions.

Action unit detection is crucial for recognizing emotional states from subtle changes in facial muscle movement. Action units help categorize different types of smiles or frowns, which leads to more accurate emotion recognition systems. During a genuine smile, for instance, distinct muscle movements around the eyes contribute to its authenticity.
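
The eye-region cue can be turned into a simple rule of thumb: a smile with both the lip corner puller (AU12) and the cheek raiser (AU6) active is treated as more likely genuine than one with AU12 alone. The sketch below assumes AU intensities on a 0 to 5 scale from some upstream detector; the threshold, the labels, and the `classify_smile` name are assumptions, and this heuristic is far from a reliable authenticity test on its own.

```python
def classify_smile(au_intensities, threshold=1.0):
    """au_intensities: FACS action unit number -> intensity (assumed 0-5 scale)."""
    # AU12: lip corner puller (mouth region).
    lip_corners = au_intensities.get(12, 0.0) >= threshold
    # AU6: cheek raiser (muscles around the eyes).
    cheek_raise = au_intensities.get(6, 0.0) >= threshold
    if lip_corners and cheek_raise:
        return "likely genuine"
    if lip_corners:
        return "possibly posed"
    return "no smile"


print(classify_smile({6: 2.5, 12: 3.0}))
print(classify_smile({12: 3.0}))
```

Real systems also look at timing, such as how quickly the smile appears and fades, which a single-frame rule like this ignores.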

Muscle movement analysis also improves human-computer interaction systems by enabling them to interpret human emotions accurately. The technology has been widely discussed and showcased at major conferences such as the IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

Texture Feature Techniques

In addition to muscle movements, advanced facial expression analysis uses texture feature techniques. These extract visual patterns from facial images that relate to skin texture, wrinkles, and other attributes of an individual’s face.

Texture features, along with other visual features, are an essential component of emotion recognition models because they capture fine-grained details of facial expressions. For instance, when someone furrows their brow out of concern or worry, the subtle textural changes can be detected by algorithms designed for texture feature extraction. This lets researchers studying facial expressions and emotions analyze and quantify those changes.
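
The article does not name a specific texture descriptor. Local binary patterns (LBP) are one widely used choice, so the sketch below uses them as an example: each pixel is encoded by comparing it with its eight neighbors, and the codes are collected into a histogram that serves as the texture feature. The function names and the neighbor ordering are choices made for this example.

```python
def lbp_code(patch):
    """Local binary pattern code for the center pixel of a 3x3 patch."""
    center = patch[1][1]
    # Neighbors in clockwise order, starting at the top-left corner.
    neighbors = [
        patch[0][0], patch[0][1], patch[0][2],
        patch[1][2], patch[2][2], patch[2][1],
        patch[2][0], patch[1][0],
    ]
    code = 0
    for bit, value in enumerate(neighbors):
        # Set the bit when the neighbor is at least as bright as the center.
        if value >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """256-bin histogram of LBP codes over all interior pixels of a grayscale image."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            patch = [row[c - 1:c + 2] for row in image[r - 1:r + 2]]
            hist[lbp_code(patch)] += 1
    return hist


flat = [[5, 5, 5], [5, 5, 5], [5, 5, 5]]
print(lbp_code(flat))
```

Histograms are usually computed per face region (for example around the brow or mouth) and concatenated, so that the feature keeps some information about where on the face a texture change occurred.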

Integrating texture features improves the accuracy of emotion recognition models by adding information that helps separate spontaneous from posed expressions. Combining muscle movement analysis with texture feature techniques results in more robust computer-based systems for interpreting human emotions.

Future Directions in Expression Analysis

Evolution of Emotion Recognition

Emotion recognition has come a long way, evolving from manual coding to automated computer-based methods. Initially, researchers coded facial expressions by hand, analyzing images or videos frame by frame. With advances in technology, automated systems can now recognize emotions accurately and efficiently. They use sophisticated algorithms and machine learning techniques to analyze facial expressions and interpret the underlying emotions.

Technological progress has played a pivotal role in this evolution. More powerful computing, large datasets for training machine learning models, and advances in artificial intelligence (AI) algorithms have made emotion recognition systems more accurate and reliable over time. Understanding this history helps us appreciate the significant progress the field has made.

Multimodal System Developments

The future of facial expression analysis lies in multimodal systems that integrate several technologies into a more comprehensive approach to emotion recognition. These systems combine computer vision, machine learning, physiological signals, audio processing, and other modalities to gain a deeper understanding of human emotions. By drawing on multiple sources of data, they can offer more holistic insight into an individual’s emotional state.

Integrating these modalities holds promise for future advances in emotion recognition. For example, combining facial expression analysis with physiological signals such as heart rate variability can provide a more nuanced understanding of an individual’s emotional responses. Ongoing developments in multimodal systems are expected to lead to more accurate and robust emotion recognition.
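One common way to combine modalities is late fusion, where each modality produces its own emotion scores and the scores are then merged. The sketch below is a minimal illustration under stated assumptions: the modality names, weights, and probability values are hypothetical, and a weighted average is only one of several possible fusion strategies.

```python
from typing import Dict

# Hypothetical label set for illustration only.
EMOTIONS = ("happiness", "sadness", "anger", "surprise")


def late_fusion(
    scores_by_modality: Dict[str, Dict[str, float]],
    weights: Dict[str, float],
) -> Dict[str, float]:
    """Return the weighted average of per-modality emotion probabilities."""
    total_weight = sum(weights[name] for name in scores_by_modality)
    if total_weight <= 0:
        raise ValueError("modality weights must sum to a positive value")
    return {
        emotion: sum(
            weights[name] * scores[emotion]
            for name, scores in scores_by_modality.items()
        ) / total_weight
        for emotion in EMOTIONS
    }


def top_emotion(fused: Dict[str, float]) -> str:
    """Return the label with the highest fused score."""
    return max(fused, key=fused.get)


# Made-up outputs from three separate (hypothetical) classifiers.
example_scores = {
    "face": {"happiness": 0.5, "sadness": 0.1, "anger": 0.1, "surprise": 0.3},
    "audio": {"happiness": 0.2, "sadness": 0.1, "anger": 0.1, "surprise": 0.6},
    "heart_rate": {"happiness": 0.3, "sadness": 0.2, "anger": 0.2, "surprise": 0.3},
}
# Assumed trust in each modality; in practice these would be tuned on data.
example_weights = {"face": 0.5, "audio": 0.3, "heart_rate": 0.2}

fused_scores = late_fusion(example_scores, example_weights)
print(top_emotion(fused_scores))
```

In this made-up example the face classifier alone favors happiness, but the fused scores favor surprise once the audio evidence is taken into account, which is the kind of correction a multimodal system is meant to provide.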

Conclusion

You’ve now delved into the intricate world of facial expression analysis, uncovering its scientific foundations, technological advancements, and real-world applications. Understanding the nuances of spontaneous versus posed expressions and the challenges in accurate recognition has shed light on the complexity of this field. As we look to the future, the potential for advanced recognition systems to reshape various industries is both exciting and promising.

So, what’s next? Dive deeper into this fascinating realm by exploring the latest research on Google Scholar, engaging with experts in the field, or considering how these insights could be applied in your own work. The field of facial expression analysis is constantly evolving, and your curiosity and involvement can contribute to its continued growth and innovation.

Frequently Asked Questions

What is facial expression analysis?

Facial expression analysis is the study of facial movements to infer emotions, intentions, and psychological states. It involves using technology to interpret micro-expressions and subtle changes in the face. Automated versions of this technology typically rely on artificial intelligence (AI).

How does automatic facial coding technology work?

Automatic facial coding technology uses algorithms to analyze facial muscle movements and map them to specific emotions, making it a valuable tool for researchers. By detecting even fleeting expressions, it provides insights into a person’s emotional state without relying on self-reporting.
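The mapping step can be sketched as a simple lookup. The example below assumes the muscle movements have already been detected and encoded as Facial Action Coding System (FACS) action unit numbers; the prototype combinations are commonly cited simplifications, and the function name and overlap threshold are hypothetical choices for illustration, not the method of any particular product.

```python
from typing import Dict, FrozenSet, Set

# Simplified, commonly cited action unit (AU) prototypes; real systems
# use richer rules or learned classifiers.
PROTOTYPES: Dict[str, FrozenSet[int]] = {
    "happiness": frozenset({6, 12}),       # cheek raiser, lip corner puller
    "sadness": frozenset({1, 4, 15}),      # inner brow raiser, brow lowerer, lip corner depressor
    "surprise": frozenset({1, 2, 5, 26}),  # brow raisers, upper lid raiser, jaw drop
    "anger": frozenset({4, 5, 7, 23}),     # brow lowerer, lid raiser, lid tightener, lip tightener
}


def match_emotion(active_aus: Set[int], min_overlap: float = 0.75) -> str:
    """Return the prototype best covered by the active AUs, or "neutral"."""
    best_label, best_score = "neutral", 0.0
    for label, prototype in PROTOTYPES.items():
        # Fraction of the prototype's AUs that are currently active.
        score = len(prototype & active_aus) / len(prototype)
        if score > best_score:
            best_label, best_score = label, score
    return best_label if best_score >= min_overlap else "neutral"


print(match_emotion({6, 12}))  # both happiness AUs are active
print(match_emotion({1}))      # too little evidence for any prototype
```

The threshold keeps a single stray movement from being labeled as a full emotion; a production system would also weigh AU intensity and timing, which this sketch ignores.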

What are some challenges in expression analysis?

Challenges in expression analysis include accounting for cultural differences in expressions, dealing with variations due to age or gender, and ensuring accuracy when distinguishing between spontaneous and posed expressions.

In what fields can facial expression analysis be applied?

Facial expression analysis has applications in various fields such as market research, psychology, human-computer interaction, healthcare (e.g., pain assessment), security (e.g., lie detection), and entertainment (e.g., gaming).

What are the future directions in expression analysis?

Future directions involve enhancing real-time emotion recognition systems to improve user experiences in domains such as virtual reality environments, personalized advertising based on emotional responses, and mental health diagnostics.
