How does the Viola-Jones Framework contribute to live face detection in AR?

What are some JavaScript and WebGL libraries used for live face detection in AR?

How can multiple faces be detected simultaneously in live AR environments?

What technologies are involved “under the hood” of AR face detection?

How can engaging AR facial filters be created using live face detection technology?

Did you know that live face detection in augmented reality (AR), powered by WebGL and libraries such as jeelizfacefilter, is shaping the future of interactive experiences? By accessing the camera and analyzing each frame in real time, AR applications can detect and track faces as they move and build immersive user interactions on that information. This ability to identify and track human faces in real time has become a game-changer across various industries. With the jeelizfacefilter library, for example, a web application can detect a face in the camera feed using WebGL and then manipulate or augment the image of the user's face on a canvas in real time. Real-time tracking has significantly enhanced the realism and interactivity of AR filters and effects, and users can now apply these effects to their own camera images as they happen. The applications range from gaming and marketing to entertainment and communication. Live face detection with jeelizfacefilter enables interactive image filters, virtual makeup trials, character animation, virtual product try-ons, and shareable augmented selfies, all of which contribute to heightened user engagement.

The Viola-Jones Framework

Object detection involves training machine learning models to recognize specific objects or features within an image or video. For faces, the Viola-Jones framework is the classic approach. It combines simple Haar-like features with a cascade of classifiers. Modern libraries such as jeelizfacefilter instead use deep learning, in the form of convolutional neural networks (CNNs) running on the GPU through WebGL, to identify and track faces in real time. With jeelizfacefilter, for instance, a live camera feed is analyzed frame by frame. Faces are detected and tracked as they move within the frame, and the augmented image is drawn onto a canvas.

Facial Detection Intuition

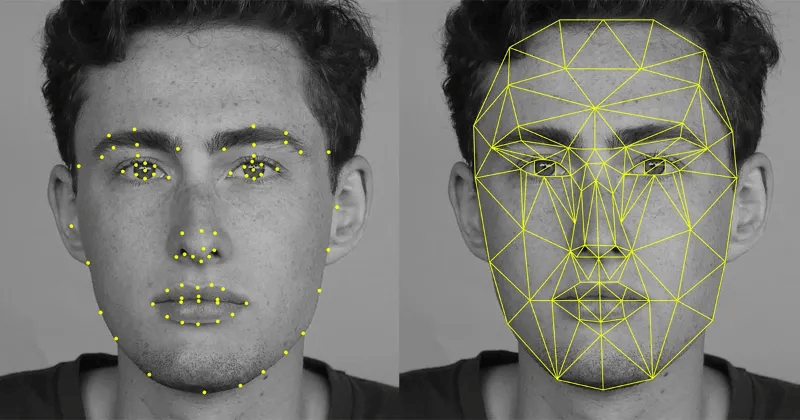

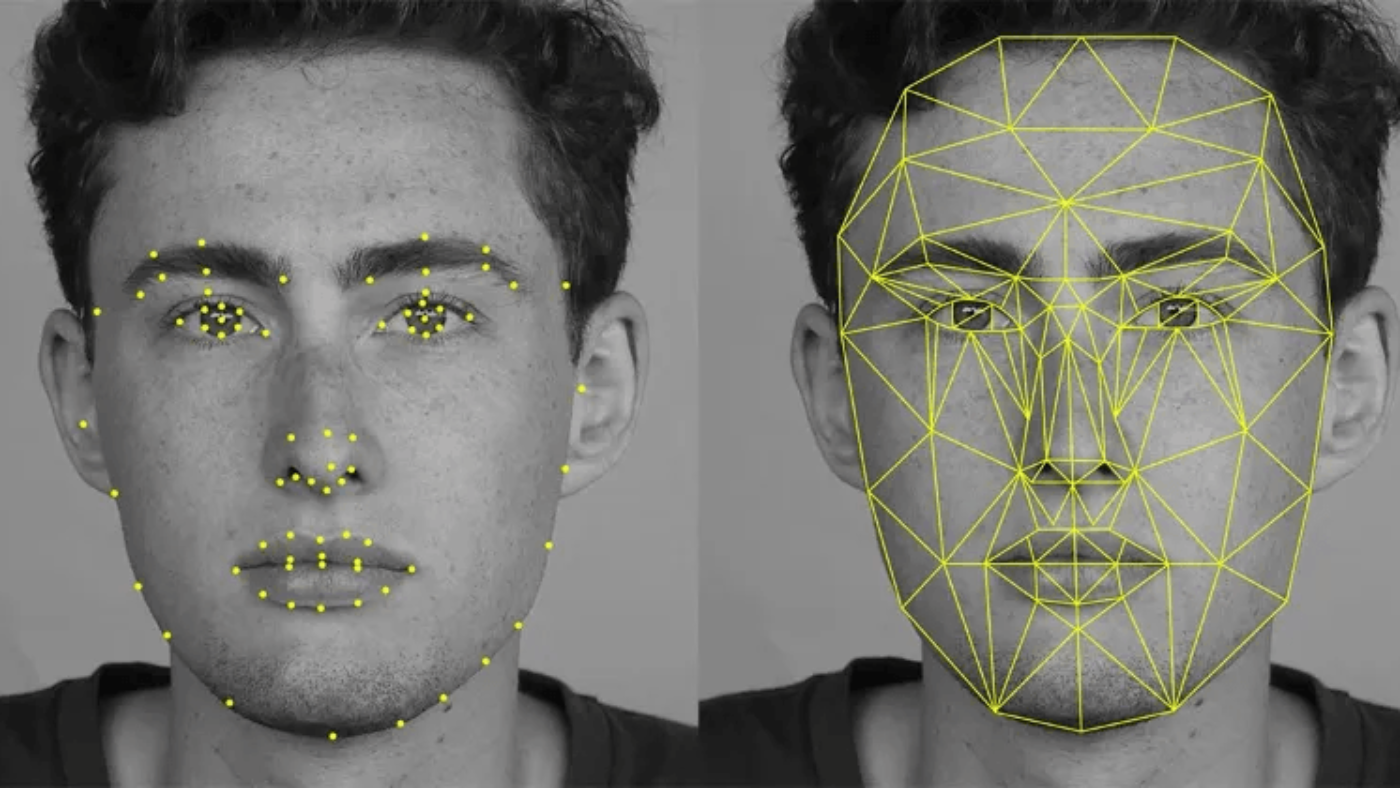

Facial detection focuses on identifying key facial landmarks such as the eyes, nose, and mouth. Algorithms analyze facial geometry and extract the distinctive features that characterize a face. Face filters build on this step, and the jeelizfacefilter library makes it easy to apply them to an image or canvas. For example, a system built on jeelizfacefilter can detect individual faces under varying lighting conditions and viewing angles. It does this by recognizing distinctive patterns in the arrangement of facial features. The detected face, with its filter applied, can then be displayed on a canvas.

Traditional image processing methods require hand-designed rules for feature extraction and analysis. Learning-based detectors such as the one in the jeelizfacefilter library instead learn from large amounts of training data. Because that training covers diverse examples and scenarios, live face detection in AR applications becomes more accurate and reliable. The library packages this model for real-time face detection and tracking, so developers can add advanced image processing to their applications without implementing it themselves. It renders through the canvas element, which lets it integrate directly with web-based AR applications and provide a smooth, immersive user experience.

Convolutional neural networks (CNNs) specialize in analyzing visual data such as photographs and videos. The jeelizfacefilter library runs its CNN through WebGL, so a live face detection system built on it can scan many frames per second from a video stream while maintaining precision. It uses the canvas element to display the results efficiently. This speed is essential for real-time applications, where face filters must respond quickly for the experience to feel seamless.
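The sketch below shows the operation a convolutional layer repeats across every frame. A small kernel slides over a single-channel image, the products are summed, and a ReLU is applied. This is a plain-TypeScript illustration of the technique only. It is not how jeelizfacefilter implements its network, which runs on the GPU through WebGL. The function name `conv2dRelu` and the example kernel are illustrative assumptions.

```typescript
// Minimal sketch of one CNN building block: a single-channel "valid"
// convolution followed by ReLU. Like most deep-learning frameworks, it
// computes cross-correlation (the kernel is not flipped).
type FeatureMap = number[][];

function conv2dRelu(input: FeatureMap, kernel: FeatureMap, bias = 0): FeatureMap {
  const kh = kernel.length;
  const kw = kernel[0].length;
  const outH = input.length - kh + 1; // "valid": no padding, so the output shrinks
  const outW = input[0].length - kw + 1;
  const output: FeatureMap = [];
  for (let y = 0; y < outH; y++) {
    const row: number[] = [];
    for (let x = 0; x < outW; x++) {
      let sum = bias;
      for (let ky = 0; ky < kh; ky++) {
        for (let kx = 0; kx < kw; kx++) {
          sum += input[y + ky][x + kx] * kernel[ky][kx];
        }
      }
      row.push(Math.max(0, sum)); // ReLU keeps only positive responses
    }
    output.push(row);
  }
  return output;
}

// Example: a 2x2 kernel that responds to dark-to-bright vertical edges.
const patch: FeatureMap = [
  [0, 0, 1, 1],
  [0, 0, 1, 1],
  [0, 0, 1, 1],
];
const edgeKernel: FeatureMap = [
  [-1, 1],
  [-1, 1],
];
console.log(conv2dRelu(patch, edgeKernel)); // → [[0, 2, 0], [0, 2, 0]]
```

A real network stacks many such layers with learned kernels and multiple channels. The GPU version performs the same arithmetic in parallel.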

Furthermore, the Viola-Jones framework has been widely used for efficient face detection. It rapidly scans images with detection windows at multiple scales, which makes it a fast and powerful tool.
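The multi-scale scan can be sketched as a loop that enumerates square detection windows. It starts from a small base window, grows the window by a constant scale factor, and slides it across the frame with a step proportional to its size. The defaults (a 24-pixel base window and a 1.25 scale factor) follow values commonly associated with Viola-Jones. The step fraction and the `scanWindows` helper are illustrative assumptions. Each window would then be handed to the cascade classifier.

```typescript
// Sketch of Viola-Jones style multi-scale scanning. Rather than resizing the
// image, the detection window itself grows by `scaleFactor` at each level.
interface ScanWindow {
  x: number;
  y: number;
  size: number; // windows are square
}

function scanWindows(
  width: number,
  height: number,
  baseSize = 24,
  scaleFactor = 1.25,
  stepFraction = 0.1 // step is ~10% of the window size (assumed value)
): ScanWindow[] {
  const result: ScanWindow[] = [];
  const limit = Math.min(width, height);
  for (let scale = 1; baseSize * scale <= limit; scale *= scaleFactor) {
    const size = Math.floor(baseSize * scale);
    const step = Math.max(1, Math.floor(size * stepFraction));
    for (let y = 0; y + size <= height; y += step) {
      for (let x = 0; x + size <= width; x += step) {
        result.push({ x, y, size });
      }
    }
  }
  return result;
}

const wins = scanWindows(48, 48);
console.log(wins.length); // 235 candidate windows for a 48x48 frame
```

Even a tiny frame yields hundreds of windows. This is why the cascade's early rejection and the integral image's constant-time sums matter for speed.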

Deep learning algorithms, in turn, let a system discern intricate patterns across the successive layers of a neural network. This is the approach the jeelizfacefilter library uses to detect faces before its face filters are applied.

Real-time tracking keeps the face filters and virtual elements supplied by the library aligned with the user's movements.
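Frame-to-frame tracking often smooths the raw per-frame estimates so that attached effects do not jitter. One minimal approach is an exponential moving average. This sketch assumes the detector reports a face position and scale on every frame. `FacePose` and `PoseSmoother` are hypothetical names. The smoothing factor `alpha` trades responsiveness (values near 1) against stability (values near 0).

```typescript
// Exponential moving average over per-frame face estimates (hypothetical
// shape: centre x/y and scale as reported by some face tracker).
interface FacePose {
  x: number;
  y: number;
  scale: number;
}

class PoseSmoother {
  private readonly alpha: number;
  private state: FacePose | null = null;

  constructor(alpha: number) {
    if (!(alpha > 0 && alpha <= 1)) {
      throw new RangeError("alpha must be in (0, 1]");
    }
    this.alpha = alpha;
  }

  // Blend the new measurement into the running estimate and return a copy.
  update(measured: FacePose): FacePose {
    const a = this.alpha;
    const prev = this.state;
    const next: FacePose =
      prev === null
        ? { x: measured.x, y: measured.y, scale: measured.scale }
        : {
            x: a * measured.x + (1 - a) * prev.x,
            y: a * measured.y + (1 - a) * prev.y,
            scale: a * measured.scale + (1 - a) * prev.scale,
          };
    this.state = next;
    return { x: next.x, y: next.y, scale: next.scale };
  }

  // Call when the face is lost so the next detection starts fresh.
  reset(): void {
    this.state = null;
  }
}

const smoother = new PoseSmoother(0.5);
smoother.update({ x: 0, y: 0, scale: 1 });
console.log(smoother.update({ x: 10, y: -4, scale: 2 })); // { x: 5, y: -2, scale: 1.5 }
```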

By bringing these techniques into augmented reality environments, face detection libraries have greatly improved their accuracy and responsiveness when detecting faces in real time.

ARKit and Face Tracking

Anatomy of an AR Face

Understanding the anatomy of an AR face is crucial for implementing live face detection in AR, especially when using a library. Facial regions such as the eyes, eyebrows, and lips each play a significant role in applying face filters, and each region can be targeted with specific AR effects or filters. For instance, tracking the eyes makes it possible to place virtual sunglasses that move realistically with the user's head.
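Placing the sunglasses reduces to a little geometry on the two eye positions. The midpoint gives the anchor, the distance between the eyes gives the scale, and the angle of the line joining them gives the head roll. The sketch assumes the tracker supplies eye centers in canvas pixel coordinates, with y pointing down. `placeSunglasses` and the width multiplier of 2.0 are hypothetical and would be tuned per asset.

```typescript
// Derive a 2D overlay transform for glasses from two eye centers given in
// canvas pixels (origin top-left, y pointing down). Hypothetical helper.
interface EyePoint {
  x: number;
  y: number;
}

interface OverlayTransform {
  centerX: number;
  centerY: number;
  width: number;       // drawn width of the glasses image, in pixels
  rollRadians: number; // rotation to apply around the center
}

function placeSunglasses(
  leftEye: EyePoint, // the eye that appears on the left of the image
  rightEye: EyePoint,
  widthPerEyeDistance = 2.0 // assumed ratio of glasses width to eye distance
): OverlayTransform {
  const dx = rightEye.x - leftEye.x;
  const dy = rightEye.y - leftEye.y;
  return {
    centerX: (leftEye.x + rightEye.x) / 2,
    centerY: (leftEye.y + rightEye.y) / 2,
    width: Math.sqrt(dx * dx + dy * dy) * widthPerEyeDistance,
    rollRadians: Math.atan2(dy, dx), // 0 when the eyes are level
  };
}

const level = placeSunglasses({ x: 100, y: 100 }, { x: 160, y: 100 });
console.log(level); // { centerX: 130, centerY: 100, width: 120, rollRadians: 0 }
```

With a 2D canvas, the result maps onto `ctx.translate(t.centerX, t.centerY)` and `ctx.rotate(t.rollRadians)`, followed by `ctx.drawImage(...)` centered on the origin.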

This precise mapping of virtual elements onto the user’s face allows for immersive experiences where digital objects seamlessly interact with real-world surroundings. By recognizing and understanding each facial feature, developers can create engaging applications that respond to users’ expressions and movements.

Live face detection technology facilitates accurate identification and tracking of these features in real-time, ensuring seamless integration with augmented reality applications. This creates opportunities to develop interactive games, educational tools, or social media filters that react dynamically to users’ facial gestures and expressions.

Smartphone Security Integration

Integrating live face detection with smartphone security systems offers a secure and convenient way to authenticate users based on their unique facial features. With advancements in facial recognition technology integrated into smartphones, individuals can unlock their devices simply by looking at them.

This not only streamlines access but also enhances security by ensuring that only authorized individuals can unlock the device or access specific applications. Combining live face detection with facial recognition strengthens this further, because the user's identity can be re-verified while they interact with apps or services on their smartphone.
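Verification of this kind is often implemented by comparing feature vectors. A model, which is not shown here and is assumed, converts the enrolled face and each new camera frame into fixed-length vectors. The user is accepted when the two vectors are similar enough. The sketch uses cosine similarity. `isSameUser` and the 0.8 threshold are hypothetical; real systems tune the threshold on labeled data.

```typescript
// Compare two face feature vectors (hypothetical: produced by some face
// embedding model) with cosine similarity.
function cosineSimilarity(a: readonly number[], b: readonly number[]): number {
  if (a.length !== b.length || a.length === 0) {
    throw new Error("vectors must be non-empty and the same length");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // undefined for zero vectors; treat as no match
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Accept the probe if it points in nearly the same direction as the enrolled template.
function isSameUser(enrolled: readonly number[], probe: readonly number[], threshold = 0.8): boolean {
  return cosineSimilarity(enrolled, probe) >= threshold;
}

const template = [0.2, 0.9, 0.4];
console.log(isSameUser(template, [0.21, 0.88, 0.41])); // true
console.log(isSameUser(template, [0.9, -0.1, 0.2]));   // false
```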

For instance, banking apps may use live face detection as part of their multi-factor authentication to protect access to sensitive financial information. Integrated into areas such as mobile payments and document verification, the technology becomes an integral part of overall smartphone security.

AR Foundation Overview

Project Setup

Setting up an AR project with live face detection starts with choosing the right software development tools and frameworks. The project needs access to camera APIs that capture a real-time video feed, which is crucial for detecting facial features accurately. It also needs a face detection library or API that identifies and tracks facial landmarks and expressions.
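In a browser, camera access goes through the standard `navigator.mediaDevices.getUserMedia()` call, which takes a constraints object. The helper below only builds that object. `faceCameraConstraints` is a hypothetical name, and the 1280x720 at 30 fps values are assumptions to adjust per device. Using `ideal` rather than `exact` lets the browser fall back to the closest mode the camera supports instead of failing.

```typescript
// Builds a constraints object for getUserMedia that asks for the front camera.
// Hypothetical helper; the values are "ideal" hints, not hard requirements.
function faceCameraConstraints(idealWidth = 1280, idealHeight = 720, idealFps = 30) {
  return {
    audio: false,
    video: {
      facingMode: "user", // front-facing camera on phones and laptops
      width: { ideal: idealWidth },
      height: { ideal: idealHeight },
      frameRate: { ideal: idealFps },
    },
  };
}

// In the browser (not run here):
//   const stream = await navigator.mediaDevices.getUserMedia(faceCameraConstraints());
//   videoElement.srcObject = stream;
//   await videoElement.play();
console.log(JSON.stringify(faceCameraConstraints()));
```

The resulting video element then serves as the input frame source for whichever face detection library the project uses.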

For instance, an application built with AR Foundation, Unity's cross-platform AR framework, is developed in Unity. It relies on the platform SDKs underneath it: ARKit on iOS and ARCore on Android. These tools provide the environment for integrating camera access and support advanced functionality such as custom textures and filters.

Alternatively, developers may use libraries such as OpenCV or Dlib, or Google's ML Kit, to add face detection capabilities to their projects. Used within the chosen development framework, these libraries and APIs provide the accurate real-time facial feature recognition that engaging augmented reality experiences require.

Custom AR Textures

Custom AR textures let developers build unique, personalized filters by applying their own textures, colors, or patterns to specific facial regions detected through live face tracking. This level of customization encourages creativity, since filters can be designed around individual preferences.

For example, utilizing custom AR textures enables developers to create interactive virtual makeup applications that precisely overlay various cosmetic products on users’ faces during live video sessions. Leveraging this technology not only provides immersive user experiences but also opens up opportunities for brands within the beauty industry seeking innovative ways to engage with consumers through augmented reality.
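At its simplest, a virtual lipstick or blush layer is an alpha blend restricted to a facial region. The sketch assumes RGBA pixels such as those returned by the canvas `getImageData()` call. It also assumes a per-pixel mask in the range 0 to 1 that marks the region, such as the lips. Producing that mask from tracked landmarks is outside its scope, and `tintRegion` is a hypothetical helper.

```typescript
// Alpha-blend a cosmetic color into the pixels selected by a soft mask.
// pixels: RGBA bytes (4 per pixel), e.g. ImageData.data from a canvas.
// mask:   one value per pixel, 0 = untouched, 1 = fully inside the region.
function tintRegion(
  pixels: Uint8ClampedArray,
  mask: Float32Array,
  color: [number, number, number],
  opacity: number
): void {
  if (mask.length * 4 !== pixels.length) {
    throw new Error("mask must have one entry per RGBA pixel");
  }
  for (let i = 0; i < mask.length; i++) {
    const a = opacity * mask[i]; // effective blend weight for this pixel
    if (a <= 0) continue;
    const p = i * 4;
    for (let c = 0; c < 3; c++) {
      // Linear interpolation toward the target color; alpha (p + 3) is kept.
      pixels[p + c] = pixels[p + c] * (1 - a) + color[c] * a;
    }
  }
}

const px = new Uint8ClampedArray([100, 100, 100, 255, 100, 100, 100, 255]);
tintRegion(px, new Float32Array([1, 0]), [200, 0, 0], 0.5);
console.log(Array.prototype.slice.call(px)); // [150, 50, 50, 255, 100, 100, 100, 255]
```

A soft-edged mask, with values between 0 and 1 near the region's border, keeps the makeup from ending in a hard line. After tinting, the pixels would be written back with `putImageData()`.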

Custom AR textures offer extensive creative freedom when designing virtual masks or accessories that seamlessly align with users’ facial movements and expressions. By enabling precise placement of customized elements onto different parts of the face in real time, developers can deliver captivating interactions that resonate with users across diverse demographics.

JavaScript and WebGL Libraries

Lightweight Face Tracking

Lightweight face tracking is crucial for real-time AR experiences, especially on devices with limited resources. Lightweight face tracking algorithms keep performance acceptable even on low-end smartphones. They deliver smooth, responsive live face detection in AR while keeping computational requirements to a minimum.

This type of optimization keeps AR content aligned with the user's facial movements without noticeable lag. On the rendering side, libraries like Three.js use WebGL to create lightweight yet powerful 3D visualizations directly in the web browser. This allows efficient real-time rendering of complex scenes, such as 3D objects attached to a face that a separate face detection library is tracking.

Efficient performance on resource-constrained devices

Real-time face tracking with minimal computational requirements

Smooth and responsive AR experiences even on low-end smartphones
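One common way to keep tracking lightweight is to search for the face only near where it was in the previous frame. The full-frame search is needed only when the face is lost. The sketch computes that restricted search region. `searchRegion` and the 50% margin are illustrative assumptions rather than settings from any particular library.

```typescript
// Restrict the next detection pass to an expanded box around the last
// known face position, clamped to the frame. Hypothetical helper.
interface TrackBox {
  x: number;
  y: number;
  width: number;
  height: number;
}

function searchRegion(
  previous: TrackBox,
  frameWidth: number,
  frameHeight: number,
  margin = 0.5 // pad by 50% of the face size on each side (assumed value)
): TrackBox {
  const padX = previous.width * margin;
  const padY = previous.height * margin;
  const x0 = Math.max(0, Math.floor(previous.x - padX));
  const y0 = Math.max(0, Math.floor(previous.y - padY));
  const x1 = Math.min(frameWidth, Math.ceil(previous.x + previous.width + padX));
  const y1 = Math.min(frameHeight, Math.ceil(previous.y + previous.height + padY));
  return { x: x0, y: y0, width: x1 - x0, height: y1 - y0 };
}

const roi = searchRegion({ x: 280, y: 200, width: 80, height: 80 }, 640, 480);
const searchedFraction = (roi.width * roi.height) / (640 * 480);
console.log(roi, searchedFraction); // 160x160 region, roughly 8% of the frame
```

Scanning about a twelfth of the pixels cuts per-frame work accordingly, which matters most on the low-end devices described above.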

Robust AR Filters

Robust AR filters play a vital role in ensuring that virtual elements accurately track facial movements and expressions in various scenarios. By leveraging WebGL contexts through JavaScript libraries, these filters enhance the realism and believability of augmented reality effects by precisely aligning virtual objects with different facial features.

For example, some libraries use advanced computer vision techniques combined with WebGL capabilities to create robust filters that can accurately overlay digital masks or effects onto users’ faces during live video streams. The seamless integration of these robust filters into web-based applications enables engaging and immersive interactions between users and augmented reality content.
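Precise alignment often comes down to converting between coordinate systems correctly. Suppose a tracker reports the face center in WebGL-style normalized device coordinates, where x and y run from -1 to 1 and y points up. The sketch converts such a point to canvas pixels, where the origin is top-left and y points down. Whether a given library such as jeelizfacefilter uses this convention should be checked against its documentation. `ndcToCanvas` is a hypothetical name.

```typescript
// Convert normalized device coordinates (-1..1, y up) to canvas pixels
// (0..width, 0..height, y down).
function ndcToCanvas(
  xNdc: number,
  yNdc: number,
  canvasWidth: number,
  canvasHeight: number
): { px: number; py: number } {
  return {
    px: ((xNdc + 1) / 2) * canvasWidth,
    py: ((1 - yNdc) / 2) * canvasHeight, // flip: NDC y = +1 is the top edge
  };
}

console.log(ndcToCanvas(0, 0, 640, 480));  // { px: 320, py: 240 } (center)
console.log(ndcToCanvas(-1, 1, 640, 480)); // { px: 0, py: 0 } (top-left)
```

Getting the y flip wrong is a classic cause of overlays that move in the opposite direction to the face.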

Optimization Techniques

Canvas Resolutions

Canvas resolutions, or the dimensions of the virtual canvas where AR effects are rendered, play a pivotal role in live face detection in AR. Optimizing these resolutions is crucial for achieving high-quality visuals while maintaining optimal performance. By balancing resolution with device capabilities, developers can ensure an immersive and seamless user experience.

Finding the right balance is essential. Higher resolutions offer sharper visuals but may strain device resources, leading to laggy performance. On the other hand, lower resolutions may compromise visual quality. Therefore, striking a balance that aligns with the capabilities of different devices is imperative for delivering consistent user experiences across various platforms.

For instance:

An AR application designed for smartphones with varying processing power should adapt its canvas resolution based on each device’s specifications.

By optimizing canvas resolutions based on specific devices’ capabilities, developers can ensure that users enjoy smooth and visually appealing AR experiences regardless of their hardware.
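One way to adapt the canvas resolution starts from the displayed (CSS) size multiplied by the device pixel ratio. The size is then scaled down uniformly whenever the result exceeds a pixel budget. In the sketch, `chooseCanvasSize` is a hypothetical helper, and the budget is an assumption to tune per target device.

```typescript
// Pick the drawing-buffer size for an AR canvas: match the screen's pixel
// density, but cap the total pixel count to protect the frame rate.
interface CanvasSize {
  width: number;
  height: number;
}

function chooseCanvasSize(
  cssWidth: number,
  cssHeight: number,
  pixelRatio: number, // e.g. window.devicePixelRatio in a browser
  maxPixels: number   // per-device budget (assumed; tune by testing)
): CanvasSize {
  let width = Math.round(cssWidth * pixelRatio);
  let height = Math.round(cssHeight * pixelRatio);
  const total = width * height;
  if (total > maxPixels) {
    // Scale both sides by the same factor so the aspect ratio is preserved.
    const k = Math.sqrt(maxPixels / total);
    width = Math.floor(width * k);
    height = Math.floor(height * k);
  }
  return { width, height };
}

console.log(chooseCanvasSize(1000, 1000, 2, 1000000)); // { width: 1000, height: 1000 }
console.log(chooseCanvasSize(400, 300, 2, 1000000));   // { width: 800, height: 600 }
```

In a browser, the result would be assigned to `canvas.width` and `canvas.height`, while CSS keeps the element at its displayed size. For WebGL, `gl.viewport(0, 0, canvas.width, canvas.height)` would then match the new drawing buffer.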

Video Handling

Efficiently managing video streams from a device's camera is fundamental to successful live face detection in AR applications. Video handling techniques encompass tasks such as frame extraction, compression, and real-time analysis for accurate face tracking within augmented reality scenarios.

By implementing robust video handling techniques, developers can ensure smooth video playback while enabling real-time face detection and tracking in AR environments. This not only enhances user engagement but also contributes to creating compelling and interactive augmented reality experiences.

For example:

When developing an AR filter application that incorporates live face detection features, efficient video handling ensures that facial movements are accurately tracked without compromising overall app performance.

Through effective frame extraction and compression methods during video processing stages, developers can optimize resource utilization without sacrificing accuracy in live face detection algorithms.
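A simple form of frame extraction decouples analysis from rendering. The app draws every frame but runs the detector only at a lower target rate, reusing the last result in between. The sketch assumes timestamps in milliseconds, such as the one `requestAnimationFrame` passes to its callback. `AnalysisThrottle` and the 15-per-second cap are illustrative assumptions.

```typescript
// Decide, frame by frame, whether to run the (expensive) face detector.
// Rendering can still happen every frame using the most recent result.
class AnalysisThrottle {
  private readonly intervalMs: number;
  private lastRun = -Infinity;

  constructor(maxAnalysesPerSecond: number) {
    this.intervalMs = 1000 / maxAnalysesPerSecond;
  }

  shouldAnalyze(timestampMs: number): boolean {
    if (timestampMs - this.lastRun >= this.intervalMs) {
      this.lastRun = timestampMs;
      return true;
    }
    return false;
  }
}

// Simulate 11 frames arriving every 16 ms (about 60 fps) with a 15/s cap.
const throttle = new AnalysisThrottle(15);
const decisions: boolean[] = [];
for (let i = 0; i <= 10; i++) decisions.push(throttle.shouldAnalyze(i * 16));
console.log(decisions.filter(Boolean).length); // 3 (at 0 ms, 80 ms and 160 ms)
```

The check compares against the timestamp of the last analyzed frame, so the actual analysis rate stays at or below the cap. Here it works out to one analysis every 80 ms.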

Multiple Faces and Videos

Frontend Frameworks Integration

Integrating live face detection in AR with frontend frameworks simplifies development. It allows developers to leverage existing UI components and libraries for building interactive interfaces, streamlining the integration of face detection functionalities into web or mobile applications.

This integration lets developers combine live face detection with popular frontend frameworks such as React, Vue.js, or Angular. For instance, in a web-based AR application built with React, developers can wrap the face tracker and its canvas in a reusable component to enable real-time face tracking within the augmented environment. This not only enhances user engagement but also provides a more intuitive and immersive experience.

Moreover, by integrating live face detection with frontend frameworks, developers gain access to extensive documentation and community support associated with these technologies. As a result, they can efficiently troubleshoot issues and enhance the performance of their AR applications through collaborative problem-solving within these developer communities.

Native Hosting

Native hosting refers to deploying live face detection models directly on the user's device. This approach reduces reliance on cloud-based processing and enables offline functionality in AR applications. It also improves privacy, because sensitive facial data stays on the device instead of being transmitted over external networks.

In addition to improved privacy measures, native hosting significantly reduces latency in AR applications that incorporate live face detection features. When users interact with an augmented reality experience that involves multiple faces or videos, having local processing capabilities ensures smoother real-time rendering without network-related delays.

Furthermore, this deployment method aligns with growing trends towards edge computing where computational tasks are performed closer to the end-user rather than relying solely on remote servers. As a result, users benefit from faster response times and reduced data transfer requirements when engaging with diverse AR experiences featuring dynamic facial recognition elements.

Under the Hood of AR Face Detection

Live face detection in AR relies on machine learning algorithms such as Haar cascades or deep neural networks. These algorithms are trained on large amounts of data to recognize patterns and features associated with human faces. Haar cascades pass each image region through a series of increasingly selective classifiers built from Haar-like features. These features respond to contrast patterns such as the eye region being darker than the cheeks. A region that fails any stage is discarded immediately. Deep neural networks, on the other hand, use many layers of interconnected nodes to detect faces from a wide range of visual cues.
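The early-rejection idea behind a Haar cascade can be sketched as follows. Each stage sums the weighted votes of a few weak classifiers, each of which thresholds a single feature value. A window is rejected as soon as a stage's total falls below that stage's threshold, so most non-face windows cost only a stage or two. The data shapes are assumptions for illustration. Feature values are taken as precomputed, and the example stages are hand-made rather than trained.

```typescript
// Sketch of an attentional cascade in the Viola-Jones style.
interface WeakClassifier {
  featureIndex: number; // which precomputed feature value to test
  threshold: number;
  polarity: 1 | -1;     // direction of the inequality
  weight: number;       // vote strength (learned during training in practice)
}

interface CascadeStage {
  classifiers: WeakClassifier[];
  stageThreshold: number;
}

// Returns true only if the window passes every stage.
function passesCascade(features: readonly number[], stages: CascadeStage[]): boolean {
  for (const stage of stages) {
    let score = 0;
    for (const c of stage.classifiers) {
      const v = features[c.featureIndex];
      // Weak classifier votes "face" when polarity * value < polarity * threshold.
      if (c.polarity * v < c.polarity * c.threshold) score += c.weight;
    }
    if (score < stage.stageThreshold) return false; // early rejection
  }
  return true;
}

// Two toy stages with one weak classifier each (hand-made, not trained).
const cascade: CascadeStage[] = [
  { classifiers: [{ featureIndex: 0, threshold: 5, polarity: 1, weight: 1 }], stageThreshold: 1 },
  { classifiers: [{ featureIndex: 1, threshold: 10, polarity: -1, weight: 1 }], stageThreshold: 1 },
];
console.log(passesCascade([3, 20], cascade)); // true: passes both stages
console.log(passesCascade([7, 20], cascade)); // false: rejected at stage 1
```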

Using these techniques, AR face detection can identify faces accurately and in real time across different environments. Even when several faces appear in the video feed of an AR application, the system can locate each one individually.

This advanced technology allows for seamless integration with various platforms including iOS, Android, and web browsers. Ensuring compatibility across different platforms is crucial for broadening the reach of live face detection in AR applications. By adapting the implementation to platform-specific APIs and frameworks, developers can ensure that their AR applications can be accessed by a wide range of users regardless of their device preferences.

Creating Engaging AR Facial Filters

To create captivating AR filters driven by live face detection, developers need to grasp the fundamentals of filter design: color spaces, blending modes, and image processing techniques. By experimenting with different effects and adjusting parameters, developers can produce visually appealing and realistic AR filters.

Working with color spaces such as RGB (red, green, blue) and HSL (hue, saturation, lightness) is essential for vibrant, eye-catching effects. HSL is especially useful because it separates a color's hue from its lightness, so a filter can change a color while keeping the original shading. Mastering blending modes, in turn, enables seamless integration of virtual elements with real-world surroundings in AR applications.
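Converting to HSL makes hue-based effects straightforward, for example recoloring hair while keeping its highlights. Below is the standard RGB-to-HSL conversion for 8-bit channels. It returns hue in degrees and saturation and lightness in the range 0 to 1. The function name is illustrative.

```typescript
// Standard RGB (0-255 per channel) to HSL conversion.
// Returns [hue in degrees 0-360, saturation 0-1, lightness 0-1].
function rgbToHsl(r: number, g: number, b: number): [number, number, number] {
  const rn = r / 255;
  const gn = g / 255;
  const bn = b / 255;
  const max = Math.max(rn, gn, bn);
  const min = Math.min(rn, gn, bn);
  const l = (max + min) / 2;
  const d = max - min;
  if (d === 0) return [0, 0, l]; // gray: hue and saturation undefined, use 0
  const s = d / (1 - Math.abs(2 * l - 1));
  let h: number;
  if (max === rn) h = ((gn - bn) / d) % 6;
  else if (max === gn) h = (bn - rn) / d + 2;
  else h = (rn - gn) / d + 4;
  h *= 60;
  if (h < 0) h += 360; // wrap negative hues (e.g. magenta) into 0-360
  return [h, s, l];
}

console.log(rgbToHsl(255, 0, 0));   // [0, 1, 0.5]
console.log(rgbToHsl(0, 0, 255));   // [240, 1, 0.5]
console.log(rgbToHsl(255, 0, 255)); // [300, 1, 0.5]
```

A hue-shift filter would convert each pixel, rotate the hue, and convert back. Because lightness is left alone, the original shading survives.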

Developers should also explore image processing techniques like edge detection and blurring to improve the overall quality of facial filters. For instance, edge detection algorithms allow precise outlining of facial features within an AR environment. Mastering these filter design basics helps developers make their AR facial filters more appealing and realistic.
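Edge detection of this kind is often done with the Sobel operator. It estimates horizontal and vertical intensity gradients with two 3x3 kernels and combines them into an edge strength. The sketch works on a grayscale frame stored row by row in a `Float32Array`. That layout and the function name are assumptions, and border pixels are simply left at zero.

```typescript
// Sobel gradient magnitude for a grayscale image stored row-major.
function sobelMagnitude(gray: Float32Array, width: number, height: number): Float32Array {
  const out = new Float32Array(width * height); // border pixels stay 0
  for (let y = 1; y < height - 1; y++) {
    for (let x = 1; x < width - 1; x++) {
      // Pixel value at offset (dx, dy) from the current pixel.
      const at = (dx: number, dy: number): number => gray[(y + dy) * width + (x + dx)];
      const gx = -at(-1, -1) - 2 * at(-1, 0) - at(-1, 1) + at(1, -1) + 2 * at(1, 0) + at(1, 1);
      const gy = -at(-1, -1) - 2 * at(0, -1) - at(1, -1) + at(-1, 1) + 2 * at(0, 1) + at(1, 1);
      out[y * width + x] = Math.sqrt(gx * gx + gy * gy);
    }
  }
  return out;
}

// 4x3 image with a vertical dark-to-bright edge between columns 1 and 2.
const grayFrame = new Float32Array([
  0, 0, 1, 1,
  0, 0, 1, 1,
  0, 0, 1, 1,
]);
const edges = sobelMagnitude(grayFrame, 4, 3);
console.log(edges[1 * 4 + 1], edges[1 * 4 + 2]); // 4 4
```

For a live filter, the same per-pixel computation would typically move into a WebGL fragment shader so that it runs on the GPU.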

User Experience Enhancement

The integration of live face detection technology not only enriches the user experience but also enables interactive engagement with personalized AR content. With live face detection provided by tools like ARCore's Augmented Faces API or the JeelizFaceFilter library, users can interact with virtual elements in real time using their own facial expressions. This immersive experience captivates users by letting them see themselves reflected in a dynamic digital environment.

By leveraging live face detection functionality within augmented reality applications, developers can enable users to try on virtual makeup products or experiment with animated accessories that respond directly to their movements—enhancing interactivity while providing a personalized touch. Ultimately, the seamless integration of live face detection elevates user engagement within AR experiences, fostering a deeper connection between users and digital content.

Conclusion

You’ve now delved into the intricate world of live face detection in augmented reality. From understanding the Viola-Jones framework to exploring ARKit and AR Foundation, you’ve gained insight into the fascinating technologies driving this innovative field. As you navigate the complexities of optimizing face detection, creating engaging AR facial filters, and dealing with multiple faces and videos, remember that the possibilities are as limitless as your creativity.

So, go ahead and dive into the realm of AR face detection with confidence. Experiment, innovate, and push the boundaries because the future of AR is in your hands. Keep exploring, keep creating, and keep pushing for new breakthroughs in this exciting domain!

Frequently Asked Questions

How does the Viola-Jones Framework contribute to live face detection in AR? The Viola-Jones Framework is a popular classical method for detecting human faces in camera video. Integrated into an AR application, it detects faces in each frame in real time, which makes it possible to track them and apply AR effects and filters. Newer libraries such as JeelizFaceFilter pursue the same goal with neural networks instead.

The Viola-Jones Framework is a key player in face detection. It relies on Haar-like features and integral images, which let it analyze image regions efficiently enough to form the foundation of real-time face detection.
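The two ideas named above fit in a few lines. An integral image stores, at each position, the sum of all pixels above and to the left. The sum over any rectangle then takes four lookups, regardless of the rectangle's size. A two-rectangle Haar-like feature is the difference between two such sums. The sketch pads the table with a zero row and column to avoid bounds checks. The helper names are illustrative.

```typescript
// Integral image padded with a leading zero row and column:
// ii[y][x] = sum of gray[0..y-1][0..x-1].
function integralImage(gray: number[][]): number[][] {
  const h = gray.length;
  const w = gray[0].length;
  const ii: number[][] = [];
  for (let y = 0; y <= h; y++) {
    const row: number[] = [];
    for (let x = 0; x <= w; x++) row.push(0);
    ii.push(row);
  }
  for (let y = 1; y <= h; y++) {
    for (let x = 1; x <= w; x++) {
      ii[y][x] = gray[y - 1][x - 1] + ii[y - 1][x] + ii[y][x - 1] - ii[y - 1][x - 1];
    }
  }
  return ii;
}

// Sum of the w x h rectangle whose top-left pixel is (x, y): four lookups.
function rectSum(ii: number[][], x: number, y: number, w: number, h: number): number {
  return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x];
}

// Two-rectangle Haar-like feature: left half minus right half.
function twoRectFeature(ii: number[][], x: number, y: number, w: number, h: number): number {
  const half = Math.floor(w / 2);
  return rectSum(ii, x, y, half, h) - rectSum(ii, x + half, y, half, h);
}

const img = [
  [1, 2, 3],
  [4, 5, 6],
  [7, 8, 9],
];
const table = integralImage(img);
console.log(rectSum(table, 1, 1, 2, 2));        // 28 (5 + 6 + 8 + 9)
console.log(twoRectFeature(table, 0, 0, 2, 3)); // -3 (1 + 4 + 7 minus 2 + 5 + 8)
```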

What are some JavaScript and WebGL libraries used for live face detection in AR? One widely used library is jeelizfacefilter, which provides face detection and tracking in the browser using WebGL. ARCore's Augmented Faces API offers comparable face tracking for native mobile apps rather than JavaScript. Tools like these enable engaging AR experiences by integrating live face detection into applications.

JavaScript and WebGL libraries like Three.js and A-Frame play a crucial role in enabling interactive 3D experiences within web browsers. They provide the necessary tools for creating immersive facial filters and effects.

How can multiple faces be detected simultaneously in live AR environments using jeelizfacefilter and camera video? A neural network detection model can locate multiple faces in real time, in both still images and video.

Optimization techniques such as parallel processing, efficient data structures, and hardware acceleration enable the simultaneous detection of multiple faces. These methods ensure that real-time performance is maintained even with multiple faces present.
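Whatever detector is used, a single face often triggers several overlapping candidate boxes. Multi-face pipelines therefore commonly finish with greedy non-maximum suppression. The highest-scoring box is kept, any remaining box that overlaps a kept box too much (measured by intersection over union) is dropped, and the process repeats. This is a generic technique, shown here as an assumption and not necessarily what jeelizfacefilter does internally. The 0.3 IoU threshold is illustrative.

```typescript
interface FaceCandidate {
  x: number;
  y: number;
  width: number;
  height: number;
  score: number; // detector confidence
}

// Intersection over union of two axis-aligned boxes.
function iou(a: FaceCandidate, b: FaceCandidate): number {
  const ix = Math.max(0, Math.min(a.x + a.width, b.x + b.width) - Math.max(a.x, b.x));
  const iy = Math.max(0, Math.min(a.y + a.height, b.y + b.height) - Math.max(a.y, b.y));
  const inter = ix * iy;
  const union = a.width * a.height + b.width * b.height - inter;
  return union > 0 ? inter / union : 0;
}

// Greedy NMS: keep the best-scoring box, discard boxes that overlap a kept box.
function nonMaxSuppression(candidates: FaceCandidate[], iouThreshold = 0.3): FaceCandidate[] {
  const sorted = candidates.slice().sort((p, q) => q.score - p.score);
  const kept: FaceCandidate[] = [];
  for (const c of sorted) {
    if (kept.every((k) => iou(k, c) <= iouThreshold)) kept.push(c);
  }
  return kept;
}

const faces = nonMaxSuppression([
  { x: 0, y: 0, width: 100, height: 100, score: 0.9 },   // face A
  { x: 10, y: 10, width: 100, height: 100, score: 0.8 }, // duplicate of A
  { x: 300, y: 0, width: 100, height: 100, score: 0.7 }, // face B
]);
console.log(faces.length); // 2
```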

What technologies are involved “under the hood” of AR face detection? Tools such as ARCore's Augmented Faces API and JeelizFaceFilter combine camera input with machine learning models to detect and track human faces.

ARKit, ARCore, and similar frameworks work under the hood to power advanced functionality such as environment tracking, plane detection, lighting estimation, and occlusion handling. These technologies improve the accuracy of facial feature tracking within an augmented reality setting.

How can engaging AR facial filters be created using live face detection technology with the help of jeelizfacefilter? The jeelizfacefilter library tracks faces in the camera input and uses WebGL rendering to apply real-time filters to the detected faces.

Engaging AR facial filters are crafted using a combination of computer vision algorithms, 3D modeling techniques, texture mapping, shader programming, and user interaction design. This fusion results in captivating effects that respond dynamically to users’ facial movements.
