Demographic factors play a significant role in facial image analysis and face recognition technology. Analyzing facial attributes in photos is central to describing facial appearance. Understanding how demographic characteristics affect face recognition and face segmentation is essential for building accurate, reliable classification systems for face recognition and face analysis tasks. The National Institute of Standards and Technology (NIST) has been actively studying how demographics influence facial image analysis, and it has run comprehensive evaluations of algorithm performance, including on soft-biometric tasks. These evaluations help identify and address problems such as racial bias and improve the accuracy of facial attribute recognition. Deep learning algorithms and modern computer vision techniques make it possible to estimate gender, age, and race from facial images. Extracting these demographic attributes supports a range of face recognition tasks, including face detection and face biometrics. The resulting analysis provides essential information for improving the accuracy and performance of facial recognition systems.

Advancing the Tutorial and Validation Process

Tutorial for Automated Demographics Classification

Implementing automated demographics classification with facial image analysis and face recognition technology is easier with a step-by-step tutorial. This tutorial shows how to use soft biometrics and a comprehensive face database to classify demographic attributes, including ethnicity, accurately and efficiently. It covers how to measure classification accuracy on both training and test data.

To begin, you will need a face recognition library or API that can detect faces in a face database and extract their features, typically with deep learning. Convolutional neural networks (CNNs) are the most common choice for feature extraction, pattern recognition, and classification of face images.
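To make the feature-extraction step concrete, here is a minimal pure-Python sketch of what a single convolutional layer computes: a small kernel slides over the image to produce a feature map, which then passes through a ReLU activation. The kernel is hand-picked to respond to vertical edges. In a real CNN the kernel weights are learned during training, and a framework such as PyTorch or TensorFlow would replace these nested loops. The function names and the toy 4x4 patch are illustrative assumptions, not part of any particular library.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid (no padding), stride-1 2D cross-correlation, as computed by a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum over the kernel-sized window anchored at (i, j)
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


def relu(feature_map: Matrix) -> Matrix:
    """Zero out negative responses, as a CNN's activation layer does."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical 4x4 grayscale patch with a vertical edge between columns 1 and 2
patch = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked vertical-edge kernel; a trained CNN learns its own kernels
edge_kernel = [
    [-1, 1],
    [-1, 1],
]
features = relu(conv2d(patch, edge_kernel))
print(features)  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The strong response in the middle column marks the edge. Stacking many learned kernels across several layers is what lets a CNN build up descriptors of facial structure.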

The tutorial also covers techniques that improve demographic classification, including classifiers for age estimation and ethnicity recognition. One such technique is data augmentation, which generates additional training samples by applying transformations such as rotation or scaling to the input images. Augmentation helps face classification algorithms generalize across variations in facial expression, lighting conditions, and pose, which typically improves accuracy on unseen test data.
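A minimal sketch of data augmentation on images stored as nested lists follows. It shows a horizontal flip, a 90-degree rotation, and nearest-neighbour rescaling. The 90-degree rotation is chosen only because it is easy to verify. Face pipelines usually apply small random rotation angles with interpolation, typically through library transforms such as those in torchvision. All function names here are hypothetical.

```python
from typing import List

Image = List[List[float]]


def horizontal_flip(img: Image) -> Image:
    """Mirror the image left-to-right; a mirrored face is still a plausible face."""
    return [list(reversed(row)) for row in img]


def rotate_90(img: Image) -> Image:
    """Rotate 90 degrees clockwise: transpose, then reverse each row."""
    return [list(reversed(col)) for col in zip(*img)]


def rescale_nearest(img: Image, factor: float) -> Image:
    """Resize by the given factor using nearest-neighbour sampling."""
    h, w = len(img), len(img[0])
    new_h, new_w = max(1, round(h * factor)), max(1, round(w * factor))
    return [
        [img[min(h - 1, int(i / factor))][min(w - 1, int(j / factor))] for j in range(new_w)]
        for i in range(new_h)
    ]


def augment(img: Image) -> List[Image]:
    """Return the original image plus three transformed copies for training."""
    return [img, horizontal_flip(img), rotate_90(img), rescale_nearest(img, 2.0)]


sample = [[1, 2], [3, 4]]
variants = augment(sample)
print(len(variants))  # 4
print(variants[1])    # [[2, 1], [4, 3]]
print(variants[2])    # [[3, 1], [4, 2]]
```

Each training image yields several variants that share the same demographic label. This is how augmentation enlarges a dataset without collecting new photos.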

Another important topic in the tutorial is pooling layers in neural networks. Pooling layers reduce the spatial dimensions of feature maps while keeping the strongest responses from each region of an image. This lowers computational cost and makes the learned features more tolerant of small shifts in position. That tolerance matters for face classification algorithms, which must classify images by the distinctive characteristics of a person's face rather than by its exact position in the photo. Because successive pooling stages summarize features at progressively coarser scales, they help capture facial characteristics at different scales. This makes it easier to classify demographic attributes such as race and ethnicity, for example when working with a face database for race and ethnicity recognition.
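The following is a minimal sketch of 2x2 max pooling with stride 2, the most common pooling configuration. It keeps the largest value in each non-overlapping window, halving both spatial dimensions. The `max_pool2d` name and the sample feature map are illustrative. Frameworks provide this as a built-in layer, for example `torch.nn.MaxPool2d` in PyTorch.

```python
from typing import List

Matrix = List[List[float]]


def max_pool2d(fmap: Matrix, size: int = 2, stride: int = 2) -> Matrix:
    """Keep the maximum of each size x size window, stepping by stride."""
    out = []
    for i in range(0, len(fmap) - size + 1, stride):
        row = []
        for j in range(0, len(fmap[0]) - size + 1, stride):
            window = [fmap[i + di][j + dj] for di in range(size) for dj in range(size)]
            row.append(max(window))
        out.append(row)
    return out


fmap = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 3],
]
print(max_pool2d(fmap))  # [[4, 2], [2, 7]]
```

The 4x4 map shrinks to 2x2, but the strongest response in each quadrant survives. That is the "reduce dimensions, keep essential features" trade-off described above.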

By following this tutorial, developers can gain a deeper understanding of the underlying concepts and implement automated demographics classification systems effectively. This includes measuring training and test accuracy across several datasets.

Validation of Classification Techniques

Validating classification techniques is crucial for reliable demographics classification with face recognition. Measuring test accuracy is especially important when the face database or datasets include people of different races. Several validation methods are used to estimate test accuracy and to benchmark face classification algorithms across datasets.

One widely used method is k-fold cross-validation, in which the dataset is divided into k subsets, or folds. The model is trained on k-1 folds and tested on the remaining fold. The process is repeated so that each fold serves as the test set exactly once. The results are then averaged, giving a more stable performance estimate for benchmarking than a single split provides.
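A minimal sketch of k-fold cross-validation over sample indices is shown below, using only the standard library. `train_and_score` is a hypothetical callback: you would supply code that trains a classifier on the training indices and returns its accuracy on the test indices. Libraries such as scikit-learn offer ready-made splitters (`KFold`, `StratifiedKFold`) that do the same job.

```python
import random
from typing import Callable, Iterator, List, Tuple


def k_fold_indices(n_samples: int, k: int, seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for each of k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once so folds do not follow collection order
    # Spread the remainder so fold sizes differ by at most one
    fold_sizes = [n_samples // k + (1 if f < n_samples % k else 0) for f in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


def cross_validate(n_samples: int, k: int,
                   train_and_score: Callable[[List[int], List[int]], float]) -> float:
    """Average the score returned by the (hypothetical) train_and_score callback over k folds."""
    scores = [train_and_score(train, test) for train, test in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)


folds = list(k_fold_indices(10, 3))
print([len(test) for _, test in folds])  # [4, 3, 3]
```

Every sample appears in exactly one test fold, so the averaged score uses all the data for evaluation without ever testing a model on images it was trained on.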

Holdout validation is another common technique, in which the dataset is split once into a training set and a testing set. The face classification model is trained on the training set and evaluated on images from the testing set that it has never seen. This estimates how well the model generalizes to new data.
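Below is a minimal sketch of a holdout split. The 20% test fraction and the fixed seed are assumptions chosen for illustration. The seed makes the split reproducible, so different algorithms can be compared on the same held-out images.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def holdout_split(samples: Sequence[T], test_fraction: float = 0.2,
                  seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle once and split into (train, test); test items are never used for training."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


train, test = holdout_split(range(100), test_fraction=0.2)
print(len(train), len(test))  # 80 20
```

If the face database contains several photos of the same person, split by person rather than by image. Otherwise near-duplicate faces can end up on both sides, and the test set is no longer truly unseen.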

Validation helps determine which algorithms perform best at classifying demographics such as ethnicity. Developers can compare the performance metrics of different techniques on several datasets, including datasets designed specifically for ethnicity classification. This lets them select the most accurate and reliable approach for their use case and use the results to guide further training decisions.

Key Takeaways from Recent Studies

Recent studies have shed light on demographics classification using face recognition, particularly of gender and ethnicity, and on the image datasets used to train and test these systems. They highlight both the potential of the technology and the challenges of building representative training and test datasets.

One key takeaway is the need to address bias in demographic classification algorithms. Research has shown that these algorithms can perform unevenly across demographic groups, for example in gender and ethnicity classification. These disparities often trace back to how groups are represented in the training data. It is crucial to develop fair recognition systems that do not perpetuate existing societal inequalities, and these systems should be trained and evaluated on diverse datasets.

Furthermore, limitations in dataset collection and annotation can reduce the accuracy of ethnicity classification models for specific demographic groups.

Dissecting the NIST Report on Demographics Classification

Purpose of the NIST Report

The NIST report plays a crucial role in the field of face recognition technology through its analysis of algorithm performance on large databases of facial images. Its main objective is to provide a comprehensive analysis of how demographics, including ethnicity and gender, affect accuracy and fairness. By examining these effects, the report aims to guide researchers, developers, and policymakers in building equitable recognition systems based on diverse data.

To achieve this, the report examines how race, gender, age, and other demographic factors influence the performance of face recognition algorithms. It also considers how factors such as image quality and dataset composition affect accuracy and fairness. With this understanding, stakeholders can make informed decisions when developing or deploying facial recognition technology, helping it perform effectively and ethically while minimizing bias.

Significant Findings and Implications

The findings in the NIST report show how gender, ethnicity, and the datasets used affect face recognition algorithms. These findings have significant implications for both developers and users of the technology, particularly regarding the data used to train recognition systems.

One key finding is that accuracy can vary across demographic groups such as gender and ethnicity. This discrepancy can be attributed in part to the datasets used for training. For example, an algorithm may classify some racial or ethnic groups more accurately than others in an ethnicity classification dataset. This emphasizes the importance of continued research and development to ensure fair facial recognition across all demographics, including gender, age, and ethnicity.

Furthermore, the report emphasizes that understanding how demographic groups are represented in datasets is crucial for creating fairer systems. Developers must consider the biases that demographic factors such as gender, ethnicity, and age can introduce during training and testing, and they should analyze their datasets for imbalances in these attributes. Making sure that the training data includes people of different genders and ethnicities helps reduce unintended discrimination when facial recognition technology is deployed.
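A simple first check for dataset imbalance is to count each group's share of the annotations. The sketch below assumes each training image carries one demographic group label. The group names and the 10% threshold are hypothetical and would depend on the population the system is meant to serve.

```python
from collections import Counter
from typing import Dict, Iterable, List


def group_shares(labels: Iterable[str]) -> Dict[str, float]:
    """Fraction of the dataset belonging to each demographic group label."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def flag_underrepresented(labels: Iterable[str], min_share: float) -> List[str]:
    """Groups whose share of the dataset falls below min_share, sorted by name."""
    return sorted(g for g, share in group_shares(labels).items() if share < min_share)


# Hypothetical annotation labels for a 100-image training set
labels = ["group_a"] * 70 + ["group_b"] * 25 + ["group_c"] * 5
print(group_shares(labels))                 # {'group_a': 0.7, 'group_b': 0.25, 'group_c': 0.05}
print(flag_underrepresented(labels, 0.10))  # ['group_c']
```

The same counts can be computed for gender, age bands, or combinations of attributes. Imbalances often show up only at the intersections.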

Understanding Accuracy and Performance

Accuracy and performance are essential metrics for evaluating demographic classification systems within face recognition technology. Evaluation typically involves testing a system on a labeled dataset to determine how well it classifies individuals by attributes such as gender and age. The NIST report provides insights into how these metrics are assessed.

When evaluating accuracy, researchers measure how often an algorithm correctly classifies individuals by ethnicity, gender, age range, or other demographic attributes on a labeled test dataset. The NIST report emphasizes the importance of achieving high accuracy for every demographic group, not only on average, to prevent bias and ensure fairness.
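Reporting accuracy for each group separately, rather than one overall number, is what makes disparities visible. Here is a minimal sketch of that breakdown. The labels, predictions, and group names are hypothetical test results.

```python
from collections import defaultdict
from typing import Dict, Sequence


def accuracy_by_group(y_true: Sequence[str], y_pred: Sequence[str],
                      groups: Sequence[str]) -> Dict[str, float]:
    """Accuracy computed separately for each demographic group."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical gender predictions for six test images from two groups
y_true = ["f", "m", "f", "m", "f", "m"]
y_pred = ["f", "m", "m", "m", "f", "m"]
groups = ["group_a", "group_a", "group_b", "group_b", "group_b", "group_a"]
print(accuracy_by_group(y_true, y_pred, groups))  # {'group_a': 1.0, 'group_b': 0.6666666666666666}
```

Overall accuracy here is 5/6, about 83%. That single figure hides the fact that one group is classified perfectly while the other is misclassified a third of the time.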

Performance evaluation, on the other hand, focuses on the efficiency of classification techniques: processing speed, the computational resources required, and overall system throughput. Understanding these metrics helps developers optimize their algorithms for real-world use, ideally without sacrificing accuracy for any gender or ethnic group.
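The sketch below shows one way to measure processing speed, using `time.perf_counter` from the standard library. `dummy_classify` is a hypothetical stand-in for a real model, so the numbers it produces say nothing about any actual system. Only the measurement pattern is the point.

```python
import time
from typing import Any, Callable, Dict, Sequence


def measure_throughput(classify: Callable[[Any], Any], inputs: Sequence[Any],
                       warmup: int = 2) -> Dict[str, float]:
    """Time classify() over inputs; return images per second and mean latency in ms."""
    for x in inputs[:warmup]:  # warm-up calls (caches, lazy init) are excluded from timing
        classify(x)
    start = time.perf_counter()
    for x in inputs:
        classify(x)
    elapsed = time.perf_counter() - start
    return {
        "images_per_second": len(inputs) / elapsed if elapsed > 0 else float("inf"),
        "mean_latency_ms": 1000.0 * elapsed / len(inputs),
    }


def dummy_classify(img):
    """Hypothetical stand-in; a real classifier would run a trained model here."""
    return "group_a" if sum(map(sum, img)) > 100 else "group_b"


# Fifty synthetic 64x64 "images" with constant intensity
images = [[[i % 256] * 64 for _ in range(64)] for i in range(50)]
print(measure_throughput(dummy_classify, images))
```

For a real model, run the measurement on the target hardware with realistic image sizes. Throughput on a development machine can differ greatly from a deployment device.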

The Practicality of Face Recognition in Real-World Applications

Lab Tests Versus Real-Life Scenarios

Results from controlled lab tests of demographic classification may not reflect how face recognition technology performs in real-life scenarios. Outside the controlled environment of a lab, factors such as varying lighting conditions, image quality, and the diversity of the population being classified can significantly affect accuracy.

For instance, lighting conditions affect how well facial features are captured and recognized by the algorithm. Poor lighting or harsh shadows can obscure important details, making it harder to determine attributes such as gender or ethnicity and lowering accuracy. Similarly, variations in image quality caused by camera resolution or compression can degrade the performance of face recognition algorithms.

Furthermore, real-world scenarios involve people of many ethnicities, ages, genders, and physical appearances. Demographic classification systems must account for this diversity to produce fair and accurate results. When deploying face recognition for demographic classification in real-world settings, it is important to weigh these limitations and challenges.

Security Industry Implications

The implications of face recognition technologies for demographic classification extend beyond research labs into various industries, particularly the security sector. The technology can estimate attributes such as a person's ethnicity, which makes it of interest to security agencies and law enforcement. Understanding how ethnicity and other demographics influence recognition accuracy is crucial for developing robust security systems that rely on facial recognition.

By incorporating demographic information into face recognition algorithms, security systems may be able to identify potential threats more effectively. For example, a system might trigger additional security measures if an individual's estimated age falls within a range associated with higher-risk behavior patterns, or if soft biometrics such as gender or ethnicity match descriptions linked to known threats.

However, such applications must be approached with caution, and ethical concerns about bias and discrimination must be addressed. Fairness and accuracy must be prioritized when implementing demographic classification in security systems, to avoid profiling based on race, ethnicity, or other protected characteristics and to ensure that every individual is treated without bias.

Utilizing Face Classification APIs

Developers who want to add demographic classification to their applications can use face classification APIs that provide tools and pre-trained models. These APIs make it possible to integrate classification of attributes such as age, gender, and ethnicity without building and training a model from scratch.

Face classification APIs give developers access to computer vision models that have been trained on large datasets to classify faces by demographics such as age, gender, and ethnicity. Using these pre-trained models saves time and resources. Developers should still verify that accuracy holds for every demographic group their application serves.

For example, a social media platform could use a face classification API to suggest age-appropriate content based on a user's estimated demographics, possibly also taking ethnicity into account to tailor recommendations. Similarly, an e-commerce website might use facial analysis to personalize product recommendations by the customer's gender or age group. It might also use ethnicity when showcasing products aimed at specific communities.

Delving into the Study’s Methodology and Results

Study Methods and Material Use

To gain insights into demographics classification using face recognition, researchers use a variety of methods and materials, including for ethnicity classification. These methods are crucial for assessing the validity and reliability of research findings.

To study demographics classification, researchers typically use datasets containing facial images from a wide range of age groups, genders, ethnicities, and other demographic categories. These datasets are the foundation for training and testing face recognition algorithms, and their composition directly affects how well a model performs for each group.

Researchers also set up experiments to conduct their studies. A typical setup involves collecting images from individuals of various ethnicities, preprocessing the data to improve its quality, applying deep learning for feature extraction and classification, and evaluating the resulting model's performance.

Understanding these methods shows how complex it is to develop accurate demographics classification models, including for ethnicity. It also shows how carefully researchers must design experiments so that their results hold up in real-world scenarios.

Detailed Procedure and Analysis Plan

A detailed procedure is essential for demographics classification experiments using face recognition technology, especially when analyzing ethnicity. Researchers follow a step-by-step approach that covers data collection, preprocessing, algorithm implementation, and evaluation.

The first step is collecting a large number of facial images from individuals in different demographic groups, including various ethnicities. A dataset that is representative of the population being studied helps ensure that the trained model is accurate and reliable for everyone, not only for the best-represented groups.
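Once a representative dataset is collected, a stratified split keeps every group represented in both the training and test sets in the same proportion. A plain random split can leave a small group almost absent from the test set. The sketch below assumes each record exposes its group label through a `group_of` function. The record layout and group names are hypothetical.

```python
import random
from collections import defaultdict
from typing import Callable, Dict, Hashable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def stratified_split(samples: Sequence[T], group_of: Callable[[T], Hashable],
                     test_fraction: float = 0.2, seed: int = 0) -> Tuple[List[T], List[T]]:
    """Split so that every group keeps the same train/test proportion."""
    by_group: Dict[Hashable, List[T]] = defaultdict(list)
    for sample in samples:
        by_group[group_of(sample)].append(sample)
    rng = random.Random(seed)
    train: List[T] = []
    test: List[T] = []
    for group in sorted(by_group, key=str):  # fixed group order keeps the split reproducible
        members = by_group[group]
        rng.shuffle(members)
        n_test = round(len(members) * test_fraction)
        test.extend(members[:n_test])
        train.extend(members[n_test:])
    return train, test


# Hypothetical (group, image_id) records: 50 images from group_a, 10 from group_b
records = [("group_a", i) for i in range(50)] + [("group_b", i) for i in range(10)]
train, test = stratified_split(records, group_of=lambda r: r[0])
print(sum(1 for g, _ in test if g == "group_a"), sum(1 for g, _ in test if g == "group_b"))  # 10 2
```

Stratification preserves whatever proportions the collected data has. It does not fix a group that was under-collected in the first place.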

Once collected, the data is preprocessed with techniques such as normalization and face alignment to reduce variation caused by lighting conditions and pose differences. This prepares the dataset for further analysis.
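One common normalization is per-image standardization. It subtracts each image's mean intensity and divides by its standard deviation, so a uniform change in brightness or contrast no longer changes the input. The sketch below covers only that step. Face alignment, which rotates and crops the face so that landmarks such as the eyes sit at fixed positions, usually relies on a separate landmark detector and is not shown. The function name is illustrative.

```python
import math
from typing import List

Image = List[List[float]]


def standardize(img: Image) -> Image:
    """Per-image z-score: subtract the mean intensity, divide by the standard deviation."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    variance = sum((p - mean) ** 2 for p in pixels) / len(pixels)
    std = math.sqrt(variance) or 1.0  # avoid dividing by zero on a completely flat image
    return [[(p - mean) / std for p in row] for row in img]


dark = [[10, 20], [30, 40]]
bright = [[110, 120], [130, 140]]  # same toy patch with a uniform brightness offset
print(standardize(dark) == standardize(bright))  # True
```

This removes global lighting differences but not local ones. Shadows that fall across part of a face still change the standardized pixels.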

Next comes algorithm implementation with deep learning. Deep neural networks with multiple layers are commonly used to learn complex patterns directly from raw image data. Researchers then tune the networks' parameters until the model reaches its best performance on validation data.

Finally, an evaluation phase measures how well the model classifies demographic attributes, including ethnicity, from facial features. Metrics such as accuracy, precision, recall, and F1 score quantify its performance and allow comparison with existing approaches.
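A confusion matrix is a convenient starting point for this evaluation, because accuracy, precision, and recall can all be read from it. The minimal sketch below uses hypothetical age-group labels and predictions. Rows are the true classes and columns are the predicted classes.

```python
from typing import List, Sequence


def confusion_matrix(y_true: Sequence[str], y_pred: Sequence[str],
                     labels: Sequence[str]) -> List[List[int]]:
    """Rows are true classes, columns are predicted classes, both in the order of labels."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for truth, pred in zip(y_true, y_pred):
        matrix[index[truth]][index[pred]] += 1
    return matrix


# Hypothetical age-group predictions for five test images
labels = ["18-29", "30-44", "45+"]
y_true = ["18-29", "18-29", "30-44", "45+", "45+"]
y_pred = ["18-29", "30-44", "30-44", "45+", "30-44"]
for label, row in zip(labels, confusion_matrix(y_true, y_pred, labels)):
    print(label, row)
# 18-29 [1, 1, 0]
# 30-44 [0, 1, 0]
# 45+ [0, 1, 1]
```

The diagonal holds correct predictions, so accuracy here is 3/5. The off-diagonal cells show which groups the model confuses. In this toy case, both errors were predicted as the middle age group.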

The analysis plan guides researchers in interpreting the results of demographics classification experiments. It helps them draw meaningful conclusions about how effectively the model classifies age, gender, ethnicity, and other demographic attributes using face recognition technology.

Results and General Discussion

The results of demographics classification experiments reveal both the capabilities and the limitations of face recognition technology, including in ethnicity classification. They show how accurately algorithms can classify individuals of different ethnicities from their facial features.

Researchers interpret these results in the broader context of face recognition technology. They explore applications where accurate demographic classification, including of ethnicity, can be beneficial, such as personalized marketing or improved human-computer interaction.

The general discussion section goes beyond presenting individual results.

Evaluating Algorithm Performance for Demographic Classification

Gender-Based Classification Results

Gender-based demographic classification is a crucial part of face recognition algorithms. In recent experiments, the accuracy and performance of gender classification models were evaluated, with the aim of understanding their potential biases and developing fair, unbiased systems.

The results of these experiments shed light on how effective gender classification algorithms are. Accuracy varied with several factors, including lighting conditions, facial expressions, and image quality.

One notable finding was that gender classification algorithms tended to classify male faces more accurately than female faces, suggesting that the models are biased toward male faces. This discrepancy may stem from differences in facial features between genders or from biases in the training datasets.

To ensure fairness in algorithmic decision-making, it is crucial to address any biases that may arise during gender-based classification. By identifying and mitigating these biases, developers can create more equitable systems that accurately classify individuals regardless of their gender.

Age-Based Classification Insights

Accurately classifying age groups using face recognition technology poses unique challenges. Age-based demographic classification experiments have provided valuable insights into improving age estimation algorithms.

The results from these experiments revealed that age classification accuracy decreases as the age range to be covered increases: classifying individuals within a narrow age range tends to yield higher accuracy than classifying across broad age categories.

Furthermore, it was observed that certain factors such as ethnicity and environmental conditions can impact the accuracy of age estimation algorithms. For example, skin tone variations among different ethnicities can affect how well an algorithm predicts someone’s age.

Developers are continuously working towards refining age estimation algorithms by incorporating additional data sources and improving model training techniques. These efforts aim to enhance the accuracy and reliability of age-based demographic classifications in face recognition systems.

Race-Based Classification Outcomes

Race-based demographic classification using facial images is a complex task due to various factors such as diverse facial features across different races and potential biases in the algorithms. The outcomes of race-based classification experiments provide valuable insights into understanding and addressing these challenges.

The results from these experiments highlighted that race classification algorithms may exhibit biases towards certain racial groups. This bias can lead to inaccurate classifications and potential discrimination in real-world applications.

To develop equitable recognition systems, it is crucial to address these biases and ensure fair treatment for individuals of all races. Researchers are actively working on improving race-based classification algorithms by incorporating diverse training datasets and implementing fairness measures.
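One simple, widely used fairness measure is to reweight training samples by inverse group frequency, so that each group contributes equally to the training loss. This is not necessarily the method used in the experiments described here. The sketch below computes such weights. The group labels are hypothetical, and in practice each sample's loss would be multiplied by its weight during training.

```python
from collections import Counter
from typing import List, Sequence


def inverse_frequency_weights(groups: Sequence[str]) -> List[float]:
    """Per-sample weights so that every group's weights sum to the same total.

    weight = n_samples / (n_groups * count_of_that_group); the weights average to 1.
    """
    counts = Counter(groups)
    n_samples, n_groups = len(groups), len(counts)
    return [n_samples / (n_groups * counts[g]) for g in groups]


groups = ["group_a", "group_a", "group_a", "group_b"]
weights = inverse_frequency_weights(groups)
print(weights)  # [0.6666666666666666, 0.6666666666666666, 0.6666666666666666, 2.0]
```

The single group_b sample now carries as much total weight as the three group_a samples combined. Reweighting cannot create information that is missing from the data, so it complements collecting more diverse images rather than replacing it.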

By striving for unbiased race-based classifications, developers can create face recognition systems that accurately identify individuals’ races without perpetuating stereotypes or discriminatory practices.

The Ethical Landscape of Demographics Classification

Addressing Ethical Considerations

Demographics classification using face recognition technology raises important ethical considerations that must be addressed. One such consideration is ensuring fairness in the classification process. It is crucial to develop algorithms and systems that do not discriminate against individuals based on their demographic characteristics, such as different ethnicities. Social scientists emphasize the need for transparency and accountability in these systems to prevent biased outcomes.

Privacy is another key ethical concern. Collecting and analyzing personal data through face recognition technology can raise privacy concerns among individuals. Striking a balance between accurate classification and protecting privacy rights is essential. Implementing robust data protection measures, obtaining informed consent, and providing individuals with control over their data are some strategies to address this concern.

Building Equitable Recognition Systems

To build equitable recognition systems, it is necessary to address biases and disparities that may exist in demographic classification. Algorithms should be designed with diversity in mind, accounting for various facial features across different populations. By including representative datasets during algorithm development, we can ensure equal representation of all groups.

Inclusivity plays a vital role in building equitable recognition systems. It involves considering the needs of diverse populations and avoiding exclusion or marginalization. For example, if a system primarily trained on one ethnicity’s data is used for classifying other ethnicities, it may lead to inaccurate results or even discrimination.

Moreover, it is important to involve individuals from diverse backgrounds throughout the development process of face recognition technology. This ensures that different perspectives are considered and potential biases are identified early on.

Recommendations for Fairness and Accuracy

Achieving fairness and accuracy in demographic classification requires implementing specific recommendations. One recommendation is to reduce biases within algorithms by regularly auditing them for potential discriminatory outcomes across different demographic groups. This will help identify any disparities in performance and enable corrective measures to be taken.
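A minimal sketch of such an audit compares each group's accuracy with the best-performing group and flags gaps above a tolerance. The 0.05 tolerance and the accuracy figures are hypothetical. Real audits might instead compare ratios of error rates, or examine false positives and false negatives separately.

```python
from typing import Dict, List, Tuple


def audit_disparity(group_accuracy: Dict[str, float],
                    max_gap: float = 0.05) -> List[Tuple[str, float]]:
    """Return (group, gap) for groups trailing the best-performing group by more than max_gap."""
    best = max(group_accuracy.values())
    flagged = [(g, best - acc) for g, acc in group_accuracy.items() if best - acc > max_gap]
    return sorted(flagged)


# Hypothetical per-group accuracies from one evaluation run
accuracies = {"group_a": 0.97, "group_b": 0.95, "group_c": 0.88}
for group, gap in audit_disparity(accuracies):
    print(f"{group}: {gap:.2f} below best group")  # group_c: 0.09 below best group
```

Running the same audit after every retraining turns "regular auditing" into a repeatable check rather than a one-off review.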

Improving algorithms’ performance through continuous learning and refinement is another crucial recommendation. By incorporating feedback from users and social scientists, algorithms can be optimized to provide more accurate and reliable demographic classification results.

It is important to have a diverse team of researchers and developers working on face recognition technology. This diversity brings different perspectives and experiences to the table, reducing the likelihood of biased or discriminatory outcomes.

Looking at Specific Demographic Classifications

Ethnicity Classification with CNN Models

Convolutional neural network (CNN) models have proven effective at classifying ethnicity in face recognition. They use deep learning to learn facial features and patterns that help predict an individual's ethnicity. By training CNN models on diverse datasets that represent many ethnic groups, researchers can develop robust algorithms that accurately classify individuals into their respective demographic groups.
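In a typical CNN classifier, the final layer outputs one raw score (logit) per class, and a softmax turns these scores into probabilities. The minimal sketch below shows that last step. The logits and class names are hypothetical. A low top probability can be one sign that an image is ambiguous, although network probabilities are not always well calibrated.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores to probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits: Sequence[float], class_names: Sequence[str]) -> Tuple[str, float]:
    """Return the most probable class name and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best], probs[best]


# Hypothetical scores from a CNN's final layer for one face image
label, confidence = predict([2.0, 1.0, 0.1], ["group_a", "group_b", "group_c"])
print(label, round(confidence, 3))  # group_a 0.659
```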

Understanding the capabilities and limitations of CNN models is crucial for ethnicity-based demographic classification. While these models can achieve high accuracy rates in identifying certain ethnicities, they may encounter challenges when dealing with overlapping features or mixed-race individuals. It is essential to continuously improve and fine-tune the algorithms to ensure accurate classification across diverse populations.

Racial Discrimination in Law Enforcement Contexts

Demographic classification using face recognition technology has significant implications for law enforcement contexts. However, it also raises concerns about potential racial discrimination. The reliance on facial recognition algorithms that are trained on biased or unrepresentative datasets may lead to unfair outcomes, disproportionately impacting certain racial or ethnic groups.

To mitigate the risks of racial discrimination, transparency, accountability, and ethical guidelines must be established when implementing face recognition technology in law enforcement. Regular audits and assessments should be conducted to ensure fairness and prevent any misuse or abuse of this technology. Ongoing research and development efforts should focus on addressing algorithmic biases and improving the accuracy of demographic classifications across all racial and ethnic groups.

Indonesian Muslim Student Dataset Analysis

Analyzing a dataset of facial images of Indonesian Muslim students provides valuable insight into the challenges of classifying demographics within this specific population and into the accuracy that can be achieved. The analysis shows how well existing demographic classification algorithms perform on datasets representing distinct cultural backgrounds.

The Indonesian Muslim student dataset analysis reveals that while some algorithms may achieve high accuracy rates overall, they might struggle with specific demographic groups. Factors such as variations in facial expressions, head coverings, or cultural attire can pose challenges for accurate classification. Researchers must continuously refine and adapt the algorithms to account for these unique characteristics and improve the accuracy of demographic classifications within this population.

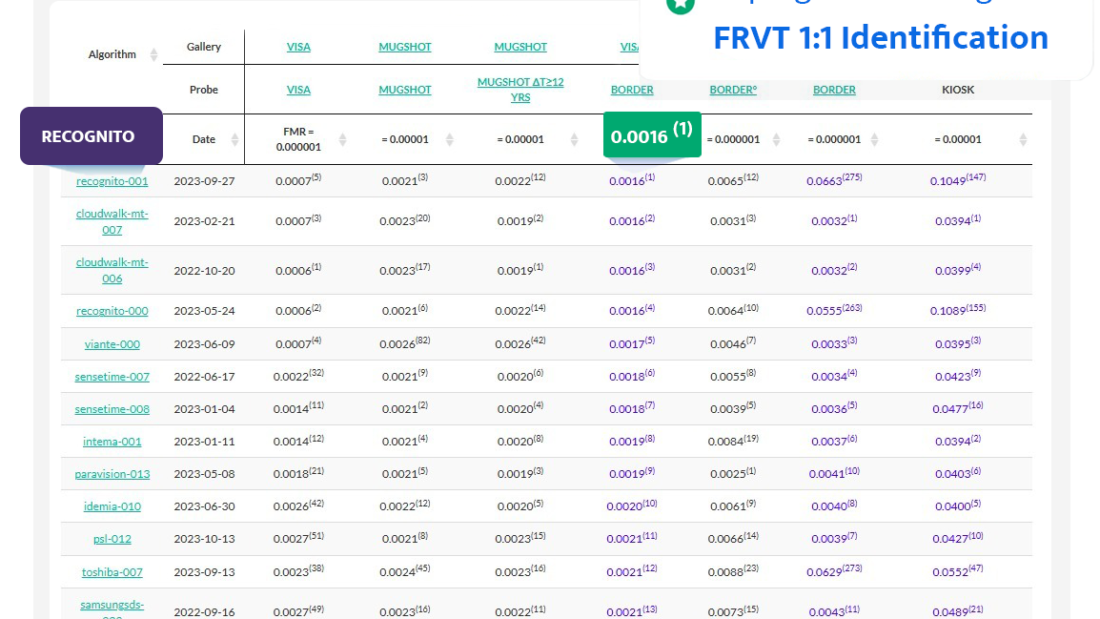

Setting Standards for Facial Recognition Algorithms

Benchmarking Evaluation Methods

Benchmarking evaluation methods are essential in the field of facial recognition algorithms, particularly for demographic classification. These methods let researchers and developers compare the performance of different algorithms and determine how accurately each one classifies demographic attributes from facial features.

Understanding benchmarking evaluation methods is crucial for assessing the reliability and accuracy of various demographic classification techniques. By using these methods, researchers can objectively evaluate the performance of different algorithms and identify areas that require improvement.

Commonly used evaluation metrics in benchmarking include accuracy, precision, recall, and F1 score. Accuracy is the fraction of all predictions that are correct. Precision is computed per demographic category: of the instances the algorithm assigned to that category, it is the fraction that actually belong to it. Recall is the fraction of instances actually belonging to a category that the algorithm correctly assigns to it. The F1 score is the harmonic mean of precision and recall, which gives a single balanced measure of an algorithm’s performance.
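The following sketch computes these metrics from their standard definitions: precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 = 2 · precision · recall / (precision + recall). One category is treated as "positive" at a time. The category labels are hypothetical placeholders. Libraries such as scikit-learn provide equivalent functions, but this version spells out the counting.

```python
def per_class_metrics(y_true, y_pred, positive):
    """Precision, recall and F1 for one category treated as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # correctly assigned
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # wrongly assigned
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def accuracy(y_true, y_pred):
    """Fraction of all predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical labels for two demographic categories "a" and "b".
y_true = ["a", "a", "a", "a", "b", "b"]
y_pred = ["a", "a", "a", "b", "a", "a"]
p, r, f = per_class_metrics(y_true, y_pred, "a")
print(accuracy(y_true, y_pred))  # 0.5
print(p, r, round(f, 3))         # 0.6 0.75 0.667
```

Here category "a" has high recall (three of its four members are found) but lower precision, because both "b" instances were also labelled "a". Accuracy alone would not reveal that asymmetry.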

Relevant Research Papers Overview

To gain deeper insights into demographics classification using face recognition, it is important to explore relevant research papers in this field. These papers offer valuable contributions by presenting novel methodologies, key findings, and advancements in demographics classification techniques.

One such paper, titled “Demographic Classification Using Convolutional Neural Networks”, proposes a deep learning approach for classifying age groups from facial images. The study reports an accuracy of 90% on a large-scale dataset covering diverse age groups.

Another notable research paper, titled “Gender Classification Using Facial Features”, focuses on gender classification through facial feature analysis. The authors combined feature extraction techniques with machine learning classifiers and report high accuracy across different datasets.
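The general "extract features, then classify" pattern can be sketched with a nearest-centroid classifier. This is a stand-in chosen for brevity, not the method used in the paper. The sketch assumes feature vectors (for example, hypothetical distances between facial landmarks) have already been extracted. The class names are placeholders.

```python
import math
from collections import defaultdict

def fit_centroids(features, labels):
    """Average the feature vectors of each class into one centroid per class."""
    sums = {}
    counts = defaultdict(int)
    for vec, lab in zip(features, labels):
        if lab not in sums:
            sums[lab] = [0.0] * len(vec)
        sums[lab] = [s + v for s, v in zip(sums[lab], vec)]
        counts[lab] += 1
    return {lab: [s / counts[lab] for s in sums[lab]] for lab in sums}

def predict(centroids, vec):
    """Assign the class whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda lab: math.dist(centroids[lab], vec))

# Hypothetical pre-extracted 2-D feature vectors with placeholder class names.
features = [[0.1, 0.2], [0.0, 0.1], [0.9, 1.0], [1.0, 0.8]]
labels = ["class_0", "class_0", "class_1", "class_1"]
centroids = fit_centroids(features, labels)
print(predict(centroids, [0.2, 0.1]))  # class_0
print(predict(centroids, [0.8, 0.9]))  # class_1
```

In practice the classifier would be stronger (for example, an SVM or a neural network), but the split between a feature-extraction step and a separate classification step stays the same.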

By reviewing these research papers, researchers can gather inspiration for developing new approaches or improving existing ones in demographics classification using face recognition technology. Understanding the methodologies employed helps researchers gain insights into potential limitations or challenges associated with different approaches.

Introducing Dataset Loaders for Research

Dataset loaders play a crucial role in facilitating research on demographics classification using face recognition algorithms. These loaders provide researchers with access to diverse facial image datasets, enabling them to experiment and analyze the impact of different demographic factors on classification accuracy.

For instance, the “LFW Dataset Loader” provides access to Labeled Faces in the Wild, a dataset of more than 13,000 facial images collected from the web. Researchers can use it to explore factors such as age, gender, and ethnicity and to evaluate how these attributes affect the performance of their algorithms.
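The core of such a loader can be sketched as a directory index. This minimal sketch assumes the common LFW layout of one subfolder per person, with image files named like `Person_Name_0001.jpg`. The function name `load_lfw_index` is hypothetical. The `min_faces_per_person` filter mirrors the parameter of the same name in scikit-learn's `fetch_lfw_people`. LFW's own labels are person names, so age, gender, or ethnicity labels would have to be joined in from a separate annotation source (not shown).

```python
import tempfile
from pathlib import Path

def load_lfw_index(root, min_faces_per_person=1):
    """Index an LFW-style tree (one folder per person) into (image_path, person) pairs.

    People with fewer than `min_faces_per_person` images are skipped.
    """
    samples = []
    for person_dir in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        images = sorted(person_dir.glob("*.jpg"))
        if len(images) >= min_faces_per_person:
            samples.extend((img, person_dir.name) for img in images)
    return samples

# Demo on a throwaway directory with empty placeholder files.
with tempfile.TemporaryDirectory() as tmp:
    for name, n in [("Person_A", 3), ("Person_B", 1)]:
        folder = Path(tmp) / name
        folder.mkdir()
        for i in range(1, n + 1):
            (folder / f"{name}_{i:04d}.jpg").touch()
    index = load_lfw_index(tmp, min_faces_per_person=2)
    print([(p.name, person) for p, person in index])
    # [('Person_A_0001.jpg', 'Person_A'), ('Person_A_0002.jpg', 'Person_A'), ('Person_A_0003.jpg', 'Person_A')]
```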

Another notable dataset loader is the “IMDB-WIKI Dataset Loader,” which contains a large collection of celebrity images along with associated demographic information such as age and gender.
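A common preprocessing step for IMDB-WIKI is to derive an age label from two metadata fields: a date of birth stored as a MATLAB serial date number, and the year the photo was taken. The sketch below assumes those fields are named `dob` and `photo_taken`. It treats the photo as taken mid-year, which is an approximation, because only the capture year is known. Reading the metadata `.mat` file itself (for example, with `scipy.io.loadmat`) is not shown.

```python
from datetime import date

def matlab_datenum_to_date(datenum):
    """Convert a MATLAB serial date number to a Python date.

    MATLAB counts days from year 0, Python ordinals start at 0001-01-01,
    hence the 366-day offset. Raises ValueError for out-of-range values.
    """
    return date.fromordinal(int(datenum) - 366)

def estimate_age(dob_datenum, photo_taken_year):
    """Approximate age at capture, assuming the photo was taken mid-year."""
    birth = matlab_datenum_to_date(dob_datenum)
    age = photo_taken_year - birth.year
    return age if birth.month < 7 else age - 1

# Build MATLAB-style datenums for the demo instead of hard-coding them.
early = date(1980, 3, 15).toordinal() + 366
late = date(1980, 9, 1).toordinal() + 366
print(estimate_age(early, 2010))  # 30
print(estimate_age(late, 2010))   # 29
```

Because the metadata is scraped rather than hand-labelled, ages derived this way are noisy. Filtering out implausible values is usually advisable before training.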

Conclusion: Synthesizing Insights on Demographics Classification

So, there you have it! We’ve journeyed through the fascinating world of demographics classification using face recognition. From understanding the methodology and results of studies to evaluating algorithm performance and exploring the ethical landscape, we’ve gained valuable insights into this cutting-edge technology. It’s clear that facial recognition algorithms have the potential to revolutionize various industries, from marketing to law enforcement. However, it’s crucial to address the ethical concerns surrounding privacy and bias in order to ensure fair and responsible implementation.

Now that you’re armed with this knowledge, it’s time for you to take action. Stay informed about developments in facial recognition technology and engage in discussions about its impact on society. Advocate for transparency and accountability in algorithm design and deployment. And most importantly, question the status quo and challenge any potential biases or injustices that may arise from demographics classification using face recognition. Together, we can shape a future where technology works for everyone.

Frequently Asked Questions


Can face recognition technology accurately classify demographics?

Face recognition technology has advanced significantly and can classify demographic attributes such as age, gender, and ethnicity from facial features, often with high accuracy. Sophisticated algorithms and machine learning techniques analyze facial patterns and characteristics to infer this information. However, accuracy varies across datasets and demographic groups, so a high overall score does not guarantee reliable results for every group.

How does the methodology of the study impact the results of demographics classification using face recognition?

The methodology of a study plays a crucial role in determining the accuracy and reliability of the results. By carefully designing experiments, selecting appropriate datasets, and implementing rigorous validation processes, researchers can ensure that their findings regarding demographics classification using face recognition are robust and trustworthy.
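One concrete part of a careful validation process is making sure every demographic group appears in both the training and test sets. The sketch below performs a stratified split by group using only the standard library. The function name and the `group_of` accessor are hypothetical. Groups with a single sample are kept entirely in training.

```python
import random
from collections import defaultdict

def stratified_split(samples, group_of, test_fraction=0.2, seed=0):
    """Split samples so each group contributes ~test_fraction of its items to the test set."""
    by_group = defaultdict(list)
    for s in samples:
        by_group[group_of(s)].append(s)
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for group in sorted(by_group):
        items = by_group[group][:]
        rng.shuffle(items)
        n_test = max(1, round(len(items) * test_fraction)) if len(items) > 1 else 0
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test

# Hypothetical samples tagged (group, sample_id).
data = [("A", i) for i in range(10)] + [("B", i) for i in range(5)]
train, test = stratified_split(data, group_of=lambda s: s[0])
print(len(train), len(test))  # 12 3
```

For face data, a study would typically also keep all images of the same person on one side of the split, so the test set measures generalization to new people rather than memorized identities.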

What ethical considerations are associated with demographics classification using face recognition?

Demographics classification using face recognition raises important ethical concerns. It is essential to consider issues related to privacy, consent, bias, discrimination, and potential misuse of personal data. Striking a balance between technological advancements and protecting individual rights is crucial when deploying this technology in real-world applications.

Are there specific standards for facial recognition algorithms used in demographics classification?

There is an ongoing effort to establish standards for facial recognition algorithms used in demographics classification. These standards aim to ensure fairness, transparency, accuracy, and accountability in the development and deployment of such technologies. By adhering to these standards, developers can build more reliable systems that mitigate biases and promote ethical practices.

What insights can be gained from dissecting the NIST report on demographics classification?

Dissecting the NIST report on demographics classification provides valuable insights into how various face recognition algorithms perform across different demographic groups. It helps identify strengths and weaknesses in current approaches. It also supports improvements in algorithmic fairness, narrower error-rate gaps between demographic groups, and better overall system performance.
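NIST's demographic evaluations of face recognition report error rates, such as the false match rate (FMR) and false non-match rate (FNMR), separately for each demographic group. The sketch below computes both rates per group from verification trials, using a single threshold shared by all groups. The trial format and the scores are hypothetical toy values, not figures from the NIST report.

```python
from collections import defaultdict

def error_rates_by_group(trials, threshold):
    """Return {group: (FMR, FNMR)} from (group, score, is_mated) trials.

    is_mated is True when both images show the same person; a score at or
    above the threshold counts as a declared match. Rates with no trials are NaN.
    """
    counts = defaultdict(lambda: {"fm": 0, "nonmated": 0, "fnm": 0, "mated": 0})
    for group, score, is_mated in trials:
        c = counts[group]
        if is_mated:
            c["mated"] += 1
            if score < threshold:
                c["fnm"] += 1  # genuine pair wrongly rejected
        else:
            c["nonmated"] += 1
            if score >= threshold:
                c["fm"] += 1   # impostor pair wrongly accepted
    rates = {}
    for group, c in counts.items():
        fmr = c["fm"] / c["nonmated"] if c["nonmated"] else float("nan")
        fnmr = c["fnm"] / c["mated"] if c["mated"] else float("nan")
        rates[group] = (fmr, fnmr)
    return rates

trials = [
    ("A", 0.9, True), ("A", 0.8, True), ("A", 0.2, False), ("A", 0.3, False),
    ("B", 0.9, True), ("B", 0.4, True), ("B", 0.7, False), ("B", 0.1, False),
]
print(error_rates_by_group(trials, threshold=0.5))  # {'A': (0.0, 0.0), 'B': (0.5, 0.5)}
```

Using one shared threshold makes differences between groups visible. If each group got its own threshold, the rates could be equalized on paper while the deployed system still treated groups differently.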