Real-time face tracking uses computer vision to detect faces in video streams or images and follow them from frame to frame. Libraries such as OpenCV supply the detection and recognition building blocks, while commercial products such as Faceware Realtime target facial motion capture. The technology matters to industries that rely on facial analysis, including security, marketing, and entertainment. It enables personalized interactions and immersive experiences in augmented reality (AR) and virtual reality (VR) applications, and it underpins security systems, emotion recognition software, animation pipelines, and games. Detection and tracking locate a face and follow its movement; a separate face recognizer identifies or classifies the individual.

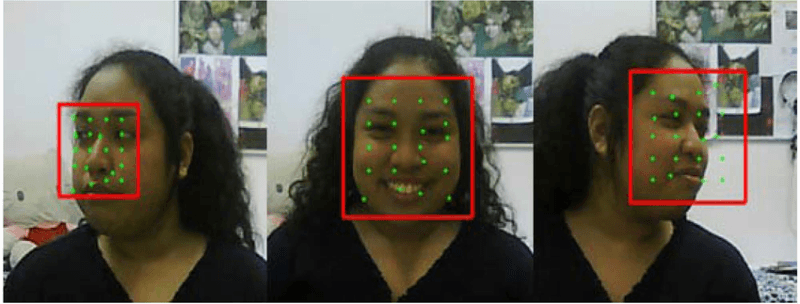

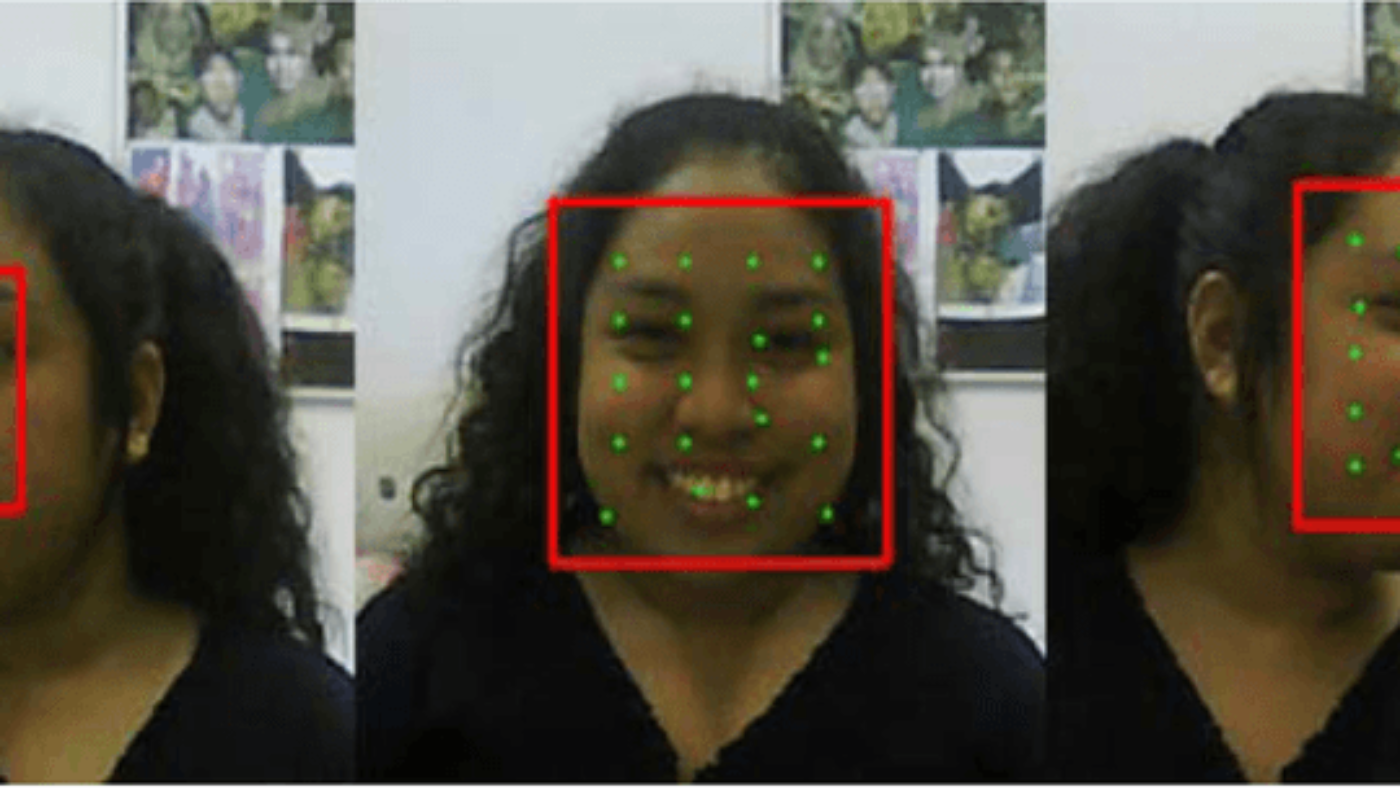

OpenCV has become a common component of real-time face tracking solutions. It supports classical feature-based detectors, such as the Haar cascade classifiers introduced by the Viola-Jones algorithm, as well as deep learning models. Toolkits such as Intel’s OpenVINO can further improve the efficiency of these projects by accelerating model inference.

Real-Time Face Detection Methods

Implementing Projects

Developers implementing real-time face tracking projects must consider a combination of hardware and software. The hardware consists of the cameras that capture the video; the software includes computer vision libraries such as OpenCV, which can be used from Python to detect, track, and recognize faces. Choosing suitable algorithms is crucial for accurate results, and tracking accuracy also depends heavily on careful calibration and thorough testing.

For instance, when creating a security system that tracks individuals’ faces in real time, developers need to select the camera setup and the computer vision algorithms carefully. This ensures that the detection and recognition system can accurately identify and track individuals as they move within the monitored area.

When developing augmented reality filters or effects of the kind used on social media platforms, developers must ensure that their chosen algorithms can swiftly detect faces and facial features even under varying lighting conditions or facial orientations.
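The detection loop described in this section can be sketched as follows. This is a minimal illustration rather than a production tracker: it assumes the `opencv-python` package, its bundled `haarcascade_frontalface_default.xml` cascade, and a camera at index 0. The helper names `largest_face` and `track_from_camera` are hypothetical and chosen only for this example.

```python
def largest_face(boxes):
    """Return the (x, y, w, h) box with the largest area, or None if empty."""
    boxes = list(boxes)
    if not boxes:
        return None
    return max(boxes, key=lambda b: b[2] * b[3])


def track_from_camera(camera_index=0):
    """Draw a box around the largest detected face until 'q' is pressed."""
    import cv2  # imported lazily so largest_face works without OpenCV

    # Assumption: the Haar cascade file shipped with opencv-python.
    cascade = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
    )
    cap = cv2.VideoCapture(camera_index)
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            boxes = cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)
            face = largest_face(boxes)
            if face is not None:
                x, y, w, h = (int(v) for v in face)
                cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)
            cv2.imshow("faces", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

Detecting in every frame is the simplest form of tracking; the `scaleFactor` and `minNeighbors` values are common starting points and would need tuning for a given camera and lighting setup.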

OpenCV 3 Installation

Installing OpenCV 3 is a fundamental step in developing real-time face tracking applications in Python. This typically involves downloading the OpenCV library, which includes face detection, face recognition, and tracking algorithms, from its official website. Detailed installation instructions are available for various operating systems, making the library accessible to a wide range of developers.

For example, by following the installation instructions for their preferred operating system (such as Windows or Linux), developers can integrate OpenCV into their development environment and start working on real-time face detection and recognition projects.
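After installation, a quick check from Python can confirm that the library is visible to the interpreter. The sketch below assumes the Python bindings are installed as the `cv2` module; the `pip install opencv-python` hint is one common route that this article does not itself prescribe, and it typically installs a current OpenCV release that may be newer than version 3. The function names are hypothetical.

```python
import importlib.util


def opencv_available():
    """Return True if the cv2 module can be found in this environment."""
    return importlib.util.find_spec("cv2") is not None


def describe_opencv():
    """Return a one-line status message about the OpenCV installation."""
    if not opencv_available():
        return "OpenCV not found; try: pip install opencv-python"
    import cv2
    return f"OpenCV version: {cv2.__version__}"
```

Calling `print(describe_opencv())` reports the installed version, which is worth checking because some APIs differ between major OpenCV releases.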

Testing Camera Setup

Before starting real-time face tracking, it’s critical to verify that the camera setup is capturing usable video. This verification includes checking camera connectivity, choosing a resolution high enough for faces to be detected, and confirming an adequate frame rate. Maintaining proper lighting conditions is also vital for optimal tracking performance.

When setting up a surveillance system with real-time facial recognition, it is crucial to verify correct camera functionality so that individuals passing through entry points of buildings or public areas such as airports or train stations can be identified accurately.
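One way to confirm the frame rate mentioned above is to time a fixed number of reads. The sketch below is written against any callable that returns `(ok, frame)`, which is the shape of `cv2.VideoCapture.read()`; the function name `measure_fps` and the default of 60 frames are assumptions made for illustration.

```python
import time


def measure_fps(read_frame, n_frames=60, clock=time.perf_counter):
    """Read n_frames via read_frame() and return the achieved frames per second.

    read_frame must return (ok, frame), like cv2.VideoCapture.read().
    Raises RuntimeError if a frame cannot be read.
    """
    start = clock()
    for _ in range(n_frames):
        ok, _frame = read_frame()
        if not ok:
            raise RuntimeError("camera returned no frame")
    elapsed = clock() - start
    return n_frames / elapsed if elapsed > 0 else float("inf")
```

With OpenCV installed, this could be used as `cap = cv2.VideoCapture(0)` followed by `measure_fps(cap.read)`. A `RuntimeError` points to a connectivity problem, while a low result suggests lowering the resolution or improving the lighting so the camera can shorten its exposure.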

Data Gathering Techniques

Capture Profiles

Capture profiles are a vital component in real-time face tracking because they define the characteristics of the faces to be tracked. A profile encompasses parameters such as face size, orientation, and color. For instance, if an application requires tracking individuals with specific facial features or attributes, different capture profiles can be created, for example with a face AR SDK. By tailoring capture profiles, developers can ensure that the tracking system focuses on the desired subjects while ignoring irrelevant details, which improves performance.

Moreover, imagine a security system that uses face recognition to track only authorized personnel within a facility. In this scenario, creating distinct capture profiles for each individual or group allows for precise and efficient data gathering without distraction from other faces in the vicinity.

Differentiating between various groups of people based on their facial characteristics

Establishing specific parameters such as color and orientation for accurate real-time face tracking
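To make the idea concrete, a capture profile can be modeled as a small set of limits that each detected face is checked against. The sketch below is purely illustrative: `CaptureProfile`, its fields, and `select_faces` are hypothetical names, not the API of any particular SDK, and it covers only size and in-plane rotation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaptureProfile:
    """Hypothetical profile describing which detected faces to track."""
    min_size: int        # smallest accepted face width/height in pixels
    max_size: int        # largest accepted face width/height in pixels
    max_roll_deg: float  # largest accepted in-plane head rotation

    def accepts(self, width, height, roll_deg):
        """Return True if a face with these measurements fits the profile."""
        size_ok = (
            self.min_size <= min(width, height)
            and max(width, height) <= self.max_size
        )
        return size_ok and abs(roll_deg) <= self.max_roll_deg


def select_faces(profile, faces):
    """Keep only faces, given as (width, height, roll_deg), that fit the profile."""
    return [f for f in faces if profile.accepts(*f)]
```

For example, `CaptureProfile(min_size=40, max_size=300, max_roll_deg=20.0)` would discard faces that are too distant (small) or too strongly tilted to track reliably.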

Feature Filters

Feature filters are crucial tools for improving the accuracy of real-time face tracking. They help eliminate noise and unwanted features from captured images or video frames. Common filters include Gaussian blur, median blur, and adaptive thresholding. Gaussian blur smooths an image by replacing each pixel with a Gaussian-weighted average of its neighborhood. Median blur replaces each pixel’s value with the median of its neighborhood, which effectively reduces salt-and-pepper noise. Adaptive thresholding determines the threshold for each pixel from its local neighborhood, which preserves contrast under uneven lighting. Together, these filters refine the visual data obtained during real-time face detection.

Consider a camera that captures footage in varying lighting conditions, leading to inconsistent facial recognition accuracy. Applying feature filters such as Gaussian blur or adaptive thresholding can reduce these discrepancies significantly and yield more reliable data for subsequent analysis.

Eliminating unwanted noise and enhancing image quality through feature filtering techniques.

Improving accuracy by refining captured images using Gaussian blur and median blur.
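The median blur described above can be illustrated in plain Python on a tiny grayscale image stored as a list of lists. This is a teaching sketch with a hypothetical function name; in practice OpenCV’s `cv2.medianBlur`, `cv2.GaussianBlur`, and `cv2.adaptiveThreshold` do the same jobs far faster on real frames.

```python
from statistics import median


def median_filter_3x3(image):
    """Return a copy of a 2D list image with each interior pixel replaced by
    the median of its 3x3 neighbourhood. Border pixels are left unchanged."""
    height, width = len(image), len(image[0])
    result = [row[:] for row in image]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            window = [
                image[y + dy][x + dx]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            ]
            result[y][x] = median(window)
    return result
```

A single bright outlier surrounded by darker pixels is replaced by the value of its neighbours, which is why the median filter suits salt-and-pepper noise better than an averaging blur, where the outlier would leak into the surrounding pixels.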

Audio Synchronization

Incorporating audio synchronization into real-time face tracking is pivotal for aligning audio data accurately with facial movements. This technique ensures that expressions and lip movements correspond to audio cues, which improves the user experience in applications such as virtual avatars or lip-syncing.

For instance, in gaming environments or video conferencing platforms where users interact through personalized avatars that mirror their facial expressions in real time, audio synchronization is indispensable for keeping the avatar’s movements aligned with the spoken words.
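At its simplest, synchronization means mapping each audio timestamp to the video frame captured closest to it. The sketch below assumes a constant frame rate and a known, fixed offset between the audio and video clocks; both the function names and the offset parameter are hypothetical.

```python
def frame_index_for_time(t_seconds, fps, offset_seconds=0.0):
    """Return the index of the video frame nearest to audio time t_seconds.

    offset_seconds is how far the audio clock runs ahead of the video clock.
    Times that fall before the first frame map to frame 0.
    """
    video_time = t_seconds - offset_seconds
    return max(0, round(video_time * fps))


def align(audio_times, fps, offset_seconds=0.0):
    """Map each audio event time to its nearest video frame index."""
    return [frame_index_for_time(t, fps, offset_seconds) for t in audio_times]
```

Real systems usually have to estimate the offset and cope with dropped frames, so this fixed mapping should be read as a starting point only.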

Software for Face Tracking

Best Software Selection

Choosing the right facial tracking software is crucial for successful implementation of real-time face tracking projects. Factors like compatibility, performance, and available features should be considered when making a selection. For instance, OpenCV is an open-source solution that offers flexibility and a wide range of functionalities. On the other hand, proprietary solutions provide ready-to-use options with dedicated support but may come at a cost.

When selecting facial tracking software, it’s important to consider the specific requirements of the project. For instance, if customization and community support are essential elements, open-source software like OpenCV might be more suitable. However, if immediate technical assistance and comprehensive features are needed without significant budget constraints, proprietary solutions could be the better choice.

Proprietary vs Open Source

The decision between proprietary and open-source face tracking software depends on project requirements and budget constraints. Proprietary solutions offer dedicated support services along with advanced features but may involve higher costs compared to open-source alternatives such as OpenCV or Dlib.

Open-source facial tracking software provides developers with flexibility in modifying code according to their needs while benefiting from community-driven updates and improvements. Conversely, proprietary options limit access to source code but often deliver comprehensive functionality out-of-the-box along with professional technical assistance.

Customizable Features

Real-time face tracking systems often provide customizable features to meet specific application needs such as gesture recognition or emotion detection capabilities. These customizations allow developers to tailor the system according to their unique requirements by integrating it seamlessly into their existing technologies or applications.

For example:

Developers working on augmented reality (AR) applications can leverage customizable face-tracking tools like Face AR SDKs.

Facial tracking systems can integrate faceware technology for advanced motion capture in gaming or film production environments.

Customization enables users not only to personalize their experience but also adapt the system based on varying environmental conditions or user preferences.

Facial Mocap Technology Explained

Markerless Tracking

Markerless tracking is a cutting-edge technology that doesn’t require physical markers or tags on the face for tracking. Instead, it uses computer vision algorithms to detect and track facial features directly from video streams or images. This method offers more natural interactions and greater freedom of movement, making it ideal for applications like augmented reality filters in social media apps. For example, Snapchat uses markerless tracking to overlay animated effects onto users’ faces in real time without the need for any special markers or equipment.

Another benefit of markerless tracking is its ability to capture subtle facial expressions accurately, which is crucial in fields such as film production and character animation. By capturing minute movements of the face without any intrusive markers, this technology ensures a more authentic portrayal of emotions and expressions.

Facial and Body Integration

Real-time face tracking can be seamlessly integrated with body tracking technologies, allowing for comprehensive motion capture solutions. This integration enables full-body motion capture, enhancing immersive experiences across various industries such as virtual reality gaming and animation.

For instance, in virtual reality (VR) gaming applications, combining facial mocap with body tracking creates a more immersive experience by accurately replicating players’ movements and expressions within the game environment. Moreover, this integration is vital in creating lifelike avatars that mirror users’ gestures realistically during VR interactions.

Tailored Solutions

Real-time face tracking isn’t limited to standard applications; it can be tailored to meet specific industry requirements or application needs. Customized solutions cater to diverse sectors such as healthcare, entertainment, marketing, and education.

In healthcare settings like telemedicine or physiotherapy clinics where remote patient monitoring occurs via video calls, customized real-time face-tracking solutions enable accurate assessment of patients’ conditions through visual cues like facial muscle movements or changes in expression.

Moreover, tailored solutions play an essential role in educational settings where interactive learning experiences are facilitated through personalized avatars driven by real-time face-tracking technology. These avatars enhance engagement by mimicking students’ expressions during virtual classroom sessions.

Enhancing Face Tracking Performance

OpenVINO Toolkit Usage

The OpenVINO toolkit is a powerful tool for optimizing real-time face tracking applications on Intel hardware. It utilizes deep learning models and provides hardware acceleration for improved performance. By leveraging the capabilities of the OpenVINO toolkit, developers can significantly enhance the efficiency and speed of real-time face tracking systems. Moreover, it enables the deployment of these systems on edge devices, making them more accessible and versatile.

For instance:

The OpenVINO toolkit allows developers to harness the power of Intel’s hardware to achieve faster and more accurate real-time face tracking.

With its deep learning model optimization, it ensures that facial feature detection and tracking are executed with high precision.

Utilizing this toolkit not only boosts performance but also streamlines the process of implementing real-time face tracking in various applications such as augmented reality (AR), virtual reality (VR), or interactive digital experiences.

Smooth Head Movement

Achieving smooth head movement detection and tracking is a crucial objective in real-time face tracking. This aspect directly impacts the realism of virtual avatars or characters in AR/VR environments. Algorithms like Kalman filters or optical flow techniques are employed to ensure that head movement is tracked seamlessly, enhancing user experience by providing natural-looking interactions with virtual elements.

Consider this:

In AR/VR applications, seamless head movement detection creates an immersive experience for users interacting with virtual environments.

Techniques like optical flow play a vital role in capturing subtle movements accurately without abrupt jumps or disruptions.

By incorporating these algorithms into real-time face tracking systems, developers can create lifelike interactions between users and digital content, elevating the overall quality of AR/VR experiences.
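The Kalman filtering mentioned above can be illustrated with a scalar filter applied to one coordinate of the head position. This is a minimal sketch under a constant-position model; the function name and the default variances are assumptions for the example, and a real tracker would normally filter position and velocity together.

```python
def kalman_smooth(measurements, process_var=1e-3, measurement_var=1.0):
    """Smooth a non-empty 1D signal with a scalar Kalman filter.

    process_var: how much the true position is expected to drift per step.
    measurement_var: how noisy each measurement is assumed to be.
    """
    estimate = measurements[0]
    error_var = 1.0
    smoothed = [estimate]
    for z in measurements[1:]:
        # Predict: the state is assumed unchanged, so only uncertainty grows.
        error_var += process_var
        # Update: blend the prediction and the measurement by the Kalman gain.
        gain = error_var / (error_var + measurement_var)
        estimate += gain * (z - estimate)
        error_var *= 1.0 - gain
        smoothed.append(estimate)
    return smoothed
```

Raising `process_var` makes the filter follow fast head movements more closely at the cost of more jitter; lowering it gives a steadier but laggier avatar.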

Refinement and Editing

Refinement and editing techniques play a pivotal role in improving the accuracy of real-time face tracking results. These post-processing steps involve noise reduction, feature enhancement, data fusion, among others. By applying these techniques to tracked facial features’ data output from real-time face trackers, developers can refine details while ensuring minimal errors or inconsistencies.

Here’s why it matters:

Noise reduction helps eliminate unwanted artifacts from tracked facial features’ data output.

Feature enhancement techniques improve visual fidelity by refining key facial attributes captured during real-time face tracking processes.

Ultimately, refinement and editing contribute to enhancing both accuracy and visual appeal within real-time face-tracking applications across various domains such as entertainment industry productions or interactive installations.

Real-Time Face Tracking in Different Sectors

Automotive AI Applications

Real-time face tracking is integral to various automotive AI systems, such as driver monitoring and personalized in-car experiences. This technology enables driver identification, drowsiness detection, emotion recognition, and gesture-based controls. For instance, a vehicle equipped with real-time face tracking can detect when the driver is feeling drowsy or distracted, prompting alerts to ensure enhanced safety on the road.

Automotive AI applications also benefit from real-time face tracking for improved user comfort. By recognizing individual drivers and adjusting settings like seat position, climate control preferences, and entertainment options accordingly, the driving experience becomes more personalized and enjoyable.

Driver identification

Drowsiness detection

Emotion recognition

Gesture-based controls
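Drowsiness detection is often built on the eye aspect ratio (EAR), which compares the vertical opening of the eye with its width using six eye landmarks. The sketch below assumes those landmarks are already provided by a facial landmark detector; the threshold of 0.2 and the 15-frame window are hypothetical values that would need tuning per camera and driver.

```python
import math


def eye_aspect_ratio(eye):
    """Compute the eye aspect ratio from six (x, y) landmarks p1..p6.

    p1 and p4 are the horizontal eye corners; p2 and p3 lie on the upper lid,
    and p6 and p5 are the matching points on the lower lid.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def is_drowsy(ear_history, threshold=0.2, min_frames=15):
    """Return True if the last min_frames EAR values are all below threshold."""
    recent = ear_history[-min_frames:]
    return len(recent) >= min_frames and all(e < threshold for e in recent)
```

Requiring several consecutive low values keeps ordinary blinks from triggering an alert.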

VR and AR Trends

In the rapidly evolving landscape of virtual reality (VR) and augmented reality (AR) technologies, real-time face tracking plays a pivotal role in delivering immersive experiences to users. As these technologies advance, they continually seek to enhance accuracy while integrating seamlessly with other sensors for comprehensive user interaction.

For example, real-time face tracking contributes to creating lifelike avatars that mimic users’ facial expressions within virtual environments. It facilitates natural interaction with virtual objects by accurately capturing facial movements in real time.

Trends in VR/AR encompass not only improved accuracy but also the fusion of multiple sensory inputs for a more holistic user experience. These advancements are propelling the capabilities of VR/AR applications beyond mere visual immersion into deeper levels of engagement through realistic interactions.

Immersive experiences

Improved accuracy

Integration with other sensors

Seamless interaction with virtual objects

Mask Detection Methods

Real-time face tracking serves as an effective tool for mask detection across various scenarios related to public health or security concerns. Employing different methods such as deep learning models or color-based segmentation allows for accurate and rapid mask detection processes.

When this technology is integrated into surveillance systems at public venues, for example, authorities can monitor compliance with mask-wearing rules during pandemics or support heightened security measures during critical events.

Accurate and real-time mask detection significantly contributes to public safety by promptly identifying individuals who may be non-compliant without causing disruptions at entry points or checkpoints where large volumes of people pass through regularly.

Privacy and User Experience in Face Tracking

Privacy-First Features

Real-time face tracking systems prioritize privacy by incorporating features like anonymization or data encryption. These measures ensure that personal information is protected during the tracking process, addressing concerns about unauthorized access to sensitive data. Compliance with privacy regulations is essential for the acceptance and adoption of real-time face tracking technologies, fostering trust among users and stakeholders.

For example, a retail company implementing real-time face tracking in its stores can use anonymization techniques to analyze customer behavior without compromising individuals’ identities. This approach respects privacy while still providing valuable insights for improving store layout or product placement.

Furthermore, integrating data encryption into real-time face tracking applications enhances security by safeguarding the captured facial data from potential breaches or misuse. By prioritizing these privacy-first features, organizations demonstrate their commitment to protecting user privacy while leveraging the benefits of real-time face tracking technology.
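One simple anonymization measure is to replace internal face or track identifiers with keyed hashes before analytics data is stored. The sketch below uses only Python’s standard `hmac` and `hashlib` modules; the function name, the 16-character truncation, and the way the key is supplied are assumptions for illustration, and this measure alone does not amount to regulatory compliance.

```python
import hashlib
import hmac


def pseudonymize(track_id, secret_key):
    """Return a stable token for a tracked face ID using a keyed hash.

    track_id: internal identifier as a string.
    secret_key: bytes kept separate from the stored analytics data.
    """
    digest = hmac.new(secret_key, track_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]
```

The same ID always maps to the same token, so visit counts and dwell times can still be computed, while rotating the key breaks the link between old and new records.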

Eye Gaze Tracking

One valuable feature enabled by real-time face tracking is eye gaze monitoring. This functionality allows for eye movement analysis, attention detection, or gaze-based interaction within various contexts such as human-computer interaction, market research, or assistive technologies. For instance, in gaming applications, developers can utilize eye gaze tracking to enhance user experiences by enabling more immersive gameplay interactions based on players’ visual focus.

Eye gaze tracking also finds practical application in assistive technologies where it enables individuals with mobility impairments to control devices using their eye movements. In market research settings, companies can employ this feature to gain insights into consumer behavior and preferences through detailed analysis of participants’ visual attention patterns.

By understanding how users interact visually with digital content or physical environments through eye gaze analysis facilitated by real-time face tracking technology, businesses and researchers can enhance products and services tailored to specific user needs.
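A rough horizontal gaze estimate can be derived from where the pupil sits between the two eye corners. The sketch below assumes the corner and pupil x-coordinates (in image pixels) come from a landmark detector; the function names and the 0.15 margin are hypothetical, and the result describes direction in image coordinates rather than a calibrated gaze point.

```python
def horizontal_gaze_ratio(left_corner_x, right_corner_x, pupil_x):
    """Return the pupil position between the eye corners, clamped to [0, 1]."""
    width = right_corner_x - left_corner_x
    if width == 0:
        raise ValueError("eye corners coincide")
    ratio = (pupil_x - left_corner_x) / width
    return min(1.0, max(0.0, ratio))


def gaze_direction(ratio, margin=0.15):
    """Classify a gaze ratio as 'left', 'center', or 'right' in image terms."""
    if ratio < 0.5 - margin:
        return "left"
    if ratio > 0.5 + margin:
        return "right"
    return "center"
```

Accurate gaze tracking also needs head pose and per-user calibration; this ratio is only enough for coarse interactions such as detecting whether a user looks toward one side of the screen.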

Lightweight Technology Integration

Real-time face tracking’s capability for integration into lightweight devices like smartphones or wearable gadgets opens up opportunities for on-the-go applications across diverse sectors including remote assistance services and mobile gaming experiences. The optimized algorithms and efficient hardware utilization associated with lightweight integration contribute significantly to minimizing resource consumption while maintaining high performance levels.

For instance, when integrated into smartphones, lightweight real-time face-tracking technology can enable augmented reality (AR) filters that respond to users’ facial expressions during video calls or social media interactions. Wearable gadgets equipped with this technology can also offer functions such as hands-free navigation through head movements, which enriches the overall user experience.

Getting Started with Face Tracking Software

Real-time face tracking software requires a comprehensive installation guide to help users set up the necessary software and dependencies. This guide provides step-by-step instructions for different platforms, ensuring a smooth development process and accurate results. Proper installation is crucial for seamless functionality.

For instance, if you’re using OpenCV for real-time face tracking, the installation guide will walk you through installing Python and setting up the OpenCV library on your system. Troubleshooting tips are also included to address common issues that may arise during the installation process.

Testing and Calibration

Thorough testing is an essential step in real-time face tracking projects. It involves verifying the accuracy of face detection and tracking under various conditions such as different lighting environments or varying facial expressions. Without proper testing, inaccuracies in tracking can lead to unreliable results.

Calibration is equally important as it ensures optimal performance by adjusting parameters like camera position, lighting conditions, or feature filters. For example, calibrating a depth-sensing camera used in real-time face tracking helps improve accuracy by accounting for variations in distance from the camera.

Workflow Tools

Workflow tools play a crucial role in streamlining the development process of real-time face tracking projects. These tools offer functionalities such as data annotation, model training, performance evaluation, and more. Integration of these workflow tools not only enhances productivity but also facilitates collaboration among developers working on the project.

For instance:

Data annotation tools allow developers to label facial features within images or video frames.

Model training tools enable developers to train machine learning models with annotated data for improved accuracy.

Performance evaluation tools help assess the effectiveness of different algorithms used in face detection and tracking.
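A common building block of performance evaluation is intersection over union (IoU), which scores how well a predicted face box overlaps an annotated one. The sketch below assumes boxes in `(x, y, w, h)` form; the `detection_rate` helper and its 0.5 threshold are illustrative choices rather than a fixed standard for every project.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def detection_rate(predicted, ground_truth, threshold=0.5):
    """Fraction of annotated boxes matched by some prediction at IoU >= threshold."""
    if not ground_truth:
        return 0.0
    hits = sum(
        any(iou(p, g) >= threshold for p in predicted) for g in ground_truth
    )
    return hits / len(ground_truth)
```

Running `detection_rate` over the same annotated frames for two detectors gives a simple, comparable number for deciding which algorithm to keep.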

Conclusion

You’ve now uncovered the intricate world of real-time face tracking, from the underlying detection methods to the diverse applications across various sectors. As technology continues to advance, the potential for enhancing face tracking performance and user experience becomes even more promising. The fusion of facial mocap technology and privacy considerations presents both opportunities and challenges that demand careful navigation in this evolving landscape.

Ready to delve into the realm of real-time face tracking? Whether you’re a developer, researcher, or simply curious about this cutting-edge technology, take the next step by exploring the software and techniques discussed. Embrace the possibilities and stay informed about the ethical and practical implications as you venture into this innovative domain.

Frequently Asked Questions

Is real-time face tracking technology only used for security purposes?

Real-time face tracking technology is not limited to security applications. It has diverse uses, including in entertainment, marketing, and healthcare. Its versatility enables it to be applied across various sectors for different purposes.

What are the primary methods for enhancing face tracking performance?

Enhancing face tracking performance involves optimizing algorithms, improving hardware capabilities, and refining data processing techniques. By integrating these elements effectively, developers can achieve more accurate and efficient real-time face tracking systems.

How does facial motion capture (Mocap) technology work?

Facial mocap technology tracks facial movements and expressions in real time, either with physical markers or sensors or with markerless computer vision. This data is then translated into digital form to animate virtual characters or analyze human behavior for applications such as gaming and film production.

Can users control their privacy when interacting with real-time face tracking systems?

Users have the right to control their privacy when engaging with real-time face tracking systems. Developers must prioritize user consent, provide transparent information on data usage, and offer options for individuals to manage their privacy settings effectively.

Are there software programs designed for beginners interested in exploring real-time face tracking?

Yes, there are user-friendly software programs tailored for beginners who want to delve into the world of real-time face tracking. These tools often come with intuitive interfaces and comprehensive tutorials to help new users get started on their journey into this innovative technology.
