Did you know that face recognition is now built into many of today’s smartphones? This technology isn’t just for unlocking your phone; it’s also changing security, retail, and even healthcare. Face recognition promises convenience and stronger security, making it a hot topic today. But how does it work? And what are the benefits and risks?

We’ll explore its applications, advantages, and potential pitfalls. Whether you’re a tech enthusiast or just curious about the latest trends, this guide will give you a clear understanding of face recognition technology. Ready to learn more? Let’s get started!

Key Takeaways

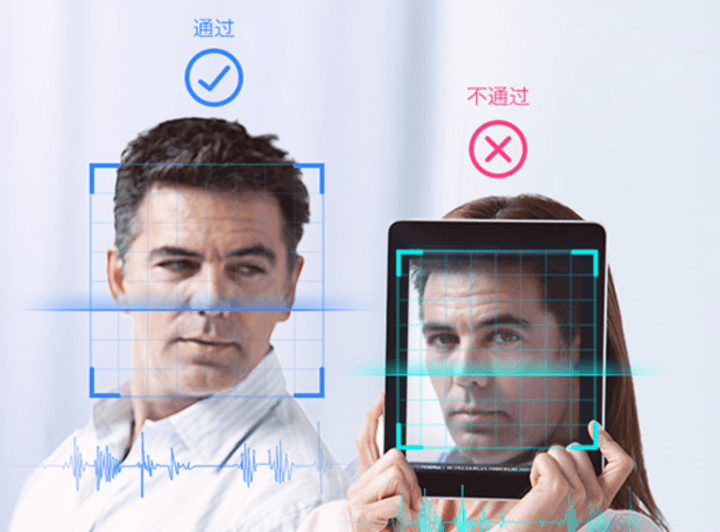

Liveness Detection is Crucial: Implementing liveness detection in face recognition systems helps prevent fraud by ensuring that the subject is a live person, not a photo or video.

Enhanced Security: Combining face recognition with video analysis significantly boosts security measures, making it harder for malicious actors to bypass systems.

AI and Machine Learning Integration: Leveraging AI and machine learning can improve the accuracy and efficiency of face recognition technologies, making them more reliable.

Wide-Ranging Applications: Face recognition technologies are being used across various industries, from banking to healthcare, enhancing both security and user experience.

Addressing Challenges: It’s essential to tackle the ethical and privacy concerns associated with face recognition to ensure responsible use and public trust.

Best Practices: Adopting best practices, such as regular system updates and user consent protocols, can help in the effective and ethical implementation of face recognition systems.

Understanding Liveness Detection

Definition and Role

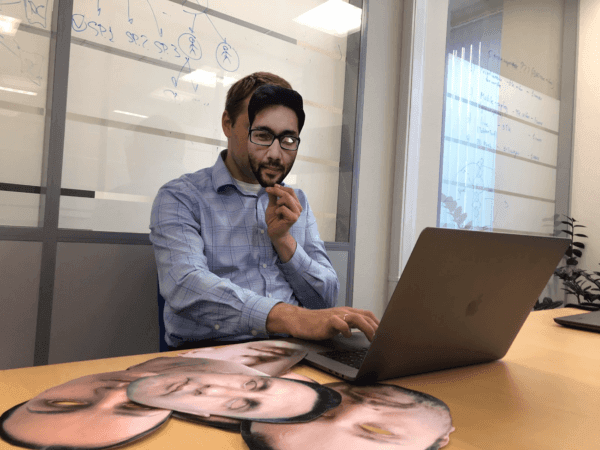

Liveness detection is a security feature in face recognition systems. It ensures the person being scanned is real. This prevents spoofing attacks. Spoofing involves using photos, videos, or masks to trick the system.

Motion Analysis

Motion analysis is one method used in liveness detection. It tracks small facial movements. Real faces have natural movements like blinking and smiling. Fake faces, such as photos or masks, lack these subtle motions.
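As a rough illustration of blink-based motion analysis, the sketch below uses the eye aspect ratio (EAR), one widely used blink measure. It assumes six eye landmarks per frame have already been produced by some landmark detector, which is not shown. The function names and the threshold values are illustrative assumptions, not part of any specific product.

```python
import math
from typing import Iterable, Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six eye landmarks ordered p1..p6.

    p1 and p4 are the eye corners, p2 and p3 lie on the upper lid,
    p6 and p5 lie on the lower lid. The ratio drops toward 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = 2.0 * math.dist(p1, p4)
    return vertical / horizontal


def count_blinks(ear_series: Iterable[float],
                 closed_below: float = 0.2,      # assumed threshold, tune per camera
                 min_closed_frames: int = 2) -> int:
    """Count runs of consecutive 'closed' frames that end with the eye reopening."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < closed_below:
            run += 1
        else:
            if run >= min_closed_frames:
                blinks += 1
            run = 0
    return blinks
```

A printed photo held in front of the camera would typically produce a flat EAR series and therefore zero blinks, while a live face should show occasional dips.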

Texture Analysis

Texture analysis examines the surface details of a face. Real skin has unique textures and patterns. Photos or masks often appear smooth or flat under scrutiny. By analyzing these differences, systems can detect fakes.
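One common way to describe surface texture is a Local Binary Pattern (LBP) histogram. The article does not name a specific method, so treat this as one possible sketch. It assumes a grayscale face crop given as rows of pixel values. A real system would feed the histogram to a trained classifier, which is not shown here.

```python
from typing import List, Sequence

Image = Sequence[Sequence[int]]  # grayscale pixels, rows of 0-255 values

# Offsets of the 8 neighbours, clockwise starting at top-left.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
              (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img: Image, r: int, c: int) -> int:
    """8-bit code: bit i is set when neighbour i is at least as bright as the centre."""
    centre = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(NEIGHBOURS):
        if img[r + dr][c + dc] >= centre:
            code |= 1 << bit
    return code


def lbp_histogram(img: Image) -> List[float]:
    """Normalised 256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    rows, cols = len(img), len(img[0])
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            hist[lbp_code(img, r, c)] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else [0.0] * 256
```

Flat or reprinted surfaces tend to concentrate their codes in a few bins, while real skin usually spreads them more widely. How well that separates real from fake depends heavily on the camera and the training data.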

Importance for Security

Integrating liveness detection enhances security. It makes biometric authentication more reliable. Without it, attackers could easily bypass face recognition systems. Liveness detection protects against unauthorized access.

Advantages of Liveness Detection

Fraud Prevention

Liveness detection greatly reduces fraud. It distinguishes between real users and fake attempts. This prevents unauthorized access. Advanced techniques like 3D sensing and micro-expression analysis identify genuine faces. These methods block photos, videos, and masks used by fraudsters.

Biometric systems become more secure with liveness detection. Banks and financial institutions benefit from this technology. It ensures only legitimate users can access accounts. Fraudulent activities decrease significantly.

User Trust

Liveness detection increases user trust in biometric systems. People feel safer knowing their data is protected. They are more likely to use these systems without fear of impersonation.

Trust is crucial for widespread adoption of face recognition technologies. When users know the system can detect live faces, they gain confidence in its reliability. This leads to a higher acceptance rate among the public.

Compatibility

Liveness detection works well with existing face recognition technologies. It integrates seamlessly without requiring major changes. This compatibility enhances security while maintaining convenience.

Most face recognition systems can incorporate liveness detection features easily. Developers design these features to be adaptable and user-friendly. Security levels improve without sacrificing usability.

Real-life Examples

Several companies have successfully implemented liveness detection in their systems:

Apple uses Face ID with liveness detection in iPhones.

Banking apps utilize this technology for secure transactions.

Airports employ it for faster and safer boarding processes.

These examples show how effective liveness detection is in various sectors.

Technical Details

Liveness detection involves several technical aspects:

3D Sensing: Measures depth and contours of a face.

Micro-Expression Analysis: Detects subtle facial movements.

Infrared Scanning: Detects heat or infrared reflectance patterns characteristic of living skin.

Challenge-Response Tests: Requires users to perform specific actions like blinking or smiling.

These techniques ensure accurate identification of live users.
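The challenge-response idea from the list above can be sketched as follows. The action names, the 10-second time limit, and the function names are assumptions made for illustration. Detecting which action the user actually performed is left to a separate vision component.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Optional

ACTIONS = ("blink", "smile", "turn_left", "turn_right")  # hypothetical action set


@dataclass(frozen=True)
class Challenge:
    action: str
    issued_at: float           # seconds since the epoch
    ttl_seconds: float = 10.0  # assumed time limit for the response


def issue_challenge(now: Optional[float] = None) -> Challenge:
    """Pick an unpredictable action so a pre-recorded video is unlikely to match."""
    issued = time.time() if now is None else now
    return Challenge(action=secrets.choice(ACTIONS), issued_at=issued)


def verify_response(challenge: Challenge, observed_action: str,
                    now: Optional[float] = None) -> bool:
    """Accept only the requested action, performed within the time limit."""
    current = time.time() if now is None else now
    in_time = 0 <= current - challenge.issued_at <= challenge.ttl_seconds
    return in_time and observed_action == challenge.action
```

Choosing the action at random is the key design point here. A fixed prompt could be defeated by replaying a recording of the user performing it.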

Evolution and Techniques in Face Recognition

Early Methods

Early face recognition systems used geometric techniques. In the 1960s, scientists mapped facial features like eyes, nose, and mouth. They measured distances between these points. This method was simple but not very accurate.
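To make the geometric approach concrete, here is a minimal sketch that turns a few landmark points into a vector of pairwise distances. The landmark names and the choice to normalise by the distance between the eyes are assumptions for illustration, not a reconstruction of any historical system.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def geometric_features(landmarks: Dict[str, Point]) -> List[float]:
    """Pairwise landmark distances divided by the inter-eye distance.

    Dividing by the eye distance makes the features independent of image scale.
    Requires 'left_eye' and 'right_eye' keys (hypothetical naming).
    """
    scale = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    if scale == 0:
        raise ValueError("eye landmarks coincide")
    names = sorted(landmarks)  # fixed order so vectors are comparable
    return [math.dist(landmarks[a], landmarks[b]) / scale
            for a, b in combinations(names, 2)]


def feature_distance(f1: Sequence[float], f2: Sequence[float]) -> float:
    """Euclidean distance between two feature vectors; smaller means more similar."""
    return math.dist(f1, f2)
```

Because the features depend only on a handful of points, small changes in pose or expression shift them noticeably, which is one reason this kind of method was not very accurate.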

In the 1970s, researchers improved this approach. They introduced linear algebra to better analyze facial structures. These early methods laid the groundwork for future advancements.

PCA and LDA

Principal Component Analysis (PCA) was first applied to face images in the late 1980s. It reduced data complexity by focusing on key features. PCA transformed high-dimensional data into a lower-dimensional form. This made it easier to process images.

Linear Discriminant Analysis (LDA) followed in the 1990s. LDA aimed to find a linear combination of features that separated different classes of objects. Both techniques improved accuracy but had limitations with lighting and angles.
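The dimensionality reduction that PCA performs can be sketched in plain Python. This toy version finds only the single strongest direction of variation using power iteration. Real systems work on image vectors with thousands of dimensions and use an optimised linear algebra library. All function names here are illustrative.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def mean_vector(rows: Sequence[Vector]) -> List[float]:
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def covariance_matrix(rows: Sequence[Vector]) -> List[List[float]]:
    """Sample covariance of the rows (needs at least two rows)."""
    mean = mean_vector(rows)
    centred = [[x - m for x, m in zip(row, mean)] for row in rows]
    dims, n = len(mean), len(rows)
    return [[sum(r[i] * r[j] for r in centred) / (n - 1) for j in range(dims)]
            for i in range(dims)]


def top_component(cov: Sequence[Vector], iterations: int = 200) -> List[float]:
    """Power iteration: repeatedly multiply by cov and renormalise.

    Caveat: if the start vector happens to be orthogonal to the top
    eigenvector, the loop stops early and the result is not meaningful.
    """
    dims = len(cov)
    v = [float(i + 1) for i in range(dims)]
    norm = math.sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(dims)) for i in range(dims)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    return v


def project(row: Vector, mean: Vector, component: Vector) -> float:
    """Coordinate of one sample along the component, after centring."""
    return sum((x - m) * c for x, m, c in zip(row, mean, component))
```

Each face is then represented by a few projection values instead of every pixel, which is the reduction in complexity described above.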

3D Modeling

3D modeling appeared in the early 2000s. It captured depth information along with facial features. This technique used multiple cameras to create a three-dimensional model of a face.

3D models enhanced accuracy under varying conditions:

Different lighting

Various angles

Diverse expressions

However, creating and processing 3D models required significant computational power.

Neural Networks

Neural networks revolutionized face recognition in the 2010s. Convolutional Neural Networks (CNNs) became popular due to their high accuracy. CNNs learn patterns from vast datasets, improving over time.

Deep learning models can recognize faces even with changes in:

Age

Makeup

Facial hair

These models outperform previous methods but need large amounts of data and powerful hardware.
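Deep learning systems commonly turn each face into a numeric vector, called an embedding, and compare vectors instead of pixels. The sketch below assumes the embeddings already exist. The network that produces them is not shown, and the 0.6 threshold is an arbitrary placeholder that would need tuning on real data.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embeddings (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embedding has zero length")
    return dot / (norm_a * norm_b)


def same_person(a: Sequence[float], b: Sequence[float],
                threshold: float = 0.6) -> bool:  # assumed threshold
    """Declare a match when the embeddings are similar enough."""
    return cosine_similarity(a, b) >= threshold
```

The threshold sets the trade-off between wrongly accepting strangers and wrongly rejecting the right person.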

Computational Power

Advances in computational power have driven face recognition forward. Modern GPUs handle complex calculations quickly. This speed allows real-time face recognition on devices like smartphones and security cameras.

Algorithm efficiency has also improved:

Faster processing times

Reduced error rates

Enhanced scalability

These improvements make face recognition more accessible and reliable for various applications.

Impact on Adoption

Higher accuracy has increased adoption across industries:

Security systems use face recognition for access control.

Smartphones employ it for user authentication.

Retail stores utilize it for customer analytics.

By integrating advanced algorithms with robust hardware, businesses enhance both security and user experience.

Enhancing Security with Video Analysis

Dynamic Authentication

Video analysis enhances face recognition by enabling dynamic authentication. Traditional methods capture a single image. This can be easily spoofed. Video analysis, however, captures continuous frames. It monitors facial movements and expressions over time.

This method ensures the person is physically present. It reduces the risk of static image attacks. Dynamic authentication adds an extra layer of security.
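A simple way to picture dynamic authentication is to require that most frames in a short video window match, not just one. The sketch below assumes each frame has already been given a match score by some face matcher. The parameter names and default values are illustrative assumptions.

```python
from typing import Iterable


def authenticate_stream(frame_scores: Iterable[float],
                        threshold: float = 0.6,     # assumed per-frame match threshold
                        min_fraction: float = 0.8,  # share of frames that must match
                        min_frames: int = 5) -> bool:
    """Accept only if enough frames were seen and most of them match."""
    scores = list(frame_scores)
    if len(scores) < min_frames:
        return False  # a single image is never enough
    passing = sum(1 for s in scores if s >= threshold)
    return passing / len(scores) >= min_fraction
```

Requiring a minimum number of frames means a single still image can never pass. In practice this check would be combined with liveness signals, since a replayed video also produces many frames.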

Continuous Monitoring

Continuous monitoring is another benefit of video analysis. Static images only provide a snapshot in time. Video streams offer ongoing surveillance. They track changes in real-time.

This approach can detect unusual behavior as it happens. If someone tries to bypass security, the system can alert security staff right away.

Behavioral Biometrics Integration

Integrating behavioral biometrics with face recognition strengthens security further. Behavioral biometrics analyze unique patterns like walking style or typing rhythm.

Combining these with face recognition creates a multi-layered defense system:

Face recognition verifies identity.

Behavioral biometrics confirm habitual actions.

Both systems work together to detect anomalies.

For example, if someone looks like an employee but walks differently, the system flags it.
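The layered check described above can be sketched as a small decision function. It assumes two scores between 0 and 1 are already available, one from face matching and one from a behavioral model such as gait analysis. The thresholds and the three outcome labels are illustrative assumptions.

```python
def fuse_decision(face_score: float, behaviour_score: float,
                  face_min: float = 0.6,            # assumed face threshold
                  behaviour_min: float = 0.5) -> str:  # assumed behaviour threshold
    """Combine face and behavioural scores into accept / flag / reject."""
    if face_score < face_min:
        return "reject"   # the face itself does not match
    if behaviour_score < behaviour_min:
        return "flag"     # looks like the person but behaves differently
    return "accept"
```

Returning "flag" instead of a hard rejection leaves room for a human review step, which suits cases like the employee example where the two signals disagree.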

Case Study: Airport Security

Airports are sensitive environments needing high security levels. In 2018, an airport in Atlanta implemented video analysis for face recognition. The system continuously monitored passengers’ faces and behaviors.

It successfully identified a person using a fake passport. Authorities arrested the individual before boarding the plane.

Case Study: Financial Institutions

Banks also use video analysis to enhance security. A major bank in New York integrated behavioral biometrics with face recognition in 2020.

The system detected an unauthorized person trying to access secure areas by mimicking an employee’s appearance but failing behavioral checks. The bank prevented potential fraud and data theft.

AI and Machine Learning in Detection

Role of AI

AI plays a crucial role in face recognition. It helps refine algorithms for better accuracy and speed. These systems analyze facial features like eyes, nose, and mouth. They then compare these features to stored data.

Machine learning enables these systems to improve over time. As the system is trained on more representative data, its accuracy generally improves. This ongoing learning helps reduce errors.

Adaptive Systems

AI develops adaptive face recognition systems. These systems learn from new data inputs. They adjust their algorithms based on this new information.

Such adaptability is essential for real-world applications. For example, lighting conditions can change how a face appears. An adaptive system can recognize faces even in poor lighting.
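One simple form of adaptation is to nudge a user's stored template toward each new, confidently matched sample. The sketch below uses an exponential moving average. The function name, the learning rate `alpha`, and the confidence cutoff are assumptions for illustration.

```python
from typing import List, Sequence


def update_template(template: Sequence[float], new_embedding: Sequence[float],
                    match_score: float,
                    alpha: float = 0.1,           # assumed learning rate
                    update_above: float = 0.8) -> List[float]:  # assumed cutoff
    """Blend the new sample into the template only after a confident match.

    Skipping low-confidence samples reduces the risk that an impostor
    gradually drags the template away from the real user.
    """
    if match_score < update_above:
        return list(template)
    return [(1 - alpha) * t + alpha * n for t, n in zip(template, new_embedding)]
```

Over time the template follows gradual changes such as different lighting, ageing, or new glasses, without being retrained from scratch.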

Ethical Considerations

Using AI in face recognition raises ethical concerns. Privacy is a significant issue. People worry about being monitored without consent.

There are also concerns about bias in AI algorithms. These biases can lead to unfair treatment of certain groups. For instance, some studies show that face recognition systems are less accurate for people with darker skin tones.

Privacy Concerns

Privacy concerns are widespread with AI-based face recognition. Many fear misuse by governments or corporations. Unauthorized surveillance is a major worry.

To address these issues, some suggest strict regulations. Laws could limit how and where face recognition technology can be used.

Real-Life Examples

In 2019, San Francisco banned the use of facial recognition by city agencies due to privacy concerns. This move highlighted the need for ethical guidelines.

Another example is London’s Metropolitan Police using facial recognition during public events. This raised questions about civil liberties and surveillance.

Applications Across Industries

Security and Law Enforcement

Face recognition is widely used in security. Airports use it to verify passengers’ identities. This helps prevent fraud and enhances safety. Police departments use face recognition to find suspects. It matches faces from crime scenes with databases.

Governments also use it for border control. It speeds up the process and reduces human error. Surveillance cameras equipped with face recognition can track and identify individuals in real time.

Marketing and Retail

Retailers use face recognition to improve customer experience. Cameras installed in stores recognize returning customers. This allows for personalized service, such as tailored recommendations.

Marketing teams benefit from data collected through face recognition. They analyze customer behavior patterns to create targeted advertisements. Personalized marketing increases sales by catering directly to individual preferences.

Healthcare

Hospitals use face recognition for patient identification. It ensures that patients receive the correct treatment by matching their faces with medical records. This reduces errors caused by mistaken identity.

Face recognition also helps monitor patients. Cameras track patients’ movements, which is useful for those with dementia or other conditions requiring constant supervision. Real-time monitoring improves patient safety and care quality.

Financial Services

Banks employ face recognition for secure transactions. Customers can access accounts using their faces instead of passwords or PINs. This adds an extra layer of security against fraud.

ATMs equipped with face recognition allow withdrawals without a card. Users simply look at the camera, making banking more convenient.

Education

Schools use face recognition for attendance tracking. Students’ faces are scanned as they enter classrooms, automatically recording their presence.

This technology also enhances campus security by identifying unauthorized visitors quickly.

Addressing Challenges and Controversies

Accuracy Issues

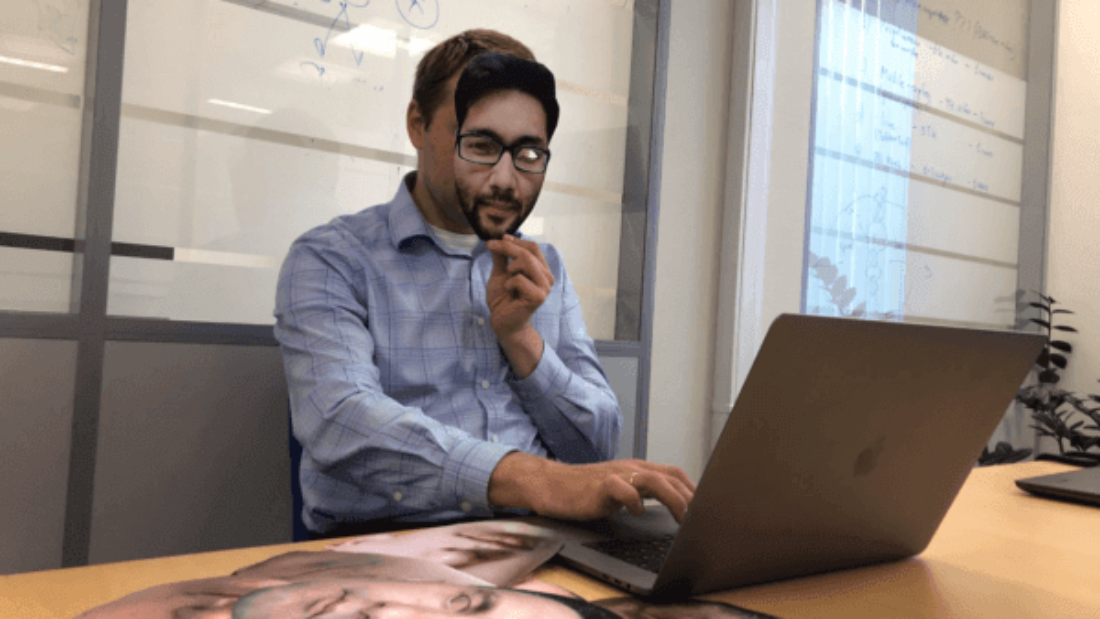

Face recognition technology struggles with accuracy. Different populations show varied results. For example, darker skin tones often lead to higher error rates. This was highlighted in a 2019 study by the National Institute of Standards and Technology (NIST). It found that many algorithms produced more false matches for African American and Asian faces than for Caucasian faces.

Lighting conditions also impact accuracy. Poor lighting or shadows can confuse the system. Even slight changes in light can affect results. This makes face recognition less reliable in real-world settings.

Privacy Concerns

Collecting biometric data raises privacy issues. Face recognition systems need images of people’s faces. These images are stored in databases, sometimes without consent. Unauthorized access to these databases can lead to misuse of personal information.

People worry about constant surveillance. Cameras equipped with face recognition can track movements. This reduces anonymity in public spaces. In 2019, San Francisco became the first U.S. city to ban police use of face recognition technology due to these concerns.

Ethical Dilemmas

There are ethical questions around the use of face recognition. One issue is bias in the technology itself. If an algorithm is biased, it can lead to unfair treatment of certain groups.

Another concern is consent. Often, people are unaware their data is being collected. This lack of transparency leads to mistrust.

Regulatory Landscape

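Regulations vary by region and industry. The European Union has strict rules under the General Data Protection Regulation (GDPR). It treats biometric data used to identify a person as a special category. Processing it is generally prohibited unless an exception applies, such as explicit consent.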

In contrast, the United States lacks federal regulations specific to face recognition. Some states have their own laws, but there is no uniform standard.

Industries like banking and healthcare have specific guidelines too. For example, banks must comply with Know Your Customer (KYC) regulations when using face recognition for identity verification.

Best Practices for Implementation

Transparency

Transparency is essential. Organizations should clearly explain how they use face recognition technology. This includes detailing the purpose, scope, and duration of data storage. Users must know why their data is collected and how it will be used.

Publicly accessible policies help build trust. Regular updates to these policies ensure they remain relevant.

Consent

Obtaining consent is crucial. Individuals should have the choice to opt in or out of face recognition systems. This respects personal privacy and autonomy.

Clear consent forms are necessary. They should outline what data will be collected and for what specific purposes.
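Consent and storage duration can also be enforced in software. The sketch below checks a stored consent record before any face data is processed. The record fields, the purpose strings, and the function name are hypothetical and would need to match your own policies and legal advice.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class ConsentRecord:
    user_id: str
    purposes: FrozenSet[str]     # e.g. {"door_access"}; hypothetical purpose names
    granted_at: datetime
    retention: timedelta         # how long the data may be kept and used
    withdrawn_at: Optional[datetime] = None


def may_process(record: ConsentRecord, purpose: str, at: datetime) -> bool:
    """Allow processing only for a consented purpose, inside the valid time window."""
    if purpose not in record.purposes:
        return False
    if at < record.granted_at:
        return False
    if record.withdrawn_at is not None and at >= record.withdrawn_at:
        return False
    return at <= record.granted_at + record.retention
```

Tying each check to a named purpose keeps data collected for one use from quietly being reused for another.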

Data Protection

Data protection measures safeguard sensitive information. Encryption technologies can secure stored facial data against breaches. Regular audits ensure compliance with security standards.

Organizations should also implement strict access controls. Only authorized personnel should handle sensitive information.

Ongoing Testing

Continuous testing ensures system accuracy. Face recognition algorithms must undergo regular evaluations to maintain high performance levels.

Testing helps identify biases within the system. Addressing these biases improves fairness across different demographics.
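A basic bias check compares error rates across demographic groups on a labelled test set. The sketch below computes the false match rate (impostors wrongly accepted) and the false non-match rate (genuine users wrongly rejected) per group. The trial format and group labels are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple

Trial = Tuple[str, float, bool]  # (group label, similarity score, same person?)


def error_rates_by_group(trials: Iterable[Trial],
                         threshold: float) -> Dict[str, Dict[str, Optional[float]]]:
    """Return {'fmr': ..., 'fnmr': ...} per group; None when a rate is undefined."""
    counts = defaultdict(lambda: {"fm": 0, "impostor": 0, "fnm": 0, "genuine": 0})
    for group, score, same_person in trials:
        c = counts[group]
        accepted = score >= threshold
        if same_person:
            c["genuine"] += 1
            if not accepted:
                c["fnm"] += 1   # genuine pair rejected
        else:
            c["impostor"] += 1
            if accepted:
                c["fm"] += 1    # impostor pair accepted
    return {
        g: {
            "fmr": c["fm"] / c["impostor"] if c["impostor"] else None,
            "fnmr": c["fnm"] / c["genuine"] if c["genuine"] else None,
        }
        for g, c in counts.items()
    }
```

Large gaps between groups at the same threshold are a signal to investigate the training data or the algorithm before deployment.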

Calibration

Calibration fine-tunes the system. It adjusts settings to enhance accuracy in various conditions, such as lighting changes or camera angles.

Regular calibration prevents degradation over time. It maintains consistent performance despite environmental variations.
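One concrete calibration task is choosing the match threshold for a given site. The sketch below picks the lowest threshold that keeps the false match rate at or below a target, using impostor scores collected under local conditions. It assumes a match is declared when a score is strictly greater than the threshold. The function name and inputs are illustrative.

```python
from typing import Sequence


def threshold_for_target_fmr(impostor_scores: Sequence[float],
                             target_fmr: float) -> float:
    """Lowest observed score t such that the share of impostor scores > t
    is at most target_fmr. Assumes the rule 'match if score > t'."""
    if not impostor_scores:
        raise ValueError("need at least one impostor score")
    n = len(impostor_scores)
    for t in sorted(set(impostor_scores)):
        false_matches = sum(1 for s in impostor_scores if s > t)
        if false_matches / n <= target_fmr:
            return t
    # Unreachable: at the maximum score no impostor score exceeds t.
    return max(impostor_scores)
```

Re-running this on fresh scores after a camera change or a lighting change is one way to keep performance consistent over time.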

Collaboration with Privacy Advocates

Collaborating with privacy advocates provides valuable insights. These experts highlight potential privacy concerns and suggest mitigation strategies.

Engagement with advocacy groups fosters accountability. It demonstrates a commitment to ethical practices in deploying face recognition technology.

Legal Expertise

Legal experts help navigate complex regulations. They ensure compliance with laws governing data protection and privacy rights.

Consulting legal professionals minimizes risks of legal repercussions. It aligns organizational practices with current legislation regarding face recognition usage.

Summary

Face recognition technology has come a long way, integrating advanced techniques like liveness detection and AI-driven video analysis. These advancements enhance security and offer numerous benefits across industries. By addressing challenges and implementing best practices, you can ensure robust and reliable systems.

As you explore face recognition solutions, consider the importance of staying updated with evolving technologies. Dive deeper into the field and apply these insights to your projects. Ready to elevate your security protocols? Start today by leveraging cutting-edge face recognition tools.

Frequently Asked Questions

What is liveness detection in face recognition?

Liveness detection ensures the face being scanned is real and not a photo or video. It enhances security by preventing spoofing attacks.

How does liveness detection improve security?

It detects fake faces, like photos or masks, making it harder for impostors to bypass face recognition systems.

What are the main techniques used in face recognition?

Techniques include 2D and 3D imaging, thermal imaging, and deep learning algorithms. These methods enhance accuracy and reliability.

How do AI and machine learning contribute to face recognition?

AI and machine learning analyze facial features more accurately. They adapt to new data, improving recognition over time.

In which industries is face recognition commonly used?

Face recognition is used in security, finance, healthcare, retail, and travel. It enhances safety, efficiency, and user experience.

What challenges does face recognition face?

Challenges include privacy concerns, bias in algorithms, and spoofing attacks. Addressing these issues is crucial for wider adoption.

What are best practices for implementing face recognition systems?

Ensure data privacy, use robust algorithms, regularly update systems, and educate users on proper usage. This maximizes effectiveness and trust.