An often-quoted figure from Albert Mehrabian's research holds that facial expression and body language carry about 55% of the emotional meaning of a message, although that finding applies only to narrow situations in which words and tone conflict. Whatever the exact share, faces convey a great deal of emotion, and machine learning frameworks such as TensorFlow make it practical to detect faces and recognize facial expressions in real time. This has significant implications for how we interact with software and with the world around us. Many of the building blocks for real-time emotion detection in web applications are available on GitHub, where repositories focused on detecting facial attributes track the latest advancements in the field.

Real-time emotion detection technology uses facial expressions, voice tone, and other physiological signals to recognize and interpret human emotions as they occur. It can be used in various fields, including marketing, customer service, and healthcare. By analyzing this data in real time, businesses can tailor their products and services to better meet the needs of their customers. With applications in psychology, marketing, and human-computer interaction, facial emotion detection and emotion classification have become increasingly important fields. But what does GitHub have to do with it?

GitHub, the popular platform for hosting code repositories, offers a wealth of resources for developers. It provides access to public repositories containing pre-trained models and code implementations related to real-time emotion detection. This availability makes GitHub a valuable tool for developers looking to incorporate emotion detection into their projects.

Understanding Real-Time Emotion Detection

Real-time emotion detection is a fascinating field that combines deep learning concepts, facial emotion recognition, and multimodal recognition techniques. By leveraging these technologies, we can analyze and understand human emotions in real-time, opening up numerous possibilities for applications like virtual assistants, mental health monitoring, and customer sentiment analysis.

Deep Learning Concepts

Deep learning is a subset of machine learning that focuses on artificial neural networks with multiple layers. These networks are loosely inspired by the structure of the human brain, and stacking many layers lets them learn from large datasets and perform complex pattern recognition tasks. In the context of real-time emotion detection, deep learning algorithms play a crucial role in extracting meaningful features from input data.

With their ability to process vast amounts of information quickly, deep learning models excel at capturing intricate details in facial expressions or other modalities associated with emotions. This allows them to identify subtle cues that may not be apparent to the naked eye. By training these models on labeled datasets containing examples of different emotions, they can learn to accurately classify new inputs based on their learned patterns.
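To make the classification step concrete, here is a minimal sketch of how a model's raw output scores are commonly turned into an emotion label with a softmax. The label order, the seventh "neutral" class, and the example scores are assumptions for illustration; a real model defines its own classes and produces its own scores.

```python
import math

# Label order is an assumption; real models define their own class order.
EMOTIONS = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(logits, labels=EMOTIONS):
    """Return the most likely label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

# Hypothetical raw scores for one face crop, for illustration only.
example_logits = [0.2, -1.0, 0.1, 3.1, 0.4, 1.2, 0.9]
label, confidence = classify(example_logits)
```

The highest score wins, and the softmax probability gives a rough confidence value that an application can threshold before acting on a prediction.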

Facial Emotion Recognition

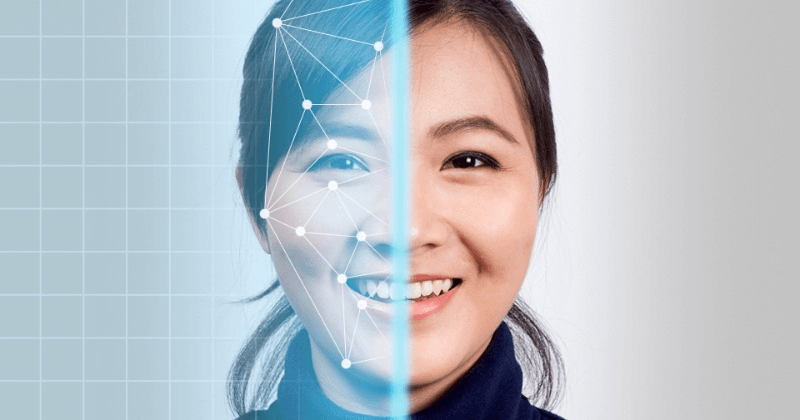

Facial emotion recognition is an essential component of real-time emotion detection systems. It involves detecting and analyzing emotions from facial expressions using computer vision techniques. By extracting features such as facial landmarks, texture, and motion from images or videos, these systems can infer the underlying emotional states.

Computer vision algorithms can detect key facial landmarks like the position of the eyes, nose, and mouth. They can also analyze changes in texture and motion across different regions of the face. By combining these features with deep learning models trained on labeled data, facial emotion recognition systems can accurately recognize emotions like happiness, sadness, anger, surprise, fear, and disgust.

The applications for facial emotion recognition are diverse. For example:

In mental health monitoring: Real-time emotion detection using facial expression analysis could assist therapists or counselors in assessing patients’ emotional states during therapy sessions.

In customer sentiment analysis: Companies can use facial emotion recognition to gauge customers’ emotional reactions to products, advertisements, or user interfaces, helping them improve their offerings based on real-time feedback.

Multimodal Recognition

Real-time emotion detection often incorporates multimodal recognition techniques. Multimodal recognition involves combining information from multiple sources, such as facial expressions, speech, and physiological signals like heart rate or skin conductance. By considering different modalities simultaneously, these systems can enhance accuracy and robustness in detecting emotions.

For instance, when analyzing a person’s emotional state, combining facial expression analysis with speech intonation and physiological signals can provide a more comprehensive understanding of their emotions.
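One simple way to combine modalities is late fusion, where each modality has its own model and the per-emotion probabilities are averaged with weights. The sketch below assumes three hypothetical models have already produced probabilities; the modality names, numbers, and weights are illustrative, not taken from any particular project.

```python
def fuse(modalities, weights):
    """Weighted average of per-modality emotion probabilities (late fusion)."""
    total_weight = sum(weights[name] for name in modalities)
    labels = next(iter(modalities.values())).keys()
    return {
        label: sum(weights[name] * probs[label] for name, probs in modalities.items())
        / total_weight
        for label in labels
    }

# Hypothetical outputs of three separate models for the same moment.
predictions = {
    "face": {"happy": 0.6, "sad": 0.1, "angry": 0.3},
    "speech": {"happy": 0.2, "sad": 0.2, "angry": 0.6},
    "physiology": {"happy": 0.3, "sad": 0.2, "angry": 0.5},
}
# Weights are illustrative; in practice they would be tuned on validation data.
weights = {"face": 0.5, "speech": 0.3, "physiology": 0.2}

fused = fuse(predictions, weights)
top_emotion = max(fused, key=fused.get)
```

In this made-up case the face alone suggests "happy", while the fused result leans toward "angry" because the voice and physiological signals disagree with the facial expression.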

GitHub and Emotion Detection Integration

GitHub, being a popular platform for code hosting and collaboration, plays a significant role in the development of real-time emotion detection systems. By integrating GitHub with emotion detection, developers can access a wide range of resources, stay updated with the latest advancements, and ensure secure user authentication.

Public Repositories

Public repositories on platforms like GitHub provide developers with a treasure trove of pre-trained models, datasets, and code implementations related to real-time emotion detection. These resources can be leveraged to build efficient and accurate emotion detection systems without starting from scratch. Instead of reinventing the wheel, developers can benefit from the collective efforts of others in the field.

Collaboration and knowledge sharing thrive in public repositories as developers contribute their expertise by sharing their work openly. This fosters an environment where individuals can learn from each other’s successes and failures. By exploring public repositories dedicated to emotion detection, developers gain insights into various approaches, techniques, and best practices that they can apply to their own projects.

Latest Commits

The continuous development and improvement of real-time emotion detection projects are reflected in the latest commits made by developers on GitHub. These commits signify ongoing efforts to enhance performance, fix bugs, or introduce new features into existing projects. By keeping track of these updates regularly, developers can stay up-to-date with the latest advancements in the field.

Staying informed about the latest commits allows developers to incorporate cutting-edge techniques into their own projects. They can learn from the mistakes made by others or take inspiration from successful implementations. By leveraging these improvements made by fellow developers worldwide, they can save time and effort while building robust emotion detection systems.

User Authentication

User authentication is a crucial aspect when integrating GitHub with real-time emotion detection systems that handle sensitive data. It ensures that only authorized users have access to the system while safeguarding against potential security breaches or unauthorized usage.

Various authentication methods such as passwords, biometrics, or two-factor authentication can be implemented to ensure secure access. Passwords provide a basic level of security by verifying user identity through a unique combination of characters. Biometric authentication, like fingerprint or facial recognition, adds an additional layer of security by leveraging unique physical traits. Two-factor authentication requires users to provide two different forms of identification, such as a password and a verification code sent to their mobile device.
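As a rough illustration of the password option, the sketch below stores a salted hash instead of the password itself and compares hashes in constant time. It uses only the Python standard library; the iteration count and function names are assumptions chosen for the example, not a recommendation for any specific system.

```python
import hashlib
import hmac
import os

ITERATIONS = 200_000  # illustrative work factor; tune for your hardware

def hash_password(password, salt=None):
    """Derive a salted hash so the plain password is never stored."""
    salt = salt or os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest

def verify_password(password, salt, expected_digest):
    """Recompute the hash and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected_digest)

# Example registration step with a made-up password.
salt, stored = hash_password("correct horse battery staple")
```

A second factor, such as a one-time verification code, would be checked in addition to this step rather than instead of it.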

Development Environments for Emotion Analysis

Launching Environments

Launching environments play a crucial role in deploying real-time emotion detection systems. These platforms or frameworks allow developers to make their models accessible and scalable. Cloud platforms like AWS and Google Cloud provide the infrastructure needed to run and manage these systems efficiently. They offer services such as virtual machines, storage, and data processing capabilities that can handle the computational requirements of emotion analysis.

Alternatively, developers can use web-based application frameworks like Flask or Django to create their own launching environments. These frameworks enable the development of user-friendly interfaces where users can interact with the emotion detection system. By choosing the right launching environment, developers can ensure optimal performance, scalability, and accessibility for their real-time emotion detection models.
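A Flask-based launching environment can be very small. The sketch below assumes Flask is installed and that a `predict` function mapping raw image bytes to emotion probabilities already exists; the `/predict` route, the `image` form field, and `dummy_predict` are hypothetical names introduced for illustration.

```python
def format_response(probabilities):
    """Turn a {label: probability} mapping into a JSON-friendly payload."""
    label = max(probabilities, key=probabilities.get)
    return {"emotion": label, "scores": probabilities}

def create_app(predict):
    """Build a Flask app; `predict` maps raw image bytes to {label: probability}."""
    from flask import Flask, jsonify, request  # imported lazily; requires Flask

    app = Flask(__name__)

    @app.route("/predict", methods=["POST"])
    def predict_route():
        uploaded = request.files.get("image")
        if uploaded is None:
            return jsonify({"error": "attach a file in the 'image' field"}), 400
        return jsonify(format_response(predict(uploaded.read())))

    return app

def dummy_predict(image_bytes):
    """Placeholder model returning fixed scores, for illustration only."""
    return {"happy": 0.7, "sad": 0.1, "neutral": 0.2}
```

Calling `create_app(dummy_predict).run(port=5000)` would start Flask's development server locally; a production deployment would sit behind a proper WSGI server and replace `dummy_predict` with a real model.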

Dataset Preparation

Preparing a dataset is an essential step in training accurate and unbiased real-time emotion detection models. It involves collecting a diverse range of images or videos that represent different emotions. The dataset needs to be carefully labeled, assigning each image or video the corresponding emotion category.

To ensure diversity and representativeness in the dataset, it is important to include images or videos from various sources and demographics. This helps prevent bias in the model’s predictions by exposing it to a wide range of emotions expressed by different individuals. Proper dataset preparation lays the foundation for building robust emotion detection models that perform well across different scenarios.
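One practical part of dataset preparation is splitting labeled samples so that rare emotions are not lost from the validation set. The following sketch shows a stratified split using only the standard library; the file names, label counts, and 20% validation fraction are made up for the example.

```python
import random
from collections import Counter, defaultdict

def stratified_split(samples, val_fraction=0.2, seed=0):
    """Split (path, label) pairs per label; needs at least two samples per label."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append((path, label))

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, val = [], []
    for items in by_label.values():
        rng.shuffle(items)
        n_val = max(1, round(len(items) * val_fraction))
        val.extend(items[:n_val])
        train.extend(items[n_val:])
    return train, val

# Hypothetical file names and labels, deliberately imbalanced.
samples = (
    [(f"happy_{i}.jpg", "happy") for i in range(50)]
    + [(f"sad_{i}.jpg", "sad") for i in range(20)]
    + [(f"fear_{i}.jpg", "fear") for i in range(10)]
)
train, val = stratified_split(samples)
train_counts = Counter(label for _, label in train)
val_counts = Counter(label for _, label in val)
```

Comparing `train_counts` and `val_counts` is also a quick way to spot class imbalance before training starts.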

Running Demos

Running demos allows developers to test and evaluate real-time emotion detection models quickly. Demos often provide sample inputs such as images or videos and showcase how the model detects emotions in real time. By running demos, developers gain insights into the capabilities and limitations of different models.

For example, a demo may take an image as input and output labels indicating whether the person in the image is happy, sad, angry, or surprised. Developers can experiment with various inputs to understand how well their model performs under different conditions.

Demos also serve as a valuable tool for showcasing the capabilities of real-time emotion detection systems to potential users or stakeholders.

Facial Recognition Techniques in Emotion Detection

Facial recognition techniques play a crucial role in the field of emotion detection. By analyzing facial features and expressions, these techniques enable the identification and classification of various emotions in real-time.

OpenCV with Deepface

OpenCV, an open-source computer vision library, is widely used in real-time emotion detection projects. Its versatility and extensive functionality make it a good choice for processing and analyzing images or video streams. When combined with DeepFace, a Python facial analysis library that wraps deep learning models and can use OpenCV as one of its face detector backends, developers gain access to more advanced tools for face detection and emotion recognition.

DeepFace uses deep learning models to extract meaningful information from faces. With its set of pre-trained models, developers can detect faces, analyze expressions, and classify emotions without training a model from scratch. Combining OpenCV’s computer vision capabilities with DeepFace’s deep learning models lets developers build real-time emotion detection systems with relatively little code.
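A minimal sketch of pairing OpenCV with DeepFace might look like the following. It assumes the `opencv-python` and `deepface` packages are installed and a webcam is available; `dominant_emotion` and `run_webcam_demo` are helper names made up for this example, and the shape of the result can differ between DeepFace versions, which the helper tries to account for.

```python
def dominant_emotion(result):
    """Extract the top emotion from a DeepFace.analyze result.

    Newer DeepFace releases return a list with one dict per face, older ones
    a single dict, so both shapes are handled here.
    """
    faces = result if isinstance(result, list) else [result]
    return faces[0]["dominant_emotion"] if faces else None

def run_webcam_demo(camera_index=0):
    """Label webcam frames until 'q' is pressed (needs opencv-python, deepface)."""
    import cv2
    from deepface import DeepFace

    capture = cv2.VideoCapture(camera_index)
    try:
        while True:
            ok, frame = capture.read()
            if not ok:
                break
            # enforce_detection=False avoids an exception when no face is found.
            result = DeepFace.analyze(frame, actions=["emotion"], enforce_detection=False)
            label = dominant_emotion(result) or "no face"
            cv2.putText(frame, label, (20, 40), cv2.FONT_HERSHEY_SIMPLEX, 1.0, (0, 255, 0), 2)
            cv2.imshow("emotion", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        capture.release()
        cv2.destroyAllWindows()
```

Analyzing every frame can be slow on modest hardware, so a real application might analyze only every few frames and reuse the last label in between.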

EfficientNetV2 Quantization

EfficientNetV2 is a deep learning architecture known for its efficiency and accuracy in image classification tasks. However, when real-time emotion detection models are deployed on devices with limited resources, memory footprint and computational requirements become critical considerations.

Quantization offers a solution by reducing the memory footprint and computational demands of models without significant loss in performance. By applying quantization techniques to EfficientNetV2-based real-time emotion detection models, developers can create efficient solutions suitable for deployment on devices with limited resources. This enables the widespread adoption of real-time emotion detection technologies across various platforms.
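The core idea can be shown without any framework. The sketch below applies symmetric 8-bit quantization to a handful of made-up weights: each 32-bit float is replaced by an 8-bit integer plus one shared scale, which cuts storage for the weights to roughly a quarter. This is a simplified illustration of the arithmetic, not how any particular toolkit quantizes EfficientNetV2.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to int8 values."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Map int8 values back to approximate floats."""
    return [q * scale for q in quantized]

# Hypothetical layer weights, for illustration only.
weights = [0.82, -0.41, 0.05, -1.27, 0.33]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

The rounding error per weight is at most half the scale, which is why accuracy often drops only slightly; whether that holds for a given emotion model has to be checked on validation data.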

Facial Feature Extraction

Accurate facial feature extraction is paramount for robust real-time emotion detection systems. This process involves identifying key facial landmarks, textures, or patterns that provide valuable information for analyzing facial expressions and detecting emotions. By extracting these features, developers can gain insights into the subtle changes in facial expressions that signify different emotional states.

Facial feature extraction algorithms utilize techniques such as keypoint detection, texture analysis, and deep learning-based feature extraction to identify and extract relevant information from faces. These extracted features serve as input for emotion recognition models, enabling them to classify emotions accurately in real-time.
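As a small example of a landmark-based feature, the sketch below computes a mouth aspect ratio from four mouth keypoints. The coordinates are invented, and the choice of this particular ratio is an assumption for illustration; real systems typically combine many such measurements or learn features directly from pixels.

```python
import math

def mouth_aspect_ratio(left_corner, right_corner, upper_lip, lower_lip):
    """Ratio of mouth opening to mouth width; larger means a wider-open mouth."""
    width = math.dist(left_corner, right_corner)
    opening = math.dist(upper_lip, lower_lip)
    return opening / width if width else 0.0

# Hypothetical (x, y) pixel coordinates from a landmark detector.
closed = mouth_aspect_ratio((100, 200), (160, 200), (130, 195), (130, 205))
opened = mouth_aspect_ratio((100, 200), (160, 200), (130, 185), (130, 215))
```

Because the value is a ratio, it stays roughly the same when the face moves closer to or further from the camera, which makes it a more stable input for a classifier than raw pixel distances.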

Analyzing Emotional Data through AI

In the field of emotion detection, there are various techniques and methods that can be employed to analyze emotional data in real-time. This section will explore some of these approaches and their significance in understanding user emotions.

Sentiment Analysis

Sentiment analysis plays a crucial role in complementing real-time emotion detection by analyzing textual content such as social media posts or customer reviews. It involves the process of determining the sentiment or emotional tone conveyed in text data. By examining the words, phrases, and context used in a piece of text, sentiment analysis algorithms can classify it as positive, negative, or neutral.

By combining sentiment analysis with real-time emotion detection, we can gain a more comprehensive understanding of user emotions. For example, while real-time emotion detection might identify an individual’s facial expressions as happy or sad during a video call, sentiment analysis could provide additional insights into their overall satisfaction with the conversation.
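The simplest form of sentiment analysis is a word lexicon with scores. The sketch below is deliberately tiny and rule-based; the word list and the one-word negation rule are assumptions for illustration, and production systems generally rely on much larger lexicons or trained models.

```python
import re

# Tiny illustrative lexicon; real systems use far larger ones or trained models.
LEXICON = {"great": 1, "love": 1, "helpful": 1, "slow": -1, "broken": -1, "terrible": -1}
NEGATIONS = {"not", "never", "no"}

def sentiment(text):
    """Classify text as positive, negative, or neutral by summing word scores."""
    score = 0
    negate = False
    for word in re.findall(r"[a-z']+", text.lower()):
        if word in NEGATIONS:
            negate = True
            continue
        value = LEXICON.get(word, 0)
        score += -value if negate else value
        negate = False  # a negation only affects the next word
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

For example, `sentiment("The support team was great and helpful")` is classified as positive, while `sentiment("Not great")` comes out negative because of the negation rule.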

Speech-Based Analyzers

Speech-based analyzers focus on detecting emotions from speech signals. They utilize features such as pitch, intensity, voice quality, and other acoustic characteristics to infer emotional states. By analyzing these audio cues, speech-based analyzers can determine whether someone is speaking with joy, anger, sadness, or any other specific emotion.

Integrating speech-based analyzers into real-time emotion detection systems enhances their multimodal capabilities. This means that instead of solely relying on visual cues from facial expressions or gestures captured through cameras or sensors, these systems can also consider vocal cues to provide a more accurate assessment of an individual’s emotional state.
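Two of the acoustic features mentioned above, intensity and pitch, can be approximated with a few lines of code. The sketch below uses a synthetic 200 Hz tone in place of recorded speech, and the zero-crossing pitch estimate is a crude stand-in that only behaves well on clean, single-tone signals; real analyzers use more robust methods.

```python
import math

def rms_intensity(samples):
    """Root-mean-square amplitude, a simple proxy for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def zero_crossing_pitch(samples, sample_rate):
    """Rough pitch estimate in Hz from the number of sign changes."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))
    duration = len(samples) / sample_rate
    return crossings / (2 * duration)

# Synthetic one-second 200 Hz tone standing in for a recorded voice.
SAMPLE_RATE = 8000
tone = [0.5 * math.sin(2 * math.pi * 200 * n / SAMPLE_RATE + 0.5) for n in range(SAMPLE_RATE)]

intensity = rms_intensity(tone)
pitch = zero_crossing_pitch(tone, SAMPLE_RATE)
```

Features like these would be computed over short windows of audio and fed to a classifier alongside the facial features.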

Neural Networks

Neural networks are computational models inspired by the structure and function of the human brain. They consist of interconnected nodes called neurons organized in layers to process and learn from data. In the context of real-time emotion detection, neural networks play a significant role due to their ability to learn complex patterns.

Real-time emotion detection often relies on neural networks to analyze and interpret various types of data, including facial expressions, speech signals, and textual content.

Advancements in Emotion Recognition Algorithms

Emotion recognition algorithms have made significant advancements, enabling the development of real-time emotion detection systems. Understanding the basics of these algorithms is crucial for creating effective and accurate emotion detection solutions. Algorithms provide step-by-step instructions for solving problems and performing specific tasks, making them essential for developing robust real-time emotion detection systems.

One key aspect of real-time emotion detection is face recognition models. These models play a vital role in identifying and verifying individuals based on their facial features. By integrating face recognition models into emotion detection systems, user identification and personalization can be enhanced. Face recognition models often leverage deep learning techniques to achieve higher accuracy in recognizing faces.

Expression classification is another critical component of real-time emotion detection systems. It focuses on categorizing facial expressions into different emotional states such as happiness, sadness, or anger. Deep learning algorithms are commonly used for expression classification due to their ability to learn complex patterns and accurately classify emotions based on facial cues.

These advancements in emotion recognition algorithms have enabled various applications across different industries. For example, in healthcare, real-time emotion detection can help monitor patients’ emotional well-being during therapy sessions or assist in diagnosing mental health conditions. In marketing and customer service, it can be used to analyze customers’ emotions while interacting with products or services, providing valuable insights for improving user experience.

Moreover, these algorithms have found applications in the entertainment industry as well. Real-time emotion detection can enhance virtual reality experiences by adapting the content based on users’ emotional responses. It can also enable more immersive gaming experiences by dynamically adjusting gameplay elements according to players’ emotions.

The continuous improvement of emotion recognition algorithms has led to increased accuracy and efficiency in real-time emotion detection systems. Researchers are constantly exploring new approaches and techniques to further enhance these algorithms’ performance. As a result, we can expect even more sophisticated and precise emotion detection solutions in the future.

Case Studies and Real-World Applications

Emotional assistant development is a fascinating field that aims to create intelligent systems capable of understanding and responding to human emotions. Real-time emotion detection plays a crucial role in building these emotional assistants, enabling them to provide personalized and empathetic interactions. The applications of emotional assistants are wide-ranging, spanning areas such as mental health support, customer service, and interactive entertainment.

Image and text analytics are essential components of real-time emotion detection systems. These analytical techniques involve extracting insights and information from visual or textual data. By incorporating image and text analytics into the emotion detection process, a more comprehensive understanding of user emotions can be achieved. Analyzing both visual and textual content allows for a deeper analysis of emotional states, providing valuable context for emotional assistant development.

Real-time analyzer development focuses on creating efficient and responsive emotion detection systems. These systems analyze input data in real-time, allowing for immediate feedback or responses based on detected emotions. To achieve this level of responsiveness, developers need to optimize algorithms and leverage hardware acceleration when necessary. The goal is to ensure that the emotion detection process is seamless and does not cause any noticeable delays in system response.

One example of real-world application for real-time emotion detection is in mental health support. Emotional assistants equipped with real-time analyzers can help individuals manage their emotions by providing timely interventions or suggesting coping strategies based on their current emotional state. This can be particularly beneficial for individuals struggling with anxiety or depression who may benefit from immediate support during difficult moments.

Another application lies in customer service interactions. Companies can utilize emotional assistants with real-time emotion detection capabilities to enhance their customer service experiences. By analyzing customer emotions during interactions, companies can proactively address any negative sentiments or frustrations before they escalate further. This proactive approach helps improve customer satisfaction levels and fosters stronger relationships between businesses and their customers.

In the realm of interactive entertainment, real-time emotion detection adds an extra layer of immersion to gaming experiences. Emotional assistants can analyze a player’s emotions in real-time, adapting the gameplay or narrative based on their emotional responses. For example, if a player is feeling anxious during a suspenseful moment in a game, the emotional assistant could adjust the difficulty level or introduce calming elements to alleviate their anxiety and enhance their overall gaming experience.

Enhancing Emotion Detection with Modern Techniques

Real-time emotion detection has become increasingly important in various fields, including human-computer interaction, healthcare, and marketing. To enhance the accuracy and efficiency of emotion detection models, modern techniques have been developed and implemented.

Fast Recognition Methods

Fast recognition methods play a crucial role in reducing the computational complexity of real-time emotion detection models. These methods prioritize speed without compromising accuracy significantly. By implementing fast recognition methods, real-time emotion detection becomes feasible even in resource-constrained environments.

The aim is to process emotions swiftly and efficiently by optimizing the underlying algorithms. This involves utilizing techniques such as feature selection, dimensionality reduction, and model compression. These methods enable the extraction of essential information from input data while discarding redundant or less informative features.

With fast recognition methods, real-time emotion detection systems can keep pace with dynamic environments where emotions change rapidly. For example, in interactive applications like virtual reality or gaming, quick responses are crucial for providing an immersive user experience.

Sentiment Integration

Sentiment integration is another technique that enhances real-time emotion detection by incorporating sentiment analysis into the system. It goes beyond analyzing facial expressions alone and considers information from different modalities such as speech and text to provide a holistic view of user emotions.

By integrating sentiment analysis into real-time emotion detection systems, it becomes possible to capture emotions expressed through multiple channels simultaneously. This comprehensive approach improves the overall performance and applicability of these systems.

For instance, imagine a customer service chatbot that utilizes sentiment integration to analyze both textual messages and vocal tone during interactions with customers. By understanding not only what customers say but also how they say it, the chatbot can respond more effectively based on their emotional state.

Convolutional Neural Networks

Convolutional Neural Networks (CNNs) are a powerful type of deep learning architecture commonly used in computer vision tasks. They excel at processing and analyzing visual data, making them well-suited for real-time emotion detection from images or videos.

CNNs leverage convolutional layers to extract meaningful features from input data. These layers apply filters to capture different patterns and structures within the image, allowing the network to learn representations specific to emotions. By training on large datasets of labeled facial expressions, CNNs can accurately classify emotions in real-time.
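To show what "applying a filter" means, here is a minimal pure-Python sketch of the sliding-window operation inside a convolutional layer. The 4x4 patch and the vertical-edge filter are hand-written for illustration; in a trained CNN the filter values are learned from data rather than chosen by hand.

```python
def convolve2d(image, kernel):
    """Slide a kernel over a 2D image (valid padding, stride 1).

    Like CNN layers, this does not flip the kernel (cross-correlation).
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]

# A 4x4 grayscale patch with a dark left half and a bright right half.
patch = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-written vertical-edge filter; a CNN would learn such filters from data.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
feature_map = convolve2d(patch, vertical_edge)
```

The filter responds strongly wherever brightness changes from left to right and gives zero on uniform regions, which is the kind of low-level cue (edges of lips, eyebrows, eyelids) that later layers combine into expression features.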

The advantage of CNNs lies in their ability to automatically learn relevant features from raw visual data without relying on handcrafted feature engineering. This makes CNN-based models more adaptable and capable of capturing complex emotional cues that may not be easily discernible by humans.

Future of Emotion Detection Technology

Emotional data visualization is an essential aspect of the future of emotion detection technology. It involves presenting emotion-related information in a visually appealing and understandable manner. By using graphs, charts, or other visual elements, emotional data visualization helps users interpret and analyze emotions detected by real-time emotion detection systems.

This technology has significant applications in various fields such as psychology, market research, or user experience design. For example, in psychology, emotional data visualization can aid therapists in understanding their patients’ emotional states more effectively. Market researchers can use it to analyze customer sentiments towards products or advertisements. User experience designers can utilize emotional data visualization to gain insights into how users interact with digital interfaces and improve their overall experience.

Understanding speech-based data is another crucial component of real-time emotion detection systems. To accurately detect emotional states, these systems need to analyze various acoustic features like pitch, intensity, and rhythm present in speech signals. This process involves extracting meaningful information from audio recordings and interpreting them to determine the speaker’s emotional state.

By comprehending speech-based data accurately, real-time emotion detection systems can provide valuable insights into human emotions during conversations or presentations. These insights have practical applications in fields such as mental health diagnosis, customer service analysis, and public speaking training.

AI interpretation methods play a vital role in enhancing transparency and trust in real-time emotion detection systems. These methods involve techniques for understanding and interpreting the output of these models. They aim to provide insights into why certain emotions were detected or how the model arrived at its conclusions.

By employing AI interpretation methods, developers and users can gain a deeper understanding of the inner workings of real-time emotion detection models. This knowledge can help identify biases or limitations within the system’s algorithms and ensure more accurate results.

Furthermore, AI interpretation methods enable researchers to refine existing models by analyzing patterns and trends within large datasets generated by real-time emotion detection systems. This iterative process allows for continuous improvement and refinement of emotion detection technology.

Conclusion

Congratulations! You’ve reached the end of this exciting journey into the world of real-time emotion detection and its integration with GitHub. Throughout this article, we’ve explored various aspects of emotion detection technology, from facial recognition techniques to AI-driven analysis of emotional data. We’ve also delved into the advancements in emotion recognition algorithms and examined real-world case studies and applications.

By now, you have a solid understanding of how emotion detection works and how it can be harnessed in different contexts. But the story doesn’t end here. Emotion detection technology is evolving rapidly, opening up new possibilities for enhancing our understanding of human emotions and improving various industries such as marketing, healthcare, and entertainment.

So, what’s next? It’s time for you to take action! Whether you’re a developer looking to contribute to open-source emotion detection projects on GitHub or a business owner considering implementing emotion detection technology in your organization, seize the opportunity to explore further and make a difference. Embrace the power of real-time emotion detection and unlock its potential in your own unique way.

Frequently Asked Questions

What is real-time emotion detection?

Real-time emotion detection is a technology that uses algorithms and artificial intelligence to analyze facial expressions and determine the emotions of individuals in real-time. It can be used in various applications such as video analysis, market research, and mental health monitoring.

How does GitHub integrate with emotion detection?

GitHub provides a platform for developers to collaborate on projects, including those related to emotion detection. Developers can create repositories, share code, and contribute to existing projects focused on emotion detection. This integration fosters knowledge sharing and accelerates the development of new techniques and algorithms.

What are some popular development environments for emotion analysis?

Popular development environments for emotion analysis include Python with libraries like OpenCV and TensorFlow, MATLAB with the Image Processing Toolbox, and Java with OpenCV’s Java bindings, optionally paired with a UI toolkit such as JavaFX. These environments provide tools and resources to process images or videos, extract facial features, and apply machine learning algorithms for emotion analysis.

What techniques are used in facial recognition for emotion detection?

Facial recognition techniques used in emotion detection typically start with face detection, using methods like Haar cascades, followed by feature extraction and classification with deep learning-based models such as Convolutional Neural Networks (CNNs). Together, these techniques help locate the face, identify key facial landmarks, and classify emotions based on patterns found in the face.

How is emotional data analyzed through AI?

Emotional data can be analyzed through AI by training machine learning models using datasets that contain labeled emotional data. These models learn patterns from the data and can then predict emotions from new input. Techniques like supervised learning or deep learning are commonly used to analyze emotional data through AI algorithms.