Welcome to the world of face tracking!

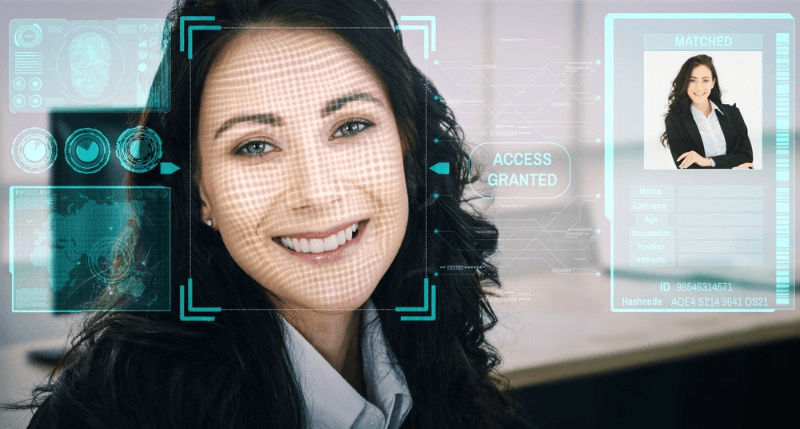

Face tracking is a computer vision technology that allows computers to detect human faces in images or video and follow them from frame to frame, often recovering their position, orientation, and expression in 3D. 3D face tracking has gained significant importance in fields ranging from augmented reality and gaming to security systems and biometrics. It is particularly popular in mobile apps, where it is often used together with game engines such as Unity. By accurately identifying facial features and movements, 3D face tracking enables immersive user experiences, stronger security measures, personalized solutions, and the estimation of gaze direction in videos.

In this post, we will explore the significance of face tracking technology, its real-time applications across industries, and the advantages it offers for specific use cases, including the estimation of gaze direction in videos. We will also discuss how 3D face tracking can be customized to meet the requirements of different domains, whether you are creating engaging videos or building interactive applications.

The Mechanics of Face Tracking Systems

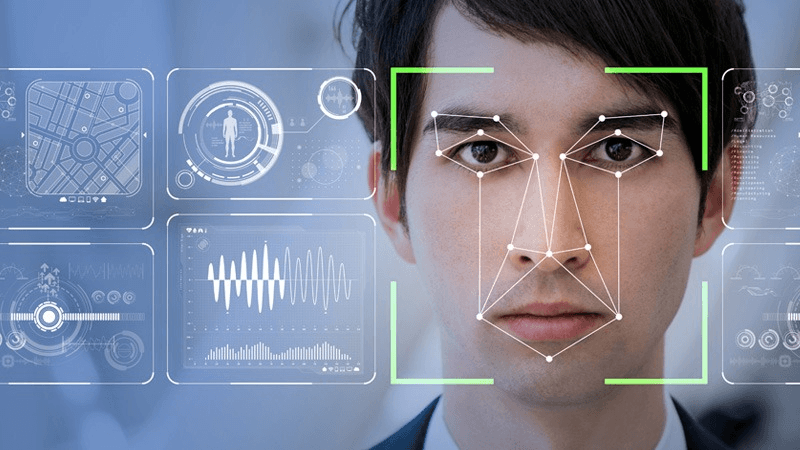

Techniques for Outlining Faces

Face tracking systems use various techniques to locate and outline faces. One classic method is the Viola-Jones algorithm, which combines Haar-like features, an integral image for fast feature evaluation, AdaBoost for selecting the most informative features, and a cascade of classifiers that quickly rejects regions that cannot contain a face. The detector scans an image at many positions and scales, looking for intensity patterns that resemble facial structures such as the eyes, nose, and mouth. Because a detector only finds faces in individual frames, tracking systems run it repeatedly, or pair it with a tracker, to follow a face’s movement through a video.
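The integral image is what makes Haar-like features cheap to evaluate. The following minimal Python sketch assumes a grayscale image stored as a list of pixel rows; the function names and the single two-rectangle feature are illustrative, not taken from any library. A full Viola-Jones detector evaluates many such features, chosen by AdaBoost, at many positions and scales inside a cascade.

```python
from typing import List

Image = List[List[int]]


def integral_image(img: Image) -> List[List[int]]:
    """Return an (h+1) x (w+1) summed-area table with a zero top row and left column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            # Everything above this row plus the running sum of the current row.
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Sum of the w x h rectangle whose top-left pixel is (x, y), using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_vertical_feature(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Hypothetical two-rectangle Haar-like feature: top half minus bottom half.

    A dark band (such as the eyes) above a brighter band (such as the cheeks)
    produces a strongly negative value.
    """
    half = h // 2
    return rect_sum(ii, x, y, w, half) - rect_sum(ii, x, y + half, w, half)
```

Each rectangle sum costs four table lookups regardless of the rectangle’s size, which is why features can be evaluated at any scale in constant time.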

Another technique used for outlining faces is Active Shape Models (ASMs). ASMs use statistical models to represent how the shape of a face varies. These models are built by training on a large dataset of images annotated with facial landmarks: the landmark sets are aligned, and their main modes of variation are captured with principal component analysis. When applied to an image or video frame, an ASM searches near each landmark for supporting image evidence, such as edges, and adjusts the landmark positions so they fit the observed facial features while still forming a plausible face shape.
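As a rough illustration of the first training step, the sketch below normalizes annotated landmark sets for position and size and averages them into a mean shape. It is a simplification under stated assumptions: shapes are lists of (x, y) points with the same landmark order, rotation is not aligned (full generalized Procrustes analysis would also remove it), and the PCA step that yields the modes of variation is omitted. The function names are illustrative.

```python
import math
from typing import List, Tuple

Shape = List[Tuple[float, float]]


def normalize_shape(shape: Shape) -> Shape:
    """Move a landmark set to its centroid and scale it to unit size."""
    n = len(shape)
    cx = sum(x for x, _ in shape) / n
    cy = sum(y for _, y in shape) / n
    centered = [(x - cx, y - cy) for x, y in shape]
    scale = math.sqrt(sum(x * x + y * y for x, y in centered))
    return [(x / scale, y / scale) for x, y in centered]


def mean_shape(shapes: List[Shape]) -> Shape:
    """Average normalized training shapes landmark by landmark."""
    normalized = [normalize_shape(s) for s in shapes]
    count = len(normalized)
    return [
        (
            sum(s[i][0] for s in normalized) / count,
            sum(s[i][1] for s in normalized) / count,
        )
        for i in range(len(normalized[0]))
    ]
```

Two annotations of the same face that differ only in position and size produce identical normalized shapes, so only genuine shape differences remain for the statistical model to learn.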

Detecting Faces with Advanced Algorithms

Advanced algorithms play a crucial role in detecting and matching faces within images or video streams. The Scale-Invariant Feature Transform (SIFT) identifies distinctive keypoints in an image that remain stable under changes in scale and rotation. Descriptors computed around these keypoints can serve as reference points for matching against a database of known facial features, although SIFT is a general-purpose local feature method rather than a dedicated face detector.
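When descriptors are matched against a database, a common safeguard is Lowe’s ratio test: accept a match only if the nearest neighbour is clearly closer than the second-nearest. This pure-Python sketch uses short toy vectors in place of real 128-dimensional SIFT descriptors, and the 0.75 threshold is an assumed, commonly used value rather than a fixed rule.

```python
import math
from typing import List, Sequence, Tuple


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def ratio_test_matches(
    query: List[Sequence[float]],
    reference: List[Sequence[float]],
    ratio: float = 0.75,
) -> List[Tuple[int, int]]:
    """Return (query_index, reference_index) pairs that pass the ratio test."""
    matches = []
    for qi, q in enumerate(query):
        # Brute-force distances to every reference descriptor, nearest first.
        dists = sorted((euclidean(q, r), ri) for ri, r in enumerate(reference))
        if len(dists) < 2:
            continue
        (d1, best), (d2, _) = dists[0], dists[1]
        if d1 < ratio * d2:
            matches.append((qi, best))
    return matches
```

Ambiguous descriptors, where several reference entries are about equally close, are discarded instead of being matched to an arbitrary candidate.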

Another powerful approach to face detection is the convolutional neural network (CNN). CNNs are deep learning models that excel at recognizing complex patterns within images. They consist of multiple layers that progressively learn hierarchical representations of visual data. When trained on vast datasets containing labeled faces, CNNs can identify and locate faces with remarkable accuracy.
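The core operation in each CNN layer is a sliding dot product between a small kernel and the image. This minimal sketch (plain Python, “valid” padding, stride 1) uses a hand-picked edge kernel for illustration; in a trained face detector the kernel weights are learned from data, and many kernels are stacked across layers.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    out = [[0.0] * ow for _ in range(oh)]
    for y in range(oh):
        for x in range(ow):
            # Dot product of the kernel with the image patch under it.
            out[y][x] = sum(
                image[y + i][x + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
    return out


def relu(feature_map: Matrix) -> Matrix:
    """Element-wise ReLU activation: negative responses become zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]
```

With the kernel `[[-1, 1]]`, the output is nonzero only where brightness increases from left to right, which is the kind of low-level edge response that early CNN layers learn on their own.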

Extracting Features and Measurements

Once a face has been detected, face tracking systems extract various features and measurements to analyze and track its movements. One common representation is based on the Facial Action Coding System (FACS), which breaks facial movement into action units. Each action unit corresponds to the movement of specific facial muscles, and combinations of action units are associated with different expressions. By monitoring changes in these action units over time, face tracking systems can infer an individual’s facial expression and, from it, a likely emotional state.
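To show how action units combine over time, here is a hypothetical rule that flags a smile when AU6 (cheek raiser) and AU12 (lip corner puller), the classic combination for a felt or Duchenne smile, are both active for several consecutive frames. It assumes an upstream model that outputs per-frame AU intensities scaled to [0, 1]; the dictionary format, threshold, and frame count are illustrative choices.

```python
from typing import Dict, List

# FACS numbering: AU6 = cheek raiser, AU12 = lip corner puller.
SMILE_AUS = ("AU6", "AU12")


def detect_smile(
    frames: List[Dict[str, float]], threshold: float = 0.5, min_frames: int = 3
) -> bool:
    """Return True if all SMILE_AUS are active for `min_frames` consecutive frames."""
    run = 0
    for intensities in frames:
        if all(intensities.get(au, 0.0) >= threshold for au in SMILE_AUS):
            run += 1
            if run >= min_frames:
                return True
        else:
            run = 0  # any inactive frame breaks the streak
    return False
```

Requiring several consecutive frames filters out single-frame noise from the AU estimator.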

Face tracking systems often extract geometric measurements such as facial landmarks and head pose. Facial landmarks are key points on the face, including the corners of the eyes, mouth, and nose. By tracking these landmarks over time, systems can estimate facial movements and expressions accurately. Head pose estimation involves determining the position and orientation of a person’s head in three-dimensional space. This information is crucial for applications like virtual reality or augmented reality, where accurate head tracking is essential for a realistic user experience.
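Head pose estimators often produce a 3x3 rotation matrix, for example by fitting 2D landmarks to a 3D face model with OpenCV’s solvePnP and converting the result with cv2.Rodrigues. The sketch below turns such a matrix into pitch, yaw, and roll angles. It assumes the convention R = Rz(roll) @ Ry(yaw) @ Rx(pitch), with yaw about the vertical axis; other libraries use different axis orders and signs, so check yours before reusing it. The helper names are illustrative.

```python
import math
from typing import List, Tuple

Matrix3 = List[List[float]]


def rotation_to_euler(R: Matrix3) -> Tuple[float, float, float]:
    """Return (pitch, yaw, roll) in degrees for R = Rz(roll) @ Ry(yaw) @ Rx(pitch).

    Near yaw = +/-90 degrees the decomposition is degenerate (gimbal lock);
    that case is not handled here.
    """
    yaw = math.atan2(-R[2][0], math.hypot(R[0][0], R[1][0]))
    pitch = math.atan2(R[2][1], R[2][2])
    roll = math.atan2(R[1][0], R[0][0])
    return tuple(math.degrees(a) for a in (pitch, yaw, roll))


def yaw_matrix(deg: float) -> Matrix3:
    """Rotation about the vertical (y) axis, i.e. turning the head left or right."""
    t = math.radians(deg)
    return [
        [math.cos(t), 0.0, math.sin(t)],
        [0.0, 1.0, 0.0],
        [-math.sin(t), 0.0, math.cos(t)],
    ]
```

A head turned 30 degrees to the side comes back as a yaw of 30 degrees with zero pitch and roll.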

Software Tools and Implementation

Getting Started with Tracking Software

Getting started with the right software is essential. There are several options available that can help you achieve accurate and reliable face tracking results. One popular choice is OpenCV, an open-source computer vision library that provides various tools for image processing and object detection.

OpenCV offers a broad set of functions for face tracking. It includes Haar cascade and deep-learning-based face detectors for images and video streams, facial landmark fitting in its contrib modules, and functions such as solvePnP that can be used to estimate head pose from landmarks. With its extensive documentation and active community support, OpenCV makes it easy for developers of all skill levels to get started with face tracking.
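OpenCV’s detectors, such as CascadeClassifier.detectMultiScale, return a list of (x, y, w, h) rectangles for each frame; linking those rectangles into persistent face tracks is up to the application. A minimal tracking-by-detection sketch, assuming greedy matching on intersection-over-union (IoU), might look like this. The IoUTracker class and its 0.3 threshold are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h), the rectangle format OpenCV detectors use


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    ix = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    iy = max(0, min(ay + ah, by + bh) - max(ay, by))
    inter = ix * iy
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


class IoUTracker:
    """Greedy tracking-by-detection: a face keeps its ID while its box overlaps."""

    def __init__(self, min_iou: float = 0.3):
        self.min_iou = min_iou
        self.tracks: Dict[int, Box] = {}
        self._next_id = 0

    def update(self, detections: List[Box]) -> Dict[int, Box]:
        unmatched = dict(self.tracks)
        updated: Dict[int, Box] = {}
        for det in detections:
            best_id: Optional[int] = None
            best_iou = self.min_iou
            for tid, box in unmatched.items():
                score = iou(det, box)
                if score >= best_iou:
                    best_id, best_iou = tid, score
            if best_id is None:
                best_id = self._next_id  # no overlapping track: start a new one
                self._next_id += 1
            else:
                del unmatched[best_id]  # each track can be claimed only once
            updated[best_id] = det
        self.tracks = updated  # tracks with no match in this frame are dropped
        return updated
```

Greedy IoU matching is simple and fast, but it can swap or lose identities when faces cross or a detection is missed for a frame; production trackers usually add a motion model and optimal assignment.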

Another powerful tool for face tracking is dlib, a C++ library with Python bindings that provides machine learning algorithms and tools for face detection, recognition, and shape prediction. Dlib’s facial landmark detector, which implements an ensemble of regression trees, is widely used for tracking facial features in real-time applications. It accurately locates key points on the face such as the eye corners, nose, mouth, and jawline.
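dlib’s widely distributed 68-point model follows the iBUG 300-W markup, where fixed index ranges correspond to facial regions. In Python you would typically read the points from the shape returned by a dlib.shape_predictor via shape.part(i).x and shape.part(i).y; the sketch below assumes they have already been collected into a list of (x, y) tuples. The region names and helper functions are illustrative, and “right” and “left” refer to the subject’s own sides.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]

# Index ranges of the 68-point iBUG 300-W markup (end index exclusive).
REGIONS_68: Dict[str, range] = {
    "jaw": range(0, 17),
    "right_eyebrow": range(17, 22),
    "left_eyebrow": range(22, 27),
    "nose": range(27, 36),
    "right_eye": range(36, 42),
    "left_eye": range(42, 48),
    "mouth": range(48, 68),
}


def region_points(landmarks: List[Point], region: str) -> List[Point]:
    """Select the landmarks that belong to one facial region."""
    return [landmarks[i] for i in REGIONS_68[region]]


def centroid(points: List[Point]) -> Point:
    """Average position of a group of landmarks, e.g. an eye center."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)
```

Eye centers computed this way are a common starting point for alignment, gaze heuristics, and blink detection.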

Integration in After Effects and OBS

If you’re looking to incorporate face tracking into your creative projects or live streaming sessions, integrating it into popular software like Adobe After Effects or OBS (Open Broadcaster Software) can take your content to the next level.

After Effects offers built-in tracking tools that can be used for various purposes, including face tracking. Its mask tracker includes a face tracking mode that follows the outline and key features of a face, and the resulting tracking data can drive masks or effects, letting you create visual effects that follow the movement of a person’s face in a video clip.

OBS, on the other hand, is primarily used for live streaming but also supports plugins that enable advanced features like face tracking. By installing plugins such as “FaceTrack” or “Facial Animation”, you can enhance your live streams by overlaying virtual elements onto your face or triggering animations based on your facial expressions.

Developer Tools for Building Solutions

For developers looking to build their own face tracking solutions, there are several developer tools available that provide the necessary APIs and libraries.

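One such tool is the Microsoft Azure Face API, which offers a range of facial analysis capabilities, including face detection, landmark detection, and face verification and identification. With its RESTful interface, developers can quickly add face analysis to their applications. Note that in 2022 Microsoft retired the Face API attributes that infer emotional state and identity characteristics such as age, and access to identification features is restricted, so check the current service documentation before relying on a specific feature.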

Another option is Apple’s Vision framework for iOS developers. It includes high-level requests for detecting face rectangles and facial landmarks and for tracking a detected face across video frames, all running on-device with machine learning models. For expressions, ARKit’s face tracking on supported devices provides blend-shape coefficients, allowing developers to create engaging augmented reality experiences or interactive apps.

VIVE Facial Tracker and 3D Pose Analysis

Understanding the VIVE Tracker

The VIVE Facial Tracker is an innovative device that allows for precise tracking of facial movements and expressions in virtual reality (VR) experiences. It is designed to attach to the front of HTC VIVE Pro series headsets, where it sits below the visor and captures the lower face, including the lips, jaw, cheeks, chin, teeth, and tongue. The tracker uses a pair of cameras with infrared illumination to capture even subtle movements, letting users bring their facial expressions into the virtual world for a highly immersive experience.

One of the key features of the VIVE Facial Tracker is its software support. Developers can use HTC’s SDKs, such as the SRanipal SDK with its Unity and Unreal Engine plugins, to integrate facial tracking capabilities into their VR applications. This opens up a wide range of possibilities for creating interactive experiences where users can see their own facial expressions reflected in real time within the virtual environment.

Exploring 3D Head Pose Tracking

In addition to capturing detailed facial expressions, a VIVE headset equipped with the Facial Tracker also provides 3D head pose tracking: the facial tracker captures changes in expression, while the headset’s own tracking system reports head position and rotation. By combining these two streams, developers can create more realistic avatars and characters within VR experiences.

With 3D head pose tracking, users have greater freedom to explore virtual environments naturally. They can look around, tilt their heads, or even lean in closer to objects or other characters within the virtual world. This level of immersion enhances the overall sense of presence and makes interactions feel more intuitive and lifelike.

Extracting Detailed Facial Expressions

The VIVE Facial Tracker goes beyond simple face tracking by offering detailed analysis of facial expressions. Rather than a single expression label, it reports a set of blend-shape weights that describe how strongly individual lower-face movements, such as opening the jaw or stretching the lips, are activated. This allows for accurate representation of expressions such as smiles, pouts, and open-mouthed surprise.

This level of detail enables developers to create realistic characters that can convey complex emotions within VR experiences. Whether it’s a game, training simulation, or social interaction, the ability to accurately capture and reproduce facial expressions adds a new dimension of realism and engagement.
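Expression trackers commonly drive avatars through blend shapes (also called morph targets): each shape stores a sculpted version of the face mesh, and per-frame weights mix them linearly on top of a neutral base. The sketch below assumes the tracker delivers a weight between 0 and 1 for each named shape; the shape names, data layout, and function are hypothetical and not part of any VIVE SDK.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_blend_shapes(
    base: List[Vec3], targets: Dict[str, List[Vec3]], weights: Dict[str, float]
) -> List[Vec3]:
    """Linear blend shapes: v = base + sum_i w_i * (target_i - base)."""
    out = [list(v) for v in base]
    for name, w in weights.items():
        if name not in targets:
            continue  # the avatar rig has no shape for this tracker output
        w = min(max(w, 0.0), 1.0)  # clamp tracker noise into [0, 1]
        for k, (bv, tv) in enumerate(zip(base, targets[name])):
            for axis in range(3):
                out[k][axis] += w * (tv[axis] - bv[axis])
    return [tuple(v) for v in out]
```

Because the deltas add linearly, a half-open jaw combined with a smile is simply the sum of the two weighted offsets, which keeps per-frame evaluation cheap enough for VR frame rates.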

Moreover, the VIVE Facial Tracker provides developers with access to raw data from the tracker’s sensors. This allows for further customization and fine-tuning of facial tracking algorithms to suit specific requirements. Developers can experiment with different parameters and refine their applications to deliver the most accurate and responsive facial tracking experience possible.

Enhancing User Experience with Eye Gaze Tracking

Gaze Tracking Technology

Gaze tracking technology has revolutionized the way we interact with digital devices and applications. By using advanced sensors and algorithms, this technology enables devices to accurately track the movement of our eyes and determine where we are looking on a screen or in a virtual environment.
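Many video-based eye trackers map eye features to screen coordinates through a short calibration, in which the user fixates a few known on-screen targets and a regression is fitted. The sketch below fits a simple affine map for each screen axis by least squares. It assumes each sample already provides a 2D eye feature (such as a normalized pupil offset); real systems often use higher-order polynomials or 3D eye models, and all names here are illustrative.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def solve3(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system with Gaussian elimination and partial pivoting."""
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            factor = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= factor * M[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        x[r] = (M[r][3] - sum(M[r][c] * x[c] for c in range(r + 1, 3))) / M[r][r]
    return x


def fit_affine_gaze_map(features: List[Point], targets: List[Point]) -> List[List[float]]:
    """Least-squares fit of screen = c0 + c1*fx + c2*fy, one coefficient set per axis."""
    rows = [(1.0, fx, fy) for fx, fy in features]
    # Normal equations: (A^T A) c = A^T t
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for axis in range(2):
        atb = [sum(r[i] * t[axis] for r, t in zip(rows, targets)) for i in range(3)]
        coeffs.append(solve3(ata, atb))
    return coeffs


def map_gaze(coeffs: List[List[float]], feature: Point) -> Point:
    """Convert one eye-feature sample to an estimated screen position."""
    fx, fy = feature
    x, y = (c[0] + c[1] * fx + c[2] * fy for c in coeffs)
    return (x, y)
```

A nine-point grid of calibration targets, as many commercial trackers use, gives the fit enough well-spread samples to be stable.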

One of the key benefits of gaze tracking is its potential to enhance user experience. With eye gaze tracking, users can navigate through menus, control interfaces, and interact with content simply by looking at specific elements on the screen. This can reduce reliance on traditional input methods like keyboards or controllers, making interactions more intuitive and natural.
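Selecting something “simply by looking at it” needs a rule that separates glancing from choosing; a common one is dwell time, where an element activates only after the gaze has rested on it for a set period. This sketch assumes gaze samples arrive as timestamped screen coordinates; the DwellSelector class, rectangle layout, and 0.8-second default are hypothetical.

```python
from typing import Dict, Optional, Tuple

Rect = Tuple[float, float, float, float]  # (x, y, w, h) in screen pixels


class DwellSelector:
    """Select a UI element once gaze has rested on it for `dwell_s` seconds."""

    def __init__(self, targets: Dict[str, Rect], dwell_s: float = 0.8):
        self.targets = targets
        self.dwell_s = dwell_s
        self._current: Optional[str] = None
        self._since = 0.0

    def _hit(self, x: float, y: float) -> Optional[str]:
        for name, (rx, ry, rw, rh) in self.targets.items():
            if rx <= x < rx + rw and ry <= y < ry + rh:
                return name
        return None

    def update(self, t: float, x: float, y: float) -> Optional[str]:
        """Feed one gaze sample (timestamp in seconds); return a name when selected."""
        hit = self._hit(x, y)
        if hit != self._current:
            # Gaze moved to a different element (or off all elements): restart the timer.
            self._current, self._since = hit, t
            return None
        if hit is not None and t - self._since >= self.dwell_s:
            self._since = t  # restart so the element is not re-selected on every frame
            return hit
        return None
```

Longer dwell times reduce accidental activations, while shorter ones make interaction faster; accessibility software usually lets users tune this value.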

Eye gaze tracking also allows for personalized experiences. By analyzing where users are looking and how their gaze moves across a screen, applications can adapt their content or interface to suit individual preferences. For example, an augmented reality (AR) app could adjust the placement of virtual objects based on where a user’s attention is focused, creating a more immersive experience tailored to their needs.

Furthermore, gaze tracking technology opens up new possibilities for accessibility. Individuals with physical disabilities or limited mobility can benefit greatly from eye-controlled interfaces. By leveraging eye movements, they can operate devices or interact with digital content without relying on physical gestures or inputs. This inclusivity promotes equal access to technology for all users.

Eye Gaze in VR and AR

In virtual reality (VR) and augmented reality (AR), eye gaze tracking takes user immersion to another level. By precisely measuring eye movements in these immersive environments, developers can create more realistic experiences that respond dynamically to a user’s visual attention.

For instance, in VR gaming scenarios, eye gaze tracking can be used to enhance gameplay mechanics. Imagine playing a first-person shooter game where enemies react differently based on whether you make direct eye contact with them or look away. This level of interaction adds depth and realism to the virtual world.

Eye gaze tracking also plays a crucial role in improving visual comfort in VR and AR through foveated rendering. Because the eye sees sharp detail only in a small central region (the fovea), a renderer that knows where the user is looking can draw that area at full resolution and render peripheral areas at lower resolution. This reduces GPU load, which helps maintain the high, stable frame rates that matter for comfort and for reducing motion sickness during extended VR or AR sessions.
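The decision a foveated renderer makes can be sketched as a function of eccentricity, the angle between a screen region and the gaze point. This illustration assumes a constant pixels-per-degree factor (a small-angle approximation) and hypothetical 5° and 15° thresholds; actual headsets and graphics APIs define their own foveation levels.

```python
import math
from typing import Tuple


def eccentricity_deg(
    pixel: Tuple[float, float], gaze: Tuple[float, float], pixels_per_degree: float
) -> float:
    """Approximate angular distance between a pixel and the gaze point."""
    dx, dy = pixel[0] - gaze[0], pixel[1] - gaze[1]
    return math.hypot(dx, dy) / pixels_per_degree


def shading_rate(ecc_deg: float) -> int:
    """Coarser shading further from the fovea: returns N for one shade per N x N pixels."""
    if ecc_deg < 5.0:
        return 1  # full resolution around the gaze point
    if ecc_deg < 15.0:
        return 2
    return 4  # far periphery
```

Since the gaze point moves every frame, these regions are recomputed continuously, which is why low-latency eye tracking matters for foveated rendering.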

Moreover, eye gaze tracking has implications beyond entertainment. In fields like medical training and therapy, this technology can be used to monitor a trainee’s or patient’s visual attention during simulations or treatments. By analyzing where their gaze is focused, trainers or therapists can provide targeted feedback and interventions to enhance learning outcomes or therapeutic progress.

Advancements in Face Tracking Technology

Unparalleled Tracking Systems

Face tracking technology has seen remarkable advancements in recent years, with unparalleled tracking systems leading the way. These cutting-edge systems utilize sophisticated algorithms and deep learning techniques to accurately track facial movements and expressions in real-time.

One of the key advancements in face tracking technology is the development of robust and precise facial landmark detection algorithms. These algorithms enable the identification and tracking of specific points on a person’s face, such as the corners of their eyes, nose, and mouth. By precisely locating these landmarks, face tracking systems can accurately analyze facial expressions and movements.

Another notable advancement is the integration of 3D modeling techniques into face tracking technology. By creating a three-dimensional model of a person’s face, these systems can capture even subtle changes in facial features from different angles. This allows for more accurate tracking and analysis of facial expressions, enhancing applications such as emotion recognition and virtual reality experiences.
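One widely used way to build such models is the 3D Morphable Model introduced by Blanz and Vetter, which many later systems extend with an expression basis. In the representative formulation below (a sketch, not the method of any particular product), a mean face shape is combined with identity basis shapes s_i and expression basis shapes e_j weighted by coefficients α_i and β_j, and a weak-perspective camera with scale s, rotation R, and translation t projects each vertex S_k to the image. Tracking then means re-fitting these parameters to the observed 2D landmarks in every frame, with a regularization weight λ keeping the face plausible.

```latex
\mathbf{S}(\boldsymbol{\alpha}, \boldsymbol{\beta})
  = \bar{\mathbf{S}} + \sum_{i=1}^{m} \alpha_i \mathbf{s}_i + \sum_{j=1}^{n} \beta_j \mathbf{e}_j

\mathbf{x}_k = s \, \Pi \, \mathbf{R} \, \mathbf{S}_k + \mathbf{t},
\qquad
\Pi = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \end{pmatrix}

\min_{\boldsymbol{\alpha}, \boldsymbol{\beta}, s, \mathbf{R}, \mathbf{t}}
  \sum_{k} \left\lVert \mathbf{x}_k^{\mathrm{obs}} - \mathbf{x}_k \right\rVert^2
  + \lambda \left( \lVert \boldsymbol{\alpha} \rVert^2 + \lVert \boldsymbol{\beta} \rVert^2 \right)
```

Separating identity from expression is what lets a tracker hold a person’s face shape fixed while only the expression coefficients and head pose change from frame to frame.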

Furthermore, advancements in machine learning have played a crucial role in improving the performance of face tracking systems. Machine learning algorithms can be trained on vast amounts of data to recognize patterns and make predictions based on new inputs. This enables face tracking systems to adapt to individual faces, lighting conditions, and environmental factors, resulting in more reliable and robust tracking capabilities.

Maximizing Performance with OpenVINO

To further enhance the performance of face tracking technology, developers have turned to toolkits like OpenVINO (Open Visual Inference & Neural Network Optimization). OpenVINO provides tools for optimizing deep learning models across different hardware platforms, including CPUs and GPUs and, depending on the release, accelerators such as FPGAs (Field-Programmable Gate Arrays) and VPUs (Vision Processing Units).

By leveraging OpenVINO’s optimization capabilities, developers can maximize the efficiency and speed of their face tracking applications. The framework enables models to take full advantage of hardware acceleration while minimizing resource usage.

For instance, OpenVINO allows developers to deploy pre-trained face detection and recognition models onto edge devices, such as embedded computers or IoT (Internet of Things) devices. This enables real-time face tracking without a constant internet connection, making it well suited to applications with strict latency or privacy requirements.

OpenVINO also supports model quantization, which reduces the memory footprint and computational requirements of deep learning models, for example by storing weights as 8-bit integers instead of 32-bit floating-point numbers. This optimization technique allows face tracking systems to run efficiently on resource-constrained devices, usually with only a small loss of accuracy.
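The arithmetic behind 8-bit quantization can be sketched in a few lines. This is not OpenVINO’s API (OpenVINO applies quantization through its own tooling, such as NNCF); it is an illustrative symmetric, per-tensor scheme in which each weight is stored as an integer in [-127, 127] plus one shared floating-point scale. Storing int8 instead of float32 cuts weight memory by a factor of four.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w is approximately scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the stored integers."""
    return [scale * v for v in q]
```

Each dequantized weight differs from the original by at most half a scale step; production tools also calibrate activation ranges on sample data, which this sketch omits.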

In addition to performance optimization, OpenVINO provides developers with a unified development environment that simplifies the deployment of face tracking applications across different platforms. The framework offers a range of pre-built functions and APIs (Application Programming Interfaces) that streamline the integration of face tracking capabilities into various software solutions.

Seamless Integration and Customization Options

Easy Integration Techniques

Integrating face tracking into an application can be seamless and straightforward. Vendors and open-source projects provide user-friendly APIs (Application Programming Interfaces) and SDKs (Software Development Kits) that allow quick implementation without extensive computer vision expertise, so developers can easily incorporate face tracking functionality into their applications.

These integration tools offer a range of features and functionalities, including real-time face detection, landmark tracking, pose estimation, and emotion recognition. With just a few lines of code, developers can access these capabilities and integrate them seamlessly into their applications. This ease of integration ensures that even those with limited programming experience can leverage the power of face tracking technology.

Furthermore, these integration tools are available for popular programming languages such as Java, Python, and C++, making them accessible to a wide range of developers. Whether you’re creating a mobile app or a web-based solution, you can easily integrate face tracking technology to enhance your application’s capabilities.

Customization for Diverse Applications

One of the key advantages of modern face tracking technology is its ability to be customized for diverse applications. Whether you’re developing an augmented reality game or a security system, customization options allow you to tailor the technology to meet your specific needs.

For instance, in gaming applications, developers can utilize face tracking technology to create interactive experiences where users’ facial expressions control characters or trigger certain actions within the game. This level of customization adds depth and immersion to gameplay.

In industries such as healthcare and retail, customization options enable the development of innovative solutions. For example, in healthcare settings, facial expression analysis can help estimate patients’ pain levels or emotional states during medical procedures or therapy sessions. In retail environments, facial analysis algorithms can provide insights into customer demographics and preferences for targeted marketing campaigns.

Developers also have the flexibility to customize visual elements such as overlays, filters, and effects to enhance the user experience. This customization allows for branding opportunities and ensures that the face tracking technology seamlessly integrates with the overall design of the application.

Privacy and Robustness in Face Tracking

Adopting a Privacy-First Approach

Privacy is a significant concern in face tracking. As facial analysis and recognition technologies continue to evolve, it is crucial for developers and organizations to prioritize the protection of individuals’ personal information. By adopting a privacy-first approach, face tracking systems can ensure that user data is handled responsibly and securely.

One way to address privacy concerns in face tracking is by implementing strict data protection measures. This includes obtaining informed consent from users before collecting their facial data and ensuring that the collected data is stored securely with proper encryption protocols. Implementing anonymization techniques can further protect individual identities by removing personally identifiable information from the tracked data.
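Data minimization and pseudonymization can be applied at the point where tracking results are logged. The sketch below keeps only an allow-listed set of derived measurements, discards raw images and landmarks, and replaces the user ID with an HMAC-SHA256 token. The field names and allow-list are hypothetical. Keyed hashing is pseudonymization rather than full anonymization: anyone holding the key can re-link records, so the key must be protected and stored separately.

```python
import hashlib
import hmac
from typing import Dict

# Fields a hypothetical analytics pipeline is allowed to keep.
ALLOWED_FIELDS = {"timestamp", "head_yaw_deg", "blink"}


def pseudonymize_record(record: Dict[str, object], secret_key: bytes) -> Dict[str, object]:
    """Drop raw face data and replace the user ID with a keyed hash."""
    token = hmac.new(
        secret_key, str(record["user_id"]).encode("utf-8"), hashlib.sha256
    ).hexdigest()
    # Keep only allow-listed, derived measurements; images and landmarks are dropped.
    minimized = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    minimized["subject"] = token
    return minimized
```

The same user always maps to the same token under one key, so longitudinal analysis still works without storing the original identifier.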

Another important aspect of a privacy-first approach is transparency. Users should have clear visibility into how their facial data will be used and who will have access to it. Providing detailed explanations about the purpose of face tracking technology and offering options for users to control their data can help build trust between users and developers.

Furthermore, incorporating privacy-by-design principles into the development process can greatly enhance user privacy. This involves integrating privacy features into the system’s architecture from its initial design stages rather than as an afterthought. By embedding privacy controls directly into the system’s framework, developers can ensure that user data remains protected throughout its lifecycle.

Ensuring System Robustness

In addition to prioritizing privacy, ensuring system robustness is another critical aspect of face tracking technology. A robust system should be able to accurately track faces across different scenarios while maintaining optimal performance.

To achieve this level of robustness, developers employ various techniques such as machine learning algorithms and computer vision technologies. These technologies enable systems to learn from large datasets, improving their ability to recognize faces under different lighting conditions, angles, or occlusions.

Moreover, continuous testing and validation are essential for maintaining system robustness. By subjecting face tracking algorithms to rigorous testing scenarios, developers can identify and address any potential weaknesses or limitations. This iterative process allows for ongoing improvements to the system’s performance and accuracy.
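Landmark accuracy is often tested with the normalized mean error (NME): the average distance between predicted and ground-truth landmarks divided by a face-size reference, commonly the inter-ocular distance. This sketch assumes matching landmark order and takes the indices of the two reference eye points as parameters (for example, the outer eye corners at indices 36 and 45 in a 68-point markup). Normalization choices vary between benchmarks, so compare numbers only under the same convention.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def nme(pred: List[Point], truth: List[Point], left_eye_idx: int, right_eye_idx: int) -> float:
    """Normalized mean error: mean point-to-point distance / inter-ocular distance."""
    iod = math.dist(truth[left_eye_idx], truth[right_eye_idx])
    err = sum(math.dist(p, t) for p, t in zip(pred, truth)) / len(truth)
    return err / iod
```

Tracking NME on a fixed test set across releases is a simple way to catch regressions, for example after retraining on new lighting conditions.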

Another factor in ensuring system robustness is adaptability. Face tracking technology should be able to adapt to changes in the environment or user conditions. For example, if a user wears glasses or changes their hairstyle, the system should still be able to accurately track their face without compromising performance.

To enhance robustness further, developers can also leverage real-time feedback mechanisms. These mechanisms enable the system to detect and correct errors promptly, ensuring accurate face tracking even in challenging situations.

Pioneering Automotive AI and Face Tracking

Automotive Applications of Face Tracking

Face tracking technology is revolutionizing the automotive industry, offering a range of exciting applications. One such application is driver monitoring systems (DMS), which utilize face tracking algorithms to detect and analyze driver behavior in real-time. By monitoring factors like head position, eye gaze, and facial expressions, DMS can assess driver drowsiness or distraction levels, enhancing safety on the road. This technology has the potential to prevent accidents by alerting drivers when they are not paying adequate attention or becoming fatigued.
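One well-known drowsiness cue from facial landmarks is the eye aspect ratio (EAR) of Soukupová and Čech, which drops toward zero as the eye closes, combined with a PERCLOS-style measure of how often the eyes are closed over a recent window. The sketch below assumes six landmarks per eye ordered p1 to p6 around the eye, with the corners at p1 and p4; the ClosureMonitor class, the 0.2 EAR threshold, the 300-frame window (about 10 seconds at 30 fps), and the 15% alert level are hypothetical values that a real DMS would tune and validate.

```python
import math
from collections import deque
from typing import Deque, Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|) for six eye landmarks."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    return vertical / (2.0 * math.dist(p1, p4))


class ClosureMonitor:
    """Fraction of recent frames with closed eyes, used as a drowsiness cue."""

    def __init__(self, window: int = 300, ear_closed: float = 0.2, alert_fraction: float = 0.15):
        self.samples: Deque[bool] = deque(maxlen=window)
        self.ear_closed = ear_closed
        self.alert_fraction = alert_fraction

    def update(self, ear: float) -> bool:
        """Add one frame's EAR; return True once the window is full and too often closed."""
        self.samples.append(ear < self.ear_closed)
        closed_fraction = sum(self.samples) / len(self.samples)
        return len(self.samples) == self.samples.maxlen and closed_fraction >= self.alert_fraction
```

Measuring closure over a window, rather than reacting to single frames, keeps normal blinks from triggering alerts while still catching prolonged or frequent eye closure.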

Another significant application of face tracking in the automotive sector is personalized user experiences. Advanced infotainment systems can use facial recognition to identify individual drivers and passengers, automatically adjusting settings such as seat position, temperature, and preferred music playlists. This level of personalization enhances comfort and convenience for everyone in the vehicle.

Furthermore, face tracking technology can be utilized for access control purposes in vehicles. Facial recognition systems integrated into car doors can grant access only to authorized individuals based on their unique facial features. This eliminates the need for physical keys or key fobs, providing a more secure and convenient solution.

Current Trends and Use Cases

In recent years, there has been a surge in interest and development of face tracking technologies within the automotive industry. Automakers are increasingly integrating these capabilities into their vehicles to enhance safety features and provide personalized experiences.

One notable trend is the integration of artificial intelligence (AI) with face tracking algorithms. AI-powered systems can accurately detect various facial expressions like happiness, sadness, anger, or surprise. This information can be utilized to adapt vehicle settings or trigger appropriate responses from advanced driver assistance systems (ADAS). For example, if a driver displays signs of fatigue or frustration, ADAS could respond by playing calming music or suggesting a break.

Another emerging trend is the integration of face tracking with augmented reality (AR) technologies within vehicle head-up displays (HUDs). By tracking the driver’s gaze and head movements, HUDs can overlay relevant information, such as navigation instructions or hazard warnings, directly onto the driver’s field of view. This integration improves situational awareness and limits distraction, because drivers need to look away from the road less often.

Beyond these trends, face tracking technology is also being explored for various other use cases in the automotive industry. For instance, it can be utilized for emotion-based marketing research within vehicles to gauge user responses to different advertisements or product features. Automakers are exploring ways to leverage face tracking algorithms for biometric identification purposes, enhancing vehicle security.

Community and Resources for Developers

Connecting with the Developer Community

Developing in the field of face tracking can be an exciting and challenging endeavor. Thankfully, there is a vibrant developer community that you can connect with to share knowledge, seek guidance, and collaborate on projects.

One way to connect with the developer community is through online forums and discussion boards dedicated to face tracking technology. These platforms provide a space where developers can ask questions, share their experiences, and learn from others who are working on similar projects. Popular sites like Stack Overflow and Reddit have dedicated tags and communities for AI and computer vision topics where you can find valuable insights from experts in the field.

Another great way to engage with the developer community is by attending conferences, meetups, or workshops focused on AI and computer vision. These events offer opportunities to network with like-minded individuals, attend informative sessions led by industry professionals, and even participate in hackathons or coding challenges. By immersing yourself in these environments, you’ll gain exposure to new ideas, stay up-to-date with the latest advancements in face tracking technology, and potentially find collaborators for your own projects.

Accessible Programming Resources

Having access to reliable programming resources is crucial. Fortunately, there are numerous accessible resources available that cater specifically to developers interested in this field.

Online tutorials and courses provide step-by-step guidance on how to implement face tracking algorithms using popular programming languages such as Python or C++. These resources often include code examples that you can study and modify according to your specific needs. Websites like Coursera or Udemy offer courses taught by industry professionals that cover various aspects of AI and computer vision technologies.

Many software development kits (SDKs) provide pre-built libraries and APIs that simplify the process of integrating face tracking functionality into your applications. These SDKs often come with comprehensive documentation that guides developers through the installation process as well as the usage of different features. Some libraries commonly used for face tracking include OpenCV, dlib, and TensorFlow.

Moreover, online communities and platforms dedicated to sharing code snippets and open-source projects can be valuable resources for developers. Websites like GitHub or GitLab host repositories where developers can contribute to existing projects or showcase their own work. By exploring these repositories, you may find ready-to-use solutions or gain inspiration for your own face tracking projects.

Conclusion

So there you have it, a comprehensive exploration of face tracking technology and its applications. From understanding the mechanics of face tracking systems to discussing the advancements in this field, we have delved into the various aspects that make face tracking an exciting and promising technology. By seamlessly integrating with software tools and providing customization options, face tracking systems have the potential to revolutionize user experiences in fields like gaming, automotive AI, and more.

As you’ve learned, the possibilities with face tracking are vast and ever-expanding. Whether you’re a developer looking to enhance your projects or a user interested in exploring new frontiers of interaction, this technology offers immense potential. So why not dive deeper into the world of face tracking? Explore the resources available for developers, join vibrant communities, and stay updated on the latest advancements. Embrace this cutting-edge technology and unlock new possibilities for yourself and others.

Frequently Asked Questions


What is face tracking?

Face tracking is a technology that enables the real-time detection and tracking of human faces in images or videos. It uses algorithms to analyze facial features and movements, allowing for various applications such as augmented reality, biometrics, and user experience enhancement.

How do face tracking systems work?

Face tracking systems utilize computer vision techniques to identify key facial landmarks and track their movement over time. These landmarks include features like the eyes, nose, mouth, and contours of the face. By continuously analyzing these landmarks, the system can accurately track and predict facial movements.

What are some software tools used for face tracking implementation?

There are several software tools available for implementing face tracking. Some popular options include OpenCV, dlib, TensorFlow, and FaceTrackAPI. These tools provide libraries and APIs that developers can use to integrate face tracking functionality into their applications.

How does eye gaze tracking enhance user experience?

Eye gaze tracking allows devices to determine where a user is looking on a screen or in a virtual environment. This information can be used to create more immersive experiences by adjusting content based on gaze direction or enabling hands-free interaction. It enhances user experience by providing intuitive control and personalization.

What advancements have been made in face tracking technology?

Face tracking has seen significant advancements in recent years. These include improved accuracy through deep learning algorithms, real-time performance on mobile devices, 3D pose estimation for more realistic rendering, integration with other technologies like eye gaze tracking, and enhanced privacy measures to protect user data.