As biometric data becomes more widely used, the need for robust security measures grows with it. That’s where anti-spoofing technology comes into play. Designed to prevent unauthorized access to biometric systems, this technology detects and blocks spoofing attempts, in which an attacker presents a fake or replayed biometric sample. Understanding the fundamentals of anti-spoofing technology is crucial for ensuring the security of sensitive biometric data.

The importance of anti-spoofing technology in maintaining overall security cannot be overstated. It plays a vital role in safeguarding against fraudulent activities and identity theft, protecting individuals’ personal information from falling into the wrong hands. By securely storing and encrypting biometric data and employing strong authentication protocols, organizations can add an extra layer of protection to their systems. Regularly updating these security measures is necessary to stay one step ahead of evolving spoofing techniques.

Spoofing Threats Overview

Types of Spoofing

Spoofing is a deceptive technique used by cybercriminals to gain unauthorized access to systems and networks. There are various types of spoofing, each requiring specific anti-spoofing techniques for effective prevention.

Voice spoofing involves impersonating someone’s voice to trick voice recognition systems. By mimicking the unique vocal characteristics of an individual, attackers can bypass security measures that rely on voice authentication. Anti-spoofing technology in this case could include analyzing additional factors like speech patterns or using advanced algorithms to detect anomalies in the voice signal.

Face spoofing is another common type where fraudsters use counterfeit images or videos to deceive facial recognition systems. By presenting a fake face, they attempt to gain access to secure areas or unlock devices protected by facial recognition. To counter this threat, anti-spoofing techniques can involve liveness detection methods such as checking for eye movement or analyzing depth information from 3D cameras.

Fingerprint spoofing targets biometric fingerprint scanners by creating artificial fingerprints to fool the system into granting unauthorized access. Anti-spoofing measures in this case may include analyzing sweat pores or detecting temperature variations on the finger surface, ensuring that only real human fingerprints are recognized.

Similarly, iris spoofing involves fabricating iris patterns using high-resolution prints or contact lenses with printed irises. This type of attack aims to deceive iris recognition systems and gain illicit entry into secure locations or devices. Effective anti-spoofing technology can pair iris recognition with liveness checks such as eye movement analysis or pupil dilation checks, which a printed pattern cannot reproduce.

Understanding the characteristics and vulnerabilities associated with each type of spoofing is crucial for developing targeted countermeasures against these threats. Implementers must stay vigilant and continually update their anti-spoofing techniques as attackers evolve their methods.

Spoofing Implications

The implications of successful spoofing attacks can be severe. Once an attacker gains unauthorized access to a system or network, they can exploit sensitive information, compromise data integrity, and potentially cause financial losses and reputational damage.

In the case of biometric data breaches resulting from spoofing attacks, individuals’ personal information may be compromised. This can lead to identity theft, fraudulent activities, and significant financial repercussions for both individuals and organizations.

Implementing anti-spoofing technology is crucial for mitigating these risks and ensuring the integrity of systems that rely on biometric authentication. Robust anti-spoofing measures strengthen an organization’s security posture and protect against potential spoofing threats.

Network Security Measures

ARP Vulnerabilities

Address Resolution Protocol (ARP) vulnerabilities pose a significant threat to network security. ARP has no built-in authentication, so attackers can send forged ARP replies that associate their own MAC address with another device’s IP address and thereby impersonate legitimate devices on the network. To combat this, it is crucial to implement effective ARP spoofing detection mechanisms.

With such detection in place, network administrators can identify and prevent these types of attacks. The mechanisms monitor ARP requests and replies for suspicious activity. Typical warning signs are an IP address whose MAC address suddenly changes, a single MAC address claiming many IP addresses, or IP address conflicts. When such an anomaly is detected, immediate action can be taken to mitigate the attack.

Regularly monitoring network traffic is also essential in detecting suspicious ARP activities. By analyzing the patterns and behavior of ARP requests and responses, administrators can identify any anomalies or signs of spoofing attempts. This proactive approach allows for swift response and mitigation before any significant damage occurs.
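As a minimal sketch of the kind of check described above, the following Python snippet flags IP addresses that have been claimed by more than one MAC address. It assumes the (IP, MAC) pairs have already been extracted from captured ARP replies; the capture step, the function name, and the sample addresses are illustrative assumptions, not part of any particular product.

```python
from collections import defaultdict


def find_arp_anomalies(observations):
    """Return a dict mapping each IP claimed by more than one MAC to those MACs.

    `observations` is an iterable of (ip, mac) tuples, for example parsed
    from ARP replies seen on the network. Capturing them is not shown here.
    """
    macs_by_ip = defaultdict(set)
    for ip, mac in observations:
        # Normalize case so "AA:BB" and "aa:bb" count as the same address.
        macs_by_ip[ip].add(mac.lower())
    return {ip: sorted(macs) for ip, macs in macs_by_ip.items() if len(macs) > 1}


# Hypothetical sample data: the gateway IP is claimed by two different MACs.
sample = [
    ("192.168.1.1", "aa:bb:cc:00:00:01"),
    ("192.168.1.20", "aa:bb:cc:00:00:14"),
    ("192.168.1.1", "de:ad:be:ef:00:99"),
]
print(find_arp_anomalies(sample))
```

A conflict reported this way is only a signal for investigation: legitimate events such as a replaced network card can also change an IP-to-MAC mapping.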

UDP Vulnerabilities

User Datagram Protocol (UDP) vulnerabilities also present a risk. UDP is a connectionless protocol: unlike TCP, it has no handshake that confirms the sender can actually receive traffic at the source address it claims. Attackers can exploit this by forging source IP addresses in UDP packets, making it difficult to trace the origin of malicious activities.

To enhance protection against UDP-based spoofing attacks, UDP source port randomization is a useful measure. This technique assigns random source ports to outgoing UDP packets instead of predictable values. An attacker who cannot observe the traffic must then guess the port before a forged reply is accepted, which reduces the chance of a successful spoofing attack.
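The idea of source port randomization can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than a hardened implementation: the port range used is the IANA dynamic range (49152 to 65535), and the function names are hypothetical.

```python
import secrets
import socket

# Assumed range: the IANA dynamic/private port range.
PORT_MIN, PORT_MAX = 49152, 65535


def random_source_port():
    """Pick a source port uniformly at random using a cryptographically secure RNG."""
    return PORT_MIN + secrets.randbelow(PORT_MAX - PORT_MIN + 1)


def open_udp_socket(max_attempts=10):
    """Bind a UDP socket to a randomly chosen source port, retrying if it is in use."""
    for _ in range(max_attempts):
        sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        try:
            sock.bind(("", random_source_port()))
            return sock
        except OSError:
            # Port already taken (or otherwise unavailable): try another one.
            sock.close()
    raise RuntimeError("could not bind to a random source port")
```

Using `secrets` rather than `random` matters here, because the protection depends on the port being unpredictable to an attacker.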

Regularly updating software patches helps address known UDP vulnerabilities. Software vendors often release updates that include fixes for identified security flaws in protocols like UDP. Keeping systems up-to-date ensures that any known vulnerabilities are patched promptly, minimizing the risk of exploitation by attackers.

Ingress Filtering

Ingress filtering is a powerful technique used to prevent IP spoofing attacks. It involves filtering incoming network traffic based on the source IP addresses of packets. By implementing strict ingress filtering policies, organizations can significantly mitigate the risk of spoofing.

Ingress filtering works by comparing the source IP address of an incoming packet with a list of allowed or expected IP addresses for that particular network segment. If the source IP address does not match any valid addresses, the packet is dropped or discarded, preventing potential spoofing attempts from reaching their intended targets.
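The comparison described above can be sketched with Python’s standard `ipaddress` module. The prefixes below are documentation-only example ranges and the function name is hypothetical; a real deployment would enforce this on routers or firewalls rather than in application code.

```python
import ipaddress

# Hypothetical prefixes that are expected to originate traffic on this segment.
ALLOWED_PREFIXES = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.0/25"),
]


def permit_packet(source_ip, allowed=ALLOWED_PREFIXES):
    """Return True if the packet's source address falls inside an allowed prefix."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in network for network in allowed)


print(permit_packet("203.0.113.7"))     # inside 203.0.113.0/24
print(permit_packet("198.51.100.200"))  # outside 198.51.100.0/25
```

Packets for which `permit_packet` returns False would be dropped, which is the behavior the paragraph above describes.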

Wireless Network Protections

Attack Prevention

Implementing multi-factor authentication adds an extra layer of protection against spoofing attacks. By requiring users to provide multiple forms of identification, such as a password and a unique code sent to their mobile device, the risk of unauthorized access is significantly reduced. This makes it much harder for attackers to impersonate legitimate users and gain access to sensitive information or network resources.
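As a rough illustration of the second factor mentioned above, the sketch below generates a short numeric one-time code and checks a submitted value against it. Delivery to the user’s device, expiry, and attempt limits are deliberately left out, and the function names are assumptions made for this example.

```python
import hmac
import secrets


def new_one_time_code(digits=6):
    """Generate a zero-padded numeric code from a cryptographically secure RNG."""
    return f"{secrets.randbelow(10 ** digits):0{digits}d}"


def code_matches(expected, submitted):
    """Compare the two codes in constant time to avoid leaking information via timing."""
    return hmac.compare_digest(expected.encode(), submitted.encode())


code = new_one_time_code()
print(len(code), code_matches(code, code))
```

In practice the code would be stored server-side with a short lifetime and invalidated after one successful use.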

Regularly educating users about potential risks and best practices helps prevent successful attacks. By raising awareness about the dangers of spoofing and providing guidance on how to identify and respond to suspicious activity, organizations can empower their employees to be proactive in protecting themselves and the network. This includes advising users not to click on suspicious links or download attachments from unknown sources, as these are common tactics used by attackers.

Employing intrusion detection systems aids in detecting and blocking spoofing attempts. These systems monitor network traffic in real-time, analyzing patterns and behaviors that may indicate a spoofing attack. When a potential threat is detected, the system can automatically block the malicious activity or alert security personnel for further investigation. This proactive approach helps mitigate the impact of spoofing attacks before they can cause significant damage.

Security Enhancements

Continuous monitoring and analysis of system logs help identify potential security vulnerabilities. By regularly reviewing log files generated by routers, firewalls, and other network devices, organizations can detect any unusual or suspicious activities that may indicate a spoofing attempt. Analyzing these logs allows security teams to take immediate action to address any identified weaknesses or vulnerabilities in their anti-spoofing measures.

Regular penetration testing assists in identifying weaknesses in anti-spoofing measures. By simulating real-world attacks on the wireless network infrastructure, organizations can evaluate the effectiveness of their existing defenses against various types of spoofing techniques. Penetration tests provide valuable insights into areas that require improvement or additional safeguards, allowing organizations to strengthen their overall security posture.

Implementing real-time threat intelligence feeds enhances the ability to detect emerging spoofing techniques. By subscribing to reputable threat intelligence services, organizations can receive timely updates about new and evolving threats, including the latest spoofing tactics. This information enables security teams to stay one step ahead of attackers by proactively implementing countermeasures to protect against these emerging threats.

Biometric Anti-Spoofing Essentials

Voice Biometrics

Voice biometrics is a technology that utilizes unique voice characteristics for user identification. By analyzing speech patterns and detecting synthetic voices, anti-spoofing technology adds an extra layer of security to voice biometrics systems. This ensures that the system can differentiate between genuine human voices and artificially generated ones.

To enhance security even further, voice biometrics can be combined with other authentication factors such as passwords or fingerprints. This multi-factor authentication approach strengthens overall security by requiring multiple forms of verification before granting access to sensitive information or systems.

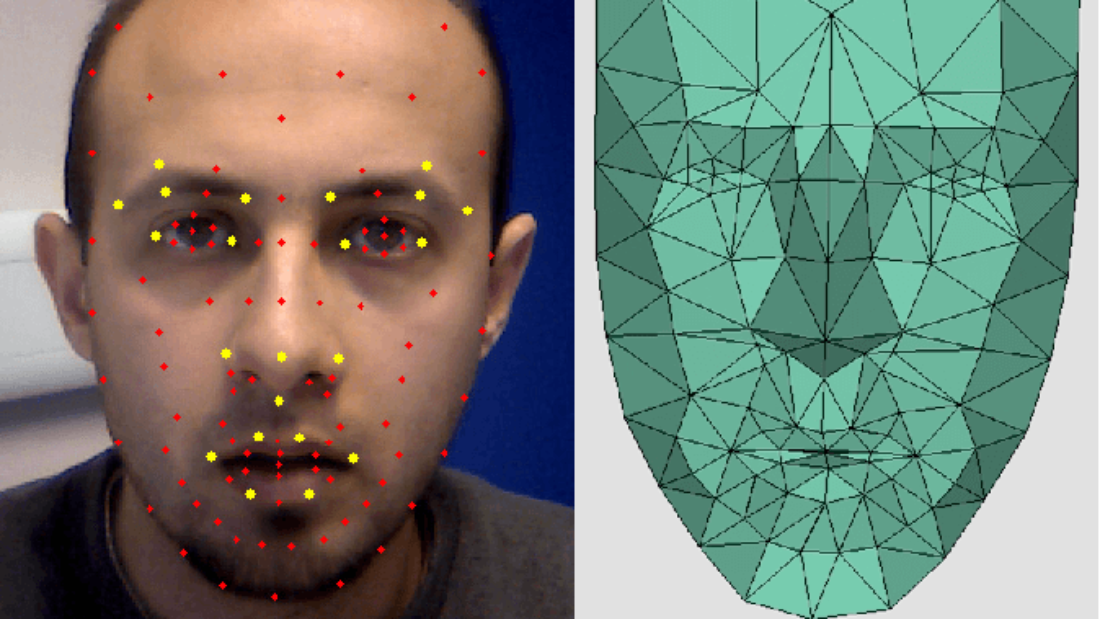

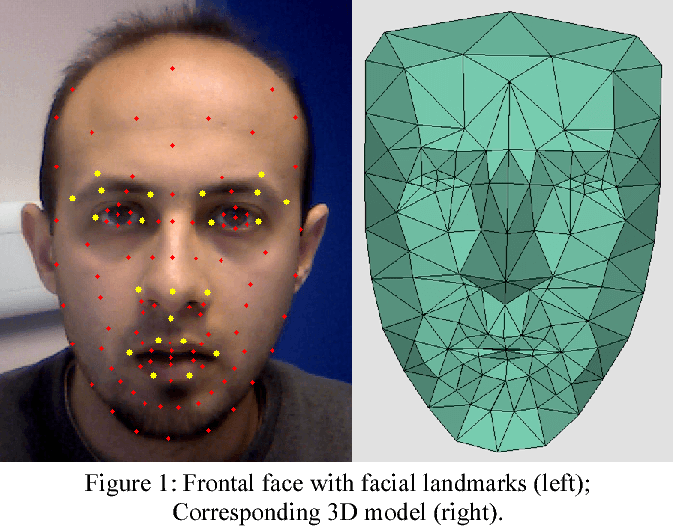

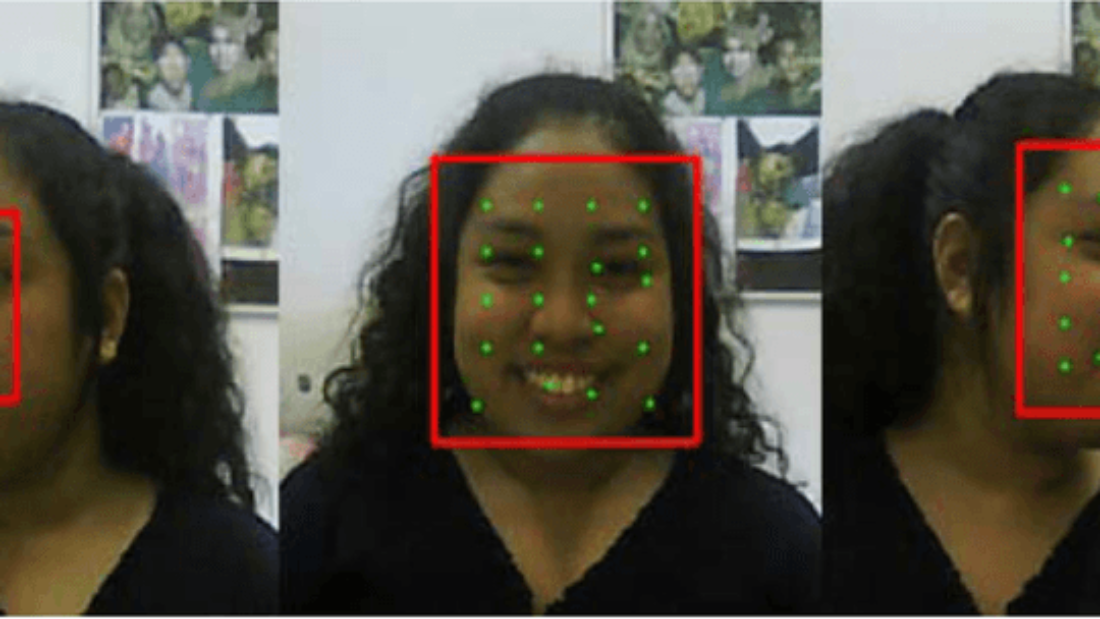

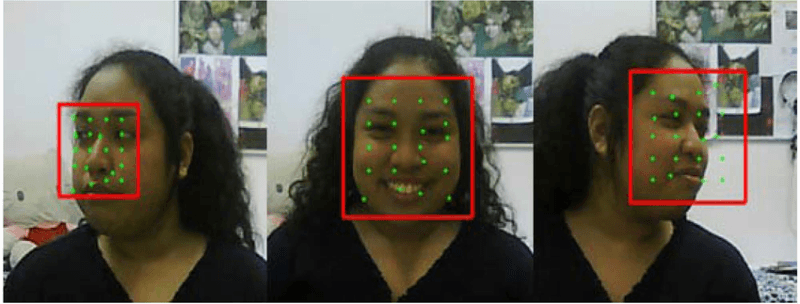

Face Biometrics

Face biometrics rely on facial features for user identification. However, this form of biometric authentication is vulnerable to spoofing attempts using masks, photos, or videos. To counter these threats, anti-spoofing techniques have been developed.

Advanced algorithms are employed to analyze facial movement and depth, allowing the system to distinguish between real faces and spoofed ones. By examining subtle details like blinking patterns and changes in facial expressions, the anti-spoofing technology can identify fraudulent attempts to deceive the system.

In addition to detecting static images or videos used for spoofing purposes, face biometric systems can also utilize liveness detection mechanisms. These mechanisms prompt users to perform specific actions or gestures during the authentication process, ensuring that a live person is present rather than a static image or video recording.

By combining different types of biometric data such as voice and face recognition, organizations can create more robust authentication systems that are resistant to spoofing attacks. The integration of multiple biometric factors enhances security by making it significantly more difficult for malicious actors to bypass these measures.

Biometric anti-spoofing technology plays a crucial role in safeguarding sensitive information and protecting individuals’ identities from fraudulent activities. As technology continues to advance, so do the methods employed by attackers attempting to exploit vulnerabilities in biometric systems. Therefore, ongoing research and development in anti-spoofing techniques are essential to stay one step ahead of potential threats.

Biometric Authentication Assurance

Ensuring Security

Regularly updating anti-spoofing software is crucial to ensure the security of biometric authentication systems. By staying up-to-date with the latest advancements in anti-spoofing technology, organizations can protect themselves against new attack methods. These updates often include improvements in detecting and preventing spoofing attempts, enhancing the overall security of the system.

In addition to software updates, conducting regular security audits is essential. These audits help identify any vulnerabilities in the biometric authentication system that could be exploited by attackers. By proactively assessing and addressing potential weaknesses, organizations can strengthen their defenses and reduce the risk of unauthorized access.

Collaborating with cybersecurity experts can provide valuable insights into enhancing overall security. These experts have a deep understanding of the latest threats and attack techniques, allowing them to offer expert guidance on implementing effective anti-spoofing measures. Their expertise can help organizations stay one step ahead of potential attackers and ensure robust protection for their biometric authentication systems.

Trust Building Role

Effective anti-spoofing measures play a vital role in building trust among users who rely on biometric authentication. Users need assurance that their biometric data is secure and cannot be easily manipulated or spoofed by malicious actors. Implementing robust anti-spoofing technologies helps establish this trust by ensuring the integrity of users’ biometric information.

Establishing a reputation for robust security measures also attracts more users to adopt biometric systems. In an increasingly digital world where data breaches are prevalent, individuals are more cautious about sharing their personal information. By demonstrating a commitment to protecting user data through effective anti-spoofing measures, organizations can instill confidence in potential users and encourage wider adoption of biometric authentication.

Transparency plays a crucial role in trust-building efforts as well. Organizations should communicate openly about the implemented anti-spoofing technologies, explaining how they work and highlighting their effectiveness. This transparency helps users understand the measures in place to protect their biometric data and reinforces their trust in the system.

Anti-Spoofing for Voice Systems

Recognition Technology

Anti-spoofing technology plays a crucial role in biometric recognition systems, ensuring the security and integrity of the authentication process. By incorporating advanced algorithms and techniques, anti-spoofing technology helps differentiate between genuine biometric data and spoofed attempts.

With continuous advancements in anti-spoofing techniques, the accuracy and reliability of recognition technology have significantly improved. These advancements enable biometric systems to detect various types of spoofing attacks effectively. For example, anti-spoofing algorithms can analyze subtle differences in skin texture or facial movement to identify fake face images, or inspect sweat pore detail to identify artificial fingerprints.

The ongoing development of anti-spoofing technology is vital due to the ever-evolving nature of spoofing attacks. Hackers constantly devise new methods to deceive voice recognition systems, making it imperative for researchers and developers to stay one step ahead. This proactive approach ensures that biometric recognition systems remain robust against emerging threats.

Combatting Voice Attacks

Voice-based authentication systems are a common target for spoofers, who try to bypass them with synthetic or pre-recorded audio. To counter such attacks, anti-spoofing measures focus on analyzing various aspects of the voice signal.

One effective method involves examining speech patterns and characteristics unique to an individual’s voiceprint. By comparing these patterns with stored samples, anti-spoofing algorithms can identify discrepancies indicative of a synthetic voice or pre-recorded audio.

Background noise analysis is another technique employed by anti-spoofing technology. Genuine voices often contain subtle variations caused by environmental factors such as room acoustics or background sounds. By scrutinizing these acoustic properties, anti-spoofing algorithms can differentiate between real voices and artificially generated ones.

To further enhance security measures against voice spoofing attacks, liveness detection techniques are implemented. Liveness detection ensures that the captured sample is from a live person rather than a recording or synthetic source. This can be achieved by incorporating challenges that require real-time interaction, such as asking the user to repeat a randomly generated phrase or perform specific actions while speaking.
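The random-phrase challenge described above could be sketched as follows. The word list and function names are hypothetical, and the transcript is assumed to come from a separate speech-recognition step that is not shown; this only illustrates generating an unpredictable phrase and checking the response against it.

```python
import secrets

# Hypothetical word list; a real system would use a much larger vocabulary.
WORDS = ["amber", "river", "candle", "orbit", "meadow", "copper", "violet", "harbor"]


def make_challenge(n_words=4):
    """Build a phrase of randomly chosen words that a recording is unlikely to contain."""
    return " ".join(secrets.choice(WORDS) for _ in range(n_words))


def normalize(text):
    """Lower-case the text and collapse whitespace so minor differences are ignored."""
    return " ".join(text.lower().split())


def response_matches(challenge, transcript):
    """Check whether the transcribed response repeats the challenge phrase."""
    return normalize(challenge) == normalize(transcript)


challenge = make_challenge()
print(challenge)
```

Because the phrase changes on every attempt, a pre-recorded sample of the user’s voice is unlikely to match it. A matching transcript would still need to be combined with the voiceprint check itself.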

Ongoing research in the field of anti-spoofing technology focuses on developing more robust methods for voice biometrics. These advancements aim to strengthen the security of voice recognition systems against evolving spoofing techniques. By continuously refining and improving anti-spoofing algorithms, researchers strive to create highly accurate and reliable solutions that can effectively combat voice attacks.

Face Recognition Technologies

Anti-Spoofing Methods

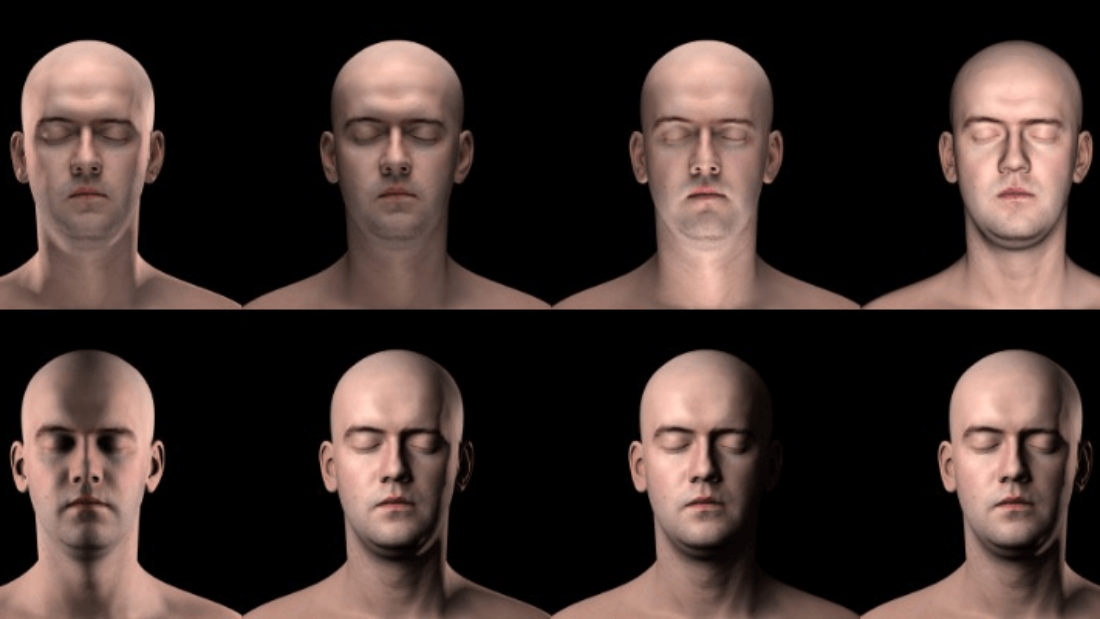

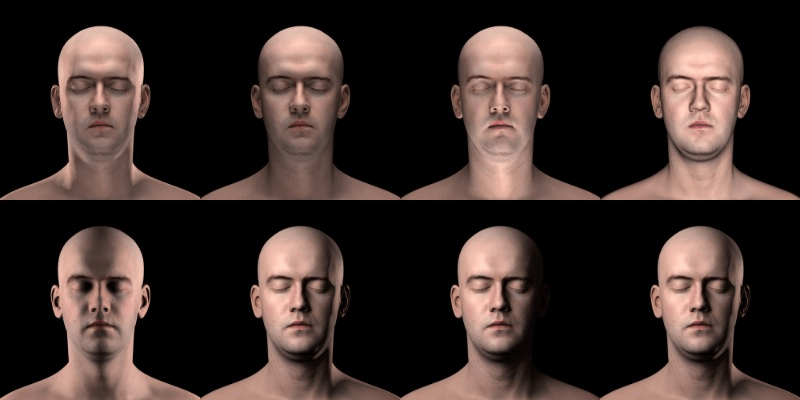

Anti-spoofing methods play a crucial role in ensuring the reliability and security of face recognition technologies. These methods employ various techniques to detect and prevent spoof attacks, where an impostor tries to deceive the system using fake biometric information.

One commonly used anti-spoofing method is feature-based analysis. This approach examines specific facial features such as texture, depth, or motion to distinguish between genuine faces and spoofed ones. By analyzing these features, the system can identify inconsistencies or irregularities that indicate a potential spoof attack.

Another effective method is motion detection. This technique focuses on detecting unnatural movements in front of the camera, which are often associated with spoof attempts. By monitoring the motion patterns during face recognition, the system can differentiate between a live person and a static image or video playback.

Texture analysis is another powerful anti-spoofing technique employed by face recognition systems. It involves analyzing the fine details of facial textures, such as pores or wrinkles, to determine their authenticity. Spoofed images or videos typically lack these intricate details, allowing texture analysis algorithms to flag them as potential spoofs.

To enhance accuracy and robustness, researchers are continuously exploring ways to combine multiple anti-spoofing methods. By leveraging the strengths of different techniques, these hybrid approaches can effectively detect various types of spoof attacks with higher precision.
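One simple way to combine several detectors is weighted score fusion, sketched below. It assumes each method (texture, motion, depth) already produces a liveness score between 0 and 1; the scores, weights, and threshold are made-up values for illustration, and real systems may use learned fusion instead.

```python
def fuse_scores(scores, weights):
    """Return the weighted average of per-method liveness scores (each in 0..1)."""
    total_weight = sum(weights[name] for name in scores)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(scores[name] * weights[name] for name in scores) / total_weight


def is_live(scores, weights, threshold=0.5):
    """Accept the sample as live only if the fused score reaches the threshold."""
    return fuse_scores(scores, weights) >= threshold


# Hypothetical detector outputs: texture and motion look genuine, depth does not.
example_scores = {"texture": 0.9, "motion": 0.8, "depth": 0.2}
example_weights = {"texture": 1.0, "motion": 1.0, "depth": 2.0}
print(fuse_scores(example_scores, example_weights))
```

Giving the depth check a higher weight, as in this example, lets a single strong indicator of a flat image pull the overall score down even when the other methods are fooled.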

Ongoing research in this field aims to develop more sophisticated and efficient anti-spoofing techniques. Researchers are exploring advanced machine learning algorithms, deep neural networks, and artificial intelligence models to improve the detection capabilities of face recognition systems further.

Functionality Measures

While ensuring robust anti-spoofing measures is essential for protecting biometric systems from fraudulent activities, it is equally important not to compromise their usability and functionality. Striking a balance between security measures and user experience is crucial for widespread adoption of face recognition technologies.

Regular testing and user feedback play a vital role in refining anti-spoofing measures without hindering system performance. By continuously evaluating the effectiveness of these methods and incorporating user insights, developers can make necessary adjustments to enhance both security and usability.

Moreover, integrating anti-spoofing technology seamlessly into existing biometric systems is key. This ensures that users can experience a smooth and hassle-free authentication process while maintaining a high level of security against spoof attacks.

To achieve this, developers should consider factors such as processing speed, resource requirements, and compatibility with different hardware devices. By optimizing these aspects, they can ensure that anti-spoofing measures do not introduce significant delays or limitations that hinder the overall functionality of face recognition systems.

Email and Website Spoofing Mitigation

Protecting Websites

Implementing CAPTCHA or reCAPTCHA mechanisms is an effective way to prevent automated spoofing attacks on websites. These mechanisms require users to complete a challenge, such as identifying objects in an image or solving a puzzle, before accessing certain features or submitting forms. By doing so, they can differentiate between human users and bots, significantly reducing the risk of spoofing.

Utilizing TLS encryption (the successor to the now-deprecated SSL) is another crucial step in securing data transmission between users and websites. This technology encrypts the information exchanged between a user’s browser and the website server, making it extremely difficult for attackers to intercept or manipulate the data. The site’s certificate also lets the browser verify that it is talking to the genuine server rather than an impostor. By deploying TLS certificates on their websites, organizations help keep sensitive information confidential and protected from spoofing attempts.

Regularly updating website software patches is essential in addressing known vulnerabilities that can be exploited for spoofing. Hackers often target outdated software versions with known security flaws to gain unauthorized access or manipulate website content. By promptly applying software updates and patches provided by developers, organizations can strengthen their website’s defenses against spoofing attacks.

Mitigating Email Risks

Implementing email authentication protocols like SPF (Sender Policy Framework), DKIM (DomainKeys Identified Mail), and DMARC (Domain-based Message Authentication, Reporting & Conformance) is crucial in reducing the risk of email spoofing. SPF allows domain owners to specify which mail servers are authorized to send emails on behalf of their domain. DKIM adds a digital signature to outgoing emails, ensuring their authenticity and integrity. DMARC combines SPF and DKIM checks while providing additional policies for handling suspicious emails.
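To make the DMARC part more concrete, here is a small sketch that parses a DMARC policy record into its tags. The record shown is a made-up example for the reserved domain example.com, and the function name is hypothetical; looking the record up in DNS (it is published as a TXT record under `_dmarc.<domain>`) is not shown.

```python
def parse_dmarc(record):
    """Split a DMARC TXT record into a dict of tag -> value."""
    tags = {}
    for part in record.split(";"):
        part = part.strip()
        if not part:
            continue
        key, _, value = part.partition("=")
        tags[key.strip().lower()] = value.strip()
    if tags.get("v") != "DMARC1":
        raise ValueError("not a DMARC record")
    return tags


# Hypothetical record: quarantine failing mail and send aggregate reports.
record = "v=DMARC1; p=quarantine; rua=mailto:dmarc-reports@example.com; pct=100"
policy = parse_dmarc(record)
print(policy["p"])
```

The `p` tag carries the policy a receiver is asked to apply to mail that fails the checks: `none`, `quarantine`, or `reject`.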

Training employees to recognize phishing emails plays a vital role in mitigating the risk of successful spoofing attacks via email. Phishing involves tricking individuals into revealing sensitive information or performing actions that benefit attackers. By educating employees about common phishing techniques, warning signs to look out for, and best practices for handling suspicious emails, organizations can empower their workforce to be vigilant against potential spoofing attempts.

Deploying advanced spam filters is an effective measure in detecting and blocking malicious emails. These filters use sophisticated algorithms to analyze incoming emails, identifying patterns and characteristics commonly associated with spoofing or phishing attempts. By automatically diverting suspicious emails to spam folders or blocking them altogether, these filters can significantly reduce the chances of employees falling victim to spoofed email attacks.

IP Spoofing Defense Strategies

Understanding Prevention

Understanding the different types of spoofing attacks is essential for effective prevention. By familiarizing ourselves with techniques such as IP spoofing and the impersonation of trusted organizations, we can better identify and defend against them. Regularly educating users about common spoofing techniques and warning signs improves awareness and empowers them to be proactive in their approach to cybersecurity.

Implementing a comprehensive anti-spoofing strategy involves a combination of technical and user-focused measures. Technical measures include switch features such as DHCP snooping, which filters DHCP messages arriving on untrusted ports so that rogue DHCP servers cannot hand out addresses, and which records the IP-to-MAC bindings it observes. On Cisco switches, the ip verify source command (IP Source Guard) uses those bindings to validate the source IP addresses of incoming packets and drop traffic that is not legitimate.

User-focused measures involve training employees to recognize suspicious emails or websites that may be attempting to deceive them through phishing or other forms of spoofing. By teaching them how to identify red flags like misspelled URLs or requests for sensitive information, organizations can significantly reduce the risk of falling victim to these attacks.

Detecting Techniques

Implementing anomaly detection algorithms plays a crucial role in detecting spoofing attempts. These algorithms analyze network traffic patterns and flag any abnormal behaviors that may indicate an ongoing attack. By continuously monitoring network activity, organizations can quickly identify potential threats and take appropriate action to mitigate them.
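A very basic form of anomaly detection is a z-score test against a baseline, sketched below. It assumes traffic has already been summarized into one number per interval (for example, packets per second); the sample figures and the threshold of 3 standard deviations are illustrative assumptions, and production systems typically use richer features and models.

```python
import statistics


def find_anomalies(baseline, current, z_threshold=3.0):
    """Return the values in `current` that deviate strongly from the baseline.

    A value is flagged when it lies more than `z_threshold` sample standard
    deviations away from the baseline mean.
    """
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        # A perfectly flat baseline: any different value is unusual.
        return [x for x in current if x != mean]
    return [x for x in current if abs(x - mean) / stdev > z_threshold]


# Hypothetical packets-per-second measurements.
baseline = [100, 102, 98, 101, 99, 100, 103, 97]
current = [101, 99, 140, 104]
print(find_anomalies(baseline, current))  # prints [140]
```

A flagged interval is a prompt for closer inspection, not proof of spoofing, since legitimate traffic spikes can trip the same threshold.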

Machine learning techniques can also be employed to train models for detecting spoofed biometric data. For example, in facial recognition systems, machine learning algorithms can learn patterns associated with genuine faces versus those generated by synthetic means or manipulated images. By leveraging these technologies, organizations can enhance their ability to detect fraudulent attempts at bypassing biometric authentication mechanisms.

Collaborating with cybersecurity researchers and organizations is vital for staying updated on emerging detection techniques. The landscape of cyber threats is constantly evolving, making it crucial for organizations to remain informed about new attack vectors and countermeasures being developed by experts in the field. By actively participating in information sharing initiatives and engaging with the cybersecurity community, organizations can stay one step ahead of attackers.

Conclusion

And there you have it! We’ve covered a wide range of anti-spoofing technologies and strategies to protect your network and data. From biometric authentication to email and website spoofing mitigation, we’ve explored various methods to stay one step ahead of the spoofers.

Now that you’re armed with this knowledge, it’s time to take action. Evaluate your current security measures and consider implementing some of the techniques we discussed. Remember, the key is to stay proactive and vigilant in the face of evolving spoofing threats.

Don’t let the spoofers catch you off guard. Protect your network, secure your data, and keep those spoofers at bay. Stay safe out there!

Frequently Asked Questions

What is anti-spoofing technology?

Anti-spoofing technology refers to a set of measures and techniques used to detect and prevent spoofing attacks. It helps protect systems, networks, and data from unauthorized access or manipulation by identifying and blocking fake or manipulated identities.

How does biometric anti-spoofing work?

Biometric anti-spoofing utilizes advanced algorithms to distinguish between genuine biometric traits (such as fingerprints, facial features, or voice patterns) and fake ones. By analyzing specific characteristics that are difficult to replicate, it ensures the authenticity of biometric data during authentication processes.

Why is IP spoofing a concern for network security?

IP spoofing involves forging the source IP address in network packets to deceive systems into thinking they are communicating with a trusted entity. This technique can be exploited by malicious actors for various purposes like concealing their identity, bypassing filters, launching DDoS attacks, or gaining unauthorized access.

How does email and website spoofing mitigation work?

Email and website spoofing mitigation involves implementing security measures such as SPF (Sender Policy Framework), DKIM (DomainKeys Identified Mail), DMARC (Domain-based Message Authentication, Reporting & Conformance), and HTTPS. These mechanisms verify the authenticity of email senders or websites, reducing the risk of phishing attacks and fraudulent activities.

What are some common network security measures against spoofing threats?

To combat spoofing threats effectively, organizations employ multiple network security measures including robust firewalls, intrusion detection systems (IDS), intrusion prevention systems (IPS), secure VPNs (Virtual Private Networks), two-factor authentication (2FA), strong encryption protocols, regular software updates/patches, and employee education on cybersecurity best practices.