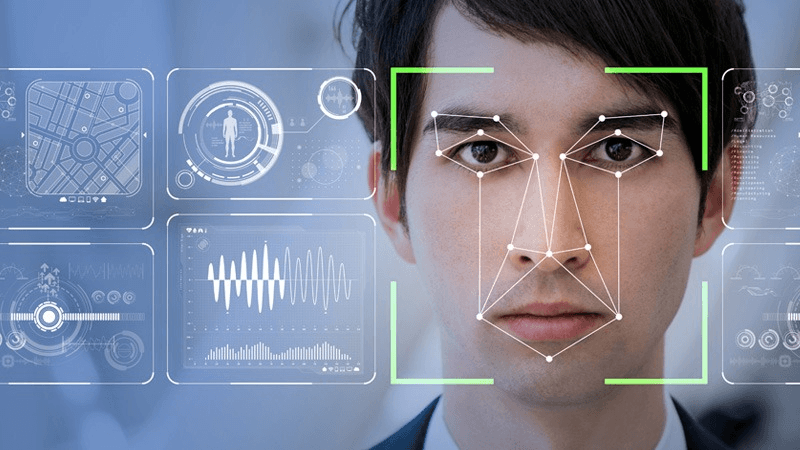

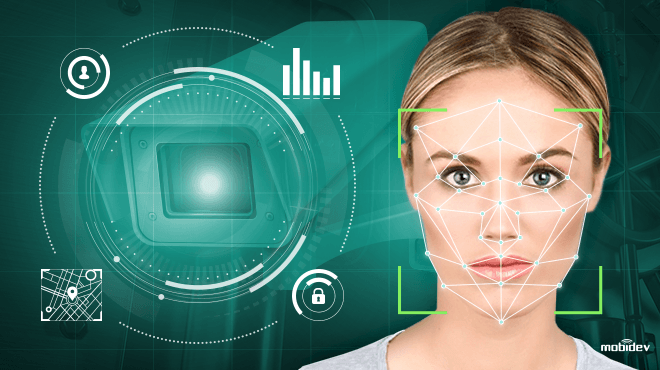

Face liveness detection is a critical technology for verifying that a face presented to a camera belongs to a real, physically present person, and therefore for the security and accuracy of facial recognition systems. These systems rely on face capture, that is, on capturing biometric data from human faces, so an attacker who presents a photo, a video replay, or a mask of someone else’s face might otherwise be accepted. Liveness detection plays a crucial role in identity verification by analyzing biometric cues, such as eye closure during a blink, to distinguish genuine users from impostors. Related deepfake detection technology analyzes facial features and movements in a similar way to tell real faces from synthetic ones, often alongside landmark detection and identity verification. To prevent fraudulent activities, it is crucial to implement these face anti-spoofing techniques.

Implementing reliable liveness detection enhances overall system security. Liveness techniques analyze different aspects of a face, such as texture, depth, motion, or physiological responses, often working alongside facial recognition and landmark detection. The main algorithm families are texture analysis, motion analysis, 3D depth analysis, and physiological response analysis (for example, checking for natural facial expressions). Each technique has its strengths and limitations; however, combining several of them can improve the accuracy and reliability of a project’s face recognition capabilities.
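To make the idea of combining several techniques concrete, here is a minimal Python sketch of score-level fusion: each detector reports a liveness score between 0 and 1, and the scores are combined with a weighted average before a single accept/reject threshold is applied. The cue names, weights, and the 0.5 threshold are illustrative assumptions, not values from any particular system; in practice, fusion weights (or a small classifier) are learned from labeled data.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CueScore:
    """Output of one liveness technique: score in [0, 1], where 1.0 means confidently live."""
    name: str
    score: float
    weight: float


def fuse_scores(cues: List[CueScore]) -> float:
    """Weighted average of per-technique scores (simple score-level fusion)."""
    total_weight = sum(c.weight for c in cues)
    if total_weight <= 0:
        raise ValueError("at least one cue needs a positive weight")
    return sum(c.score * c.weight for c in cues) / total_weight


def is_live(cues: List[CueScore], threshold: float = 0.5) -> bool:
    """Accept the presentation only if the fused score reaches the threshold."""
    return fuse_scores(cues) >= threshold


if __name__ == "__main__":
    # Hypothetical outputs from three independent detectors.
    cues = [
        CueScore("texture", 0.9, weight=2.0),
        CueScore("motion", 0.4, weight=1.0),
        CueScore("depth", 0.8, weight=1.0),
    ]
    print(round(fuse_scores(cues), 3), is_live(cues))
```

With these hypothetical inputs the fused score is (0.9 × 2 + 0.4 + 0.8) / 4 = 0.75, so the presentation is accepted even though the motion cue on its own is weak.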

In this blog post, we will explore passive face liveness detection methods, their deployment with Docker containers and on-device solutions, and how they strengthen security in identity verification. We will also look at open-source implementations hosted in GitHub repositories and the potential applications of 3D technology in this context.

Explore the Newest Face Liveness Detection Technologies and Techniques on GitHub

Passive vs. Active Liveness Detection Methods

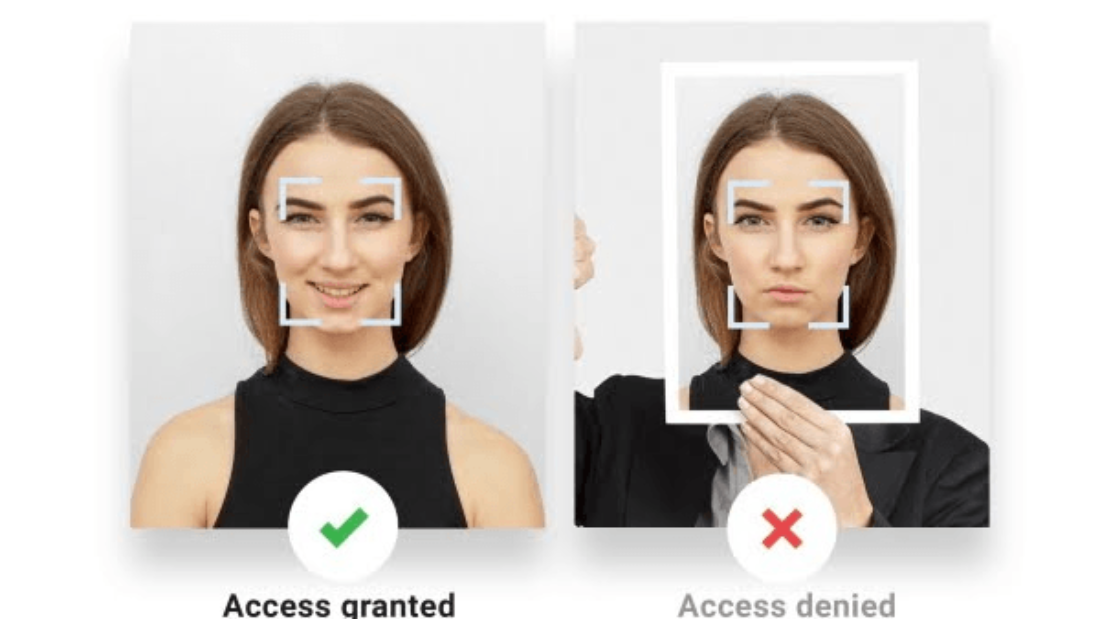

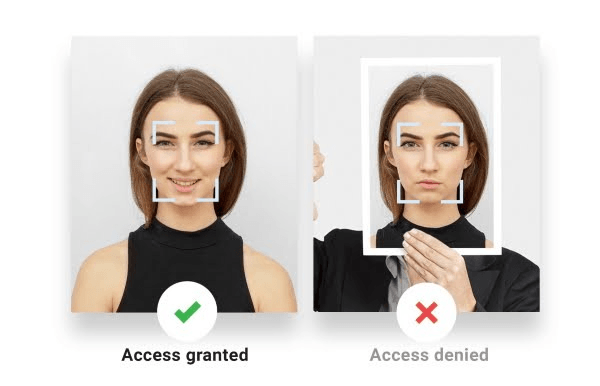

Passive liveness detection methods analyze existing images or videos without requiring any specific user interaction. They look for signs of spoofing or fraudulent activity in the characteristics of the captured face data itself: by analyzing factors such as texture, color, and motion, and in some systems 3D depth information, passive algorithms can distinguish real faces from fake representations such as printed photos or screen replays. Many of these methods are available in GitHub repositories, where researchers can also find related code and resources for further development and collaboration.
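As one simple example of a passive motion cue, the sketch below measures how much consecutive grayscale frames differ: a photo on a stand produces almost identical frames, while a live face shows small movements. This is an illustrative assumption rather than a production method. Frames are plain lists of pixel rows, the `min_motion` threshold is arbitrary, and video replays or a hand-held photo both contain motion, so real systems rely on much richer features such as optical flow or learned models.

```python
from typing import List

Frame = List[List[int]]  # grayscale frame: rows of pixel intensities 0-255


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute per-pixel difference between two equally sized frames."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("frames must have the same shape")
    count = sum(len(row) for row in a)
    if count == 0:
        raise ValueError("frames must not be empty")
    total = sum(abs(pa - pb) for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    return total / count


def motion_energy(frames: List[Frame]) -> float:
    """Mean frame-to-frame difference across a short clip."""
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    diffs = [mean_abs_diff(f0, f1) for f0, f1 in zip(frames, frames[1:])]
    return sum(diffs) / len(diffs)


def looks_static(frames: List[Frame], min_motion: float = 1.0) -> bool:
    """Flag clips with almost no motion, as a photo on a stand would produce (a weak cue)."""
    return motion_energy(frames) < min_motion


if __name__ == "__main__":
    still = [[[10, 10], [10, 10]]] * 3
    moving = [[[10, 10], [10, 10]], [[12, 10], [10, 14]], [[10, 10], [10, 10]]]
    print(looks_static(still), looks_static(moving))
```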

Active liveness detection methods, on the other hand, prompt the user to perform certain actions or gestures to prove they are live, such as blinking, smiling, or turning their head. These systems typically combine face detection, face landmark detection, and face recognition to confirm that the requested action was actually performed. By requiring these interactions, active liveness detection adds an extra layer of security that prevents attackers from authenticating with static images or pre-recorded videos.

Projects can benefit from both passive and active methods, depending on their specific needs; in either case, face liveness detection is crucial for the accuracy and reliability of the authentication process. Passive methods are easy to implement and require nothing from the user. However, they may be more susceptible to advanced spoofing techniques that mimic realistic facial movements. Active methods provide a higher level of assurance by engaging users in proving their liveness, but they may introduce slight inconvenience during the authentication process.
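An active check is essentially a challenge-response protocol. The minimal sketch below issues an unpredictable sequence of prompts and accepts the session only if the observed actions match the prompts, in order, within a time limit. The challenge names, the 10-second window, and the assumption that some upstream detector reports observed actions as strings are all hypothetical; detecting the actions themselves (for example, with landmark tracking) is out of scope here.

```python
import secrets
import time
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical prompt vocabulary; an upstream action detector must report the same strings.
CHALLENGES = ("blink", "smile", "turn_left", "turn_right")


@dataclass
class ChallengeSession:
    expected: List[str]
    issued_at: float
    max_seconds: float = 10.0


def issue_challenge(n: int = 3, now: Optional[float] = None) -> ChallengeSession:
    """Pick n prompts with a cryptographically secure RNG so a recording cannot anticipate them."""
    expected = [secrets.choice(CHALLENGES) for _ in range(n)]
    issued_at = time.monotonic() if now is None else now
    return ChallengeSession(expected=expected, issued_at=issued_at)


def verify_response(session: ChallengeSession, observed: List[str],
                    now: Optional[float] = None) -> bool:
    """Accept only if the observed actions match the prompts, in order, before the deadline."""
    now = time.monotonic() if now is None else now
    if now - session.issued_at > session.max_seconds:
        return False
    return observed == session.expected


if __name__ == "__main__":
    session = issue_challenge()
    print("Please perform:", ", ".join(session.expected))
```

The randomness is what separates this from a fixed "please blink" prompt: an attacker replaying a video cannot know in advance which sequence will be requested.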

3D Living Faces Anti-Spoofing Data

To develop effective face liveness detection models, researchers and developers rely on datasets designed specifically for training and testing. One such dataset is the 3D living faces anti-spoofing data, which is available in public repositories and is useful for passive liveness detection. These datasets contain a variety of real face images alongside spoofed images created with different attack methods.

By training models on these datasets, researchers can evaluate how their liveness detection algorithms perform under various conditions. Including spoofed images improves the robustness of anti-spoofing solutions, because it exposes vulnerabilities in passive face liveness detection before attackers find them. 3D living faces anti-spoofing data also lets developers test their models against a wide range of attack scenarios resembling real-world applications, helping to ensure their reliability.
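When evaluating on such datasets, presentation attack detection results are commonly reported with the ISO/IEC 30107-3 metrics APCER (the share of attack presentations wrongly accepted as bona fide) and BPCER (the share of bona fide presentations wrongly rejected), and many benchmarks also report ACER, their average. The sketch below computes all three at a single threshold. It assumes higher scores mean "more likely live" and, as a simplification, pools all attack types together, whereas the standard computes APCER separately for each attack instrument species.

```python
from typing import Dict, Sequence


def pad_error_rates(scores: Sequence[float], is_bona_fide: Sequence[bool],
                    threshold: float) -> Dict[str, float]:
    """APCER, BPCER and ACER at one threshold (score >= threshold means accepted as live)."""
    if len(scores) != len(is_bona_fide):
        raise ValueError("scores and labels must have the same length")
    attack = [s for s, live in zip(scores, is_bona_fide) if not live]
    bona_fide = [s for s, live in zip(scores, is_bona_fide) if live]
    if not attack or not bona_fide:
        raise ValueError("need both attack and bona fide samples")
    # APCER: attack presentations wrongly classified as bona fide.
    apcer = sum(s >= threshold for s in attack) / len(attack)
    # BPCER: bona fide presentations wrongly classified as attacks.
    bpcer = sum(s < threshold for s in bona_fide) / len(bona_fide)
    return {"APCER": apcer, "BPCER": bpcer, "ACER": (apcer + bpcer) / 2}


if __name__ == "__main__":
    scores = [0.9, 0.8, 0.3, 0.6, 0.2, 0.1]
    labels = [True, True, True, False, False, False]
    print(pad_error_rates(scores, labels, threshold=0.5))
```

In this toy example one of the three attacks (score 0.6) is accepted and one of the three live samples (score 0.3) is rejected, so APCER, BPCER, and ACER all equal 1/3. Moving the threshold trades one error rate against the other.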

Blink Detection for Enhanced Security

Blink detection is a commonly used technique in face liveness detection. When the system prompts the user to blink during the authentication process, it becomes significantly more challenging for attackers to spoof it with static images or videos; prompting for a blink is a form of active liveness detection. A system can also watch passively for the spontaneous blinks people make without being asked. Either way, detecting a natural blink response indicates the presence of a living person.

Blink detection can be combined with other liveness detection methods to create a more robust anti-spoofing solution. For example, facial landmark detection can track specific points around the eyes and monitor how they move during a blink. This combination adds an extra layer of security by verifying both the presence of facial landmarks and the naturalness of the blink.
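A widely used way to measure blinking from facial landmarks is the eye aspect ratio (EAR) introduced by Soukupová and Čech: with six landmarks p1 to p6 around each eye, EAR = (‖p2 − p6‖ + ‖p3 − p5‖) / (2‖p1 − p4‖). It stays roughly constant while the eye is open and drops toward zero when the eye closes. The sketch below computes EAR and counts blinks as runs of low-EAR frames. It assumes the landmarks come from an external detector (for example, the six eye points of a 68-point landmark model); the 0.2 threshold and 2-frame minimum are common starting points, not tuned values.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: List[Point]) -> float:
    """EAR for landmarks p1..p6: p1/p4 are the eye corners, p2/p3 upper lid, p5/p6 lower lid."""
    if len(eye) != 6:
        raise ValueError("expected exactly six eye landmarks")
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    if horizontal == 0:
        raise ValueError("eye corners coincide")
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: Iterable[float], threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least min_frames consecutive frames with EAR below threshold."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip ended while the eye was still closed
        blinks += 1
    return blinks


if __name__ == "__main__":
    open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
    closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]
    print(eye_aspect_ratio(open_eye), eye_aspect_ratio(closed_eye))
```

Requiring a minimum run length filters out single-frame landmark jitter that would otherwise be counted as a blink.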

Face Liveness Detection on Different Platforms

Android SDK for Face Liveness Detection

Face liveness detection SDKs for Android give developers a set of tools and libraries for adding liveness checks to Android applications. Their APIs enable real-time analysis of facial movements and gestures, strengthening the security of mobile applications with passive liveness detection.

By integrating such an SDK into their apps, developers can ensure that only a live person is being authenticated. These SDKs typically analyze facial expressions, eye blinking, head movements, and other cues that indicate the presence of a live person, which helps prevent spoofing attempts with static images or videos.

Because the SDK packages the detection algorithms behind an API, developers can integrate face liveness detection without extensive expertise in computer vision. This allows them to focus on creating engaging user experiences while still providing robust security.

iOS SDK for Face Liveness Detection

As on Android, face liveness detection SDKs for iOS provide developers with comprehensive tools and resources for implementing liveness checks in iOS applications. Their APIs and frameworks enable real-time analysis of facial features and movements, allowing for accurate liveness verification.

By leveraging these SDKs, developers can protect their iPhone and iPad apps from unauthorized access. The SDKs detect signs of liveness such as eye movement, facial expressions, and head rotation, so that only genuine users are granted access to sensitive information or functionality within an app.

When an SDK offers well-documented APIs, integrating face liveness detection on iOS is straightforward, and developers can add passive liveness detection to their apps without compromising on performance or user experience.

Web-Based Solutions for Liveness Verification

Web-based solutions provide an alternative way to implement face liveness detection without requiring dedicated mobile apps or specialized hardware. They use JavaScript libraries or browser plugins to access the device’s camera and analyze facial movements in real time.

With web-based liveness verification, users perform face authentication directly in their web browsers: they simply open a website and complete the liveness check with their device’s camera. This avoids additional software installations and cross-platform compatibility issues, making passive liveness detection convenient and hassle-free.

This convenience and accessibility make web-based passive liveness detection suitable for applications such as online banking, e-commerce, and identity verification services, where it adds a layer of security by ensuring that only live persons are granted access to sensitive information or transactions.

GitHub Repositories for Face Liveness Detection

Public Repositories Overview

Public repositories on platforms like GitHub are a valuable resource for developers working on face liveness detection. They serve as hubs for sharing, collaborating on, and contributing to open-source projects in this field, and they host a wide range of resources, including source code, datasets, and documentation, that the developer community can freely access.

By leveraging public repositories, developers benefit from the collective knowledge and expertise of others working on passive and active liveness detection. Building on existing work and collaborating with others fosters innovation, accelerates the development of robust face liveness detection solutions, and leads to more efficient and effective implementations.

Popular Tools for Developers

Developers also have access to popular tools such as OpenCV, TensorFlow, and PyTorch. These tools offer a wealth of pre-trained models, libraries, and APIs that simplify the implementation process.

For example, OpenCV is a widely used computer vision library whose image processing functions and face detection algorithms serve as building blocks for liveness detection. TensorFlow and PyTorch are deep learning frameworks that enable developers to train complex neural networks for face liveness detection on large datasets.

By utilizing these tools, developers can save significant time and effort in building their own face liveness detection systems from scratch. They can leverage the existing functionalities provided by these tools while focusing on fine-tuning or customizing them according to their specific requirements.

Telecom and Anti-Spoofing Solutions

Telecommunication companies play a crucial role in implementing anti-spoofing solutions to protect their customers’ identities. Face liveness detection is an essential component integrated into their authentication processes to prevent unauthorized access and identity fraud.

To ensure high accuracy and reliability in detecting spoof attempts, telecom anti-spoofing solutions often combine multiple liveness detection methods. These methods may include analyzing facial movements, detecting eye blinking or pupil dilation, or even using 3D depth sensors to capture the unique characteristics of a live face.
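To illustrate how a depth sensor helps, consider that a printed photo or a screen is essentially flat, even when tilted, whereas a real face has a nose, eye sockets, and cheeks that stand well off any plane. The sketch below fits a plane z = ax + by + c to depth samples from the face region by least squares and reports the root-mean-square residual. It assumes (x, y, z) samples in millimeters from a hypothetical depth camera and an arbitrary 3 mm cut-off. A 3D mask would pass this particular check, which is one reason it is combined with other methods.

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]  # (x, y, z) in millimeters


def _det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points: List[Point3]) -> Tuple[float, float, float]:
    """Least-squares plane z = a*x + b*y + c, solved from the normal equations by Cramer's rule."""
    if len(points) < 3:
        raise ValueError("need at least three points")
    sx = sum(x for x, _, _ in points)
    sy = sum(y for _, y, _ in points)
    sz = sum(z for _, _, z in points)
    sxx = sum(x * x for x, _, _ in points)
    syy = sum(y * y for _, y, _ in points)
    sxy = sum(x * y for x, y, _ in points)
    sxz = sum(x * z for x, _, z in points)
    syz = sum(y * z for _, y, z in points)
    normal = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(len(points))]]
    rhs = [sxz, syz, sz]
    det = _det3(normal)
    if abs(det) < 1e-9:
        raise ValueError("degenerate sample layout (e.g. collinear points)")
    coeffs = []
    for col in range(3):  # Cramer's rule: replace one column with the right-hand side
        m = [row[:] for row in normal]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(_det3(m) / det)
    return coeffs[0], coeffs[1], coeffs[2]


def plane_residual_rms(points: List[Point3]) -> float:
    """Root-mean-square deviation (along z) of the samples from their best-fit plane."""
    a, b, c = fit_plane(points)
    return math.sqrt(sum((z - (a * x + b * y + c)) ** 2 for x, y, z in points) / len(points))


def looks_flat(points: List[Point3], max_rms_mm: float = 3.0) -> bool:
    """Flag depth samples that are nearly planar, as a photo or screen would be."""
    return plane_residual_rms(points) < max_rms_mm
```

Fitting a plane rather than simply measuring depth spread matters: a photo held at an angle has a large depth range but still a near-zero residual.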

By incorporating face liveness detection into their authentication systems, telecom companies can enhance the security of their services and protect their customers from identity theft and fraudulent activities. It adds an extra layer of defense against spoofing attacks, making it more challenging for malicious actors to bypass the authentication process.

Implementing Face Liveness Detection in Projects

Adding to Your Repository

Developers have the opportunity to contribute to public repositories by adding their own face liveness detection implementations, datasets, or documentation. By sharing their work with the community, developers can receive valuable feedback, collaborate with others, and collectively improve the overall quality of the repository.

The act of adding to a repository not only benefits individual developers but also creates a diverse collection of resources that can be leveraged by the entire developer community. This collaborative approach fosters an environment where ideas are shared and refined, leading to innovative solutions and advancements in face liveness detection technology.

Docker Implementation Strategies

Docker provides containerization technology that simplifies the deployment and distribution of face liveness detection systems. Developers can package their applications along with all dependencies into Docker containers, ensuring consistent behavior across different environments.

By utilizing Docker implementation strategies, developers gain several advantages. Firstly, it enables easy scalability as containers can be effortlessly replicated and deployed on multiple machines. Secondly, it enhances portability since Docker containers encapsulate all necessary components, making it easier to move applications between different platforms or cloud providers. Lastly, it ensures reproducibility as the same containerized application will exhibit consistent behavior regardless of where it is executed.

Cross-Platform SDK Integration

Cross-platform SDK integration offers developers the convenience of using a single SDK for implementing face liveness detection across various platforms such as Android, iOS, or web applications. Instead of developing separate implementations for each platform, cross-platform SDKs provide a unified interface and functionality that can be utilized across different operating systems.

This approach significantly reduces development efforts by eliminating the need for platform-specific codebases while maintaining consistent liveness detection capabilities across multiple platforms. Developers no longer have to spend time learning different APIs or adapting their codebase for each platform individually. With cross-platform SDKs at their disposal, they can focus on building robust and reliable face liveness detection features without the complexities associated with platform fragmentation.
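The idea behind a unified interface can be sketched in a few lines: application logic is written once against a single interface, and each platform supplies an adapter behind it. In the Python sketch below, `LivenessBackend`, `FixedScoreBackend`, and the platform registry are hypothetical names for illustration only; a real adapter would call the native Android, iOS, or web SDK instead of returning a fixed score.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Protocol


@dataclass(frozen=True)
class LivenessResult:
    score: float  # 0.0 = certainly a spoof, 1.0 = certainly live


class LivenessBackend(Protocol):
    """The single interface that application code programs against."""

    def check(self, image: bytes) -> LivenessResult: ...


class FixedScoreBackend:
    """Hypothetical placeholder adapter; a real one would call the platform's native SDK."""

    def __init__(self, score: float) -> None:
        self._score = score

    def check(self, image: bytes) -> LivenessResult:
        if not image:
            raise ValueError("empty image")
        return LivenessResult(score=self._score)


# Hypothetical registry mapping each platform to a factory for its adapter.
BACKENDS: Dict[str, Callable[[], LivenessBackend]] = {
    "android": lambda: FixedScoreBackend(0.92),
    "ios": lambda: FixedScoreBackend(0.88),
    "web": lambda: FixedScoreBackend(0.75),
}


def authenticate(platform: str, image: bytes, threshold: float = 0.8) -> bool:
    """Platform-independent decision logic, identical on every target."""
    factory = BACKENDS.get(platform)
    if factory is None:
        raise ValueError(f"unsupported platform: {platform}")
    return factory().check(image).score >= threshold
```

Only the adapters differ per platform; the threshold and decision logic in `authenticate` stay in one place, which is the consistency benefit described above.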

SDKs and APIs for Liveness Detection Development

SDK Overview for Android and iOS

SDKs designed specifically for Android and iOS offer developers a range of tools, libraries, and APIs for integrating face liveness detection into their mobile applications. Because they are tailored to the features and optimizations of each platform, they can deliver good performance and a smooth user experience during liveness verification. Reviewing each SDK’s documentation gives Android and iOS developers a clear picture of the available functionality and integration options.

For developers working on Linux or Windows platforms, dedicated SDKs are available to facilitate the implementation of face liveness detection in their applications. These platform-specific SDKs provide a set of APIs, libraries, and tools that enable real-time analysis of facial movements for anti-spoofing purposes. With these SDKs, developers can leverage advanced techniques to detect potential spoofing attempts effectively.

One notable provider in this field is DoubangoTelecom, which offers a face liveness detection SDK for developers who want to strengthen their authentication processes. The SDK provides anti-spoofing capabilities such as blink detection, texture analysis, and motion analysis, aiming to ensure robust security while maintaining high accuracy in liveness verification.

Innovative GitHub Projects Leveraging Face Liveness Detection

Intelligent Lock Systems and KYC Integration

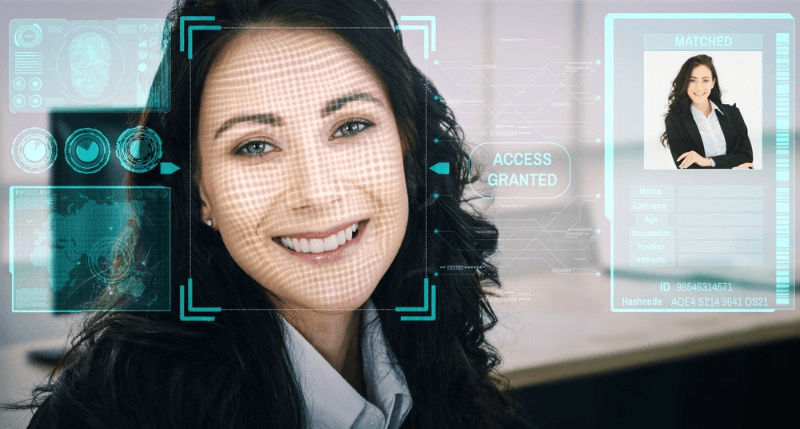

Intelligent lock systems have become increasingly popular for enhancing security in various settings. By incorporating face liveness detection as an additional security measure, these systems can prevent unauthorized access effectively. Face liveness detection works by verifying that the person attempting to gain access is a live human being and not a spoofing attempt.

Another significant application is the integration of face liveness detection with Know Your Customer (KYC) processes. KYC procedures are crucial for verifying the authenticity of users’ identities, particularly in industries like banking and e-commerce. Adding face liveness detection to KYC helps ensure that the person presenting an identity is genuine and physically present, providing an extra layer of security.

Combining face liveness detection with identity checks such as KYC offers several benefits. First, it strengthens security, whether for physical access control or online onboarding, by preventing impersonation and fraud attempts. Second, it provides a seamless user experience, as individuals can verify their identity with their faces instead of relying on traditional identification methods such as passwords or ID cards.
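The core decision in such a system can be sketched as two gates applied in order: first reject the presentation if it fails the liveness check, then compare the live face against the enrolled identity. The sketch below assumes a liveness score in [0, 1] and face embeddings (fixed-length vectors) produced by some face recognition model, compared with cosine similarity; the thresholds are placeholders that would have to be tuned on real data.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Decision:
    approved: bool
    reason: str


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two face embeddings of equal length."""
    if len(a) != len(b) or not a:
        raise ValueError("embeddings must be non-empty and the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("zero-length embedding")
    return dot / (norm_a * norm_b)


def verify_identity(liveness_score: float, probe: Sequence[float], enrolled: Sequence[float],
                    liveness_threshold: float = 0.8, match_threshold: float = 0.6) -> Decision:
    """Gate 1: liveness. Gate 2: identity match. Both must pass to unlock or approve."""
    # Check liveness first: a perfect match from a printed photo must still be rejected.
    if liveness_score < liveness_threshold:
        return Decision(False, "liveness check failed")
    if cosine_similarity(probe, enrolled) < match_threshold:
        return Decision(False, "face does not match the enrolled identity")
    return Decision(True, "live and matched")
```

Keeping the two gates separate also makes audit logs more useful, since a rejected spoof and a rejected stranger are recorded with different reasons.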

Presentation Attack Detection (PAD)

Presentation Attack Detection (PAD) plays a vital role in ensuring the effectiveness of face liveness detection systems. PAD refers to the system’s ability to detect various types of spoofing attacks during face authentication. These attacks can include presenting photos, videos, or even 3D masks to deceive the system.

To identify presentation attacks accurately, PAD techniques analyze different characteristics such as texture, motion, or physiological responses exhibited by live human faces but absent in spoofed ones. Through advanced algorithms and machine learning models, these techniques can distinguish between real faces and presentation attacks with high accuracy.
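Texture analysis for PAD is often built on Local Binary Patterns (LBP), which have long been used in face anti-spoofing because recaptured photos and screens tend to show different micro-texture from live skin. The minimal sketch below computes the basic 3×3 LBP code for each interior pixel of a grayscale image and returns a normalized 256-bin histogram. It assumes the face region has already been cropped and converted to grayscale; a real PAD pipeline would feed such histograms (usually from multi-scale or uniform-pattern variants) into a trained classifier, which is not shown here.

```python
from typing import List, Sequence

# Neighbor offsets (dy, dx) clockwise from the top-left; neighbor i sets bit i.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img: Sequence[Sequence[int]], y: int, x: int) -> int:
    """Basic 8-neighbor LBP code of pixel (y, x): a bit is set when that neighbor >= center."""
    center = img[y][x]
    code = 0
    for bit, (dy, dx) in enumerate(OFFSETS):
        if img[y + dy][x + dx] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(img: Sequence[Sequence[int]]) -> List[float]:
    """Normalized 256-bin histogram of LBP codes over all interior pixels."""
    height = len(img)
    width = len(img[0]) if height else 0
    if height < 3 or width < 3 or any(len(row) != width for row in img):
        raise ValueError("need a rectangular grayscale image of at least 3x3 pixels")
    hist = [0] * 256
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            hist[lbp_code(img, y, x)] += 1
    total = sum(hist)
    return [count / total for count in hist]
```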

Effective PAD algorithms are crucial for robust face liveness detection systems. They provide reliable protection against sophisticated spoofing attempts while maintaining a smooth user experience. The continuous development and improvement of PAD technology contribute to strengthening overall security in face recognition applications.

Real-World Applications and Case Studies

Face liveness detection technology has found widespread applications across various industries. In the banking sector, it is utilized to secure online transactions and prevent fraud. E-commerce platforms employ face liveness detection to enhance user authentication during payment processes, safeguarding against unauthorized access and fraudulent activities.

Healthcare facilities can benefit from face liveness detection by ensuring accurate patient identification for secure access to medical records or restricted areas. Law enforcement agencies leverage this technology for identity verification in criminal investigations, enhancing their ability to identify suspects accurately.

Real-world case studies highlight the successful implementation of face liveness detection in practical scenarios. For instance, a leading financial institution implemented a face liveness detection system as part of its mobile banking app. The technology effectively prevented unauthorized access attempts and reduced instances of account fraud, providing customers with enhanced security and peace of mind.

Another case study involved an e-commerce platform that integrated face liveness detection into its payment authentication process.

Comprehensive Look at GitHub’s Anti-Spoofing Resources

Anti-spoofing with face_liveness_detection

The face_liveness_detection repository on GitHub is a valuable resource for developers looking to implement anti-spoofing capabilities into their projects. This open-source repository offers an implementation of face liveness detection algorithms, providing source code, documentation, and examples for easy integration. With face liveness detection, developers can enhance the security of facial recognition systems by distinguishing between real faces and spoofed ones.

Web App for Anti-Spoofing by birdowl21

For those interested in web-based solutions for anti-spoofing, the web app developed by birdowl21 is worth exploring. This practical example showcases how face liveness detection can be integrated into browser-based applications. By leveraging this web app, developers can gain insights into the implementation of anti-spoofing techniques in real-world scenarios. It serves as a helpful guide for understanding how to enhance the security of web applications using face liveness detection.

Spoofing Detection Techniques by ee09115

The repository created by ee09115 focuses specifically on spoofing detection techniques and provides implementations of various anti-spoofing algorithms. Developers seeking different approaches to detect spoofing attacks in facial recognition systems will find this repository invaluable. By referring to these resources, researchers and developers can explore diverse methods and gain a deeper understanding of how to combat spoofing effectively.

Evaluating GitHub’s Face Liveness Detection Repositories

Passive Liveness Detection Review

Passive liveness detection methods play a crucial role in identifying and preventing facial spoofing attempts. These methods analyze various aspects of the face, such as motion, texture, or depth, to determine if the presented image or video is from a live person or a fake representation. By reviewing passive liveness detection techniques, developers can gain insights into their strengths and limitations.

Passive liveness detection approaches offer advantages such as simplicity and non-intrusiveness. They do not require active user participation or additional hardware, making them convenient for various applications. However, it’s important to note that passive methods may have limitations in certain scenarios. For example, they may struggle with detecting highly sophisticated spoofing attacks that mimic natural movements accurately.

Understanding the strengths and limitations of passive liveness detection techniques helps developers choose the most suitable approach for their specific requirements. By considering factors like accuracy, robustness against different types of attacks, and computational efficiency, developers can make informed decisions when implementing face liveness detection measures.

In-depth Analysis of Top Repositories

GitHub hosts numerous repositories related to face liveness detection that provide valuable resources for developers. An in-depth analysis of these repositories allows us to understand their features, functionalities, and popularity within the developer community.

One popular repository is “Face-Anti-Spoofing,” which offers implementations of various anti-spoofing algorithms using deep learning frameworks like TensorFlow and PyTorch. It provides a comprehensive set of tools for training models and evaluating their performance on different datasets. It includes pre-trained models that developers can readily use in their own projects.

Another noteworthy repository is “LiveFaceDetection,” which focuses on real-time face liveness detection using computer vision techniques. It offers an intuitive interface for capturing video input from webcams or recorded videos and applies algorithms to detect facial movements indicative of liveness. The repository also provides extensive documentation and examples that facilitate the integration of face liveness detection into applications.

By analyzing these repositories, developers can identify the strengths and weaknesses of each option. They can consider factors such as ease of use, compatibility with their preferred programming language or framework, and community support when selecting the most appropriate repository for their projects. Moreover, understanding the popularity and user feedback for each repository helps developers gauge its reliability and effectiveness.

Recommendations for Repository Improvement

While GitHub’s face liveness detection repositories offer valuable resources, there are opportunities for improvement to enhance their usability and value to developers. One recommendation is to focus on improving documentation. Clear and comprehensive documentation enables developers to understand how to use the repository effectively, reducing confusion and potential errors during implementation.

Another area for improvement is code quality. Well-structured code with proper comments and meaningful variable names enhances readability and maintainability. By adhering to coding best practices, repositories can attract more contributors who can help refine the codebase further.

Conclusion

So there you have it, a comprehensive exploration of face liveness detection on GitHub. We’ve delved into the various technologies and techniques used in this field, examined different platforms for face liveness detection, and highlighted some of the most innovative projects on GitHub. By evaluating the available repositories and discussing the implementation of face liveness detection in projects, we’ve provided you with a solid foundation to start incorporating this technology into your own work.

But our journey doesn’t end here. Face liveness detection is a rapidly evolving field, and there’s always more to discover and explore. So why not take what you’ve learned and dive deeper? Explore the GitHub repositories we’ve discussed, experiment with different SDKs and APIs, and stay up to date with the latest advancements in face liveness detection. By doing so, you’ll be at the forefront of this exciting technology and can contribute to its ongoing development.

Now go forth, armed with knowledge and curiosity, and let your creativity shine in the realm of face liveness detection!

Frequently Asked Questions

How does face liveness detection work?

Face liveness detection works by analyzing various facial features and movements to determine whether a face is real or fake. It uses techniques such as eye-blink detection, head-movement tracking, and texture analysis to identify signs of life in the face.

What technologies are commonly used in face liveness detection?

Commonly used technologies in face liveness detection include computer vision algorithms, machine learning models, facial recognition systems, depth sensors (such as 3D cameras), and infrared imaging.

Can face liveness detection be implemented on different platforms?

Yes, face liveness detection can be implemented on different platforms such as desktop computers, mobile devices (smartphones and tablets), embedded systems, and even cloud-based services.

Are there any GitHub repositories available for face liveness detection?

Yes, there are several GitHub repositories that provide code and resources for implementing face liveness detection. These repositories offer open-source projects, libraries, and examples that can help developers get started with integrating this technology into their own applications.

Are there SDKs and APIs available for developing face liveness detection?

Yes, there are SDKs (Software Development Kits) and APIs (Application Programming Interfaces) specifically designed for developing face liveness detection. These tools provide pre-built functions and interfaces that simplify the process of incorporating this functionality into software projects.