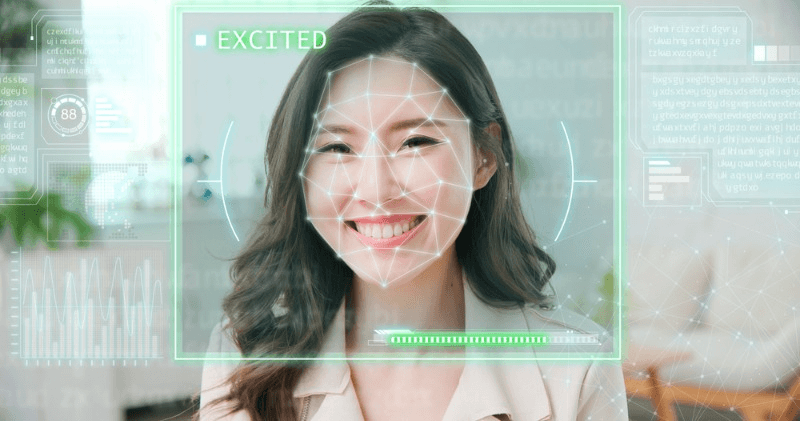

Facial expression recognition, also known as face emotion detection, is transforming parts of computer vision by making it possible to analyze changes in facial expression automatically. The technology has applications across many industries, particularly in sentiment analysis. This article explains what facial expression recognition is, how changes in facial expression can be analyzed to interpret emotions, and where the technique is applied.

With rapid advances in machine learning, researchers have developed algorithms that detect and classify emotions from facial expressions. These algorithms extract emotional information from images through feature extraction. Progress in facial expression recognition has opened doors in fields such as psychology and marketing, where understanding human emotion plays a central role in running experiments and gathering insights.

By analyzing features such as facial structure, changes in expression, and region-specific cues, face emotion detection systems can estimate a person’s emotional state. Some systems improve recognition accuracy further by adding attention mechanisms or by combining facial data with physiological signals. The technology has the potential to support psychological studies, for example through models trained on large labeled datasets such as AffectNet, to improve customer experiences, and to assist clinicians in mental health assessment.

Read on for a comprehensive look at facial expression recognition, a fascinating area at the intersection of computer vision and human emotion. The sections below cover how facial emotions are detected, how the results relate to sentiment analysis, and how techniques such as feature fusion improve accuracy.

The Concept of Emotion Recognition

Emotion recognition is the identification and analysis of facial expressions to determine an individual’s emotional state. It is closely related to sentiment analysis, which classifies attitudes more broadly and is often applied to text. Visual emotion analysis interprets facial cues in face images, such as eyebrow movement, lip curvature, and the widening or narrowing of the eyes. Modern systems learn these cues as feature maps, sometimes fusing features from several sources, and are commonly trained on labeled datasets such as AffectNet. By analyzing facial expressions, researchers and developers aim to improve human-computer interaction and communication.

Facial expressions are powerful indicators of our emotions. A smile usually signifies happiness or amusement, while a furrowed brow may indicate anger or frustration. These subtle changes convey valuable information about how we feel at any given moment. Because facial expression recognition systems learn these patterns from examples, a comprehensive, well-labeled facial emotion dataset is crucial for accurate analysis.

Computer Vision in Detecting Emotions

Computer vision techniques, such as learned feature maps and feature fusion, are essential for recognizing emotions from facial expressions. By leveraging advanced algorithms, machines can interpret visual data and extract the features needed for facial expression recognition. Large labeled datasets such as AffectNet have been instrumental in training models to identify emotions, and the IEEE research community has contributed actively to the field through its journals and conferences on affective computing.

Through computer vision, machines can process images or videos containing human faces and identify patterns associated with different emotions. They do so by computing feature maps from the input and, in some systems, fusing features from several sources. AffectNet is one dataset commonly used for training and testing such systems. Once trained, these systems can analyze large collections of images automatically, detecting emotions accurately and efficiently.

Deep learning models have driven much of the recent progress in emotion detection, particularly in facial expression recognition. Trained on datasets such as AffectNet, these models analyze and interpret emotions from facial expressions, and many researchers have published their findings in IEEE journals and conferences. Convolutional Neural Networks (CNNs), a type of deep learning model, excel at learning complex patterns from images. They compute feature maps that extract relevant information, which makes them particularly effective for facial expression recognition. Attention mechanisms, explored by research groups including teams at Google, can improve accuracy further by weighting the most informative regions or features. Trained on large amounts of labeled data, CNNs can pick up fine details in facial expressions that humans might overlook.

Deep Learning Techniques for Recognition

Deep learning techniques such as CNNs have transformed emotion recognition by improving both the accuracy and the efficiency of facial expression analysis. Large labeled datasets have played a crucial role: AffectNet, for example, was introduced in a paper published in IEEE Transactions on Affective Computing and is widely used to train models in this field. These models learn features automatically from raw image data, without relying heavily on manual feature engineering.

Convolutional Neural Networks are commonly used for facial expression recognition. They consist of multiple layers that apply operations such as convolution and pooling to extract hierarchical representations from input images. This hierarchy lets the model capture both low-level features (e.g., edges) and high-level semantic information (e.g., the overall configuration of a facial expression). Companies such as Google also use CNNs in a wide range of image applications.
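To make the convolution-and-pooling pipeline concrete, here is a minimal pure-Python sketch of one CNN stage applied to a toy image. The function names (`conv2d`, `relu`, `max_pool2x2`) and the hand-picked edge kernel are illustrative assumptions; real networks learn many kernels per layer and run on tensors in a deep learning framework.

```python
# Minimal sketch of one CNN stage: convolution -> ReLU -> 2x2 max pooling.
# Images are plain lists of rows; real frameworks use tensors and learned kernels.

def conv2d(image, kernel):
    """Valid 2D cross-correlation (what CNN 'convolution' layers compute)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            s = 0.0
            for di in range(kh):
                for dj in range(kw):
                    s += image[i + di][j + dj] * kernel[di][dj]
            row.append(s)
        out.append(row)
    return out

def relu(fmap):
    """Keep positive responses, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool2x2(fmap):
    """Non-overlapping 2x2 max pooling; drops a trailing odd row or column."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]

# Toy 6x6 "image": dark left half, bright right half (a vertical edge).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
# Hand-picked vertical-edge kernel; a trained CNN learns kernels like this.
edge_kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

feature_map = relu(conv2d(image, edge_kernel))  # 4x4 feature map
pooled = max_pool2x2(feature_map)               # 2x2 after pooling
print(pooled)  # [[3.0, 3.0], [3.0, 3.0]]
```

Each row of `feature_map` is `[0.0, 3.0, 3.0, 0.0]`: the map responds only where the dark-to-bright edge is, which is the kind of low-level feature early CNN layers detect. Deeper layers apply the same operations to these maps to build higher-level features.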

By training CNNs on diverse datasets of labeled emotional expressions, such as AffectNet, developers obtain models that generalize and predict emotions in unseen data. This ability to learn from examples and adapt to new situations makes deep learning an invaluable tool in facial emotion recognition.

Advancements in Facial Emotion Detection

Facial Emotion Detection Using CNN

Convolutional neural networks (CNNs) have emerged as a powerful tool for facial emotion recognition. They excel at capturing spatial relationships in images, which makes them well suited to analyzing facial expressions. Trained on large datasets of labeled facial expressions, such as AffectNet, CNNs learn to recognize emotions by extracting relevant features from the data. Companies such as Google have also built models of this kind into their image analysis products.

Two popular CNN architectures, VGGNet and ResNet, have shown promising results in facial emotion detection tasks, including when trained and evaluated on AffectNet. VGGNet stacks many small convolutional layers, while ResNet adds shortcut connections that make very deep networks easier to train. Both extract features from input images that let them identify subtle facial expressions and the cues that indicate specific emotions.
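The shortcut idea behind ResNet can be shown in a few lines. This is a deliberately simplified sketch: the residual function `F` is built from two hypothetical fixed weight matrices acting on a vector, whereas a real ResNet block applies convolutions and batch normalization to feature maps.

```python
# Sketch of a ResNet-style residual connection: output = relu(F(x) + x).
# F is a hypothetical tiny "layer" made of fixed weight matrices, not a real ResNet block.

def matvec(w, x):
    """Multiply matrix w (list of rows) by vector x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]

def relu(v):
    return [max(0.0, a) for a in v]

def residual_block(x, w1, w2):
    """Two small linear steps with a ReLU in between (F), plus the identity shortcut."""
    f = matvec(w2, relu(matvec(w1, x)))
    return relu([fi + xi for fi, xi in zip(f, x)])

x = [1.0, 2.0]
zero = [[0.0, 0.0], [0.0, 0.0]]
# If F learns to output zeros, the block passes its (non-negative) input through
# unchanged, so adding depth does not have to hurt: the intuition behind ResNet.
print(residual_block(x, zero, zero))  # [1.0, 2.0]
```

Because the shortcut carries the input forward, each block only has to learn a correction to it, which is what makes very deep stacks trainable in practice.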

The Role of Feature Maps

Feature maps play a crucial role in the success of convolutional neural networks (CNNs) for emotion recognition. In facial emotion detection, feature maps represent learned features at different levels of abstraction. Early layers capture local patterns such as edges, textures, and shapes, which later layers combine into cues that help the network recognize facial expressions.

Through multiple layers of feature maps, CNNs learn hierarchical representations of facial expressions from datasets such as AffectNet. The models can therefore analyze fine-grained details and global patterns at the same time, which helps them identify facial expressions accurately. Because they can also process large amounts of training data efficiently, CNNs build a comprehensive picture of the emotional content of an image.

Accuracy of Current Models

Current facial expression recognition models achieve strong accuracy in detecting and analyzing emotions from facial features, and some studies report that they match or surpass human performance in specific settings. These models are typically evaluated on benchmark datasets such as AffectNet. Much of this progress is attributed to advances in deep learning techniques.

Deep learning models generally outperform traditional machine learning algorithms on this task. Their ability to learn complex representations and capture intricate details lets them identify and interpret a wide range of facial expressions accurately.

Continued advances in model architectures and training techniques keep improving recognition accuracy. Researchers are developing new strategies, such as efficient attention modules, to push performance on benchmarks like AffectNet even further.
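The text does not say which attention modules it has in mind, so as an illustrative choice here is a simplified sketch of Squeeze-and-Excitation (SE) style channel attention, one well-known lightweight module. Assumption: the learned excitation layers are collapsed into a single hypothetical weight matrix `w`; a real SE block uses two fully connected layers with a bottleneck and a ReLU between them.

```python
import math

def channel_attention(feature_maps, w):
    """Simplified Squeeze-and-Excitation-style channel attention.

    feature_maps: list of C channels, each an HxW list of lists.
    w: hypothetical CxC weight matrix standing in for the learned excitation layers.
    Returns the reweighted feature maps and the per-channel weights.
    """
    # Squeeze: global average pooling gives one summary number per channel.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_maps]
    # Excitation: a linear map followed by a sigmoid gives weights in (0, 1).
    logits = [sum(wij * s for wij, s in zip(row, squeezed)) for row in w]
    weights = [1.0 / (1.0 + math.exp(-z)) for z in logits]
    # Scale: multiply each channel by its weight so informative channels stand out.
    scaled = [[[v * a for v in row] for row in ch] for ch, a in zip(feature_maps, weights)]
    return scaled, weights

# Two 2x2 channels: one strongly active, one silent.
fmaps = [[[2.0, 2.0], [2.0, 2.0]], [[0.0, 0.0], [0.0, 0.0]]]
scaled, weights = channel_attention(fmaps, [[1.0, 0.0], [0.0, 1.0]])
print(weights)  # the active channel receives the larger weight
```

The appeal of this kind of module is its cost: it adds only a per-channel summary and a small weight computation on top of the existing feature maps.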

Building a Facial Emotion Recognition Model

Getting and Preparing Data

To build an accurate facial emotion recognition model, obtaining and preparing the right data is crucial. Large-scale, diverse datasets such as AffectNet provide a wide range of facial expressions and are essential for training robust models that generalize well. Unfortunately, labeled data remains limited, and this scarcity makes it harder to develop accurate models.

Researchers and organizations are working to overcome these data availability issues by creating publicly accessible emotion databases, such as AffectNet, that can be used to train face emotion recognition models. These databases contain a wide variety of labeled facial expressions, giving developers the data they need to build more effective emotion detection models.

Data reshaping plays a vital role in preparing images for training. Cropping and resizing standardize the input so that every sample has the same dimensions and the face occupies a consistent region of the frame. Reducing variation in image size, framing, and orientation in this way helps the model focus on the expression itself, which improves recognition accuracy.
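A minimal preprocessing sketch, assuming grayscale images stored as lists of rows with 8-bit pixel values and a face that is already roughly centered (real pipelines usually locate the face with a detector first). The helper names and the crop and output sizes are illustrative choices, not values from a specific study.

```python
def center_crop(img, size):
    """Crop a size x size square from the middle of an HxW image (list of rows)."""
    h, w = len(img), len(img[0])
    top, left = (h - size) // 2, (w - size) // 2
    return [row[left:left + size] for row in img[top:top + size]]

def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize; image libraries often use bilinear interpolation instead."""
    h, w = len(img), len(img[0])
    return [[img[i * h // out_h][j * w // out_w] for j in range(out_w)] for i in range(out_h)]

def normalize(img):
    """Scale 8-bit pixel values to the range [0, 1]."""
    return [[v / 255.0 for v in row] for row in img]

def preprocess(img, crop=4, out=2):
    """Crop, resize and normalize so every sample has the same shape and value range."""
    return normalize(resize_nearest(center_crop(img, crop), out, out))

# Toy 6x8 image whose pixel value encodes its position (10 * row + column).
img = [[10 * y + x for x in range(8)] for y in range(6)]
print(preprocess(img))
```

In practice the crop size and output resolution are fixed for a given model (every training and test image must match the network's input shape), which is exactly the consistency the paragraph above describes.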

In addition to reshaping the data, developers use augmentation to increase the diversity of training samples. Techniques such as small rotations and horizontal flips create new variations of an image without changing the emotion it shows. Augmenting the data in this way improves the model’s ability to generalize and recognize emotions accurately.
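The two augmentations named above can be sketched in pure Python. This assumes grayscale images as lists of rows; the limit of 15 degrees and the 50% flip probability are illustrative assumptions, and production code would typically rely on a library’s augmentation utilities instead.

```python
import math
import random

def hflip(img):
    """Mirror the image left-right; a mirrored smile is still a smile."""
    return [row[::-1] for row in img]

def rotate(img, degrees, fill=0):
    """Rotate about the image centre using inverse mapping and nearest-neighbour sampling."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    t = math.radians(degrees)
    cos_t, sin_t = math.cos(t), math.sin(t)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Find the source pixel that lands on (y, x) after rotation.
            sx = cos_t * (x - cx) + sin_t * (y - cy) + cx
            sy = -sin_t * (x - cx) + cos_t * (y - cy) + cy
            ix, iy = round(sx), round(sy)
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = img[iy][ix]
    return out

def augment(img, rng, max_degrees=15):
    """Randomly flip, then apply a small random rotation; the emotion label is unchanged."""
    if rng.random() < 0.5:
        img = hflip(img)
    return rotate(img, rng.uniform(-max_degrees, max_degrees))

img = [[5 * y + x for x in range(5)] for y in range(5)]
augmented = augment(img, random.Random(0))
```

Rotations are kept small on purpose: a face tilted by a few degrees is realistic, while large rotations would produce inputs the model never sees at test time.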

Proper preprocessing and augmentation significantly affect the performance of face emotion detection systems. They let models learn from a more comprehensive range of examples and reduce biases caused by small or unbalanced datasets.
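A common complement to augmentation for unbalanced data, not described in the text but widely used, is to weight the training loss so that rare emotions count for more. The sketch below computes "balanced" class weights, n_samples / (n_classes * class_count), the same heuristic scikit-learn applies for `class_weight="balanced"`. The label counts are made up for illustration.

```python
from collections import Counter

def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count).

    Rare emotions get larger weights, so a weighted loss pays more attention to them,
    and every class contributes the same total weight overall.
    """
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}

# Hypothetical, deliberately unbalanced label distribution.
labels = ["happy"] * 6 + ["sad"] * 3 + ["fear"] * 1
weights = inverse_frequency_weights(labels)
print(weights)
```

Here "happy" gets a weight of about 0.56, "sad" about 1.11, and "fear" about 3.33, so the single fear example carries as much total weight as the six happy ones.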

Training and Testing the Model

Once the data is prepared, it’s time to train and test the facial emotion recognition model. Training optimizes the model’s parameters on labeled data, with the goal of making the model learn the patterns associated with different emotions accurately.
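Training can be illustrated with the simplest possible classifier: a linear softmax model fitted by gradient descent on cross-entropy loss. This is a stand-in for a CNN, assuming each face has already been reduced to a short feature vector; the two features, the labels, and the learning rate in the toy data are hypothetical.

```python
import math

def softmax(z):
    m = max(z)                      # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def train_softmax(xs, ys, n_classes, lr=0.5, epochs=200):
    """Fit a linear softmax classifier with full-batch gradient descent on cross-entropy."""
    d = len(xs[0])
    W = [[0.0] * d for _ in range(n_classes)]
    b = [0.0] * n_classes
    losses = []
    for _ in range(epochs):
        gW = [[0.0] * d for _ in range(n_classes)]
        gb = [0.0] * n_classes
        loss = 0.0
        for x, y in zip(xs, ys):
            p = softmax([sum(w * xi for w, xi in zip(W[k], x)) + b[k] for k in range(n_classes)])
            loss -= math.log(p[y])
            for k in range(n_classes):
                err = p[k] - (1.0 if k == y else 0.0)   # gradient of the loss w.r.t. logit k
                gb[k] += err
                for j in range(d):
                    gW[k][j] += err * x[j]
        n = len(xs)
        for k in range(n_classes):                       # one gradient-descent step
            b[k] -= lr * gb[k] / n
            for j in range(d):
                W[k][j] -= lr * gW[k][j] / n
        losses.append(loss / n)                          # mean loss before this step
    return W, b, losses

def predict(W, b, x):
    scores = [sum(w * xi for w, xi in zip(W[k], x)) + b[k] for k in range(len(W))]
    return max(range(len(scores)), key=scores.__getitem__)

# Hypothetical 2-D features, e.g. [mouth-corner lift, brow lowering];
# labels: 0 = happy, 1 = angry, 2 = neutral.
xs = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9], [0.0, 0.0], [0.1, 0.1]]
ys = [0, 0, 1, 1, 2, 2]
W, b, losses = train_softmax(xs, ys, n_classes=3)
print(losses[0], losses[-1], [predict(W, b, x) for x in xs])
```

A CNN is trained the same way in principle; the difference is that the gradients flow back through many convolutional layers, which frameworks compute automatically.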

To evaluate how the trained model performs on unseen data, it must be tested on a separate test set that was not used during training. This shows whether the model generalizes beyond recognizing emotions in the images it has already seen. Techniques like cross-validation make this evaluation more reliable.

Cross-validation divides the available data into several subsets, called folds. One fold is held out for testing and the remaining folds are used for training; the process repeats until each fold has served once as the test set. Averaging the results across these iterations gives a more reliable estimate of the model’s performance.
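The procedure just described can be sketched as an index generator plus an averaging loop. The `evaluate` callback is a hypothetical stand-in for "train on these indices, score on those"; the shuffling seed and fold layout are illustrative choices.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) pairs; every sample lands in exactly one test fold."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)            # shuffle once, reproducibly
    folds = [idx[i::k] for i in range(k)]       # k folds of near-equal size
    for i in range(k):
        test = folds[i]
        train = [j for fold in folds[:i] + folds[i + 1:] for j in fold]
        yield train, test

def cross_validate(n_samples, k, evaluate):
    """Average the score over k folds.

    evaluate(train_idx, test_idx) is a hypothetical callback that trains a model on
    train_idx and returns its score on test_idx.
    """
    scores = [evaluate(tr, te) for tr, te in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)

for train_idx, test_idx in k_fold_indices(10, 5):
    print(len(train_idx), len(test_idx))  # 8 training and 2 test indices per fold
```

One design note: facial emotion datasets often contain several images of the same person, and splitting by image can leak identity between training and test folds. Grouping folds by subject gives a more honest estimate.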

Applications and Benefits of Emotion Detection

Emotional Recognition from Facial Expressions

Facial expressions serve as powerful indicators of our emotions. They provide valuable cues that help us recognize emotional states such as happiness, sadness, anger, fear, disgust, and surprise. Our faces display specific muscle movements, known as action units (AUs) in the Facial Action Coding System (FACS), that are associated with different emotions. Analyzing combinations of these AUs can help identify even subtle emotional states.
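As a rough illustration of combining action units, the sketch below matches a set of detected AUs against simplified emotion prototypes. The AU sets follow the commonly cited EMFACS-style prototypes with intensity markers dropped, so treat them as approximate; the AU detection step itself is assumed to come from a separate, hypothetical model.

```python
# Simplified emotion prototypes as sets of FACS action units
# (commonly cited EMFACS-style combinations, intensity markers omitted).
PROTOTYPES = {
    "happiness": {6, 12},                # cheek raiser + lip corner puller
    "sadness": {1, 4, 15},
    "surprise": {1, 2, 5, 26},
    "fear": {1, 2, 4, 5, 7, 20, 26},
    "anger": {4, 5, 7, 23},
    "disgust": {9, 15, 16},
}

def jaccard(a, b):
    """Overlap between two AU sets: |intersection| / |union|."""
    return len(a & b) / len(a | b) if a | b else 0.0

def match_emotion(detected_aus):
    """Return the prototype with the highest overlap with the detected AUs, and the score."""
    best = max(PROTOTYPES, key=lambda e: jaccard(PROTOTYPES[e], detected_aus))
    return best, jaccard(PROTOTYPES[best], detected_aus)

print(match_emotion({6, 12}))          # ('happiness', 1.0)
print(match_emotion({4, 5, 7, 23, 24}))
```

Rule-based matching like this is easy to inspect, but it ignores intensity and timing; learned classifiers usually take AU activations as input features instead of hard-coded rules.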

For example, when someone is happy, their eyes may crinkle at the corners and their mouth curves upward into a smile. On the other hand, when someone is angry, their eyebrows might furrow together while their lips press tightly. These distinct facial expressions can be detected and analyzed using advanced technology to determine the corresponding emotions.

Face Emotion Recognition for Images and Videos

The applications of face emotion detection extend beyond static images to dynamic videos as well. With real-time video analysis techniques, it becomes possible to continuously monitor emotional states during interactions or events. This opens up a wide range of possibilities in fields such as psychology, marketing research, customer service training, and human-computer interaction.

One technique commonly used in face emotion recognition for videos is optical flow analysis. Optical flow refers to the pattern of apparent motion between consecutive frames in a video sequence. By tracking these temporal changes in facial expressions over time using optical flow analysis algorithms, we can gain deeper insights into how emotions evolve during certain activities or conversations.
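Lucas-Kanade is one classic way to estimate optical flow, chosen here for illustration because it fits in a few lines; the text does not name a specific algorithm. The sketch estimates a single motion vector for one window, assuming grayscale frames as lists of rows and small motion between frames. Libraries such as OpenCV provide optimized, pyramidal implementations for real video.

```python
def lucas_kanade_window(frame1, frame2, y0, y1, x0, x1):
    """Estimate one (u, v) motion vector for rows y0..y1-1 and columns x0..x1-1.

    Frames are 2D lists of grey levels indexed [y][x]. Spatial gradients are central
    differences averaged over both frames; the window must not touch the border.
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(y0, y1):
        for x in range(x0, x1):
            ix = ((frame1[y][x + 1] - frame1[y][x - 1]) + (frame2[y][x + 1] - frame2[y][x - 1])) / 4
            iy = ((frame1[y + 1][x] - frame1[y - 1][x]) + (frame2[y + 1][x] - frame2[y - 1][x])) / 4
            it = frame2[y][x] - frame1[y][x]       # temporal change at this pixel
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("window has too little texture (aperture problem)")
    # Least-squares solution of [sxx sxy; sxy syy] [u v]^T = -[sxt syt]^T (Cramer's rule).
    u = (-sxt * syy + syt * sxy) / det
    v = (-syt * sxx + sxt * sxy) / det
    return u, v

# Toy frames: a smooth pattern that moves one pixel to the right between frames.
frame1 = [[x * x + y * y for x in range(10)] for y in range(10)]
frame2 = [[(x - 1) ** 2 + y * y for x in range(10)] for y in range(10)]
print(lucas_kanade_window(frame1, frame2, 2, 8, 2, 8))  # (1.0, 0.0)
```

Applied over many windows and consecutive frames, vectors like this show how regions such as the mouth corners or brows move, which is the temporal signal the paragraph above describes.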

For instance, imagine a scenario where researchers want to analyze participants’ reactions while watching a suspenseful movie scene. By applying face emotion detection algorithms to track changes in facial expressions frame by frame throughout the scene’s duration, they can precisely measure how individuals respond emotionally at different moments—whether it’s fear during intense scenes or relief after a suspenseful climax.
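Frame-by-frame predictions are usually noisy, so per-frame scores are often smoothed before being interpreted. A minimal sketch using an exponential moving average, assuming a hypothetical per-frame classifier that returns a probability for each emotion; the smoothing factor `alpha` is an illustrative value.

```python
def smooth_scores(frame_scores, alpha=0.3):
    """Exponential moving average over per-frame emotion probabilities.

    frame_scores: list of dicts such as {"fear": 0.7, "neutral": 0.3}, one per frame,
    from a hypothetical per-frame classifier. Smaller alpha means stronger smoothing.
    """
    smoothed, state = [], None
    for scores in frame_scores:
        if state is None:
            state = dict(scores)                 # the first frame initializes the average
        else:
            state = {k: alpha * scores[k] + (1 - alpha) * state[k] for k in scores}
        smoothed.append(state)
    return smoothed

def dominant_per_frame(frame_scores, alpha=0.3):
    """Most likely emotion in each frame after smoothing."""
    return [max(s, key=s.get) for s in smooth_scores(frame_scores, alpha)]

neutral = {"neutral": 0.9, "fear": 0.1}
fear = {"neutral": 0.1, "fear": 0.9}
print(dominant_per_frame([neutral, neutral, neutral, fear, neutral, neutral]))
print(dominant_per_frame([neutral, fear, fear, fear, fear, fear]))
```

With this setting a single-frame spike of fear is treated as noise, while fear that persists for a few frames becomes the dominant emotion; choosing `alpha` trades responsiveness against stability.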

Moreover, this technology has practical implications in industries like advertising and retail. Companies can use face emotion detection to gauge customers’ emotional responses to their products or advertisements. By understanding how consumers react emotionally, businesses can tailor their marketing strategies and product designs accordingly, ensuring a more targeted and effective approach.

Experimental Methods and Results

Methodology for Emotion Detection Studies

Emotion detection studies play a crucial role in understanding how machines can recognize and interpret human emotions. These studies typically involve collecting labeled datasets of facial expressions from human subjects. By analyzing these expressions, researchers can train machine learning models to accurately detect emotions.

To evaluate the performance of emotion detection models, researchers use metrics such as accuracy, precision, recall, and F1 score. These metrics show how well the models classify different emotional states from facial cues. Accuracy measures the overall proportion of correct predictions. Precision measures how many of the model’s detections of an emotion are correct, so it penalizes false positives, while recall measures how many actual instances of that emotion the model finds, so it penalizes false negatives. The F1 score is the harmonic mean of precision and recall and gives a balanced measure of performance.
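These metrics can be computed directly from lists of true and predicted labels. A minimal sketch; reporting the macro-averaged F1 (the unweighted mean over classes) is an illustrative choice that keeps rare emotions from being drowned out by common ones.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, per-class (precision, recall, F1), and macro-averaged F1."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    per_class = {}
    for c in labels:
        tp = sum(t == c and p == c for t, p in pairs)   # correctly detected
        fp = sum(t != c and p == c for t, p in pairs)   # wrongly predicted as c
        fn = sum(t == c and p != c for t, p in pairs)   # missed instances of c
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class[c] = (precision, recall, f1)
    macro_f1 = sum(f for _, _, f in per_class.values()) / len(per_class)
    return accuracy, per_class, macro_f1

y_true = ["happy", "happy", "sad", "sad", "angry"]
y_pred = ["happy", "sad", "sad", "sad", "happy"]
accuracy, per_class, macro_f1 = classification_metrics(y_true, y_pred)
print(accuracy, per_class, macro_f1)
```

In this toy example accuracy is 0.6, but the per-class view shows that "angry" is never detected at all (F1 of 0), which accuracy alone would hide.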

Methodologies for emotion detection studies often include several steps. Data preprocessing is an essential part of preparing the dataset for analysis by removing noise or irrelevant information that could affect the model’s performance. Model selection involves choosing an appropriate algorithm or architecture that best suits the task at hand. Hyperparameter tuning helps optimize the model’s parameters to achieve better results. Lastly, performance evaluation compares different models using various metrics to determine their effectiveness in detecting emotions accurately.
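Hyperparameter tuning is often done by exhaustive grid search. A minimal sketch; the `evaluate` callback, the parameter names, and the toy scoring function are hypothetical stand-ins for "train with these settings and return a validation score".

```python
from itertools import product

def grid_search(param_grid, evaluate):
    """Try every combination in param_grid and keep the one with the best score.

    evaluate(params) is a hypothetical callback that trains with `params` and returns
    a validation score (higher is better). The final test set should not be used here.
    """
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[n] for n in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

grid = {"lr": [0.1, 0.01, 0.001], "batch_size": [16, 32, 64]}
# Toy scoring function that happens to peak at lr=0.01, batch_size=32.
toy_score = lambda p: -abs(p["lr"] - 0.01) - abs(p["batch_size"] - 32) / 1000
print(grid_search(grid, toy_score))
```

Grid search grows multiplicatively with each added parameter, so random search or more adaptive methods are common when many hyperparameters are tuned at once.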

Discussing Experimental Results

Experimental results provide valuable insights into the effectiveness of different face emotion detection models. Through rigorous experiments, researchers compare various methods based on factors such as accuracy, speed, and computational resources required.

For example, an ablation experiment may be conducted where specific components or features of a model are systematically removed to analyze their impact on overall performance. This helps identify which aspects contribute most significantly to accurate emotion detection.
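An ablation study can be organized as "score the full model, then score it with each component removed". In this sketch, `evaluate` is a hypothetical callback and the component names and contributions are made up purely for illustration.

```python
def ablation_study(components, evaluate):
    """Return the drop in score caused by removing each component in turn.

    evaluate(enabled) is a hypothetical callback that trains and evaluates a model
    using only the components in the set `enabled` (e.g. returning validation accuracy).
    """
    full = evaluate(frozenset(components))
    return {c: full - evaluate(frozenset(components) - {c}) for c in components}

# Made-up, additive contributions used only to exercise the function.
contrib = {"attention": 0.04, "augmentation": 0.03, "face_alignment": 0.05}
toy_evaluate = lambda enabled: 0.50 + sum(contrib[c] for c in enabled)
print(ablation_study(set(contrib), toy_evaluate))
```

Real components rarely contribute independently, so results from removing one component at a time should be read as indications rather than an exact breakdown of the score.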

Detailed analysis of experimental results aids in understanding both the strengths and limitations of various techniques used in face emotion detection. By examining expression changes captured by different methods or algorithms, researchers gain a deeper understanding of how these models interpret and classify emotions. This knowledge can be used to refine existing models or develop new approaches that better capture the nuances of human emotional states.

Challenges and Limitations in the Field

Cross-Database Validation Issues

Validating face emotion detection models across different databases is essential for assessing their generalization capabilities. This process involves testing the performance of a model trained on one dataset on another dataset to ensure its reliability and robustness. However, cross-database validation poses several challenges.
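Cross-database validation is often reported as a matrix: train on each dataset and test on every dataset. A minimal sketch in which `train` and `evaluate` are hypothetical callbacks; within-dataset entries should use that dataset’s held-out test split, not its training images.

```python
def cross_dataset_matrix(datasets, train, evaluate):
    """Train on each dataset and evaluate on every dataset.

    train(name) and evaluate(model, name) are hypothetical callbacks. Diagonal entries
    are within-dataset scores; off-diagonal entries measure generalization.
    """
    matrix = {}
    for src in datasets:
        model = train(src)
        for tgt in datasets:
            matrix[(src, tgt)] = evaluate(model, tgt)
    return matrix

def generalization_gap(matrix, src, datasets):
    """Within-dataset score minus the mean score on the other datasets."""
    others = [matrix[(src, t)] for t in datasets if t != src]
    return matrix[(src, src)] - sum(others) / len(others)

# Toy stand-ins: the "model" is just the source name, and scores are made up.
names = ["A", "B", "C"]
m = cross_dataset_matrix(names, train=lambda name: name,
                         evaluate=lambda model, tgt: 0.8 if model == tgt else 0.5)
print(generalization_gap(m, "A", names))
```

A large gap between the diagonal and off-diagonal entries is the practical symptom of the image-quality, lighting, and demographic differences discussed below.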

One challenge is the significant variations in image quality that exist across different databases. Images may differ in terms of resolution, noise levels, and overall clarity, making it difficult for models to accurately detect emotions. Lighting conditions also play a crucial role in face emotion detection. Changes in lighting can affect the visibility of facial features, leading to inconsistencies in emotion recognition.

Demographics and cultural factors further complicate cross-database validation. Different populations may express emotions differently because of cultural norms and individual differences, so models trained on one specific dataset may not generalize well to other populations or cultural contexts. For example, certain expressions that are common in one culture may be rare or even absent in another.

To address these challenges, researchers need to develop strategies for adapting face emotion detection models to various databases. This could involve techniques such as data augmentation, where synthetic samples are generated to simulate variations found in different datasets. Collecting more diverse datasets that encompass a wide range of demographics and cultures can help improve the generalizability of these models.
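One simple form of augmentation aimed at the lighting differences mentioned above is photometric jitter. A minimal sketch, assuming grayscale images with values in [0, 1]; the brightness, contrast, and gamma ranges are illustrative assumptions rather than recommended settings.

```python
import random

def jitter_lighting(img, rng, max_brightness=0.2, contrast_range=(0.8, 1.2), gamma_range=(0.7, 1.4)):
    """Randomly change brightness, contrast and gamma of an image with values in [0, 1]."""
    b = rng.uniform(-max_brightness, max_brightness)
    c = rng.uniform(*contrast_range)
    g = rng.uniform(*gamma_range)
    out = []
    for row in img:
        new_row = []
        for v in row:
            v = (v - 0.5) * c + 0.5 + b      # contrast around mid-grey, then brightness shift
            v = min(1.0, max(0.0, v))        # clip back into [0, 1]
            new_row.append(v ** g)           # gamma curve mimics non-linear lighting changes
        out.append(new_row)
    return out

img = [[0.0, 0.3, 0.6, 1.0], [0.2, 0.4, 0.8, 0.9]]
darker_or_brighter = jitter_lighting(img, random.Random(1))
```

Applying a fresh random jitter each time an image is used exposes the model to many lighting conditions without collecting new data, although it cannot substitute for genuinely diverse faces.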

Limitations of Current Detection Models

While face emotion detection has made significant advancements, current models still have limitations. They often struggle with nuanced or mixed emotions, which involve subtle facial cues that can be challenging for algorithms to detect reliably.

Another limitation lies in the training data used for these models. Many existing datasets consist predominantly of images from Western populations, which may introduce biases when models are applied to other demographics. Ethical considerations regarding bias and fairness need to be addressed when deploying emotion detection systems.

To overcome these limitations, researchers are exploring new approaches to improve the accuracy and inclusivity of face emotion detection models. One approach is the use of multimodal data, combining facial expressions with other modalities such as voice or body language. This can provide a more comprehensive understanding of emotions and enhance the performance of detection models.
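One simple way to combine modalities is late fusion: run a separate classifier per modality and average their probability outputs. A minimal sketch; the two classifiers are hypothetical, and the face weight of 0.6 is an assumed value that would normally be tuned on validation data.

```python
def late_fusion(face_probs, voice_probs, face_weight=0.6):
    """Weighted average of per-modality emotion probabilities, renormalized to sum to 1.

    face_probs / voice_probs: dicts mapping emotion -> probability from two separate,
    hypothetical classifiers. Emotions missing from one modality count as 0 there.
    """
    emotions = set(face_probs) | set(voice_probs)
    fused = {e: face_weight * face_probs.get(e, 0.0) + (1 - face_weight) * voice_probs.get(e, 0.0)
             for e in emotions}
    total = sum(fused.values())
    return {e: p / total for e, p in fused.items()} if total else fused

face = {"neutral": 0.5, "happy": 0.4, "sad": 0.1}    # the face alone is ambiguous
voice = {"happy": 0.7, "neutral": 0.2, "sad": 0.1}   # the voice sounds cheerful
fused = late_fusion(face, voice)
print(max(fused, key=fused.get))  # happy
```

Late fusion is easy to add to existing single-modality models; feature-level (early) fusion can capture interactions between modalities but requires training a joint model.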

Efforts are being made to collect more diverse datasets that represent a broader range of demographics and cultures. By incorporating data from different populations, researchers can develop models that are more inclusive and better able to recognize emotions across various contexts.

Ethical Considerations and Data Rights

Ethics Declarations in Studies

Research studies involving face emotion detection should include ethics declarations regarding data collection and participant consent. These declarations are crucial to ensure privacy protection, informed consent, and responsible use of sensitive information. By explicitly stating the ethical considerations, researchers demonstrate their commitment to upholding ethical standards in emotion recognition studies.

Transparency in research practices is essential for building trust and promoting ethical standards in the field of face emotion detection. When conducting studies, researchers must clearly outline their data collection methods, including how they obtain and store facial images or videos. This transparency allows participants to make informed decisions about whether they want to participate and share their personal data for the study.

Informed consent is a critical aspect of ethical research. It ensures that participants understand the purpose of the study, how their data will be used, and any potential risks involved. Researchers should provide clear explanations about the nature of face emotion detection technology and its limitations to ensure participants have realistic expectations.

Furthermore, researchers must take steps to protect individual privacy when collecting facial data for emotion detection purposes. This includes anonymizing or de-identifying the data so that individuals cannot be personally identified. Researchers should also implement strong security measures to safeguard collected data from unauthorized access or breaches.
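A common first step in de-identification is replacing participant IDs with keyed pseudonyms. The sketch below uses HMAC-SHA256 from Python’s standard library; the ID format and record layout are hypothetical. Note that this is pseudonymization, not full anonymization, because the face images themselves can still identify a person.

```python
import hashlib
import hmac
import secrets

def pseudonymize(participant_id, key):
    """Replace a participant ID with a keyed hash (HMAC-SHA256, hex encoded).

    Unlike a plain hash, someone without the key cannot confirm guesses by hashing
    a list of candidate IDs. The same ID and key always give the same pseudonym.
    """
    return hmac.new(key, participant_id.encode("utf-8"), hashlib.sha256).hexdigest()

key = secrets.token_bytes(32)   # store separately from the data, under access control
record = {"participant": pseudonymize("P-0042", key), "label": "surprise"}
print(record)
```

Keeping the key apart from the dataset means the research team can still link records from the same participant, while a leaked copy of the data alone does not reveal who the participants are.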

Rights and Permissions for Data Use

When conducting face emotion detection studies, researchers must obtain appropriate rights and permissions for using publicly available or proprietary datasets. Compliance with data usage policies ensures both legal and ethical handling of sensitive information.

If researchers utilize publicly available datasets, they should verify whether these datasets were obtained legally with proper permissions from individuals whose faces are included in the dataset. Proper attribution and citation practices also acknowledge the contributions of dataset creators.

For proprietary datasets or those obtained through collaborations with other organizations or institutions, it is essential to establish clear agreements regarding data ownership, usage rights, confidentiality, and intellectual property rights. These agreements help protect both researchers’ and participants’ rights while ensuring responsible data usage.

By obtaining the necessary rights and permissions, researchers can conduct their studies in an ethical manner, respecting the privacy and consent of individuals whose data is being used. This also helps prevent any legal implications that may arise from unauthorized or unethical use of sensitive information.

Contributing to the Field of Emotion Detection

Acknowledgements in Research

In the field of emotion detection, acknowledging the contributions and support received from various individuals or organizations is crucial. The acknowledgments section in research papers allows researchers to express gratitude and recognize those who have played a role in their work.

By acknowledging funding sources, technical assistance, or collaboration, researchers enhance transparency and credibility. The acknowledgments show readers which resources made the research possible and help build trust in the findings. For example, if a study on face emotion detection used the AffectNet dataset, acknowledging its creators would give credit where it is due.

Moreover, acknowledgments foster a sense of community among researchers. By recognizing individuals who provided valuable input or assistance during the research process, researchers encourage future collaborations and knowledge-sharing. This collaborative spirit contributes to advancements in emotion detection technology as experts come together to refine methodologies and address challenges.

Author Contributions and Affiliations

To ensure transparency and accountability, research papers often include an author contributions section that outlines each author’s specific roles and contributions within the project. This section highlights individual efforts while also demonstrating collective collaboration.

Clearly attributed authorship responsibilities let readers understand who was responsible for each part of the research process. For instance, one author may have been involved in data collection while another focused on data analysis for face emotion detection algorithms. These details provide clarity regarding expertise and specialization within a team.

Affiliations are another important aspect of authorship information. They indicate which institutions or organizations authors are affiliated with when conducting their research. This information provides context about potential biases or conflicts of interest that could influence the study’s findings.

Clear authorship attribution not only facilitates academic recognition but also enables others to reach out for collaboration or further exploration of related topics. It allows researchers to establish their expertise within their respective fields while fostering connections with peers working on similar areas of interest.

Additional Resources and Information

Additional Information on Recognition Technologies

Face emotion detection is just one application of computer vision technology. Computer vision encompasses a wide range of recognition technologies that can analyze and interpret visual data. In addition to face emotion detection, other recognition technologies include object detection, image classification, and gesture recognition.

Object detection allows computers to identify and locate specific objects within an image or video. This technology has various applications, such as in autonomous vehicles for detecting pedestrians or in surveillance systems for identifying suspicious objects.

Image classification involves categorizing images into different classes or categories based on their content. This technology is commonly used in applications like content filtering, where images are classified as safe or explicit.

Gesture recognition focuses on interpreting human gestures captured by cameras or sensors. It enables devices to understand hand movements and gestures, allowing users to interact with them without physical contact. Gesture recognition has been integrated into gaming consoles, smart TVs, and virtual reality systems.

Exploring these additional recognition technologies provides a broader understanding of the capabilities of computer vision. Each technology has its own unique use cases and contributes to advancements in various fields such as healthcare, security, entertainment, and more.

Citing This Article on Emotion Detection

Proper citation of this article is essential for readers who want to access the original source for further information on face emotion detection. When citing this article, it is important to follow proper citation guidelines to ensure accuracy and maintain academic integrity.

Accurate citation acknowledges the intellectual contribution of the authors and supports the credibility of the information presented. By providing a clear reference to this article, readers can easily locate the source material when conducting research or seeking additional insights into face emotion detection.

When citing this article on emotion detection, consider including relevant details such as the author’s name(s), title of the article, publication date, website or platform where it was published (if applicable), and any other necessary information required by your citation style guide.

Consistently following citation guidelines not only ensures proper referencing but also promotes transparency and accountability in the dissemination of information. It allows readers to trace the origins of ideas, theories, and findings back to their original sources, facilitating a more comprehensive understanding of the subject matter.

Conclusion

So there you have it, folks! We’ve explored the fascinating world of facial emotion detection. From understanding the basics to delving into advancements and experimental methods, we’ve covered a lot of ground. Emotion detection technology has come a long way and has tremendous potential in various applications, from healthcare to marketing.

But it’s not all smooth sailing. We’ve also discussed the challenges and limitations in this field, along with ethical considerations and data rights. It’s crucial that we continue to address these issues and ensure responsible use of this technology.

As you wrap up reading this article, I encourage you to ponder the implications of facial emotion detection in your own life. How can this technology be leveraged for positive change? And how can we mitigate any potential risks or biases? Let’s keep the conversation going and explore ways to make emotion detection more accurate, reliable, and inclusive. Together, we can shape a future where technology truly understands and responds to our emotions.

Frequently Asked Questions

What is facial emotion detection?

Facial emotion detection is a technology that uses computer vision and machine learning algorithms to analyze facial expressions and determine the emotions displayed by an individual. It can identify emotions such as happiness, sadness, anger, surprise, and more.

How does facial emotion detection work?

Facial emotion detection works by analyzing key facial features like eyebrows, eyes, mouth, and overall facial expression. Machine learning models are trained on large datasets of labeled images to recognize patterns associated with different emotions. These models then classify new faces based on these learned patterns.

What are the applications of emotion detection technology?

Emotion detection technology has various applications across industries. It can be used in market research to gauge consumer reactions to products or advertisements. In healthcare, it can assist in diagnosing mental health disorders. It also finds application in human-computer interaction systems and personalized advertising.

What are the challenges in facial emotion detection?

One of the main challenges in facial emotion detection is accurately interpreting complex emotions that involve subtle variations in facial expressions. Lighting conditions, occlusions (such as glasses or masks), and individual differences also pose challenges for accurate emotion recognition.

Are there any ethical considerations related to using emotion detection technology?

Yes, there are ethical considerations when using emotion detection technology. Privacy concerns arise when capturing individuals’ emotional data without their consent. Biases can be introduced if the training data used for developing these technologies is not diverse enough, leading to potential discrimination issues.