Real-time 3D face tracking tools such as Faceware Realtime and Sentimask use computer vision algorithms to analyze and track facial movements, including face angles and gaze direction, as they happen. The technology underpins applications in augmented reality (AR), virtual reality (VR), video games, and motion capture (mocap). By mapping users’ facial expressions onto avatars or characters in video, 3D face tracking raises user engagement and makes AR and VR experiences more immersive. Because facial movements are processed without noticeable delay, real-time tracking suits applications that need an immediate response, such as gaming and live video effects. Face AR SDKs expose this capability to developers, letting them track face angles and capture facial motion accurately.

Exploring Face Tracking Technologies

Snapchat Lenses

Snapchat lenses use 3D face tracking to apply interactive filters and effects to users’ faces. The tracker, a model trained on a dataset of faces, detects facial landmarks and uses them to overlay animations, masks, or makeup on the user’s image. For example, a user can add virtual sunglasses that align precisely with their eyes and nose. Lenses have become immensely popular because they transform a user’s appearance in real time.
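
The landmark-to-overlay step can be sketched in a few lines. This is a simplified 2D illustration, not Snapchat’s actual pipeline: it assumes a tracker has already returned pixel positions for the two eyes, and the function names and the `overlay_eye_distance` parameter are hypothetical.

```python
import math

def overlay_transform(left_eye, right_eye, overlay_eye_distance):
    """Return (scale, angle_radians, center) for placing an overlay on the eyes.

    left_eye / right_eye: (x, y) pixel positions from a landmark tracker.
    overlay_eye_distance: distance in pixels between the two lens centers in
    the overlay artwork (an assumed property of a hypothetical asset).
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    scale = math.hypot(dx, dy) / overlay_eye_distance  # resize artwork to the face
    angle = math.atan2(dy, dx)                         # in-plane head roll
    center = ((left_eye[0] + right_eye[0]) / 2,
              (left_eye[1] + right_eye[1]) / 2)        # anchor between the eyes
    return scale, angle, center

def place_point(point, scale, angle, center):
    """Map a point from overlay coordinates (origin between the lenses) to the image."""
    x, y = point
    c, s = math.cos(angle), math.sin(angle)
    return (center[0] + scale * (c * x - s * y),
            center[1] + scale * (s * x + c * y))
```

A full 3D tracker would also account for head yaw and pitch; this sketch only handles scale, roll, and position.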

The use of face tracking in Snapchat lenses has changed the way people engage with social media platforms, giving users interactive and personalized experiences. Augmented reality elements follow the user’s facial features, creating an entertaining and immersive experience for both creators and viewers.

TikTok Effects

TikTok uses 3D face tracking to generate visual effects on its platform. Users can add filters, stickers, and augmented reality elements that stay aligned with their facial features as they move and change expressions. For instance, a user could apply an effect that changes their eye color while maintaining realistic alignment throughout the video.

Face tracking in TikTok effects enhances the creativity and entertainment value of user-generated content by giving users new ways to express themselves through visual enhancements, and it makes experiences on the platform more immersive and dynamic.

AI Innovations

Artificial intelligence (AI) innovations have significantly improved the accuracy and robustness of face tracking systems, especially those employing 3D technologies. Machine learning algorithms enable facial tracking systems to learn from vast amounts of data on the movements and expressions of human faces. As a result, AI-driven 3D face tracking systems have become more reliable when adapted to different scenarios.

The integration of machine learning into face-tracking technologies not only improves accuracy but also enables these systems to adapt better over time as they encounter new variations in human faces or environmental conditions.

Open Source vs Proprietary Solutions

Feature Comparison

The available facial tracking software may vary in terms of features, such as the number of tracked landmarks or the ability to detect emotions. Users should compare these capabilities across different systems to ensure they meet their specific requirements. For instance, some software might excel in accurately tracking facial expressions and micro-movements, while others may prioritize real-time performance for interactive applications like virtual makeup try-ons or gaming avatars.

Considering feature comparison is crucial because it helps users choose the most suitable facial tracking software for their intended applications. For example, if a developer is creating an augmented reality (AR) app that requires precise facial feature detection and expression recognition for realistic filters and effects, they would prioritize a system with advanced landmark tracking and emotion detection capabilities.

Developers catering to diverse user bases might need software that supports multiple languages or offers customizable features based on cultural differences in facial expressions. Therefore, understanding each system’s unique features allows developers to align them with their project goals effectively.

Platform Compatibility

3D face tracking solutions can differ significantly in their compatibility with various platforms such as mobile devices, PCs, or gaming consoles. It is essential for developers to consider platform compatibility when selecting a facial tracking software to ensure seamless integration into the desired application.

For instance, if a developer aims to create an AR filter app targeting smartphone users specifically but chooses a solution incompatible with mobile platforms, it could hinder user accessibility and limit the app’s reach. On the other hand, choosing compatible software ensures optimal performance across different devices without sacrificing functionality.

Developers should choose solutions that support their target platforms for optimal performance and user experience. This consideration not only enhances usability but also broadens market reach by allowing deployment across multiple platforms seamlessly.

Advancements in AI for Face Tracking

Designing AI Networks

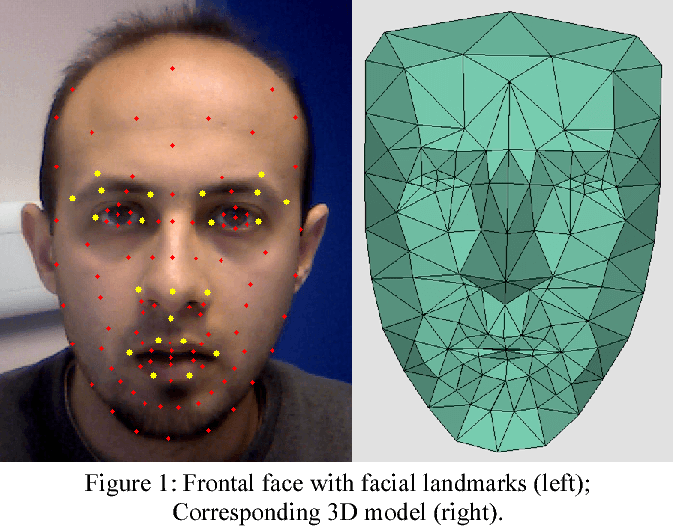

Designing AI networks for 3D face tracking involves creating architectures that can accurately detect and track facial landmarks. Deep learning techniques, such as convolutional neural networks (CNNs), are commonly used for this purpose. The design of AI networks plays a crucial role in achieving high accuracy and real-time performance in 3D face tracking systems.

For example, when designing an AI network for 3D face tracking, the architecture must be capable of identifying key facial landmarks like the eyes, nose, and mouth with precision. This requires the use of specialized layers within the CNN to extract intricate features from input images or video frames. These features form the basis for accurately predicting and tracking facial landmarks in three dimensions.
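
To make the feature-extraction step concrete, here is a minimal sketch of the operation a single convolutional layer performs. The patch and the kernel are made-up illustrative values; a trained network learns its kernels from data and stacks many such layers.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation, the core operation of a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(feature_map):
    """Zero out negative responses, as a typical CNN activation does."""
    return [[max(0.0, v) for v in row] for row in feature_map]

# Hypothetical 4x4 grayscale patch: dark on the left, bright on the right.
patch = [[0, 0, 1, 1]] * 4
# Hand-picked vertical-edge kernel (an assumption for illustration only).
edge_kernel = [[-1, 0, 1]] * 3
features = relu(conv2d(patch, edge_kernel))
```

The resulting feature map responds strongly where the patch changes from dark to bright, which is the kind of local cue a landmark detector builds on.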

The effectiveness of AI network design directly impacts the system’s ability to perform real-time 3D face tracking tasks efficiently without compromising accuracy. Therefore, careful consideration is given to optimizing network architectures to meet specific performance requirements.

Training AI Models

Training AI models for 3D face tracking requires large datasets containing annotated facial landmark positions. These datasets are used to train the models to accurately predict facial landmarks from input images or video frames. The quality and diversity of training data significantly impact the performance of trained AI models.

For instance, a diverse dataset encompassing various ethnicities, ages, and gender representations ensures that the trained model can generalize well across different demographic groups while maintaining accurate predictions of facial landmarks under varying conditions such as lighting and pose variations.

Furthermore, ensuring sufficient coverage of extreme poses or expressions within training data helps enhance robustness against challenging scenarios encountered during real-world applications like head rotations or occlusions by external objects.

Evaluating Performance

Evaluating the performance of 3D face tracking systems involves measuring accuracy, robustness, and computational efficiency. Metrics like mean error distance or frame rate can be used to assess system performance under various conditions. Thorough evaluation ensures that the chosen 3D face tracking solution meets desired requirements.

For example, a comprehensive evaluation process may test a system’s ability to maintain accurate landmark predictions across different illumination levels while also assessing its computational efficiency on hardware commonly used in AR/VR devices or smartphones.
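
The two metrics named above can be computed with a short helper each. This is a sketch under assumptions: landmarks are (x, y) tuples, the error is normalized by the ground-truth inter-ocular distance (a common convention, not one the text prescribes), and `track_frame` stands in for whatever tracker is being measured.

```python
import math
import time

def normalized_mean_error(predicted, ground_truth, left_eye_idx, right_eye_idx):
    """Mean landmark distance divided by the ground-truth inter-ocular distance."""
    distances = [math.dist(p, g) for p, g in zip(predicted, ground_truth)]
    inter_ocular = math.dist(ground_truth[left_eye_idx],
                             ground_truth[right_eye_idx])
    return sum(distances) / len(distances) / inter_ocular

def frames_per_second(track_frame, frames):
    """Time a tracker callable over a list of frames and return its frame rate."""
    start = time.perf_counter()
    for frame in frames:
        track_frame(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")
```

Normalizing by inter-ocular distance keeps the error comparable between faces that appear at different sizes in the image.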

Real-time 3D Face Tracking with Deep Learning

Deep learning algorithms, such as recurrent neural networks (RNNs) or graph convolutional networks (GCNs), play a pivotal role in enhancing the accuracy of 3D face tracking. These advanced algorithms harness the power of neural networks to capture intricate temporal dependencies and spatial relationships in facial movements. By doing so, they enable more precise and reliable tracking results, revolutionizing the field of 3D face tracking.

For instance, recurrent neural networks are adept at processing sequences of data, making them ideal for analyzing continuous facial movements during real-time tracking. On the other hand, graph convolutional networks excel at capturing complex spatial relationships within facial features, contributing to more accurate and robust 3D face tracking outcomes.
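
As a rough illustration of how a graph convolution mixes information between connected landmarks, the sketch below runs one propagation step. It uses simple mean aggregation over each node and its neighbours, which is one common variant rather than the exact formulation of any particular system; the graph, features, and weights are made-up values.

```python
def graph_conv(features, edges, weights):
    """One graph-convolution step: average each node with its neighbours,
    apply a shared linear map, then ReLU.

    features: one feature list per landmark (node).
    edges: undirected (a, b) index pairs linking related landmarks.
    weights: matrix of shape (input_dim, output_dim).
    """
    n = len(features)
    neighbours = [{i} for i in range(n)]  # include self-loops
    for a, b in edges:
        neighbours[a].add(b)
        neighbours[b].add(a)
    out = []
    for i in range(n):
        dim = len(features[i])
        mean = [sum(features[j][d] for j in neighbours[i]) / len(neighbours[i])
                for d in range(dim)]
        row = [sum(mean[d] * weights[d][k] for d in range(dim))
               for k in range(len(weights[0]))]
        out.append([max(0.0, v) for v in row])
    return out
```

Each output row depends on a landmark and its neighbours, so stacking several such steps lets information travel across the whole face mesh.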

Deep learning algorithms have significantly elevated the capabilities of real-time face tracking systems by enabling them to adapt to diverse facial expressions and movements with high precision. This has led to substantial improvements in applications such as virtual reality (VR), augmented reality (AR), and human-computer interaction.

Limitations and Advancements

Video-Based Challenges

Tracking facial movements in videos comes with unique challenges. Variations in lighting conditions, camera angles, and occlusions can affect the accuracy of the tracking process. To address these issues, advanced techniques such as optical flow analysis and feature matching are utilized. These methods help overcome video-based challenges by ensuring that 3D face tracking systems perform robustly in real-world scenarios.

For instance, when a person’s face is partially obscured or when they move from a well-lit area to a shadowed one, it can be challenging for the system to accurately track their facial movements. However, through the use of optical flow analysis and feature matching, these challenges can be mitigated. This ensures that regardless of environmental factors or variations in recording conditions, the 3D face tracking system remains reliable.
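
Optical flow analysis can be illustrated with a single-window Lucas-Kanade estimate, one classic optical flow method (the text does not say which method a given tracker uses). The sketch assumes small motion and constant brightness, and the synthetic patch generator is purely a test fixture.

```python
def lucas_kanade(prev, curr):
    """Estimate one (u, v) translation for a small patch.

    prev / curr: equally sized 2D lists of grayscale intensities.
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(1, len(prev) - 1):
        for x in range(1, len(prev[0]) - 1):
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0  # horizontal gradient
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0  # vertical gradient
            it = curr[y][x] - prev[y][x]                  # temporal change
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("patch has too little texture to estimate motion")
    # Least-squares solution of Ix*u + Iy*v + It = 0 over the window.
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v

def synthetic_patch(dx=0.0, dy=0.0, half=4):
    """Smooth test image x^2 + xy + y^2, shifted by (dx, dy) pixels."""
    def f(x, y):
        return x * x + x * y + y * y
    return [[f(x - dx, y - dy) for x in range(-half, half + 1)]
            for y in range(-half, half + 1)]
```

Calling `lucas_kanade(synthetic_patch(), synthetic_patch(0.2, -0.1))` recovers a motion close to (0.2, -0.1). The explicit failure on low-texture patches mirrors why flat or occluded regions are hard to track in practice.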

The robust performance achieved by overcoming video-based challenges is crucial for applications like augmented reality filters in social media platforms or facial motion capture for movies and games. By addressing these obstacles effectively, developers can create more immersive experiences for users without compromising on accuracy.

3D Morphable Models

3D morphable models play a pivotal role in enabling accurate reconstruction from 2D images or videos during real-time 3D face tracking processes. These models represent both shape and texture variations of human faces, providing a basis for estimating facial landmarks and capturing expressions accurately throughout the tracking process.
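
The shape part of a morphable model is typically a linear combination: a mean face plus weighted deformation components. The sketch below shows only that combination, with tiny made-up data; real models have thousands of vertices, and texture is commonly handled by an analogous linear model.

```python
def morph_shape(mean_shape, basis, coefficients):
    """Linear morphable-model shape: mean + sum_i coefficients[i] * basis[i].

    mean_shape: list of (x, y, z) vertices.
    basis: list of deformation components, each shaped like mean_shape.
    coefficients: one weight per component (what a tracker would estimate).
    """
    shape = []
    for v, vertex in enumerate(mean_shape):
        shape.append(tuple(
            vertex[axis] + sum(c * component[v][axis]
                               for c, component in zip(coefficients, basis))
            for axis in range(3)))
    return shape
```

Fitting a face then reduces to finding the coefficients whose projected shape best matches the observed 2D landmarks.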

By using 3D morphable models within face tracking systems, developers enhance both the realism and the fidelity of tracked facial movements. The ability to capture subtle nuances such as eyebrow raises or lip movements contributes to creating lifelike avatars or characters within virtual environments.

For example, consider an application where users interact with virtual characters that mimic their expressions realistically. This level of fidelity is made possible by sophisticated 3D morphable models within the underlying tracking technology.

Augmented Reality and Eye Gaze Tracking

AR Applications

Augmented reality (AR) applications leverage 3D face tracking to superimpose virtual objects onto users’ faces in real-time. This cutting-edge technology facilitates interactive experiences such as virtual makeup try-on, personalized filters, and immersive masks. For instance, popular social media platforms use 3D face tracking to enable users to apply various filters that seamlessly align with their facial features, enhancing user engagement.

The utilization of computer vision in AR applications powered by 3D face tracking plays a pivotal role in creating captivating and immersive user experiences. By accurately mapping the user’s facial features and movements in three dimensions, these applications can overlay virtual elements onto the user’s face convincingly. The seamless integration of digital content into the real-world environment through 3D face tracking enhances the overall visual appeal and interactivity of augmented reality experiences.

Eye Gaze Analysis

Eye gaze analysis constitutes a crucial aspect of 3D face tracking, enabling systems to discern an individual’s point of focus or where they are looking. This functionality is instrumental in facilitating gaze-based interaction with virtual content within AR environments. For example, eye gaze analysis allows users to control interfaces or interact with digital elements simply by directing their gaze towards specific areas on the screen or within a simulated environment.
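
Gaze-based interaction can be sketched as a ray-plane intersection followed by a hit test. The coordinate convention here is an assumption: the screen lies in the plane z = 0, the user sits at positive z, and a tracker supplies the eye position and gaze direction. Both function names are hypothetical.

```python
def gaze_point_on_screen(eye_position, gaze_direction):
    """Intersect a gaze ray with the screen plane z = 0.

    eye_position: (x, y, z) of the eye, with z > 0 in front of the screen.
    gaze_direction: (dx, dy, dz); dz must be negative when looking at the screen.
    Returns the (x, y) hit point, or None if the user is looking away.
    """
    ex, ey, ez = eye_position
    dx, dy, dz = gaze_direction
    if dz >= 0:
        return None
    t = -ez / dz  # ray parameter at which z reaches 0
    return (ex + t * dx, ey + t * dy)

def hit_test(point, rect):
    """Return True if point lies inside rect = (left, top, width, height)."""
    if point is None:
        return False
    left, top, width, height = rect
    return left <= point[0] <= left + width and top <= point[1] <= top + height
```

An interface would usually require the gaze to dwell on an element for a short time before triggering it, to avoid accidental activations.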

Accurate eye gaze analysis significantly contributes to improving the realism and usability of applications integrating 3D face tracking technology. By accurately capturing subtle nuances related to eye movements and focus points, developers can create more natural interactions between users and virtual content within augmented reality settings. As a result, this fosters enhanced levels of immersion while also streamlining intuitive navigation through various AR experiences.

Performance Maximization Strategies

OpenVINO Toolkit

The OpenVINO toolkit is a powerful resource for developers working on 3D face tracking systems. It provides them with optimized tools and libraries to deploy efficient AI models across various hardware platforms. This means that developers can ensure real-time performance for their 3D face tracking applications, whether they are running on CPUs, GPUs, or specialized accelerators. By utilizing the OpenVINO toolkit, developers can significantly enhance the efficiency and portability of their 3D face tracking solutions.

For example, a developer creating an augmented reality application that incorporates 3D face tracking can use the OpenVINO toolkit to help the system run smoothly and responsively across different devices, from high-performance computers to lower-power edge devices.

Lightweight Solutions

In situations where resources are limited – such as in smartphones or wearables – lightweight 3D face tracking solutions come into play. These solutions are specifically designed to operate efficiently on devices with constrained processing power while still maintaining acceptable levels of accuracy. Their priority is low computational requirements without compromising functionality.

Lightweight solutions enable widespread adoption of 3D face tracking in consumer devices with limited processing power.

For instance, a company developing smart glasses may opt for lightweight 3D face tracking technology so that the device’s battery is not excessively drained by intensive face tracking computation.

Face Tracking in Automotive and VR/AR Trends

AI-powered 3D face tracking plays a pivotal role in the automotive industry, particularly in driver monitoring and attention analysis. The technology makes it feasible to detect signs of driver impairment, such as drowsiness and distraction. This contributes to road safety by reducing the risk of accidents caused by impaired or inattentive driving.

Automotive AI analysis leveraging 3D face tracking also facilitates the development of advanced driver assistance systems (ADAS) and autonomous vehicles. These technologies are instrumental in revolutionizing road safety standards, making driving experiences more secure for everyone involved. For instance, with 3D face tracking, ADAS can better understand a driver’s behavior and respond accordingly to ensure optimal safety.

This innovation is crucial because it enables real-time monitoring of drivers’ facial expressions and movements while they are behind the wheel. As a result, automakers can proactively implement measures to prevent accidents or mitigate their severity through timely alerts or interventions.
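
One widely used way to turn eye landmarks into a drowsiness signal is the eye aspect ratio (EAR), which drops toward zero as the eyelids close. The sketch below is an illustration of that technique, not the method of any specific automotive system; the landmark ordering, threshold, and frame count are assumptions that would need calibration.

```python
import math

def eye_aspect_ratio(eye):
    """Eye aspect ratio from six (x, y) eye landmarks ordered p1..p6:
    p1 and p4 are the corners, p2 and p3 the upper lid, p6 and p5 the lower lid."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

def is_drowsy(ear_per_frame, threshold=0.2, min_closed_frames=15):
    """Flag drowsiness when the EAR stays below the threshold for a run of frames.
    Both default values are illustrative assumptions, not calibrated constants."""
    run = 0
    for ear in ear_per_frame:
        run = run + 1 if ear < threshold else 0
        if run >= min_closed_frames:
            return True
    return False
```

Requiring a sustained run of low values distinguishes prolonged eye closure from ordinary blinks, which last only a few frames.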

VR and AR Trends

The rapid evolution of virtual reality (VR) and augmented reality (AR) continues to drive advancements in 3D face tracking technology, catering to the increasing demand for immersive experiences and realistic avatars. As these technologies become more sophisticated, there is an escalating need for highly accurate facial tracking solutions that can deliver seamless interactions within virtual environments.

With VR/AR trends, developers are constantly striving to create compelling user experiences that closely mimic real-world interactions. The utilization of precise 3D face tracking contributes significantly to achieving this goal by enabling lifelike avatars that accurately reflect users’ facial expressions and emotions in virtual settings.

Moreover, staying updated with VR/AR trends ensures access to the technologies needed to create captivating user experiences across various industries, from gaming and entertainment to education and healthcare. For example, medical professionals can use VR simulations enhanced by robust 3D face tracking for training purposes or patient therapy sessions.

Getting Started with Face Tracking Software

Several established companies offer proven solutions that have been extensively tested and deployed in various applications. Choosing a proven solution reduces development time and mitigates potential risks associated with implementing new or untested technologies. For instance, companies like Apple, Microsoft, and Intel have developed reliable 3D face tracking solutions that are widely used in consumer electronics, gaming, and security systems.

Relying on proven solutions provides assurance of reliable performance and support from experienced providers. This means that developers can leverage the expertise of these companies to integrate 3D face tracking seamlessly into their applications without having to build the technology from scratch. By using established solutions, developers can also benefit from ongoing updates and technical support provided by the solution providers.

Companies offering proven 3D face tracking solutions often invest significant resources in research and development to ensure high accuracy and robustness of their technology. This translates into a more dependable system for end-users across various industries such as healthcare (patient monitoring), retail (customer analytics), entertainment (gesture recognition), and more.

Privacy-First Approaches

Privacy concerns are addressed by implementing privacy-first approaches in 3D face tracking systems. Anonymization techniques play a crucial role in protecting individuals’ identities when their facial data is being captured or analyzed. Companies developing these technologies use advanced algorithms to de-identify facial features while retaining essential information for analysis purposes.

Data protection measures are implemented to safeguard the storage and transmission of facial data collected through 3D face tracking systems. Encryption protocols ensure that sensitive information remains secure throughout its lifecycle within the system. These measures not only protect user privacy but also mitigate the risk of unauthorized access or misuse of personal data.

Moreover, user consent mechanisms are integrated into privacy-first 3D face tracking systems to empower individuals with control over how their facial data is utilized. Users may be prompted to provide explicit consent before their facial biometrics are processed for identification or authentication purposes within specific applications or services.

Prioritizing privacy safeguards builds trust among users who interact with products incorporating facial recognition technology while ensuring compliance with evolving privacy regulations such as GDPR (General Data Protection Regulation) in Europe or CCPA (California Consumer Privacy Act) in California.

Conclusion

You’ve now toured the landscape of 3D face tracking: its technologies, its trade-offs, and its applications. You’ve seen how open-source and proprietary solutions compare, how AI and deep learning enable real-time tracking, and where current limitations and performance optimizations lie. Augmented reality, eye gaze tracking, automotive integration, and VR/AR all show where the field is heading. With this grounding in how to get started with face tracking software, you’re ready to begin your own explorations.

Explore the possibilities that this technology holds for industries and experiences alike.

Frequently Asked Questions

What is 3D face tracking?

3D face tracking is a technology that enables the real-time monitoring and analysis of facial movements and expressions in three dimensions. It allows for accurate mapping of facial features, which has applications in various fields such as augmented reality, gaming, and human-computer interaction.

How does deep learning contribute to real-time 3D face tracking?

Deep learning algorithms play a crucial role in real-time 3D face tracking by enabling the system to learn intricate patterns and variations in facial movements. This facilitates more accurate and efficient recognition of facial features, leading to enhanced performance in real-world scenarios.

What are the limitations of current face tracking technologies?

Current face tracking technologies may encounter challenges with occlusions, varying lighting conditions, or complex facial expressions. Some systems may struggle with accurately capturing subtle movements or differentiating between similar facial features, impacting their overall precision and reliability.

How can businesses leverage face tracking software effectively?

Businesses can harness face tracking software for diverse applications such as personalized marketing strategies based on customer reactions, enhancing user experiences through interactive interfaces, or optimizing security measures through biometric authentication systems.

What should be considered when choosing between open source and proprietary solutions for face tracking?

When choosing between open source and proprietary face tracking solutions, weigh customization flexibility and the availability of ongoing support and updates against potential licensing costs or restrictions. Open source options offer transparency but require internal expertise for maintenance, while proprietary solutions often provide comprehensive support but may limit customization.