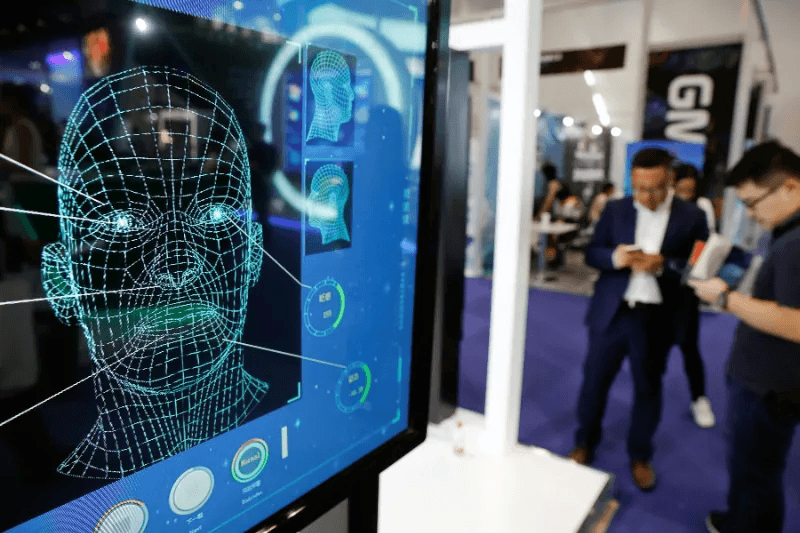

Facial recognition technology has become increasingly prevalent, promising convenience and enhanced security through biometric identification. Some recent face verification algorithms can identify individuals even when they are wearing face masks. But how accurate is it really? While some may tout headline accuracy figures, testing often reveals more nuanced results.

On one hand, face identification and face verification algorithms have made significant strides in recent years. They can identify individuals quickly, enabling seamless access to devices and buildings. On the other hand, there are concerns about their reliability, and erroneous identifications remain possible. Studies have shown that these algorithms can be biased, producing more erroneous identifications for some groups than for others. Such errors can arise from various factors, including the quality of the camera used to capture facial images. This raises important questions about the fairness of face recognition systems, and about how they are tested, in relation to fundamental rights.

Moreover, facial recognition systems are not foolproof. Factors such as lighting conditions, angle variations, image quality, and the camera used can all affect their accuracy. Mistaken identifications and false positives are not uncommon.

The sections below examine what testing reveals about this widely used technology.

Understanding the Accuracy of Facial Recognition Technology

Factors Influencing System Precision

The accuracy of facial recognition technology is influenced by several factors. One crucial factor is the quality and resolution of the images or videos used for analysis. Higher-quality images with clear facial features lead to more accurate results and higher-confidence matches. Low-resolution or blurry images, on the other hand, may produce false positives or false negatives and lower the confidence of a match.

Another important factor is the diversity of the datasets used to train and test facial recognition software. If the training data consists primarily of white males, it can lead to biases and inaccuracies when the technology is applied to individuals from underrepresented groups. This is why organizations such as NIST conduct extensive testing of accuracy and fairness. To improve accuracy, it is essential to have diverse datasets that encompass demographic factors such as ethnicity, gender, and age.

Lighting conditions play a significant role in system precision. Different lighting environments affect how well facial recognition algorithms detect and match faces. For example, poor lighting or extreme shadows may hinder accurate identification. Because a misidentification can affect a person’s rights and privacy, these limitations matter wherever the software is deployed.

Moreover, changes in appearance over time can impact accuracy. Facial recognition systems must account for variations in hairstyles, facial hair, makeup, aging effects, and other physical alterations that individuals may undergo between enrollment and subsequent identification attempts. Accounting for these variations is necessary for recognition to remain accurate.

Addressing Critiques and Challenges

Facial recognition (FR) technology has faced criticism due to concerns about privacy violations and potential misuse by authorities or private entities. These concerns have raised questions about the rights and ethical implications of AI-powered facial recognition. The National Institute of Standards and Technology (NIST) has been actively involved in evaluating the accuracy and bias of these systems. Critics argue that widespread adoption without proper regulation could lead to surveillance abuses and infringements on civil liberties and individual rights.

To address these critiques and challenges, policymakers are working on legal frameworks that establish guidelines for the responsible use of AI and protect individuals’ rights. These frameworks aim to strike a balance between public safety needs and individual rights by defining appropriate usage scenarios while safeguarding against potential abuses.

Furthermore, ongoing research focuses on improving transparency and accountability within facial recognition systems. This includes developing methods for auditing algorithms’ performance across different demographics to identify any biases or inaccuracies in their outputs.
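As a rough illustration of what such a demographic audit can look like, the sketch below computes a false match rate separately for each group. The group labels, scores, threshold, and the function name `false_match_rate_by_group` are all made up for illustration; they are not taken from any real evaluation.

```python
from collections import defaultdict

def false_match_rate_by_group(trials, threshold):
    """Return the false match rate per group.

    trials: iterable of (group, similarity_score, same_person) tuples.
    Only impostor trials (same_person is False) count toward the rate.
    """
    impostor_counts = defaultdict(int)
    false_matches = defaultdict(int)
    for group, score, same_person in trials:
        if same_person:
            continue  # genuine pairs cannot produce a false match
        impostor_counts[group] += 1
        if score >= threshold:
            false_matches[group] += 1
    return {g: false_matches[g] / impostor_counts[g] for g in impostor_counts}

# Illustrative, made-up comparison results.
trials = [
    ("group_a", 0.91, False),
    ("group_a", 0.20, False),
    ("group_a", 0.35, False),
    ("group_a", 0.10, False),
    ("group_b", 0.85, False),
    ("group_b", 0.80, False),
    ("group_b", 0.30, False),
    ("group_b", 0.15, False),
    ("group_b", 0.95, True),
]
rates = false_match_rate_by_group(trials, threshold=0.75)
print(rates)
```

A large gap between groups at the same threshold is the kind of disparity an audit is meant to surface.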

NIST’s Role in Enhancing Technology

The National Institute of Standards and Technology (NIST) plays a crucial role in enhancing the accuracy and reliability of facial recognition technology. It conducts evaluations and benchmarking to assess the performance of different facial recognition algorithms and systems.

Through its Face Recognition Vendor Test (FRVT) program, NIST evaluates how well algorithms match faces under different conditions, including variations in pose, illumination, and image quality. These evaluations help identify areas for improvement and encourage developers to enhance their algorithms’ accuracy.

NIST collaborates with industry stakeholders, researchers, and policymakers to establish standards and best practices for facial recognition technology. These efforts aim to address concerns related to bias, privacy, and ethics while promoting responsible deployment.

Analyzing Performance and Accuracy Over Time

Gender and Racial Disparities

One important aspect to consider when evaluating the accuracy of facial recognition technology is the presence of gender and racial disparities. Studies have shown that face recognition systems can be less accurate when identifying individuals from certain demographic groups. For example, research has found that facial recognition algorithms tend to perform better on lighter-skinned individuals than on those with darker skin tones. This disparity can lead to biased outcomes, potentially harming marginalized communities.

To address this issue, researchers and developers are working to improve the performance of facial recognition systems for all individuals, regardless of gender or race. They are using more diverse datasets during training so that the algorithms learn from a wide range of faces. By incorporating a variety of ethnicities and genders in the training data, developers can reduce biases and improve accuracy across demographic groups.

Real-World Applications and Wild Picture Challenges

Facial recognition technology has found numerous applications in real-world scenarios, from unlocking smartphones to enhancing security measures at airports. However, accurately recognizing faces across different environments remains challenging.

One such challenge is the “wild picture” problem. Facial recognition algorithms often struggle with images taken under uncontrolled conditions such as low lighting or extreme angles, because they rely on clear, well-lit images to identify individuals. In these situations, accuracy may decrease significantly.

Developers are constantly refining face recognition algorithms and training them on diverse datasets that include images captured under a wide range of conditions. By exposing the system to challenging scenarios during development, researchers aim to improve its performance in real-world applications where environmental factors can affect accuracy.

Visa Picture Utilization for Improved Results

In recent years, visa application processes have started using facial recognition technology to verify identities more efficiently. Applicants are typically required to submit a photograph of themselves, and this picture then serves as the reference image during identity verification.

By comparing a live image of the applicant’s face captured at the visa office with the submitted photograph, facial recognition algorithms can help confirm that the applicant’s identity matches the information in their application. This not only streamlines the visa application process but also enhances security by reducing the risk of identity fraud.
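A one-to-one comparison like this is often implemented by turning each image into a numeric feature vector (an embedding) and checking how similar the two vectors are. The sketch below assumes the embeddings have already been produced by some face model. The three-element vectors, the `verify` function, and the 0.8 threshold are illustrative assumptions, not values from any real visa system.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def verify(live_embedding, reference_embedding, threshold=0.8):
    """Return (is_match, score) for a live capture vs. a reference photo.

    The threshold is a hypothetical operating point; a real deployment
    would tune it against measured error rates.
    """
    score = cosine_similarity(live_embedding, reference_embedding)
    return score >= threshold, score

# Made-up embeddings; real ones typically have hundreds of dimensions.
reference = [0.10, 0.90, 0.40]
live_same_person = [0.12, 0.88, 0.41]
live_other_person = [0.90, 0.10, 0.20]

print(verify(live_same_person, reference))
print(verify(live_other_person, reference))
```

Raising the threshold makes the check stricter, which reduces false acceptances at the cost of rejecting more genuine applicants.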

To improve accuracy in this context, developers focus on training algorithms on a large and diverse dataset of visa application pictures. By exposing the system to a wide range of facial variations, including different ethnicities, ages, and genders, they aim to enhance its ability to match live images with submitted photographs.

The Science Behind Facial Recognition Accuracy

Reviewing Scientific Findings

Facial recognition technology has made significant advancements in recent years, yet many still question its accuracy. Scientists have conducted extensive research to evaluate the performance and reliability of facial recognition algorithms, which are designed to match a person’s face against existing data. These studies have provided valuable insights into the strengths and limitations of the technology.

One key finding from scientific research is that facial recognition systems can achieve high accuracy under certain conditions. For example, when tested on high-quality images with well-lit faces and minimal occlusions, algorithms have demonstrated impressive accuracy rates. In controlled environments where subjects are cooperative and pose for the camera, the technology performs exceptionally well.

However, real-world scenarios often present more challenges than controlled lab settings. Variations in lighting conditions, different camera angles, and changes in appearance over time can all affect accuracy. Scientific studies have shown that these factors can significantly reduce the performance of face recognition algorithms, leading to lower identification rates.

Algorithm Evaluation and Development

Algorithm evaluation and development play a crucial role in improving the accuracy of face recognition systems. Researchers continuously refine existing algorithms and develop new ones to overcome the challenges posed by real-world conditions.

Algorithm evaluation involves testing different facial recognition algorithms on standardized datasets to compare their performance. This process helps identify where improvements are needed and guides researchers toward more accurate algorithms. By analyzing the results, scientists gain insight into which techniques yield better identification results.

Furthermore, ongoing research focuses on addressing biases within facial recognition algorithms. Studies have revealed that some algorithms perform differently depending on factors like race or gender, which can lead to misidentifications if not properly addressed. To mitigate these issues, researchers are working on more inclusive and less biased algorithms.

Impact of Picture Quality on Identification

The accuracy of facial recognition relies heavily on the quality of the face pictures used. Studies have shown that low-resolution images, blurry pictures, or those taken in poor lighting can significantly reduce the performance of facial recognition algorithms, because such images lack the detail the algorithms need to identify faces accurately.

For instance, in crowded surveillance footage where faces may appear pixelated or obscured, accurate identification becomes more challenging. Similarly, low-quality images extracted from social media platforms or video recordings may not provide enough detail for reliable face recognition.

Researchers are aware of these limitations and are developing techniques to improve how algorithms handle suboptimal picture quality. Advancements in image enhancement technologies and the incorporation of machine learning techniques have shown promise in improving identification accuracy even in challenging scenarios.
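Before any enhancement is attempted, a system can also screen out images that are too blurry to be useful. One widely used heuristic is the variance of the Laplacian: sharp images have strong local intensity changes, so the variance is high, while blurry or flat images score low. The sketch below is a minimal pure-Python version operating on a grayscale image given as a list of rows. The `min_variance` cutoff is a hypothetical value that would need tuning on real data.

```python
def laplacian_variance(image):
    """Variance of a 4-neighbour Laplacian over the interior pixels.

    image: 2D list of grayscale values, at least 3x3.
    """
    height, width = len(image), len(image[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            lap = (image[y - 1][x] + image[y + 1][x]
                   + image[y][x - 1] + image[y][x + 1]
                   - 4 * image[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)

def is_sharp_enough(image, min_variance=100.0):
    """Hypothetical quality gate; min_variance is an assumed cutoff."""
    return laplacian_variance(image) >= min_variance

# A flat (detail-free) image and a high-contrast checkerboard.
flat = [[128] * 5 for _ in range(5)]
checkerboard = [[255 if (x + y) % 2 else 0 for x in range(5)] for y in range(5)]

print(laplacian_variance(flat), is_sharp_enough(flat))
print(laplacian_variance(checkerboard), is_sharp_enough(checkerboard))
```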

Addressing Accuracy Concerns in Facial Recognition

Promoting Equity and Fairness

One of the key concerns surrounding facial recognition technology is its potential for bias and discrimination, which has raised questions about fairness and equity in its application. Because facial recognition algorithms are trained on large datasets, they can inadvertently learn biases present in those datasets, leading to inaccurate results and unfair treatment of certain groups.

To promote equity and fairness, it is crucial to ensure that the training data used for facial recognition systems is diverse and representative of the population. This means including a wide range of individuals of different races, genders, ages, and backgrounds. Incorporating this diversity into the training process minimizes the risk of biased outcomes.

Ongoing monitoring and evaluation of facial recognition systems are necessary to identify any biases that may arise over time. Regular audits can help detect and address disparities or inaccuracies in how the technology performs across different demographic groups. This proactive approach enables developers to continuously improve their algorithms and reduce potential biases, resulting in more accurate and fair results for users.

Mitigating Potential Risks and Consequences

While facial recognition technology offers numerous benefits, it also carries risks that need to be carefully managed. One significant concern is privacy infringement. The use of facial recognition systems raises questions about how personal data is collected, stored, and shared. It is essential to establish robust safeguards to protect individuals’ privacy rights.

Another risk associated with facial recognition is the occurrence of false positives and false negatives. A false positive occurs when the system incorrectly identifies someone as a match, potentially leading to wrongful accusations or arrests. This can happen in various scenarios, such as facial recognition used by law enforcement agencies or biometric matching systems, such as fingerprint matching, at border control checkpoints. The consequences can be severe: innocent individuals may be wrongly targeted and their lives disrupted. A false negative, on the other hand, occurs when the system fails to recognize a person it should have matched, which could result in security breaches or missed opportunities for identification.
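The two error types trade off against each other through the decision threshold. The sketch below computes a false match rate (false positives among impostor comparisons) and a false non-match rate (false negatives among genuine comparisons) at several thresholds. The scores are made up for illustration, and `error_rates` is a hypothetical helper name.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false_match_rate, false_non_match_rate) at a threshold.

    genuine_scores: similarity scores for pairs of the same person.
    impostor_scores: similarity scores for pairs of different people.
    A pair is declared a match when its score is >= threshold.
    """
    false_non_matches = sum(1 for s in genuine_scores if s < threshold)
    false_matches = sum(1 for s in impostor_scores if s >= threshold)
    fmr = false_matches / len(impostor_scores)
    fnmr = false_non_matches / len(genuine_scores)
    return fmr, fnmr

# Illustrative, made-up scores.
genuine = [0.92, 0.88, 0.71, 0.95, 0.60]
impostor = [0.10, 0.35, 0.82, 0.20, 0.05, 0.40, 0.15, 0.30]

for threshold in (0.5, 0.7, 0.9):
    fmr, fnmr = error_rates(genuine, impostor, threshold)
    print(f"threshold={threshold}: FMR={fmr:.3f}, FNMR={fnmr:.3f}")
```

With this toy data, raising the threshold removes the single false match but rejects more genuine pairs, which is why an operating point has to be chosen with the application’s risks in mind.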

To mitigate these risks, rigorous testing and validation should be implemented during development. Continuous improvement through feedback loops allows developers to refine their algorithms based on real-world performance data so that they perform reliably in a variety of scenarios.

Ensuring Ethical Practices in Implementation

Ethical considerations play a crucial role in the implementation of facial recognition technology. It is essential to establish clear guidelines and regulations to govern its use, ensuring that it is deployed responsibly and ethically.

Transparency is a key aspect of ethical implementation. Individuals should be informed about when and how their data will be collected, stored, and used for facial recognition purposes. Consent should be obtained whenever possible, allowing individuals to make informed decisions about participating in systems that use this technology.

Furthermore, accountability mechanisms must be in place to hold organizations responsible for any misuse or abuse of facial recognition technology. Regular audits by independent third parties can help ensure compliance with ethical standards, add a layer of oversight and transparency, and identify potential violations so they can be addressed promptly.

Enhancing Security with Biometric Systems

Facial recognition technology has become increasingly prevalent in various aspects of our lives, from unlocking smartphones to airport security. It offers the promise of enhanced security and convenience, but many people are concerned about its accuracy and potential for misuse.

Automated Identification for Robust Security

One of the key advantages of facial recognition technology is its ability to automate identification, providing a more efficient and secure means of verifying individuals’ identities. By analyzing facial features such as the distance between the eyes, nose shape, and jawline structure, biometric systems can match faces against a database of known individuals.
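Matching one face against a database can be sketched as a nearest-neighbour search over stored feature vectors. In the minimal example below, each enrolled person is represented by a short, made-up vector of normalized measurements; the names, values, `identify` function, and `max_distance` cutoff are all hypothetical. Modern systems typically use learned embeddings rather than hand-measured features, so this only illustrates the search step.

```python
import math

def euclidean_distance(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def identify(probe, gallery, max_distance=0.5):
    """Return (identity, distance) of the closest enrolled template.

    identity is None when even the closest template is farther away
    than max_distance, i.e. the probe is treated as unknown.
    """
    best_identity, best_distance = None, float("inf")
    for identity, template in gallery.items():
        distance = euclidean_distance(probe, template)
        if distance < best_distance:
            best_identity, best_distance = identity, distance
    if best_distance <= max_distance:
        return best_identity, best_distance
    return None, best_distance

# Hypothetical enrolled templates.
gallery = {
    "alice": [0.42, 0.31, 0.77],
    "bob": [0.55, 0.28, 0.60],
}

print(identify([0.43, 0.30, 0.76], gallery))  # close to alice's template
print(identify([0.90, 0.90, 0.10], gallery))  # far from everyone enrolled
```

The distance cutoff matters because, without it, the search would always return someone, even for a person who was never enrolled.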

Studies have shown that modern facial recognition algorithms can achieve impressive levels of accuracy. For example, a study conducted by the National Institute of Standards and Technology (NIST) found that top-performing algorithms had an accuracy rate exceeding 99% on high-quality images. This level of precision makes facial recognition an effective tool in various scenarios. In law enforcement investigations, it can quickly match faces captured in surveillance footage with known suspects or persons of interest. In access control systems, such as at airports or secure facilities, it is used to verify individuals’ identities and grant authorized entry.

Compensation for Damages Due to Inaccuracies

While facial recognition technology has made significant advancements in recent years, it is not without limitations. One concern is the potential for inaccuracies that result in false identifications or denials of access. To address this, there should be mechanisms in place to compensate individuals who suffer damages due to these inaccuracies.

For instance, if someone is wrongfully identified as a suspect based on faulty facial recognition data and suffers harm as a result, they should have recourse to seek compensation. This could include financial restitution or other forms of redress to mitigate the negative impacts of the inaccurate identification.

Liability of Manufacturers and Users

Another important aspect to consider is the liability of both manufacturers and users. As facial recognition becomes more prevalent in industries such as security and marketing, both groups need to understand their responsibilities and potential legal ramifications. Manufacturers have a responsibility to develop and test their systems rigorously to ensure high levels of accuracy and reliability and to meet applicable standards and regulations. They should also provide regular updates and improvements, in line with current industry standards and best practices, to address any identified vulnerabilities or biases.

Users of facial recognition technology, on the other hand, must understand its limitations and use it responsibly. This includes using images of appropriate quality, ensuring proper lighting conditions, and understanding the potential for false positives or negatives. By taking these precautions, users can help minimize the risk of inaccurate identifications and promote the overall effectiveness of facial recognition systems.

Tackling Biases and Discrimination in Algorithms

Facial recognition technology, also known as FR, has gained significant attention in recent years due to its potential applications in various fields. However, concerns about the accuracy and fairness of these algorithms have also been raised. In order to address these issues, measures are being taken to avoid erroneous results, minimize errors through constant monitoring, and evaluate AI unintelligibility issues.

Measures to Avoid Erroneous Results

One of the key challenges with facial recognition technology is the potential for biases and discrimination. Algorithms can be influenced by factors such as race, gender, age, and even lighting conditions. To mitigate this issue, researchers and developers are implementing measures to avoid erroneous results.

For instance, data collection plays a crucial role in training facial recognition algorithms. It is important to ensure that the dataset used for training is diverse and representative of different demographics. By including a wide range of individuals from various backgrounds, the algorithm can learn to recognize faces accurately across different groups.

Ongoing research focuses on developing algorithms that are robust against variations in lighting conditions or changes in appearance due to aging or facial hair. By incorporating these factors into the training process, facial recognition systems can become more reliable and accurate.

Constant Monitoring for Error Minimization

To enhance the accuracy of facial recognition systems, constant monitoring is essential. This involves regularly evaluating the performance of algorithms and identifying any potential biases or errors that may arise over time.

By analyzing real-world scenarios where facial recognition technology is deployed, researchers can identify patterns or instances where the system may produce inaccurate results or exhibit biased behavior. This feedback loop enables developers to make necessary adjustments and updates to improve system performance.

Furthermore, ongoing monitoring allows for continuous learning from new data inputs. As more diverse datasets become available over time, algorithms can adapt and refine their understanding of different faces and features.
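One simple way to picture this kind of monitoring is a rolling window over recent, human-reviewed decisions that flags the system for review when the error rate drifts above a chosen limit. The class name `ErrorRateMonitor`, the window size, and the alert rate below are assumptions made for illustration, not a description of any particular product.

```python
from collections import deque

class ErrorRateMonitor:
    """Tracks the error rate over the most recent reviewed decisions."""

    def __init__(self, window_size=100, alert_rate=0.05):
        # deque with maxlen drops the oldest outcome automatically.
        self.outcomes = deque(maxlen=window_size)
        self.alert_rate = alert_rate

    def record(self, was_error):
        """Record whether a reviewed decision turned out to be wrong."""
        self.outcomes.append(bool(was_error))

    def error_rate(self):
        if not self.outcomes:
            return 0.0
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        """True once the window is full and the rate exceeds the limit."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.error_rate() > self.alert_rate

monitor = ErrorRateMonitor(window_size=4, alert_rate=0.25)
for was_error in (False, False, True, False):
    monitor.record(was_error)
print(monitor.error_rate(), monitor.needs_review())

monitor.record(True)  # the oldest outcome falls out of the window
print(monitor.error_rate(), monitor.needs_review())
```

Keeping separate monitors per demographic group or per camera location would let the same idea surface localized problems.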

Evaluating AI Unintelligibility Issues

Another aspect that needs consideration when assessing the accuracy of facial recognition technology is the intelligibility of AI systems. While these algorithms can achieve impressive results, they often lack transparency in explaining how they arrive at their decisions.

To address this concern, researchers are working on developing methods to evaluate the decision-making process of AI systems. This involves analyzing the underlying factors and features that contribute to a facial recognition algorithm’s output. By understanding the reasoning behind these decisions, it becomes possible to identify potential biases or errors and rectify them.

Researchers are also exploring ways to make AI systems more interpretable by humans. This includes developing techniques that provide explanations or justifications for the algorithm’s outputs, allowing users to understand why a particular decision was made.
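One family of explanation techniques is occlusion sensitivity: hide one region of the image at a time and measure how much the model’s score drops, so that large drops mark the regions the decision depended on. The sketch below treats the model as an opaque `score_fn` callable. The toy scoring function, patch size, and image are placeholders chosen so the behaviour is easy to follow, and this is only one of several possible approaches.

```python
def occlusion_sensitivity(image, score_fn, patch=2, fill=0):
    """Return a grid of score drops, one per occluded patch.

    image: 2D list of pixel values.
    score_fn: callable taking an image and returning a number
              (stands in for a real recognition model).
    """
    base_score = score_fn(image)
    height, width = len(image), len(image[0])
    heatmap = []
    for top in range(0, height, patch):
        row_drops = []
        for left in range(0, width, patch):
            occluded = [row[:] for row in image]  # copy before masking
            for y in range(top, min(top + patch, height)):
                for x in range(left, min(left + patch, width)):
                    occluded[y][x] = fill
            row_drops.append(base_score - score_fn(occluded))
        heatmap.append(row_drops)
    return heatmap

def toy_score(image):
    """Toy model that only looks at the top-left 2x2 corner."""
    return sum(image[y][x] for y in range(2) for x in range(2))

image = [[1] * 4 for _ in range(4)]
heatmap = occlusion_sensitivity(image, toy_score)
print(heatmap)
```

Here only the top-left patch produces a score drop, which matches the region the toy model actually uses.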

Technical Challenges Impacting Facial Recognition Accuracy

Facial recognition technology has made significant advancements in recent years, but there are still several technical challenges that can impact its accuracy. Let’s explore some of these challenges and how they affect the precision of facial recognition systems.

Effects of Aging on Recognition Accuracy

One of the key challenges faced by facial recognition technology is its ability to accurately identify individuals as they age. As people grow older, their facial features change due to factors such as wrinkles, sagging skin, and changes in hair color. These variations can make it difficult for a facial recognition system to match an older image with a current one.

Research has shown that aging can significantly decrease the accuracy of facial recognition algorithms. A study conducted by the National Institute of Standards and Technology (NIST) found that many commercial facial recognition systems have higher error rates when matching images taken several years apart. This highlights the need for ongoing training and updating of algorithms to account for age-related changes in facial appearance.
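To see how such an age effect could be measured, the sketch below groups genuine comparisons (two images of the same person) by the number of years between the images and reports the share that fall below the match threshold in each bucket. The trial data, threshold, and bucket width are invented for illustration and do not reproduce any NIST result.

```python
from collections import defaultdict

def fnmr_by_elapsed_years(genuine_trials, threshold, bucket_years=5):
    """False non-match rate per elapsed-time bucket.

    genuine_trials: iterable of (years_apart, similarity_score) for
    image pairs known to show the same person.
    Returns {bucket_start_year: rate}, ordered by bucket.
    """
    totals = defaultdict(int)
    misses = defaultdict(int)
    for years_apart, score in genuine_trials:
        bucket = int(years_apart // bucket_years) * bucket_years
        totals[bucket] += 1
        if score < threshold:
            misses[bucket] += 1
    return {b: misses[b] / totals[b] for b in sorted(totals)}

# Made-up trials: (years between the two images, similarity score).
trials = [
    (1, 0.90), (3, 0.85), (4, 0.60),
    (7, 0.80), (9, 0.55),
    (12, 0.50), (14, 0.65),
]
rates = fnmr_by_elapsed_years(trials, threshold=0.7)
for bucket, rate in rates.items():
    print(f"{bucket}-{bucket + 4} years apart: FNMR={rate:.2f}")
```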

Facial Coverings and Low Resolution Challenges

Another challenge affecting facial recognition accuracy is the presence of facial coverings or low-resolution images. In recent times, wearing masks has become commonplace due to public health concerns. However, this poses a challenge for facial recognition systems that rely on capturing detailed features like the shape of the nose or mouth.

Furthermore, low-resolution images captured from surveillance cameras or other sources may lack sufficient detail for accurate identification. The lack of clarity in these images can lead to false matches or misidentifications, reducing overall accuracy.

To address these challenges, researchers are developing advanced algorithms capable of recognizing individuals even when they are wearing masks or working with low-resolution images. These advancements aim to improve accuracy while maintaining security standards.

Lighting Considerations for Identification Precision

The lighting conditions under which images are captured also play a crucial role in determining the accuracy of facial recognition systems. Variations in lighting can create shadows or highlights on the face, altering its appearance. This can make it challenging for algorithms to match images taken under different lighting conditions.

Moreover, the use of artificial lighting, such as fluorescent or LED lights, can introduce color casts that further complicate facial recognition accuracy. Different light sources may emit varying wavelengths, affecting how facial features are captured and interpreted by the algorithm.

To mitigate the impact of lighting variations, researchers are exploring techniques like image normalization and adaptive illumination correction. These methods aim to standardize image quality across different lighting conditions and enhance the accuracy of facial recognition systems.
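As one simple illustration of image normalization, the sketch below applies histogram equalization to a grayscale image. This is a common textbook technique, not necessarily the method any particular facial recognition system uses. The image representation (a list of rows of integers from 0 to 255) and the function name are assumptions made for this example.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels, 0-255 (assumed representation)


def equalize_histogram(image: Image) -> Image:
    """Spread pixel intensities across the full 0-255 range."""
    # Count how often each intensity value occurs.
    histogram = [0] * 256
    for row in image:
        for pixel in row:
            histogram[pixel] += 1

    # Build the cumulative distribution of intensities.
    cdf = []
    running = 0
    for count in histogram:
        running += count
        cdf.append(running)

    total = cdf[-1]
    if total == 0:
        # Empty image: nothing to normalize.
        return [row[:] for row in image]

    cdf_min = next(value for value in cdf if value > 0)
    if total == cdf_min:
        # Flat image: every pixel has the same value, nothing to stretch.
        return [row[:] for row in image]

    # Map each intensity so the output distribution is roughly uniform.
    lookup = [
        round((value - cdf_min) / (total - cdf_min) * 255) if value >= cdf_min else 0
        for value in cdf
    ]
    return [[lookup[pixel] for pixel in row] for row in image]


# A dim, low-contrast patch: values are squeezed into a narrow dark band.
dim_patch = [[50, 60], [70, 80]]
print(equalize_histogram(dim_patch))
```

After equalization the darkest pixel maps to 0 and the brightest to 255, so two photos of the same face taken in dim and bright rooms end up with more comparable contrast before matching.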

Legal and Ethical Implications of Facial Recognition Use

Liability for Inaccuracies and Moral Damages

The accuracy of facial recognition technology has significant legal and ethical implications. One concern is the potential liability for inaccuracies in facial recognition systems. If a system misidentifies an individual, it can have serious consequences, leading to false accusations or wrongful arrests. Companies and organizations that deploy these technologies may face legal challenges and lawsuits if their systems produce inaccurate results.

Moreover, there is also the issue of moral damages caused by false identifications. Being wrongly identified by a facial recognition system can be emotionally distressing for individuals who are falsely implicated in criminal activities or subjected to unwarranted surveillance. The psychological impact of such incidents cannot be overlooked, as it can lead to anxiety, stress, and a loss of trust in these technologies.

Legal Implications of Technology Failures

Facial recognition technology is not infallible, and failures can have legal ramifications. Where law enforcement agencies rely heavily on facial recognition systems to identify suspects or solve crimes, a technical glitch or misidentification could contribute to a wrongful conviction or to a guilty individual going free.

Furthermore, there are concerns about bias within facial recognition algorithms that may disproportionately affect certain groups based on race, gender, or other characteristics. This raises questions about fairness and equal treatment under the law when using these technologies.

Quantity and Quality of Data for Enhanced Results

For accurate identification through facial recognition technology, both the quantity and quality of data play crucial roles. The more diverse and extensive the dataset used to train these systems, the better they tend to perform in recognizing individuals across different demographics.

However, obtaining large quantities of high-quality data presents its own set of challenges. Privacy concerns arise when collecting vast amounts of personal information from individuals without their explicit consent or knowledge. Striking a balance between data collection for improved accuracy while respecting privacy rights becomes imperative.

The quality of the data used in training facial recognition systems is equally vital. If the dataset is biased or incomplete, it can lead to skewed results and reinforce existing societal biases. Ensuring that the training data is representative and unbiased is crucial to mitigating potential ethical issues.
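One way to check for the skew described above is to compare error rates across demographic groups on a labeled test set. The sketch below is a minimal illustration under assumed inputs: each record is a hypothetical tuple of a group label, the system's decision, and the ground truth. The group names and sample records are invented for the example and are not real results.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# (group label, system said "match", pair truly is the same person)
Record = Tuple[str, bool, bool]


def error_rates_by_group(records: Iterable[Record]) -> Dict[str, Dict[str, float]]:
    """Compute false match and false non-match rates for each group."""
    counts = defaultdict(
        lambda: {"false_match": 0, "impostor": 0, "false_non_match": 0, "genuine": 0}
    )
    for group, predicted_match, truly_same in records:
        tally = counts[group]
        if truly_same:
            tally["genuine"] += 1
            if not predicted_match:
                tally["false_non_match"] += 1  # missed a real match
        else:
            tally["impostor"] += 1
            if predicted_match:
                tally["false_match"] += 1  # accepted a different person

    rates = {}
    for group, tally in counts.items():
        rates[group] = {
            "false_match_rate": (
                tally["false_match"] / tally["impostor"] if tally["impostor"] else 0.0
            ),
            "false_non_match_rate": (
                tally["false_non_match"] / tally["genuine"] if tally["genuine"] else 0.0
            ),
        }
    return rates


# Invented sample records for two hypothetical groups.
sample = [
    ("group_a", True, True), ("group_a", False, False),
    ("group_a", False, False), ("group_a", True, True),
    ("group_b", True, False), ("group_b", False, False),
    ("group_b", False, True), ("group_b", True, True),
]
for group, group_rates in error_rates_by_group(sample).items():
    print(group, group_rates)
```

A large gap between groups on either rate suggests that the training data or the model deserves a closer look before deployment.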

Future Trends in Facial Recognition Technology

Predictions for Technological Evolution

As facial recognition technology continues to evolve, there are several predictions for its future development. One key prediction is the improvement of accuracy in facial recognition systems. With advancements in deep learning algorithms and artificial intelligence, these systems are becoming more adept at recognizing faces with higher precision. This means that the margin of error is expected to decrease significantly, leading to more accurate identification and authentication processes.

Another prediction is the integration of facial recognition technology into various industries and sectors. Currently, facial recognition is primarily used for security purposes, such as access control or surveillance. However, experts believe that this technology will expand its applications to areas like healthcare, retail, and entertainment. For example, in healthcare, facial recognition can be used to identify patients quickly and accurately, ensuring efficient medical records management.

Furthermore, there is a growing interest in developing emotion recognition capabilities within facial recognition systems. By analyzing facial expressions and microexpressions, these systems can potentially detect emotions such as happiness, sadness, anger, or surprise. This has significant implications for marketing research and customer experience analysis as businesses can gain insights into consumer reactions and tailor their strategies accordingly.

Measures to Ensure Continued Improvement

To ensure the continued improvement of facial recognition technology, several measures need to be taken. Firstly, there should be ongoing research and development efforts focused on refining algorithms and training models using diverse datasets representing different demographics. This will help address biases that may exist within current systems and improve accuracy across various populations.

Secondly, privacy concerns must be addressed through robust data protection measures. As facial recognition involves capturing biometric information from individuals’ faces, it is crucial to establish strict regulations regarding data storage and usage. Implementing strong encryption methods and obtaining informed consent from individuals can help maintain privacy while utilizing this technology effectively.

Finally, collaboration among stakeholders such as technology companies, policymakers, researchers, and civil society organizations is essential. By working together, they can establish standards and guidelines for the responsible development and deployment of facial recognition technology. This collaborative approach will help ensure transparency, accountability, and ethical use of this powerful tool.

Preparing for Emerging Trends in 2021 and Beyond

In anticipation of emerging trends in facial recognition technology, organizations should be proactive in adapting their practices. One important aspect is investing in robust cybersecurity measures to protect against potential threats and unauthorized access to facial recognition systems. Implementing multi-factor authentication and regularly updating software can enhance security and safeguard sensitive data.

Furthermore, organizations should prioritize user education and awareness regarding facial recognition technology.

Conclusion

So, there you have it! We’ve delved into the world of facial recognition technology and explored its accuracy. Through our analysis, we’ve discovered the factors that impact accuracy, from technical challenges to biases in algorithms. Despite the advancements made in recent years, facial recognition technology is not without its limitations and concerns.

But what does this mean for you? As a user or potential user of facial recognition systems, it’s crucial to be aware of the strengths and weaknesses of this technology. Educate yourself about the potential risks and implications. Stay informed about the legal and ethical aspects surrounding its use. And most importantly, demand transparency and accountability from those who develop and implement these systems.

Facial recognition technology has undoubtedly transformed various industries, but it’s up to us to ensure that it is used responsibly and ethically. By understanding its accuracy and advocating for fairness, we can contribute to a future where this technology benefits society while safeguarding individual rights. It’s time to navigate this evolving landscape with knowledge and critical thinking.

Frequently Asked Questions

How accurate is facial recognition technology?

Facial recognition technology has made significant advancements in accuracy over the years. With state-of-the-art algorithms and deep learning techniques, it can now achieve high levels of accuracy, and under controlled conditions it can match or even surpass human performance. However, accuracy varies with factors such as lighting conditions, image quality, and how the algorithm was trained.

Can facial recognition be biased or discriminatory?

Yes, facial recognition algorithms have been found to exhibit biases and discrimination. These biases can arise due to imbalanced training data or inherent limitations in the algorithms themselves. To address this issue, researchers are actively working on developing fairer and more inclusive algorithms by improving data collection practices and implementing bias mitigation strategies.

What are the technical challenges impacting facial recognition accuracy?

Several technical challenges impact facial recognition accuracy. These include variations in lighting conditions, pose changes, occlusions (such as wearing glasses or scarves), low-resolution images, and aging effects. Researchers are continuously exploring ways to overcome these challenges through advancements in computer vision techniques and robust algorithm design.

What are the legal and ethical implications of using facial recognition technology?

The use of facial recognition technology raises concerns about privacy, surveillance, and potential misuse of personal information. It is crucial to establish clear regulations and guidelines around its deployment to safeguard individuals’ rights while ensuring public safety. Striking a balance between security needs and protecting civil liberties remains a complex challenge that requires careful consideration from policymakers.

What are some future trends in facial recognition technology?

In the future, we can expect further improvements in accuracy through advancements in machine learning algorithms and hardware capabilities. There will be increased focus on addressing ethical considerations such as transparency, consent mechanisms for data usage, and minimizing biases within the algorithms. The integration of facial recognition with other biometric modalities may also enhance overall system performance.