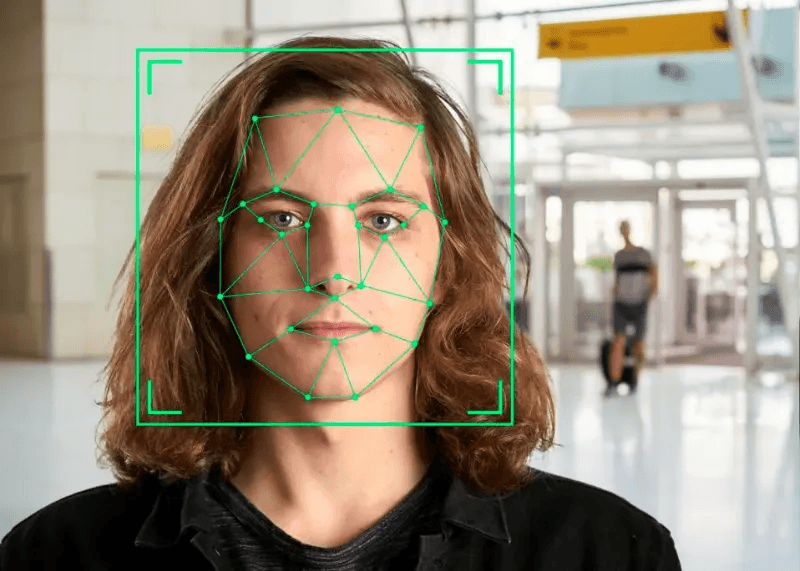

Facial recognition, a computer vision technology that analyzes face images, is now used across many industries. Its usefulness depends on accuracy and security, and this is where spoof resilience matters. Spoof resilience is the ability of a facial recognition system to distinguish real faces from fraudulent presentations such as photos, videos, or masks. It is usually achieved with machine learning models that learn discriminative features separating genuine faces from fake ones.

This article explores the approaches and strategies researchers have developed to make facial recognition more resilient to face spoofing attacks. These methods aim to separate fake faces from genuine ones, and they range from advanced feature extraction techniques to robust network architectures.

Join us as we look at how advances in spoof resilience, from identifying fake faces to recognizing genuine ones, are shaping reliable face recognition systems and their future applications.

Understanding Biometric Spoofing

Biometric spoofing is a technique used to deceive facial recognition systems by presenting false or manipulated biometric data. Attackers employ various spoofing techniques to exploit weaknesses in these systems, compromising their effectiveness and security. Researchers study the same techniques, using datasets of genuine and spoofed samples, to test how reliably a system detects and authenticates individuals. Understanding how face recognition systems work and how they are attacked is crucial for developing effective anti-spoofing measures and protecting against unauthorized access and fraud.

Spoofing Techniques

Spoofing techniques targeting facial recognition systems take several forms. Photo attacks involve presenting a printed or digital photo of an authorized user’s face to the system to fool it into granting access. Video attacks, also called replay attacks, use recorded video of the authorized user to simulate real-time presence. 3D mask attacks involve realistic masks that mimic the authorized user’s facial features, which attackers wear to bypass facial recognition. Researchers use datasets containing examples of each attack type to study them and develop detection methods.

Staying current with spoofing techniques is essential for researchers and developers working on facial recognition systems, because attackers continuously evolve their methods. Keeping up with emerging techniques helps researchers understand where systems are vulnerable and build more representative test datasets, and it helps developers strengthen their anti-spoofing solutions.

Face Spoofing Detection

Face spoofing detection plays a critical role in distinguishing genuine faces from spoofed ones. Advanced algorithms analyze facial cues such as texture, depth, motion, and liveness indicators to detect signs of spoofing attempts. They compare what they observe in captured images or videos against what they have learned from genuine faces, looking for discrepancies that indicate potential fraud.

By leveraging computer vision techniques and machine learning algorithms, face spoofing detection helps prevent unauthorized access and protects against fraudulent activities. It adds an extra layer of security by verifying the authenticity of faces presented for identification.

Importance of Anti-Spoofing

Effective anti-spoofing measures are vital for maintaining the integrity and reliability of facial recognition systems. Without robust anti-spoofing techniques in place, malicious actors can deceive the system and gain unauthorized access. This can lead to significant security breaches, compromising sensitive data and potentially causing financial losses or reputational damage.

Investing in robust anti-spoofing solutions is crucial for organizations that rely on facial recognition technology. These solutions employ advanced algorithms and techniques to detect and prevent spoofing attempts, ensuring accurate identification and protecting against fraudulent activities.

Types of Facial Recognition Spoofing

Facial recognition technology has gained popularity in applications such as unlocking smartphones and improving security systems. However, it is crucial to understand the different types of spoofing attacks that can compromise its accuracy and reliability. By understanding these attack methods, we can develop effective countermeasures.

Photo Attacks

One common type of spoofing attack is a photo attack, in which an attacker presents a printed or on-screen photograph of an authorized user to the camera. To combat photo attacks, anti-spoofing methods employ texture analysis, motion analysis, and passive detection techniques.

Texture analysis examines the fine details and patterns present in a face, which helps distinguish real faces from static images. Motion analysis looks for the subtle movements or changes in appearance that indicate a live subject. Passive detection techniques aim to identify anomalies in image properties or metadata that indicate a photo attack.

Developing face spoof detection algorithms is essential for improving the overall security and reliability of facial recognition systems against photo attacks.

Video Attacks

Video attacks pose another significant threat to facial recognition systems. Attackers use pre-recorded videos or deepfake technology to trick the system into recognizing them as someone else. Detecting these attacks requires analyzing subtle visual cues and temporal inconsistencies within the video footage.

To enhance resilience against video attacks, combining motion analysis with liveness detection proves effective. Motion analysis helps identify unnatural movements or inconsistent facial expressions compared with live subjects. Liveness detection adds measures such as asking users to perform specific actions or challenges during authentication.

By using neural network models alongside motion analysis and liveness detection, we can strengthen our defenses against video-based spoofing attempts.

3D Mask Attacks

Attackers also mount 3D mask attacks, using realistic masks or 3D models to impersonate someone’s face. Detecting them requires techniques such as reflectance analysis and texture analysis.

Reflectance analysis is a method used to detect spoofing by analyzing how light interacts with the surface of a face. This analysis can identify irregularities that may indicate the presence of a mask. Texture analysis focuses on identifying discrepancies in fine details and patterns that distinguish real faces from masks. Integrating active detection methods, such as asking users to perform specific actions like blinking or smiling during authentication, can further enhance resilience against 3D mask attacks.

Preventing Biometric Spoofing

To enhance the resilience of facial recognition systems against spoofing attacks, several strategies can be implemented. Two effective approaches are multi-factor authentication and continuous authentication.

Multi-Factor Authentication

One way to prevent biometric spoofing is to implement multi-factor authentication alongside facial recognition. This combines facial recognition with other authentication factors, such as fingerprints or voice recognition. Requiring multiple factors significantly improves overall system resilience.

For example, an attacker who creates a convincing spoof of someone’s face would still need to defeat the additional factors, such as that person’s fingerprint or voice, to gain access. This reduces the risk of unauthorized access even if one factor is compromised.

Multi-factor authentication adds an extra layer of security by increasing the complexity required for successful authentication. It provides a robust defense against biometric spoofing attempts and helps ensure that only legitimate users are granted access.
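The decision logic behind this kind of multi-factor check can be sketched in a few lines. The example below is only an illustration: the `FactorResult` class, the score scale, and the thresholds are assumptions made for this sketch, not part of any particular product or library.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class FactorResult:
    """Outcome of one authentication factor (hypothetical structure)."""
    name: str
    score: float      # match score in [0, 1], higher means a better match
    threshold: float  # minimum score for this factor to pass (assumed value)

    @property
    def passed(self) -> bool:
        return self.score >= self.threshold


def authenticate(factors: Sequence[FactorResult], required: int) -> bool:
    """Grant access only if at least `required` factors pass."""
    return sum(f.passed for f in factors) >= required


# A convincing face spoof passes the face factor but fails the fingerprint factor.
spoof_attempt = [
    FactorResult("face", score=0.93, threshold=0.80),
    FactorResult("fingerprint", score=0.12, threshold=0.70),
]
genuine_attempt = [
    FactorResult("face", score=0.91, threshold=0.80),
    FactorResult("fingerprint", score=0.88, threshold=0.70),
]

print(authenticate(spoof_attempt, required=2))    # False
print(authenticate(genuine_attempt, required=2))  # True
```

Requiring every factor to pass is the strictest policy; a real deployment might weigh factors differently or fall back to another factor when a sensor is unavailable.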

Continuous Authentication

Another effective approach to preventing biometric spoofing is continuous authentication. Unlike traditional methods, where identity verification occurs only at the initial login stage, continuous authentication verifies the user’s identity throughout the session.

Continuous authentication monitors various biometric and behavioral factors in real-time to detect any signs of spoofing attempts. These factors can include facial movements, eye blinks, typing patterns, mouse movements, and more. By analyzing these ongoing cues, the system can identify anomalies that may indicate a potential spoofing attack.

By employing continuous authentication, organizations can ensure ongoing protection against unauthorized access and improve overall system security. This approach adds an additional layer of defense beyond the initial login stage and helps mitigate risks associated with biometric spoofing.
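One simple way to picture continuous authentication is a rolling average over recent confidence scores. The sketch below assumes some upstream component already produces a score between 0 and 1 for each check (for example from facial movement or typing patterns); the class name, window size, and threshold are hypothetical choices for illustration.

```python
from collections import deque


class ContinuousAuthenticator:
    """Keeps a rolling window of confidence scores for the current session."""

    def __init__(self, window: int = 5, min_mean: float = 0.6):
        self.scores = deque(maxlen=window)  # oldest scores drop out automatically
        self.min_mean = min_mean            # assumed trust threshold

    def update(self, score: float) -> bool:
        """Record one score in [0, 1]; return True while the session stays trusted."""
        self.scores.append(score)
        return sum(self.scores) / len(self.scores) >= self.min_mean


auth = ContinuousAuthenticator(window=3, min_mean=0.6)
# The genuine user is present for three checks, then someone else takes over.
session = [0.9, 0.8, 0.85, 0.2, 0.1, 0.15]
decisions = [auth.update(s) for s in session]
print(decisions)  # [True, True, True, True, False, False]
```

The window smooths out a single bad reading, which is why the first low score does not end the session on its own; a shorter window reacts faster but is more sensitive to noise.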

Implementing both multi-factor authentication and continuous authentication provides a comprehensive defense against biometric spoofing attacks. These strategies work hand in hand to create a robust and resilient facial recognition system that is capable of detecting and preventing fraudulent attempts effectively.

VGG-Face Architecture for Spoof Detection

Neural network models play a crucial role in developing accurate and robust anti-spoofing algorithms. These models are trained on large-scale datasets to improve detection performance. One such architecture that has shown promising results in spoof resilience is the VGG-Face architecture.

The VGG-Face architecture is a deep convolutional neural network (CNN) model that has been widely used for face recognition tasks. It consists of 16 weight layers and was originally designed for face identification. However, researchers have also leveraged its capabilities to detect spoofed faces.

By training the VGG-Face model on a diverse dataset containing both genuine and spoofed images, it can learn to distinguish between real and fake faces based on various features such as texture, shape, and color. The model extracts these features through multiple layers of convolutions and pooling operations, enabling it to capture intricate details that differentiate live faces from spoofed ones.

Liveness detection integration is another important aspect of enhancing spoof resilience in facial recognition systems. This involves incorporating mechanisms to determine if a presented face is live or fake. By integrating liveness detection techniques into facial recognition systems, their resistance against spoofing attacks can be significantly improved.

There are several liveness detection techniques that can be utilized to identify spoofed faces. For example, eye blinking analysis can be employed to check if the presented face exhibits natural blinking patterns. Spoofed faces often lack this characteristic behavior, making them distinguishable from genuine ones.
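The blink check described above is often implemented with the eye aspect ratio (EAR), a ratio of eyelid opening to eye width computed from six eye landmarks. The article does not name a specific method, so treat this as one possible sketch: the landmark ordering, the 0.2 threshold, and the minimum run length are assumptions, and the landmarks themselves would come from a separate face landmark detector.

```python
import math


def eye_aspect_ratio(eye):
    """eye: six (x, y) landmarks p1..p6 (outer corner, two upper-lid points,
    inner corner, two lower-lid points). Returns a small value when closed."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series, closed_below=0.2, min_frames=2):
    """Count runs of at least `min_frames` closed-eye frames that end with the
    eye reopening. Both parameters are assumed values for this sketch."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < closed_below:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    return blinks


open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
print(round(eye_aspect_ratio(open_eye), 3))  # 0.667

live_series = [0.31, 0.30, 0.12, 0.10, 0.29, 0.30, 0.11, 0.09, 0.08, 0.32]
photo_series = [0.30] * 10  # a printed photo never blinks
print(count_blinks(live_series))   # 2
print(count_blinks(photo_series))  # 0
```

A session with zero blinks over a reasonable observation period is a hint, not proof, of a spoof, so this signal is usually combined with others.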

Texture consistency checks are another effective technique for liveness detection. By analyzing the texture patterns across different regions of the face, it becomes possible to detect inconsistencies that may indicate a fake image or video being presented.

Integrating these liveness detection techniques with facial recognition systems enhances their ability to accurately identify and reject spoofed attempts. By combining the power of neural network models like VGG-Face with liveness detection mechanisms, the overall spoof resilience of facial recognition systems can be greatly improved.

Continual research and development of neural network models, along with advancements in liveness detection techniques, contribute to the ongoing progress in spoof resilience. As technology evolves and attackers become more sophisticated, it is crucial to stay at the forefront of these developments to ensure the security and reliability of facial recognition systems.

Exploring Public Repositories

Public repositories play a crucial role in the development and advancement of spoof resilience in facial recognition technology. These repositories provide a wealth of resources, including benchmark datasets and open-source tools, that aid researchers and developers in their efforts to combat spoofing attacks.

Benchmark Datasets

Benchmark datasets serve as standardized evaluation criteria for testing and comparing anti-spoofing algorithms. These datasets encompass a wide range of spoofing attacks, allowing researchers to assess the performance of their solutions comprehensively. By evaluating their algorithms against these datasets, researchers can gain insights into the effectiveness of different anti-spoofing techniques.
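When algorithms are compared on such datasets, results are commonly reported with the error rates defined in ISO/IEC 30107-3: APCER (attack presentations wrongly accepted as genuine) and BPCER (genuine presentations wrongly rejected). The sketch below computes both from labeled predictions; the function name and the input format are assumptions for illustration, and the averaged ACER figure is included only because many benchmark reports quote it.

```python
def pad_error_rates(samples):
    """samples: iterable of (true_label, predicted_live) pairs, where true_label
    is 'bona_fide' or the name of an attack type and predicted_live is a bool."""
    attack_total, attack_accepted = {}, {}
    bona_total = 0
    bona_rejected = 0
    for label, predicted_live in samples:
        if label == "bona_fide":
            bona_total += 1
            bona_rejected += int(not predicted_live)
        else:
            attack_total[label] = attack_total.get(label, 0) + 1
            attack_accepted[label] = attack_accepted.get(label, 0) + int(predicted_live)

    apcer_by_type = {t: attack_accepted[t] / n for t, n in attack_total.items()}
    apcer = max(apcer_by_type.values())  # report the worst-performing attack type
    bpcer = bona_rejected / bona_total
    return {
        "apcer_by_type": apcer_by_type,
        "apcer": apcer,
        "bpcer": bpcer,
        "acer": (apcer + bpcer) / 2,
    }


# Made-up predictions purely to exercise the function.
samples = (
    [("bona_fide", True)] * 9 + [("bona_fide", False)]
    + [("print", False)] * 3 + [("print", True)]
    + [("replay", False)] * 4 + [("replay", True)]
)
rates = pad_error_rates(samples)
print(rates["apcer_by_type"])  # {'print': 0.25, 'replay': 0.2}
print(rates["bpcer"])          # 0.1
```

Reporting APCER per attack type makes it visible when a detector handles printed photos well but struggles with replayed video, which a single pooled number would hide.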

Continuously updating and expanding benchmark datasets is vital for driving advancements in anti-spoofing techniques. As new types of spoofing attacks emerge, it becomes essential to incorporate them into the benchmark datasets. This ensures that researchers have access to realistic scenarios and challenges that reflect real-world conditions. The inclusion of diverse attack modalities helps improve the robustness and reliability of anti-spoofing solutions.

Open-Source Tools

Open-source tools and libraries are invaluable resources for developing and implementing effective anti-spoofing measures. These tools provide access to pre-trained models, data preprocessing techniques, and evaluation metrics that simplify the research process. Leveraging these open-source resources accelerates progress in the field by reducing duplication of effort and encouraging collaboration among researchers.

By utilizing open-source tools, developers can build upon existing work instead of starting from scratch. This not only saves time but also promotes innovation by enabling researchers to focus on improving existing algorithms or exploring new approaches. Open-source tools foster transparency by allowing others to scrutinize and validate the effectiveness of proposed methods.

The availability of pre-trained models through open-source repositories significantly reduces the barrier to entry for researchers who may not have access to large-scale training data or computational resources. It enables them to experiment with state-of-the-art models without investing significant time and effort in training their own models from scratch.

Notable Detection Techniques

Texture Analysis

Texture analysis is a crucial technique in the field of facial recognition to detect spoofing attempts. By extracting unique patterns and features from facial images, this method aims to identify subtle differences that distinguish real faces from fake ones. One way texture analysis achieves this is by analyzing texture variations caused by different materials or printing techniques used in spoofing attacks. For instance, a printed photograph or a mask may exhibit distinct textures that can be detected through careful analysis. By leveraging advanced algorithms, texture analysis contributes to improving the accuracy and reliability of anti-spoofing systems.
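The article does not name a specific texture descriptor, but local binary patterns (LBP) are a common choice and make the idea concrete. The following is a minimal pure-Python sketch on a tiny grayscale image given as a list of rows; real systems would compute the histogram over face crops with an image library and feed it to a trained classifier, which is not shown here.

```python
# Offsets of the 8 neighbours, clockwise from the top-left pixel.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img, r, c):
    """8-bit code: one bit per neighbour that is at least as bright as the centre."""
    center = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(NEIGHBOURS):
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(img):
    """Normalised 256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[lbp_code(img, r, c)] += 1
    total = sum(hist)
    return [h / total for h in hist]


flat = [[10] * 4 for _ in range(4)]  # uniform patch
checker = [[10 if (r + c) % 2 == 0 else 20 for c in range(4)] for r in range(4)]

flat_hist = lbp_histogram(flat)
checker_hist = lbp_histogram(checker)
print(flat_hist[255])                       # 1.0
print(checker_hist[255], checker_hist[85])  # 0.5 0.5
```

The two patches produce clearly different histograms even though both are simple, which is the property a classifier exploits when separating skin texture from paper or screen texture.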

Motion Analysis

Another effective detection technique is motion analysis, which involves examining facial movements to differentiate between genuine faces and spoofed faces. This method focuses on detecting unnatural or inconsistent motion patterns that may indicate video or replay attacks. For example, when an individual’s face remains static without any natural movement such as blinking or slight head movements, it could be a sign of a spoofing attempt. Combining motion analysis with other detection methods enhances the overall resilience of facial recognition systems against various spoofing techniques.
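The "face remains static" case mentioned above can be illustrated with plain frame differencing. This is a deliberately simple sketch: frames are small grayscale grids given as lists of rows, and the `min_motion` threshold is an assumed value. It would only flag completely static presentations such as a held-up photo; a replayed video contains motion and needs the other checks discussed in this article.

```python
def mean_abs_diff(frame_a, frame_b):
    """Average absolute pixel difference between two equally sized frames."""
    total = 0
    count = 0
    for row_a, row_b in zip(frame_a, frame_b):
        for a, b in zip(row_a, row_b):
            total += abs(a - b)
            count += 1
    return total / count


def looks_static(frames, min_motion=1.0):
    """True if no pair of consecutive frames differs by at least `min_motion`."""
    diffs = [mean_abs_diff(a, b) for a, b in zip(frames, frames[1:])]
    return max(diffs) < min_motion


photo_frames = [[[100, 100], [100, 100]] for _ in range(4)]
live_frames = [
    [[100, 100], [100, 100]],
    [[100, 104], [98, 100]],   # small natural movement between frames
    [[101, 100], [100, 103]],
]

print(looks_static(photo_frames))  # True
print(looks_static(live_frames))   # False
```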

Reflectance Analysis

Reflectance analysis plays a significant role in identifying signs of spoofing in facial recognition systems. This technique examines how light interacts with facial surfaces to differentiate between real skin and materials used in mask attacks. By analyzing the reflectance properties of different surfaces, it becomes possible to detect discrepancies that are indicative of attempted fraud. For instance, masks often have different reflectance properties compared to human skin due to variations in material composition and surface characteristics. Incorporating reflectance analysis into anti-spoofing measures helps develop more robust countermeasures against 3D mask attacks.

Together, these detection techniques make facial recognition systems more resilient by supplying discriminative features for telling genuine faces from fake ones: texture analysis captures material and printing artifacts, motion analysis catches unnatural or missing movement in video and replay attacks, and reflectance analysis exposes mask materials that interact with light differently from skin.

Liveness Detection in Biometrics

Liveness detection plays a crucial role in ensuring the resilience of facial recognition systems. By distinguishing between real faces and spoofing attempts, it adds an extra layer of security to biometric authentication processes. There are two main approaches to liveness detection: passive detection and active detection.

Passive Detection

Passive detection techniques analyze static images or videos without requiring user cooperation. These methods are particularly effective in detecting photo attacks or deepfake videos during the enrollment process. By examining various visual cues, such as texture, color, and depth, passive detection algorithms can identify signs of tampering or manipulation.

For example, when analyzing a static image, these algorithms can detect inconsistencies in lighting conditions or unnatural reflections on the face that may indicate the presence of a printed photograph instead of a live person. Similarly, during video analysis, they can identify discrepancies in facial movements or lack of natural eye blinking patterns that suggest the use of deepfake technology.

The advantage of passive detection is that it adds an extra layer of security without inconveniencing users during authentication. It operates in the background and requires no extra action from legitimate users.

Active Detection

Active detection involves engaging users in specific actions to verify their liveness during the authentication process. By asking users to perform certain tasks like blinking their eyes, smiling, or following instructions displayed on-screen, active detection methods can effectively detect spoofing attempts in real-time.

These actions help capture dynamic facial features and movements that are difficult for attackers to replicate accurately. For instance, blinking requires rapid movement of eyelids and changes in light reflection patterns on the eyes—a characteristic challenging for static images or deepfake videos to imitate convincingly.
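An active check is essentially a challenge-response protocol: the system picks an action at random so that a pre-recorded video is unlikely to contain it, then verifies that the right action was observed within a time limit. The sketch below covers only that bookkeeping. The challenge list, the five-second limit, and the function names are assumptions, and recognizing the action from video is left to a separate component.

```python
import secrets
import time

# Hypothetical set of prompts; blink and smile come from the text above.
CHALLENGES = ("blink", "smile", "turn_left", "turn_right")


def issue_challenge(now=None):
    """Pick an unpredictable action and remember when it was issued."""
    now = time.monotonic() if now is None else now
    return {"action": secrets.choice(CHALLENGES), "issued_at": now}


def verify_response(challenge, observed_action, responded_at, max_seconds=5.0):
    """Accept only the requested action, performed within the time limit."""
    elapsed = responded_at - challenge["issued_at"]
    in_time = 0 <= elapsed <= max_seconds
    return in_time and observed_action == challenge["action"]


challenge = issue_challenge(now=0.0)
wrong_action = next(a for a in CHALLENGES if a != challenge["action"])

print(verify_response(challenge, challenge["action"], responded_at=2.0))  # True
print(verify_response(challenge, wrong_action, responded_at=2.0))         # False
print(verify_response(challenge, challenge["action"], responded_at=9.0))  # False
```

Using `secrets.choice` rather than a predictable sequence matters here, because an attacker who can guess the next prompt can prepare matching footage in advance.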

Integrating active detection methods significantly improves the resilience of facial recognition systems by increasing the level of certainty regarding user authenticity. It provides an additional layer of protection against sophisticated spoofing techniques and enhances overall system security.

Digital Identity Verification with Biometrics

Facial recognition technology has become increasingly prevalent in various applications, from unlocking smartphones to verifying identities at border control. One of the key aspects of facial recognition is ensuring the authenticity of the presented face and protecting against spoofing attacks.

Verification Process

The verification process is a crucial step in digital identity verification using biometrics. It involves comparing the presented face with the enrolled template to determine if they match. To ensure accuracy and reliability, anti-spoofing measures are applied during this process. These measures are designed to detect and prevent fraudulent attempts such as presenting a photograph or wearing a mask.
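The comparison step can be sketched as a similarity check between two embedding vectors, gated by an anti-spoofing score. In this illustration the embeddings are plain lists of numbers standing in for the output of a face recognition model, and both thresholds are assumed values; the article does not specify how matching is scored.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def verify(probe, template, liveness_score,
           match_threshold=0.8, liveness_threshold=0.5):
    """Reject suspected spoofs first, then compare against the enrolled template."""
    if liveness_score < liveness_threshold:
        return False
    return cosine_similarity(probe, template) >= match_threshold


enrolled = [0.6, 0.8, 0.0]
same_person = [0.58, 0.81, 0.05]
other_person = [0.0, 0.1, 0.99]

print(verify(same_person, enrolled, liveness_score=0.9))   # True
print(verify(same_person, enrolled, liveness_score=0.1))   # False (fails liveness)
print(verify(other_person, enrolled, liveness_score=0.9))  # False (no match)
```

Running the liveness gate before the match means a high-quality photo of the right person is rejected even though its embedding would match the template.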

By analyzing various facial features, such as eye movement, skin texture, and micro-expressions, facial recognition systems can differentiate between real faces and spoofed ones. Advanced algorithms enable these systems to identify inconsistencies or unnatural patterns that may indicate a spoofing attempt.

Implementing robust anti-spoofing techniques enhances the resilience of facial recognition systems by reducing false acceptance rates and increasing their ability to detect spoofing attacks accurately. A reliable verification process is essential for maintaining trust in biometric authentication methods.

Security Enhancements

Continuous research and development efforts focus on enhancing the security of facial recognition systems against evolving spoofing techniques. New algorithms, techniques, and hardware advancements contribute significantly to improving system resilience.

Advanced algorithms utilize machine learning models trained on extensive datasets containing both genuine samples and diverse spoofing attacks. By continuously learning from new data, these algorithms can adapt to emerging threats effectively.

Techniques such as 3D depth mapping provide an additional layer of security by capturing depth information about the face being verified. This helps distinguish between a live person and a printed photo or video playback.
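To see why depth helps, consider that a printed photo or a screen is essentially flat, while a real face has relief (the nose is closer to the camera than the cheeks). The sketch below measures that relief as the spread of depth values in a face region. The depth maps are made-up numbers in millimetres, the 5 mm threshold is an assumption, and a photo held at an angle would need a plane fit rather than this simple spread measure.

```python
import statistics


def depth_relief_mm(depth_map):
    """Population standard deviation of all depth values in the region."""
    values = [d for row in depth_map for d in row]
    return statistics.pstdev(values)


def is_probably_flat(depth_map, min_relief_mm=5.0):
    """True when the surface shows less relief than a face would (assumed limit)."""
    return depth_relief_mm(depth_map) < min_relief_mm


# Illustrative depth readings: a photo facing the camera vs. a real face.
photo_depth = [[400, 400, 400], [400, 401, 400], [400, 400, 400]]
face_depth = [[420, 410, 420], [410, 380, 410], [420, 415, 420]]

print(is_probably_flat(photo_depth))  # True
print(is_probably_flat(face_depth))   # False
```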

Hardware advancements also play a crucial role in enhancing system security. For example, thermal infrared cameras can capture the temperature variations that occur naturally in live faces, which helps detect spoofing attempts using masks or other artificial materials.

The ongoing efforts to improve system resilience ensure that facial recognition systems can adapt to new threats and maintain their effectiveness in verifying digital identities.

Challenges of Biometric Systems

System Reliability

Ensuring the reliability of biometric systems is crucial for their widespread adoption, especially in the case of facial recognition technology. Users need to have confidence that the system will accurately identify and authenticate individuals while minimizing the risk of unauthorized access.

To address this challenge, robust anti-spoofing measures are implemented in facial recognition systems. These measures aim to detect and prevent spoofing attempts, where an imposter tries to deceive the system by presenting a fake or manipulated biometric sample. By incorporating advanced algorithms and techniques, such as liveness detection, texture analysis, or 3D face modeling, these anti-spoofing measures enhance the resilience of facial recognition systems against various types of attacks.

Furthermore, continual testing, evaluation, and improvement play a significant role in ensuring system reliability. Regular assessments help identify vulnerabilities and weaknesses in the system’s anti-spoofing capabilities. By analyzing real-world scenarios and adapting to emerging threats, developers can strengthen the overall effectiveness of facial recognition technology.

Privacy Concerns

While facial recognition technology offers numerous benefits, it also raises valid privacy concerns. The potential for misuse or unauthorized surveillance has sparked debates about striking a balance between security and privacy when implementing biometric systems.

To address these concerns, it is essential to incorporate privacy-enhancing features into facial recognition systems. One approach is data anonymization, where personally identifiable information (PII) is removed or encrypted before processing or storing biometric data. This helps protect individuals’ identities while still allowing for accurate identification within authorized contexts.
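As a small illustration of removing identifying fields before storage, the sketch below drops direct identifiers from a record and replaces the user ID with a keyed hash. Strictly speaking this is pseudonymization rather than full anonymization, since whoever holds the key can recompute the mapping. The record layout, field names, and key handling are all assumptions made for this example.

```python
import hashlib
import hmac


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Keyed SHA-256 hash of the identifier; stable for a given key."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_pii(record: dict, secret_key: bytes, pii_fields=("name", "email")) -> dict:
    """Return a copy without direct identifiers, keyed by a pseudonym instead."""
    cleaned = {k: v for k, v in record.items()
               if k not in pii_fields and k != "user_id"}
    cleaned["subject"] = pseudonymize(record["user_id"], secret_key)
    return cleaned


# Hypothetical enrollment record; in practice the key would come from a secrets store.
key = b"example-key-do-not-use-in-production"
record = {
    "user_id": "u-1042",
    "name": "Alex Example",
    "email": "alex@example.com",
    "template_ref": "templates/0007.bin",
}
stored = strip_pii(record, key)
print(sorted(stored))  # ['subject', 'template_ref']
```

The biometric template itself is still sensitive data and would need its own protection, such as encryption at rest.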

Implementing user consent mechanisms provides individuals with control over how their biometric data is used. By obtaining explicit consent before capturing or utilizing facial images for identification purposes, organizations can respect individuals’ privacy rights and build trust with users.

Furthermore, transparency plays a vital role in addressing privacy concerns associated with facial recognition technology. Organizations should provide clear information about how biometric data is collected, stored, and used. This transparency allows individuals to make informed decisions about whether they want to participate in such systems.

Facial Recognition and Privacy Issues

Facial recognition technology has become increasingly prevalent in our society, offering convenience and efficiency in various applications. However, it also raises significant concerns regarding privacy and ethical implications.

Data Protection

Protecting sensitive user data is of utmost importance in facial recognition. These systems rely on capturing and analyzing facial images to identify individuals accurately. To ensure the privacy and security of this information, robust measures must be implemented.

One essential aspect is the use of strong encryption protocols during data transmission and storage. By encrypting the facial images and other personal information, unauthorized access can be prevented, safeguarding against potential breaches or misuse.

Secure storage practices play a crucial role in protecting user data. Facial recognition systems should employ advanced security measures such as firewalls, intrusion detection systems, and regular vulnerability assessments to maintain the integrity of stored information.

Furthermore, compliance with data protection regulations is vital for responsible use of facial recognition technology. Adhering to established guidelines ensures that organizations handle personal information ethically and responsibly. Such regulations often require obtaining explicit consent from individuals before using their facial images for identification purposes.

Ethical Implications

The widespread adoption of facial recognition technology has sparked discussions about its ethical implications. Several key concerns revolve around privacy, bias, and consent.

Firstly, there are valid concerns about privacy infringement due to the extensive collection of biometric data through facial recognition systems. People may feel uncomfortable knowing that their faces are being captured without their knowledge or explicit consent. Transparent communication about how these systems operate and what happens to the collected data helps address these concerns.

Secondly, algorithmic biases pose a significant challenge in facial recognition technology. If not appropriately trained or calibrated with diverse datasets representing different populations adequately, these systems can exhibit biases that disproportionately affect certain groups. To ensure fairness and prevent discrimination, it is crucial to address these biases by continuously monitoring and improving the algorithms.
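Monitoring for bias usually starts with breaking error rates down by group. The sketch below computes the false rejection rate for genuine attempts per group and the gap between the best and worst group. The group labels and results are made-up placeholders, and which groups to track and what gap is acceptable are policy decisions outside the scope of this example.

```python
from collections import defaultdict


def false_rejection_rate_by_group(results):
    """results: iterable of (group, accepted) pairs for genuine attempts only."""
    totals = defaultdict(int)
    rejected = defaultdict(int)
    for group, accepted in results:
        totals[group] += 1
        rejected[group] += int(not accepted)
    return {g: rejected[g] / totals[g] for g in totals}


# Placeholder outcomes: 10 genuine attempts per group.
results = (
    [("group_a", True)] * 9 + [("group_a", False)]
    + [("group_b", True)] * 7 + [("group_b", False)] * 3
)
frr = false_rejection_rate_by_group(results)
gap = max(frr.values()) - min(frr.values())
print(frr)            # {'group_a': 0.1, 'group_b': 0.3}
print(round(gap, 2))  # 0.2
```

A persistent gap like this one suggests the training data or thresholds serve one group worse than another, which is the kind of signal continuous monitoring is meant to surface.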

Lastly, obtaining informed consent is essential in deploying facial recognition systems ethically. Individuals should have a clear understanding of how their facial images will be used and for what purposes. This requires organizations to provide detailed information about data usage, storage practices, and potential risks associated with the technology.

Conclusion

So there you have it! We’ve explored the fascinating world of facial recognition spoofing and its implications for biometric systems. From understanding the different types of spoofing techniques to discussing the challenges faced by these systems, we’ve gained valuable insights into the importance of ensuring resilience in facial recognition technology.

As technology continues to advance, it’s crucial that we stay one step ahead of potential threats. By implementing robust spoof detection techniques like the VGG-Face architecture and liveness detection methods, we can enhance the security and reliability of biometric systems. Furthermore, public repositories provide a wealth of resources for researchers and developers to collaborate and improve these detection techniques even further.

In conclusion, the fight against facial recognition spoofing requires a collective effort from researchers, developers, and policymakers.

Frequently Asked Questions

Can facial recognition systems be spoofed?

Yes, facial recognition systems can be spoofed. Biometric spoofing refers to the act of tricking a biometric system, such as facial recognition, by using artificial means to imitate someone else’s biometric data.

What are the types of facial recognition spoofing?

There are various types of facial recognition spoofing techniques, including photo attacks (using a printed or digital photo), video attacks (using a recorded video), 3D mask attacks (using a lifelike mask), and makeup attacks (altering one’s appearance with cosmetics).

How can we prevent biometric spoofing?

To prevent biometric spoofing in facial recognition systems, robust anti-spoofing measures should be implemented. These may include liveness detection techniques that verify the presence of live human features like eye movement or blink analysis, texture analysis, infrared imaging, depth sensing, and multi-modal authentication.

What is VGG-Face architecture for spoof detection?

VGG-Face is a deep convolutional neural network originally designed for face recognition. For spoof detection, it is trained or fine-tuned on genuine and spoofed images so that the high-level features it extracts from faces can be used to classify them as genuine or fake.

What are the challenges of biometric systems?

Biometric systems face several challenges including accuracy issues due to variations in lighting conditions and pose changes, vulnerability to presentation attacks or spoofs, privacy concerns regarding the storage and use of personal biometric data, and potential biases in algorithmic decision-making.